RavenDB supports a dedicated batch processing mode, using the notion of subscriptions. A subscription is simply a way to register a query with the database and have the database send the subscriber the documents that match the query.

The previous sentence is taken directly from the Inside RavenDB book, and it is a good intro for the topic. A subscription is a way to process documents that match a query. A good example is running various business processes in response to data changes. Let’s assume that we have a bank and a new customer has registered. We need to run plenty of such processes (Know Your Customer, Anti-Money Laundering, credit score, in-house estimation, credit limits and authorization, etc.).

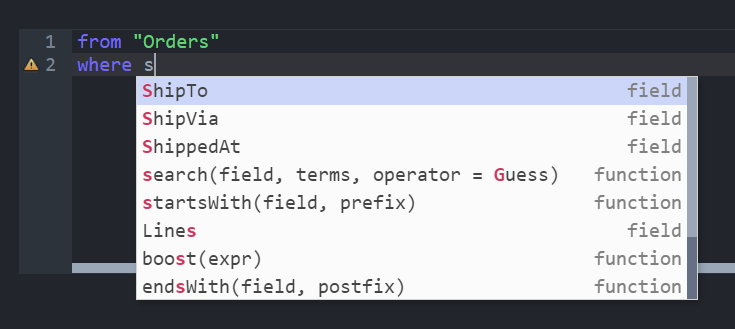

A typical subscription query would then be:

from Customers where Onboarded = false

And then we can register to that subscription. At this point, the database will start sending us all the customers that haven’t been onboarded yet. This is a persistent query, so restarts and failures are handled properly. The key aspect is that RavenDB pushes the matching documents to the subscription worker. RavenDB handles batching of the results, ensures that we can process humongous amounts of data safely and easily, and in general removes a lot of the hassle from backend processing.

Up until RavenDB 5.3, however, a subscription was defined to be a singleton. In other words, at any given point, only a single subscription worker could be running. That is enforced by the server and helps make it much easier to reason about processing documents. One comment that we got is that this is great if the processing that we are doing is internal, but if there is a need to make a remote call to a potentially slow service, that can be an issue.

For example, consider the following worker code:
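A minimal sketch of such a worker, written in Python as a simulation rather than against the RavenDB client API. The names `Customer`, `check_credit_score` and `run_serial_worker` are hypothetical, and the remote call is replaced by a simulated clock so that the effect of one slow customer is easy to see:

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class Customer:
    id: str
    credit_check_seconds: float  # simulated latency of the remote call (assumption)


def check_credit_score(customer: Customer) -> float:
    """Stand-in for a remote credit score service; returns the simulated time it took."""
    return customer.credit_check_seconds


def run_serial_worker(batches: List[List[Customer]]) -> Dict[str, float]:
    """Process batches one after another and record when each customer finishes."""
    clock = 0.0
    finished_at: Dict[str, float] = {}
    for batch in batches:
        for customer in batch:
            clock += check_credit_score(customer)  # everything waits on this call
            finished_at[customer.id] = clock
    return finished_at


batches = [
    [Customer("customers/1", 0.1), Customer("customers/2", 30.0)],  # customers/2 is slow
    [Customer("customers/3", 0.1), Customer("customers/4", 0.1)],
]
finished_at = run_serial_worker(batches)
for customer_id, seconds in finished_at.items():
    print(f"{customer_id} finished after {seconds:.1f}s")
```

In this model, `customers/3` and `customers/4` are quick to process on their own, yet they cannot finish until the slow call for `customers/2` has returned.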

What happens when CheckCreditScore() is slow? We are halting processing for everything. In some cases, only particular customers are slow, and we absolutely want to process customers in parallel. However, RavenDB did not allow that.

In RavenDB 5.3, we are bringing concurrent subscriptions to the table. When you create the subscription worker, you can define it with a Concurrent mode, like so:

When you have done that, RavenDB will allow multiple concurrent workers to run at the same time, processing batches in parallel. That means that a single slow customer will not halt your entire processing pipeline.
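To get a feel for the difference, here is a small Python simulation (not RavenDB code). It assumes a hypothetical list of per-batch processing times and a simple rule that whichever worker is free first takes the next batch. The function `batch_completion_times` is made up for this illustration:

```python
import heapq
from typing import List


def batch_completion_times(batch_seconds: List[float], workers: int) -> List[float]:
    """Simulate `workers` workers; each free worker takes the next pending batch.

    Returns the simulated time at which each batch finishes, in batch order.
    """
    free_at = [0.0] * workers  # time at which each worker becomes free
    heapq.heapify(free_at)
    completed = []
    for seconds in batch_seconds:
        start = heapq.heappop(free_at)  # the earliest available worker takes the batch
        end = start + seconds
        completed.append(end)
        heapq.heappush(free_at, end)
    return completed


batch_seconds = [30.0, 1.0, 1.0, 1.0, 1.0]  # the first batch contains a slow customer

serial = batch_completion_times(batch_seconds, workers=1)
concurrent = batch_completion_times(batch_seconds, workers=2)

print(serial)      # [30.0, 31.0, 32.0, 33.0, 34.0]
print(concurrent)  # [30.0, 1.0, 2.0, 3.0, 4.0]
```

With a single worker, every batch queues up behind the slow one. With two workers in this model, the second worker drains the remaining batches while the first is still waiting on the slow customer.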

In general, I would like you to think about this flag as just removing a limitation. Previously we blocked you from an operation, and now you can run freely. However…

We didn’t decide to limit your capabilities just because we like doing that. One of the key aspects of subscriptions is that they offer reliable processing of documents. If an exception is thrown while processing a batch, RavenDB will resend the batch to the worker until processing is successful. If we handed a batch of documents to a worker, and that worker crashed without letting us know, we need to make sure that the next client to connect will start processing from the last acknowledged batch.
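The resend-until-acknowledged idea can be sketched in a few lines of Python. This is a toy model, not RavenDB’s implementation: `deliver_until_acknowledged` and `flaky_worker` are hypothetical names, and the `max_attempts` cap is an assumption added only so that the sketch always terminates.

```python
from typing import Callable, List


def deliver_until_acknowledged(
    batch: List[str],
    process: Callable[[List[str]], None],
    max_attempts: int = 10,  # assumption: cap added so the sketch cannot loop forever
) -> int:
    """Resend the same batch until `process` completes without raising.

    Returns the number of deliveries that were needed.
    """
    for attempt in range(1, max_attempts + 1):
        try:
            process(batch)
            return attempt  # the batch is acknowledged, we can move on
        except Exception:
            continue  # not acknowledged, so the very same batch is sent again
    raise RuntimeError("batch was never acknowledged")


received = []  # every delivery the worker saw


def flaky_worker(batch: List[str]) -> None:
    """Hypothetical worker that fails on its first two deliveries."""
    received.append(list(batch))
    if len(received) < 3:
        raise ConnectionError("remote service unavailable")


attempts = deliver_until_acknowledged(["customers/1", "customers/2"], flaky_worker)
print(attempts, received)
```

The worker sees the same batch on every delivery, and nothing after that batch is sent until it succeeds.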

It turns out that adding concurrency and the ability for workers to work completely independently of one another makes such promises a lot harder to implement.

There is also another aspect that we have to consider. When we have just a single worker, certain concurrency issues never happen, but when we allow you to run concurrently, we have to deal with them.

Consider the subscription above, running on two workers. We handed a new customer document to Worker A, which started processing it. While Worker A is processing the document, that document has changed. That means that it needs to be processed again by the subscription. We have Worker B available and ready, but if we allow such a scenario, we risk getting a race between the workers, working on the same document.

We could punt that to the user and ask them to ensure that this is something that they handle, but that isn’t the philosophy of RavenDB. Instead, we have implemented the following behavior for concurrent subscriptions:

When the server sends a batch of documents to a worker, that worker “checks them out”. Until that worker signals the server that the batch has been either processed or failed, we’ll not send those documents out to other workers, even if they have been modified. Once a batch is acknowledged as processed, we’ll scan all the documents in that batch and see if we need to schedule them for the next batch, because they were missed while they were checked out.
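The check-out behavior can be modeled with a small Python class. This is a toy model under stated assumptions, not RavenDB’s implementation: the class `ConcurrentSubscriptionModel` and its methods are hypothetical, only the acknowledged (processed) path is modeled, and each worker is assumed to acknowledge its batch before asking for another.

```python
from typing import Dict, List, Set


class ConcurrentSubscriptionModel:
    """Toy model of checking documents out to workers."""

    def __init__(self) -> None:
        self.pending: List[str] = []                # documents waiting to be sent
        self.checked_out: Dict[str, Set[str]] = {}  # worker name -> documents it holds
        self.modified_while_out: Set[str] = set()   # changed while checked out

    def _is_checked_out(self, doc_id: str) -> bool:
        return any(doc_id in docs for docs in self.checked_out.values())

    def document_changed(self, doc_id: str) -> None:
        if self._is_checked_out(doc_id):
            # Do not hand the document to another worker; remember it for later.
            self.modified_while_out.add(doc_id)
        elif doc_id not in self.pending:
            self.pending.append(doc_id)

    def next_batch(self, worker: str, size: int) -> List[str]:
        batch, self.pending = self.pending[:size], self.pending[size:]
        self.checked_out[worker] = set(batch)
        return batch

    def acknowledge(self, worker: str) -> None:
        # Scan the acknowledged batch and reschedule anything that changed meanwhile.
        for doc_id in sorted(self.checked_out.pop(worker)):
            if doc_id in self.modified_while_out:
                self.modified_while_out.discard(doc_id)
                self.pending.append(doc_id)


server = ConcurrentSubscriptionModel()
server.document_changed("customers/1")
server.document_changed("customers/2")

batch_a = server.next_batch("A", size=1)           # Worker A checks out customers/1
server.document_changed("customers/1")             # modified while A is still working
batch_b = server.next_batch("B", size=10)          # Worker B must not receive customers/1
server.acknowledge("A")
batch_after_ack = server.next_batch("A", size=10)  # now customers/1 is sent again

print(batch_a, batch_b, batch_after_ack)
```

In this model, Worker B only ever sees `customers/2`, and the modified `customers/1` is handed out again only after Worker A has acknowledged its batch.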

That means that from the perspective of the user, they can write code knowing that only a single subscription worker will run on a given document at a time. This is a very powerful promise and can significantly reduce the complexity of building your systems. A single worker that is stalling will not prevent the other workers from making progress. There aren’t any timeouts to deal with. If you have a process that may take a long time, as long as the worker is alive and functioning (maintaining the TCP connection to the server), the server will consider the documents that the worker is processing as checked out.

Concurrent subscriptions require you to opt in, using the Concurrent flag. All workers for a subscription must agree to run in concurrent mode. This is to ensure that there aren’t any workers that expect a purely serial work model. If you don’t set this flag, you’ll keep getting the usual serial behavior of subscriptions. We require opting in to this behavior because it violates an important guarantee of the subscription: that you’ll process the documents in the order in which they were modified. This is now no longer the case, obviously.

The first worker to connect to a subscription will determine whether it runs in concurrent mode or serial mode. Any new worker trying to run on that subscription needs to be concurrent (if the first one was concurrent), and no concurrent worker can join a subscription that has a serial worker active. It is important to note that this is a transient setting. When the last worker shuts down, the subscription state is reset, and the next worker to connect is again treated as the first one (and will therefore set the mode of the subscription).
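These rules amount to a small state machine, sketched here in Python. The class `SubscriptionModeModel` is hypothetical and is not the RavenDB API. One simplification is an assumption of the sketch: a second serial worker is simply rejected, since how the real server treats that case is outside the scope of this post.

```python
from typing import Optional, Set


class SubscriptionModeModel:
    """Toy model of how the first worker fixes the mode of a subscription."""

    def __init__(self) -> None:
        self.mode: Optional[str] = None  # None until a worker connects
        self.workers: Set[str] = set()

    def connect(self, worker: str, concurrent: bool) -> bool:
        requested = "concurrent" if concurrent else "serial"
        if not self.workers:
            self.mode = requested  # the first worker decides the mode
        elif self.mode != requested or self.mode == "serial":
            # Mode mismatch, or a serial worker is already active (assumption: reject).
            return False
        self.workers.add(worker)
        return True

    def disconnect(self, worker: str) -> None:
        self.workers.discard(worker)
        if not self.workers:
            self.mode = None  # last worker left, so the state is reset


subscription = SubscriptionModeModel()
first = subscription.connect("A", concurrent=True)           # sets concurrent mode
second = subscription.connect("B", concurrent=True)          # allowed to join
serial_joiner = subscription.connect("C", concurrent=False)  # refused

subscription.disconnect("A")
subscription.disconnect("B")                                 # state is reset here

serial_first = subscription.connect("C", concurrent=False)   # now sets serial mode
late_concurrent = subscription.connect("D", concurrent=True) # refused

print(first, second, serial_joiner, serial_first, late_concurrent)
```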

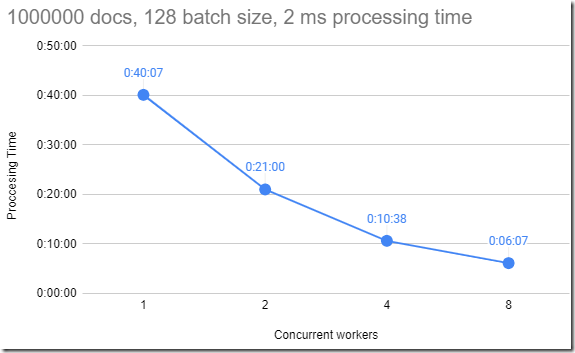

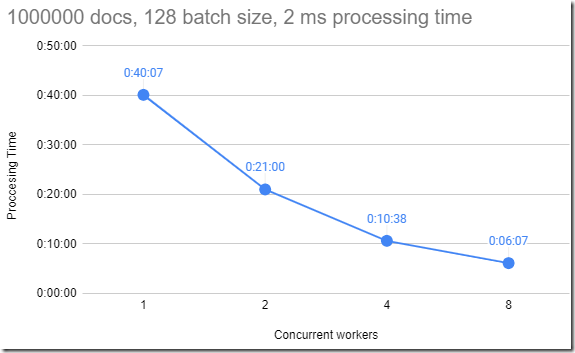

You can see in the benchmark image on the right the impact of adding concurrent workers when there is a nontrivial processing time. It is important to note that the concurrent part of concurrent subscriptions is the fact that the workers are running in parallel. We are still sending batches of documents to each worker independently and then waiting for confirmation. If you have no significant processing time for a batch, you’ll not see a significant improvement in processing time (the server-side cost of processing the documents, sending the batch, etc., is related to the total number of documents and won’t be impacted).

Concurrent subscriptions are available in RavenDB 5.3 (due to be released by mid-November), in the Professional and Enterprise editions of RavenDB.