Architecture on the cloud: Avoid writing the code

Assume that you have a service that needs to accept some data from a user. Let’s say that the user wants to upload a photo that you’ll later process, aggregate, or otherwise work with.

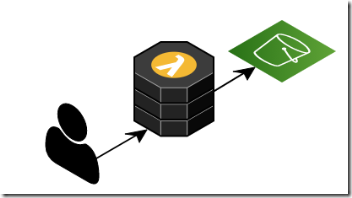

How would you approach such a system? One way to do this is to do something similar to this:

The user will upload the function to your code (in the case above, a Lambda function, but can be an EC2 instance, etc) which will then push the data to its final location (S3, in this case). This is simple, and quite obvious to do. It is also wrong.

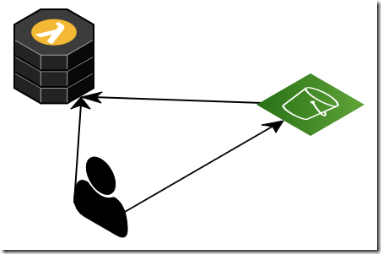

There is no need to involve your code in the middle. What you should do, instead, is to have the user talk directly to the end location (S3, Azure Blob Storage, Backblaze, etc). A better option would be:

In this model, we have:

- The user pings your code to generate a secured upload link. You can also set up an “upload only area” in storage ahead of time that a user can upload files to, removing this step.

- The user uploads directly to S3 (or equivalent).

- S3 will then ping your code when the upload is done.
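
The first step above can be very little code. Here is a minimal sketch, assuming S3 and a boto3 client; the function name `create_upload_link`, the five-minute lifetime, and the bucket and key names in the usage note are all made up for illustration:

```python
from typing import Any

# Assumption: five minutes is long enough for a single photo upload.
UPLOAD_LINK_TTL_SECONDS = 300


def create_upload_link(s3_client: Any, bucket: str, key: str,
                       ttl_seconds: int = UPLOAD_LINK_TTL_SECONDS) -> str:
    """Return a time-limited URL that the user can HTTP PUT the file to.

    `s3_client` is expected to be a boto3 S3 client, for example the
    result of boto3.client("s3"). The signing happens locally, so your
    code never touches the uploaded bytes.
    """
    return s3_client.generate_presigned_url(
        "put_object",
        Params={"Bucket": bucket, "Key": key},
        ExpiresIn=ttl_seconds,
    )
```

Your endpoint would call something like `create_upload_link(boto3.client("s3"), "photo-uploads", "user-42/photo.jpg")` and hand the resulting URL back to the user, who then sends the file straight to storage with a plain HTTP PUT.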

Why use this approach rather than the first one?

Quite simply, because in the first example, you are paying for the processing, the upload time, and the bandwidth for the work. In the second option, it is on the cloud / storage provider to actually provision enough resources to handle this. You are paying for the storage, but nothing else.

Consider the case of a user that uploads a 5 MB image over 5 seconds. If you are using the first option, you’ll pay for the full 5 seconds of compute time if you are using something like Lambda. If you are using EC2, your machine is busy and consumes resources for the duration.

This is most noticeable if you also have to handle spikes / load. If you have 100 concurrent users, the first option will likely cost quite a lot just in the compute resources you use (either serverless or provisioned machines). In the second option, it is the cloud provider that needs to have the machines ready to accept the data, and we don’t pay for any of that.
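
To put rough numbers on this, here is a back-of-the-envelope sketch. It assumes Lambda-style billing by memory multiplied by duration (GB-seconds). The monthly upload count, the memory size, and the price per GB-second are placeholders I picked for illustration, so check your provider's current pricing before relying on any of them:

```python
def proxied_upload_gb_seconds(uploads: int, seconds_per_upload: float,
                              memory_gb: float) -> float:
    """Compute time billed when every upload is held open by your function."""
    return uploads * seconds_per_upload * memory_gb


# Hypothetical numbers, for illustration only.
UPLOADS_PER_MONTH = 1_000_000
SECONDS_PER_UPLOAD = 5.0            # from the example: 5 MB over 5 seconds
MEMORY_GB = 0.5                     # assumed function memory size
PRICE_PER_GB_SECOND = 0.0000166667  # assumed price, not a quote

gb_seconds = proxied_upload_gb_seconds(
    UPLOADS_PER_MONTH, SECONDS_PER_UPLOAD, MEMORY_GB)
monthly_cost = gb_seconds * PRICE_PER_GB_SECOND

print(f"{gb_seconds:,.0f} GB-seconds, about ${monthly_cost:,.2f} per month")
```

All of that compute is spent waiting on the user's network connection. With direct uploads, this particular line item goes away, and a spike of 100 concurrent uploads no longer means 100 busy function instances on your bill.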

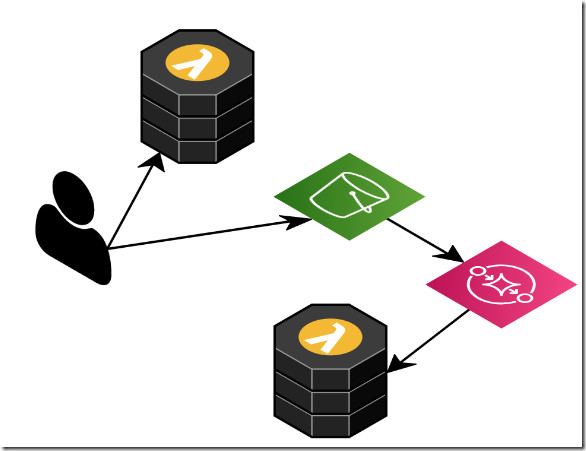

In fact, a much better solution is shown here. Again, the user gets the upload link in some manner and then upload directly to S3. At that point, instead of S3 calling you, it will push the notification to a queue (SQS) and then your code can handle this.

Here is what this looks like:

Note that in this case, you are in control of how fast or slow you want to process the data on the queue. You can set a maximum number of concurrent workers / lambdas and let the cloud infrastructure manage that for you. At this point, you can smooth any peaks that you have in the process.
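
The code on the consuming side stays small as well. Below is a minimal sketch of a queue-triggered Lambda handler, assuming S3 delivers its event notifications to SQS as JSON in each message body. The names `uploaded_objects` and `process_photo` are hypothetical, and `process_photo` is only a stand-in for whatever you actually do with the photo:

```python
import json
from urllib.parse import unquote_plus


def uploaded_objects(sqs_event: dict) -> list[tuple[str, str, int]]:
    """Extract (bucket, key, size) for each upload announced in an SQS batch."""
    found = []
    for message in sqs_event.get("Records", []):
        s3_notification = json.loads(message["body"])
        # S3 sends a test message without "Records" when the notification
        # is first configured; .get() lets us skip it quietly.
        for record in s3_notification.get("Records", []):
            bucket = record["s3"]["bucket"]["name"]
            # Object keys arrive URL-encoded in the notification.
            key = unquote_plus(record["s3"]["object"]["key"])
            size = record["s3"]["object"].get("size", 0)
            found.append((bucket, key, size))
    return found


def process_photo(bucket: str, key: str, size: int) -> None:
    # Hypothetical placeholder for the real processing step.
    print(f"processing s3://{bucket}/{key} ({size} bytes)")


def handler(event, context):
    """Lambda entry point, invoked with a batch of SQS messages."""
    for bucket, key, size in uploaded_objects(event):
        process_photo(bucket, key, size)
```

The concurrency cap itself is not in the code. It is a setting on the queue consumer, which is the point: the throttling is configuration that the cloud infrastructure enforces for you.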

A lot of this is just setting up the orchestration properly so that you aren’t in the way, and so that you utilize the cloud infrastructure instead of writing your own code.

Comments

These cost gotchas in the cloud are an extra dimension we continually struggle with.

What would be the title of the job position whose skillset is determining and optimizing these points? Is there such a thing yet?

peter,

On the cloud? I'm aware of multiple companies whose business model is basically looking at your cloud bill, reducing it significantly and taking a cut of the savings.

I'm teaching cloud computing at university and the amount of waste that some solutions have is horrible.

This is why FinOps, where you focus on the money you pay and make value/cost-driven decisions, will play its role. One can argue about having it wrapped up as a foundation, but still, the idea of being value/cost driven is the thing, especially when you apply it to the commoditized landscape of the cloud.

Scooletz,

I like the FinOps term, first time that I hear it. Did you come up with the term?

I wish I did :) https://www.finops.org

How do you implement constraints like upload quotas with this architecture? Does, e.g., Azure Blob Storage support generating individual upload links that are limited to a certain max upload size?

Fabian,

To my knowledge, you don't have such a feature available. In general it looks like there is a hard limit of 5GB in Azure's case.

S3 offers a way to ensure that this is an exact size, I guess, but not exactly what you are asking for.

Yeah, it's a pity. So if you need to enforce quotas or limit the maximum file size for some other reason, I guess you can't help but upload through application code 😔.

@Fabian You could let the user upload files of any size and then check them once they're uploaded. I don't know about S3, but fairly sure Azure Blob Storage allows you to fetch metadata of a blob without downloading it. Then you can delete/ignore it and return an error to the user. Waste of time and space of course, but unless this is a frequent scenario, it's likely still way cheaper than routing all traffic over your app.

Enzi,

Agree, that would be a viable option. And unless you have to deal with serious abuse, likely the best one
