Investigating self inflicted wounds: The SSL failure on the Linux build server

A nasty cousin of It Works On My Machine is the It Fails On That Machine. It is nasty because you know that there is something wrong, but you can’t reproduce this.

The machine in question was our Linux build agent, and the failure in question was a set of failing tests that failed to perform certain operation when TLS was enabled. The problem? They were failing with I/O errors, but only with TLS, and the connection was using localhost. Further investigation showed that the most likely reason for the failure was a timeout. But how could that be? For fun, sometimes, the test passed. So it wasn’t an issue of a firewall of some such. Testing using openssl s_server and connecting to it manually didn’t show any issues.

The failures reproduced only on that particular build machine. Trying to reproduce the failure on other Linux machines failed. The problem was that these other machines had different kernel version (shouldn’t matter, probably) or different openssl versions (which likely mattered). We started to investigate what the issue was and tried to setup a secured server on the box and connect to it.

Which worked, so that sucked. Running the tests again showed them failing again… which was confusing.

Eventually we figured out that we couldn’t make it fail when running a server outside the tests, but we could observe that it was slow. Slow as in, took ~2.5 seconds to reply to the client’s hello message.

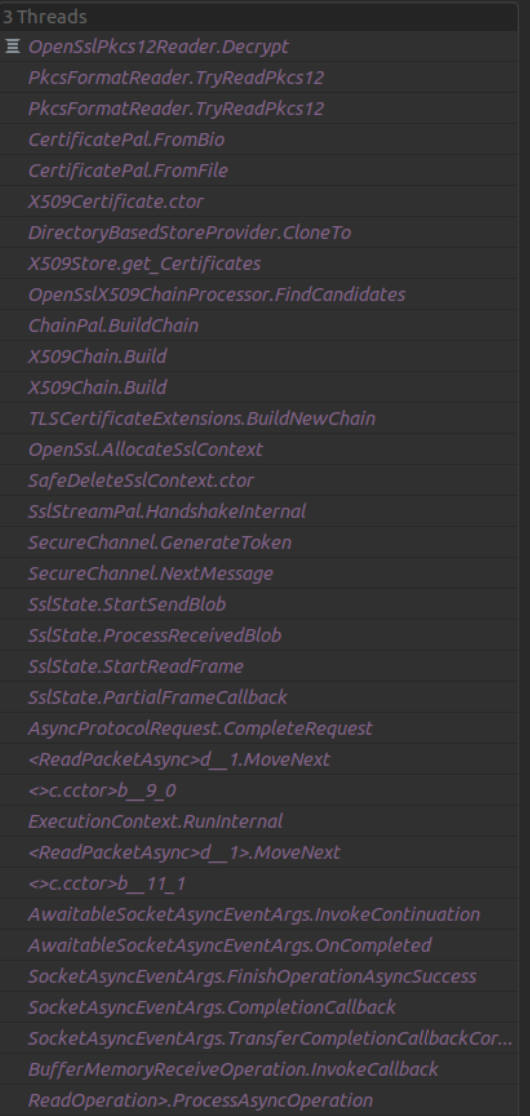

We tried it in the debugger, and whenever we paused it, it was always some variant of the following:

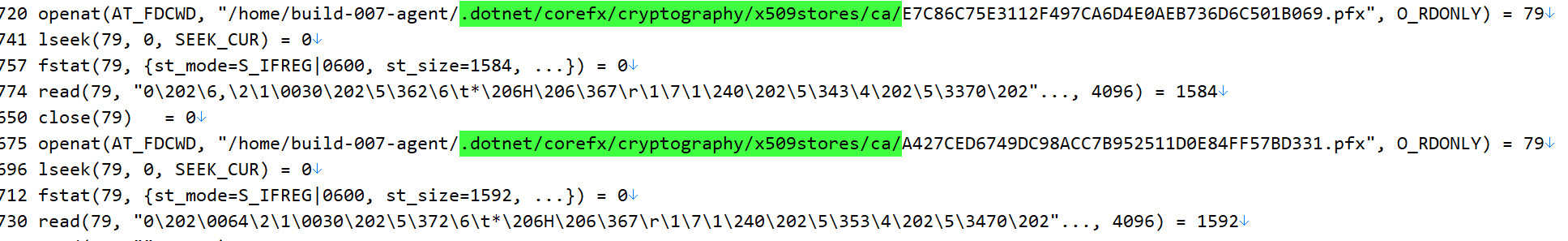

That led us to believe that there is something with openssl that could cause this slowdown. We tried to use strace to understand what it was doing, and we got stuff like this:

To be rather exact, we got over 300,000 calls to openat with some file in that directory.

That… didn’t seem right, to us. So we looked a bit deeper. As you can see, during the processing of an SSL connection, we can to X509Chain.Build, and that end up calling to X509Store.Certificates, and that end up reading all the certificates that you have on the “~/.dotnet/corefx/cryptography/x509stores/ca” directory.

That directory had…. 2,103 certificates files in it. And every single time that we had to do anything with a certificate, we would go and scan through all of them, including paying all the cryptographic costs of verifying all those certs. If we had multiple threads doing that at the same time, we would run into starvation issues. Just a single pass through that was 2.5 seconds for a single core. The test scenario had 20 connections at a minimum going at once, and that was the reason for the timeouts. We basically run out of CPU because we were spending all of those cycles on the certs.

And why did we have so many certs? The tests create a certificate every time that they run, and register that in the X509Store to make sure that certain features work. But we never delete it from the store…. And over time, we had more and more certs going on there, until the load was big enough to start breaking things.

Opps.

Comments

Comment preview