Stabilization stories: Slow TCP under Linux

RavenDB is pretty big: it is over 600,000 lines of C# code and over 220,000 lines of TypeScript code. In a codebase that large, there are unexpected interactions between different components (written by us, third parties and even the operating systems we use).

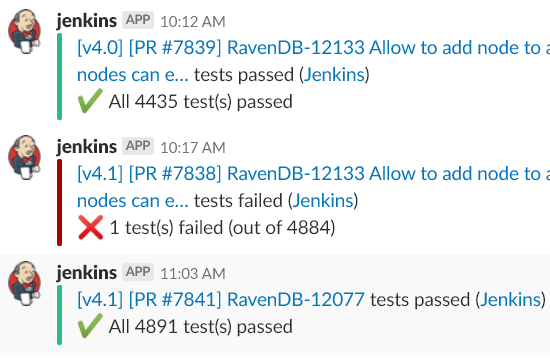

Given how important the stability of RavenDB is, we spend quite a bit of time (as in, the majority of it) not writing new features, but ensuring that the system is stable, predictable and observable. One part of that is a large suite of tests, which are being run on a variety of machines and conditions.

Some of these tests fail, in which case we fix them. A failing test is wonderful, because it tells us that something is wrong. A predictably failing test is a pleasure, because it states, in unambiguous terms, what is going on and what the trouble is. I love getting a failing test; there is usually a pretty straightforward way to figure out what went wrong and then actually fix it.

Then there are tests that fail occasionally, and I really hate them. They almost always relate to some sort of race condition. Sometimes, the race is in the test itself, but sometimes the problem is in the actual code. The problem is that tracking down such an issue is pretty hard and annoying. The more frequently we can induce the failure, the faster we can actually get to resolving it.

We recently had a test that failed, very rarely, and only on Linux.

The debugging landscape* on Linux is dramatically poorer compared to Windows, so that adds another hurdle.

* Yes, we have JetBrains’ Rider, and it is great. But it is still quite far from the debugging capabilities of Visual Studio, especially for non-trivial debugging.

The test failed because of a timeout waiting for a cluster to fully disseminate changes between all the members in the cluster. That means that we had a test that would spin up three to five independent nodes, combine them into a cluster, create a database that is shared among all these nodes, write documents to one of the nodes and then validate that the documents are indeed on all the nodes. A failure there, and a timeout failure at that, means that we have to inspect pretty much the whole system.
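The "wait until every node has the document, or time out" step of such a test can be sketched roughly as below. This is a minimal illustration in Python, not RavenDB's actual test code: the function name `wait_for_document`, the `node_stores` dictionaries standing in for cluster nodes, and the polling interval are all assumptions made for the example.

```python
import time


def wait_for_document(node_stores, doc_id, timeout_s, poll_s=0.01):
    """Poll until doc_id is present on every node, or give up after timeout_s.

    node_stores is a hypothetical stand-in for cluster nodes: a list of dicts
    mapping document ids to documents.
    """
    deadline = time.monotonic() + timeout_s
    while True:
        if all(doc_id in store for store in node_stores):
            return True  # the change reached every node
        if time.monotonic() >= deadline:
            return False  # timed out: at least one node never saw the document
        time.sleep(poll_s)
```

A `False` result here says only that some node did not catch up in time. It does not say which layer was slow, which is why a timeout in a test like this points at the whole system.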

Luckily, we had some good people on this issue, and they managed to come up with a minimal reproduction of the issue. All it took was to spin up a TcpListener and TcpClient and have them talk to one another, then do the same using SSL. We got some really interesting results because of that.

| | Windows | Linux | Diff |
| --- | ---: | ---: | ---: |
| Single Threaded – Plain | 192.8 | 200.8 | 104% |
| Single Threaded – SSL | 5,762.3 | 667,549.8 | 11,584% |
| Concurrent (200) – Plain | 11,377.5 | 932,487.9 | 8,195% |
| Concurrent (200) – SSL | 145,494.8 | 35,283,175.3 | 24,250% |

As you can see, there is a minor discrepancy in TCP connection times between the two platforms. All the tests were run on the same machine, testing over localhost.
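For readers who want to try a similar measurement, here is a rough sketch of timing plain TCP connections over localhost, single threaded and concurrently. It is written in Python with the standard `socket` module rather than with .NET's TcpListener and TcpClient, so it is not the reproduction we used and will not necessarily show the same behavior. The helper names (`start_listener`, `time_connect`, `measure`) are made up for the example, and the SSL variant is left out because it needs a certificate.

```python
import socket
import threading
import time
from concurrent.futures import ThreadPoolExecutor


def start_listener():
    """Bind a listener on an ephemeral localhost port and accept in the background."""
    server = socket.socket(socket.AF_INET, socket.SOCK_STREAM)
    server.setsockopt(socket.SOL_SOCKET, socket.SO_REUSEADDR, 1)
    server.bind(("127.0.0.1", 0))  # port 0 lets the OS pick a free port
    server.listen(256)

    def accept_loop():
        while True:
            try:
                conn, _ = server.accept()
            except OSError:
                return  # the listening socket is no longer usable
            conn.close()

    threading.Thread(target=accept_loop, daemon=True).start()
    return server, server.getsockname()[1]


def time_connect(port):
    """Return the seconds taken to establish one TCP connection."""
    start = time.perf_counter()
    with socket.create_connection(("127.0.0.1", port), timeout=5):
        elapsed = time.perf_counter() - start
    return elapsed


def measure(port, connections, workers):
    """Time `connections` connects using `workers` threads; return the mean in seconds."""
    with ThreadPoolExecutor(max_workers=workers) as pool:
        samples = list(pool.map(time_connect, [port] * connections))
    return sum(samples) / len(samples)
```

Calling `measure(port, 200, 1)` approximates the single threaded row and `measure(port, 200, 200)` the concurrent one. Running the same script on both operating systems, on the same hardware, gives a like-for-like comparison.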

We opened an issue for this problem, and for now we deal with it by accepting that the connection time can be very long and adjusting the timeout for the test.
