RavenDB 4.0: Full database encryption

Late last year I talked about our first thoughts about implementing encryption in RavenDB 4.0. I got some really good feedback, and that led to this post, detailing the initial design for RavenDB encryption in the 4.0 version. I’m happy to announce that we are now pretty much done implementing, testing and banging on this, and we have working full database encryption in 4.0. This post will discuss how we implemented it, along with additional considerations regarding managing and working with encrypted databases and clusters.

RavenDB uses the ChaCha20Poly1305 authenticated encryption scheme, with 256-bit keys. Each database has a master key, which on Windows is kept encrypted via DPAPI (on Linux we are still figuring out the best thing to do there), and we encrypt each page (or range of pages, if a value takes more than a single page) using a key derived from the master key and the page number. We also use a random 64-bit nonce the first time each page is encrypted, and then increment the nonce every time we need to encrypt the page again.
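To make the key and nonce handling concrete, here is a minimal sketch using only the Python standard library. It is not RavenDB's code: the post does not name the key derivation function, so keyed BLAKE2b is an assumption, and `derive_page_key`, `initial_nonce`, `next_nonce` and the `page-key` label are hypothetical names. The actual page encryption (ChaCha20Poly1305 via libsodium) is left out.

```python
import hashlib
import secrets

KEY_BYTES = 32    # 256-bit keys, as described in the post
NONCE_BYTES = 8   # 64-bit nonce, as described in the post


def derive_page_key(master_key: bytes, page_number: int) -> bytes:
    """Derive a per-page key from the master key and the page number.

    Assumption: keyed BLAKE2b with the page number as the salt. The post
    only says the key is derived from the master key and the page number.
    """
    salt = page_number.to_bytes(16, "little")  # BLAKE2b salt is at most 16 bytes
    return hashlib.blake2b(
        digest_size=KEY_BYTES,
        key=master_key,
        salt=salt,
        person=b"page-key",  # hypothetical context label
    ).digest()


def initial_nonce() -> bytes:
    """Random nonce for the first time a page is encrypted."""
    return secrets.token_bytes(NONCE_BYTES)


def next_nonce(nonce: bytes) -> bytes:
    """Increment the nonce (as a little-endian counter) before re-encrypting."""
    value = (int.from_bytes(nonce, "little") + 1) % (1 << (8 * len(nonce)))
    return value.to_bytes(len(nonce), "little")
```

The point of the increment is that a page key is never used twice with the same nonce: every rewrite of a page gets a fresh (key, nonce) pair.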

We are using libsodium as the encryption library, and in general it makes it a pleasure to work with encryption, since it is very focused on getting things done and getting them done right. The number of decisions that we had to make by using it is minimal (which is good). The pattern of initial random generation of the nonce and then incrementing on each use is the recommended method for using ChaCha20Poly1305. The WAL is also encrypted on a per transaction basis, with a random nonce and a key derived from the master key and the transaction id.

This encryption is done at the lowest possible level, so it is actually below pretty much anything in RavenDB itself. When a transaction needs to access some data, it is decrypted on the fly for it, and then it is available for the duration of the transaction. When the transaction is closed, we’ll encrypt all modified data again, then wipe all the buffers we used to ensure that there is no leakage. The only time we have decrypted data in memory is during the lifetime of a transaction.
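The transaction lifecycle described above can be sketched roughly as follows. This is an illustration of the idea only, not how Voron is implemented: the `Transaction` class, its methods and the `Cipher` callable type are all hypothetical, and the cipher is passed in so that any authenticated cipher could stand in for it.

```python
from typing import Callable, Dict

# Hypothetical cipher signature: (page_number, data) -> transformed data
Cipher = Callable[[int, bytes], bytes]


class Transaction:
    """Decrypt pages on first access, re-encrypt modified pages on close."""

    def __init__(self, store: Dict[int, bytes], encrypt: Cipher, decrypt: Cipher):
        self._store = store          # page number -> encrypted page
        self._encrypt = encrypt
        self._decrypt = decrypt
        self._buffers: Dict[int, bytearray] = {}  # decrypted working copies
        self._dirty = set()

    def read(self, page_number: int) -> bytearray:
        # Decrypt on the fly; the plaintext lives only for this transaction.
        if page_number not in self._buffers:
            plain = self._decrypt(page_number, self._store[page_number])
            self._buffers[page_number] = bytearray(plain)
        return self._buffers[page_number]

    def write(self, page_number: int, data: bytes) -> None:
        buffer = self.read(page_number)
        buffer[:] = data
        self._dirty.add(page_number)

    def __enter__(self) -> "Transaction":
        return self

    def __exit__(self, exc_type, exc, tb) -> bool:
        try:
            if exc_type is None:
                # Encrypt all modified data again before it goes back to the store.
                for page_number in self._dirty:
                    self._store[page_number] = self._encrypt(
                        page_number, self._buffers[page_number]
                    )
        finally:
            # Wipe every decrypted buffer so no plaintext outlives the transaction.
            for buffer in self._buffers.values():
                buffer[:] = bytes(len(buffer))
            self._buffers.clear()
            self._dirty.clear()
        return False
```

Note that Python cannot guarantee that no other copies of the plaintext exist in memory, so the wiping here only shows the intent. A native implementation controls its own buffers and can actually zero them.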

A really nice benefit of working at such a low level is that all the features of the database are automatically included. That means that adding our Lucene on Voron implementation, for example, is also part of this, and all indexes are encrypted as well, without having to take any special steps.

An encrypted database does pose some challenges. Not so much for the database author (that’s me) as for the database users (that’s you). If you take a backup of an encrypted database, restoring it pretty much requires that you have the master key to actually access the data. When you are running in a cluster, that represents another challenge, since you need to make sure that all the nodes in the cluster running the database are encrypting it. Communication about the database should also be encrypted. Tasks such as periodic backup / export / etc. also need special treatment. Key generation is also important; you want to make sure that the key isn’t “123456” or some such.

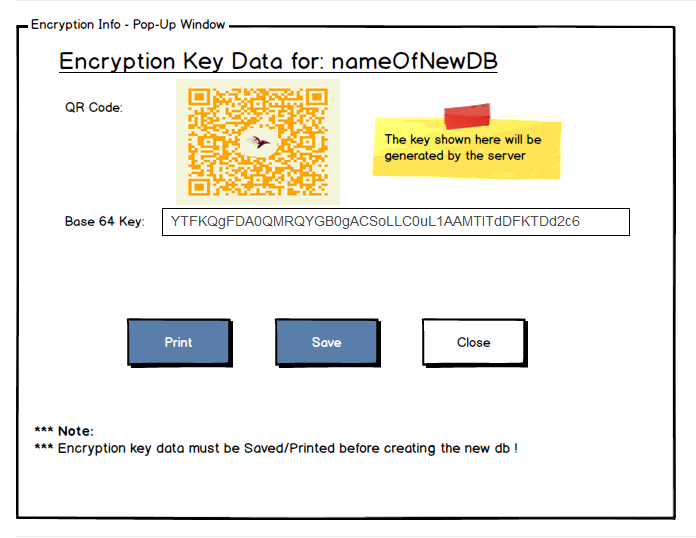

Here is what we came up with. When you create a new encrypted database, you’ll be presented with the following UI:

The idea is that you can either provide your own key or have the server generate one for you (secured, cryptographically random 256 bits), in which case we’ll show it to you and allow you to save it / print it / record it for later. This is the only chance you’ll have to get the key from the server.
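As a rough idea of what server-side key generation amounts to, here is a small sketch. The function names are hypothetical, and showing the key as Base64 is an assumption; the post does not say how the key is presented.

```python
import base64
import secrets

KEY_BYTES = 32  # 256 bits


def generate_master_key() -> bytes:
    """Generate a cryptographically random 256-bit key."""
    return secrets.token_bytes(KEY_BYTES)


def key_for_display(key: bytes) -> str:
    """Encode the key as text so it can be saved, printed or recorded."""
    return base64.b64encode(key).decode("ascii")


def parse_displayed_key(text: str) -> bytes:
    """Read a key back from its text form, rejecting anything of the wrong size."""
    key = base64.b64decode(text, validate=True)
    if len(key) != KEY_BYTES:
        raise ValueError("expected a 256-bit key")
    return key
```

A user-provided key would go through something like `parse_displayed_key`, which at least rules out a key such as “123456” on length alone.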

After you have the key, you then select which nodes, and how many, this database is going to run on, which will cause it to create the master key on all of those nodes for that particular database. In this manner, all the nodes for this database will use the same key, which simplifies some operational tasks (recovery / backup / restore / etc.). Alternatively, you can create the database on a single node, and then add additional nodes to it, which will give you the option to generate / provide a key for each. I’m not sure whether ops will be happy with either option, but we have the ability to either have all of them use the same key or have a separate key for each node.

Encrypted databases can only reside on nodes that communicate with each other via HTTPS / SSL. The idea is that if you are running an encrypted database, requests between the different nodes should also be private, so you’ll have protection for data at rest and as the data is being moved around. Note that we verify that at the sender, not the origin side. Primarily, this is about typical deployment patterns. We expect to see users running RavenDB behind firewalls / proxies / load balancers, and we expect people to use nginx as the SSL endpoint and then talk HTTP to RavenDB (which is reasonable if they are on the same machine or using Unix sockets). Regardless, when sending data over the wire, we are always talking RavenDB instance to RavenDB instance via a TCP connection, and for encrypted databases, this will require an SSL connection to work.
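A sender-side check along these lines might look like the sketch below. The function names and the URL-based node list are assumptions for illustration, not RavenDB's API.

```python
from typing import Iterable, List
from urllib.parse import urlsplit


def insecure_nodes(node_urls: Iterable[str]) -> List[str]:
    """Return the node URLs that are not using HTTPS."""
    return [url for url in node_urls if urlsplit(url).scheme.lower() != "https"]


def ensure_can_send_encrypted_database(node_urls: Iterable[str]) -> None:
    """Refuse to send encrypted database data to nodes over a plain connection."""
    bad = insecure_nodes(node_urls)
    if bad:
        raise ValueError("encrypted databases require HTTPS on: " + ", ".join(bad))
```

Because the check runs on the sending node against the destination address, it does not care how requests reach the sender itself, which fits the nginx-in-front deployment mentioned above.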

Other things, such as ETL tasks, will generate a suggestion to the user to ensure that they are also secured, but we’ll assume here that this is an explicit operation to move some of the data to another location, possibly filtering it, so we’ll not block it if it isn’t using HTTPS (leaving aside the fact that just figuring out whether the connection to a relational database is using SSL or not is too complex to try). We’ll warn about it and trust the user.

Finally, we have the issue of backups and exports. Those can be done on a regular basis, and frequently you’ll want them to be done to a remote location (cloud, S3, Dropbox, etc). In that case, all automated backup processes will require you to generate a public / private key pair (and only retain the public key, obviously). From then on, all the backups will be encrypted using the public key and uploaded to the cloud. That means that even if your cloud account is hacked, the hackers can’t do anything with the data, since they are missing the private key.

Again, to encourage users to actually do the right thing and save the private key, we’ll offer it in a form that is easily maintained safely. So you’ll be asked to print it and store it in some file folder somewhere (in addition to whatever digital backups you have), so you can restore it at a later point when / if you need it.

For manual operations, such as exporting an encrypted database, we’ll warn if you are trying to export without a key, but allow it (since forcing a key for a manual operation does nothing for security). Conversely, exporting a non-encrypted database will also allow you to provide a key pair and encrypt the output, both for manual operations and automatic backup configurations.

Aside from those considerations, you can pretty much treat an encrypted database as a regular one (except, of course, that the data is encrypted at all times unless actively accessed). That means that all features would just work. The cost of actually encrypting and decrypting all the time is another concern, and we have seen about 60% additional cost in write speed and about 15% extra cost for reading.

Just to give you some idea about the performance we are talking about… Ingesting the entire Stack Overflow dataset, some 52GB in size and over 18 million documents, can be done in 22 minutes with encryption. Without encryption we are faster, roughly 13 and a half minutes on that same machine, but that still gives us a rate of roughly 2.4 GB per minute with encryption.
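The arithmetic behind those numbers is easy to check. The figures come from the paragraph above; the variable names are just for illustration.

```python
DATASET_GB = 52            # Stack Overflow dataset size
ENCRYPTED_MINUTES = 22     # ingest time with encryption
PLAIN_MINUTES = 13.5       # ingest time without encryption

encrypted_rate = DATASET_GB / ENCRYPTED_MINUTES     # GB per minute, encrypted
plain_rate = DATASET_GB / PLAIN_MINUTES             # GB per minute, unencrypted
extra_time = ENCRYPTED_MINUTES / PLAIN_MINUTES - 1  # relative extra ingest time

print(f"encrypted: {encrypted_rate:.2f} GB/min")
print(f"plain:     {plain_rate:.2f} GB/min")
print(f"extra time with encryption: {extra_time:.0%}")
```

This works out to about 2.36 GB per minute encrypted versus about 3.85 GB per minute unencrypted, and about 63% more ingest time, which lines up with the roughly 60% extra write cost mentioned earlier.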

As you can tell from the mockup, while we have completed most of the encryption work around the engine, the actual UI and behavioral semantics are still in a bit of flux. Your comments about those are welcome.

Comments

You said you are encrypting the entire page using ChaCha20, but that is a stream cypher, not a block cypher. Usually files and other blocks are encrypted using a block cypher (like AES). Why did you choose a stream cypher?

David, There are several reasons here. A block cipher like AES is typically slower than a stream cipher (and / or requires dedicated hardware support to run). In the AES case, most of the platforms that we are running on are likely to have AES hardware, but many Android phones don't have it. So it is a factor, but not a major one.

AES also requires padding, and there has been a whole host of issues around that in various implementations. In practice, in pretty much all our scenarios, we'll always encrypt pages, which are always multiples of 4KB, which means that we probably won't have to deal with padding.

In practice, a block cipher is always used in a chaining mode, and the recommended ones almost always turn it into a stream cipher anyway (CTR, for example). A major headache with AES is that it is tricky to implement without exposing side channel information. I'm assuming that whatever I'll use will be protected from that, but that has repeatedly been tripping implementors, so that is a concern. See the history here: https://en.wikipedia.org/wiki/Advanced_Encryption_Standard

What really drove the decision was that we looked to see what would be the gold standard, and by far the simplest and most highly recommended option was libsodium. Mostly because it was written by people who actually know cryptography, who actually sat down and thought about how to make APIs that would make it possible for consumers to offer valid security guarantees without a PhD in cryptanalysis.

After selecting libsodium, the rest was a matter of selecting the appropriate algorithm. ChaCha is faster, simpler to use with the API provided (I can actually follow what the code is doing, to a certain degree) and has been extensively reviewed. It is also portable across platforms. The libsodium AES implementation requires SSE3 instructions that aren't available on ARM, for example, and we do want to run on that.

This is an unfortunate decision.

AES is a national standard in the US, and it's required in numerous compliance scenarios such as healthcare.

All of the technical challenges you mention have simple solutions. You argue that padding is a problem, but go on to say that your implementation doesn't require padding. By now AES implementations are mature and widely available. To talk about implementation issues of AES as an excuse to use ChaCha is nonsense.

Most modern PCs will have SSE support, with which AES is extraordinarily fast. If it's true that sodium ONLY supports AES with SSE, then it's simply not a good library to use.

And then I would hesitate to call libsodium a "gold standard" when it comes to libraries.

When it comes to algorithms, AES is the gold standard; chacha is not a standard at all.

Larry, AES is actually not required for compliance. What is required is a certified implementation of it, which is something quite different.

AES attacks are also something that happens on a regular basis, see: https://en.wikipedia.org/wiki/Side-channel_attack#cite_note-3

In particular, take a look at OpenSSL, one of the most widely used crypto libraries. A side channel attack was able to get the key in 13 ms. See: http://cs.tau.ac.il/~tromer/papers/cache.pdf

Given that we want to use libsodium, because it has a great reputation and is generally trusted, it doesn't matter whether we use ChaCha or AES for the crypto; neither of them has been formally certified. However, given the amount of attention libsodium got, and the fact that the code is actually something that we can read and understand, we chose to use that.

The provided API is also intentionally made to be used by people who aren't cryptographers, which helps prevent mistakes. Given the general state of crypto libraries (see: https://en.wikipedia.org/wiki/Comparison_of_cryptography_libraries) I'd rather choose something that we can support, has a good community and is respected.

I understand the issue with regards to FIPS / certification, etc. But given that stuff like BouncyCastle is in the same boat, I don't think that matters. Given our requirement for running cross platform and being able to move between systems, which includes ARM systems, not just PCs, we need something that we can take with us. Given that, we pretty much rule out all certified libs (which are tied to a specific version / OS / etc.).

Leaving that aside, we chose to use libsodium for the aforementioned reasons.

Thank you for the interesting notes on vulnerabilities and libraries.

I see no problem with the choice of libsodium in general. My concerns were not about FIPS certification. The issue is that in the US healthcare industry, AES256 is almost universally cited as the required algorithm (and key length) for storing patient data (encryption at rest). And potential healthcare relationships undergo security reviews where questions about encryption are asked in detail. The use of any other algorithm will pose business challenges.

Will AES still be an option for encryption in 4.0? It's not clear to me the relationship between the new "full database encryption" and what was available in 3.5 through the Encryption bundles. I would hope the algorithm will still be configurable so that AES256 is still a possibility.

Larry, Switching to AES-GCM is something that we can do relatively easily. We explicitly reserved enough space to do that if we need to. However, I'm not sure how much requirement there is for that without certification.

The full database encryption replaces the encryption bundle in 3.5.

I think you will find there is a lot. I have a large database on a Windows server. I don't care about ARM support. I wouldn't suggest switching; ideally you would have encryption options, as you do now. ChaCha may even be better than AES. But if AES is not even a choice I believe you will get complaints. And what about existing databases? Are they going to have to be rebuilt on 4.0?

Larry, Existing databases are going to be migrated to RavenDB 4.0, yes. This can be done as part of the setup of 4.0
