RavenDB is a distributed database, and it has been one since pretty much the very start, although over time we have been making the distribution part easier and easier. You might be able to tell that the design of RavenDB was heavily influenced by the Dynamo paper: RavenDB implements a multi-master system that allows every node to accept writes and disseminate them across the network.

This is great, because it ensures that we have a high degree of stability in the face of errors, but it also opens us up to some interesting failure modes. In particular, if a document is modified on two nodes at the same time, there is no way to immediately detect that. This is unlike a single-master system, where such a thing would be detected, but which requires at least a majority of the nodes to be up. A scenario where we have concurrent modifications of the same document on different servers is called a conflict, and it is something that RavenDB is quite able to detect and handle.
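To make "concurrent modification" concrete, here is a minimal sketch of how a Dynamo-style system can tell a conflict apart from an ordinary update. It assumes each document version carries a version vector (a per-node write counter). The `VersionVector` type and the `compareVectors` function are hypothetical names for illustration, not RavenDB's actual API or internals.

```typescript
// Hypothetical model: a version vector maps a node name to the number of
// writes to this document that the version has seen from that node.
type VersionVector = { [node: string]: number };

type Relation = "equal" | "before" | "after" | "conflict";

// Compare two version vectors. If each side has seen a write the other
// has not, neither version descends from the other: they are concurrent.
function compareVectors(a: VersionVector, b: VersionVector): Relation {
  let aAhead = false;
  let bAhead = false;
  const nodes = Object.keys(a).concat(Object.keys(b));
  for (const node of nodes) {
    const aCount = a[node] || 0; // a missing entry counts as zero writes
    const bCount = b[node] || 0;
    if (aCount > bCount) aAhead = true;
    if (bCount > aCount) bAhead = true;
  }
  if (aAhead && bAhead) return "conflict";
  if (aAhead) return "after";
  if (bAhead) return "before";
  return "equal";
}

// Both nodes started from { A: 1 }, then each accepted a write on its own.
const writtenOnA: VersionVector = { A: 2 };
const writtenOnB: VersionVector = { A: 1, B: 1 };
const relation = compareVectors(writtenOnA, writtenOnB); // "conflict"
```

The point of the sketch is that no node needs to coordinate with the others at write time. The conflict only becomes visible later, when the two versions meet during replication.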

For a very long time, we had multiple mechanisms to handle such conflicts. You could specify that RavenDB would resolve them automatically in favor of a particular node, or by using the latest version, or by specifying a resolution strategy on the server or the client. But by default, if you did nothing, a conflict would cause an exception and require you to resolve it.

No one ever handled that exception, and very few users set the conflict resolution or did something meaningful with it. We typically heard about it in support calls along the lines of “this document is not accessible and the sky has just fallen”. That is perfectly understandable from the point of view of the user, but incredibly frustrating from ours. Here we are, careful to account for correctness of behavior in a distributed system, properly detecting conflicts and bringing them to the attention of the user, and the result is… they just want the error to go away.

In the vast majority of cases, the user didn’t care about the conflict at all. It wasn’t important and any version would do. And that is after we went to all the trouble of making sure that you have powerful conflict resolution options that allow you to do some really fun things. The overwhelming response we got was “make this go away”. The problem is that we can’t really make such a thing go away; this is fundamentally an issue that a multi-master distributed system must handle. And just throwing one of the conflicted versions under the bus didn’t sit right with us.

RavenDB is an ACID database because I strongly believe that transactions matter, that your data is important and should be respected, not shredded to pieces at a moment’s notice for fear of someone figuring out that there has been a conflict. I wrote about another aspect of this issue previously: what the user expects and the right thing to do are decidedly at odds here. In particular, the right thing (handling conflicts) can be hard for the user, and it is something that you would typically do only on some parts of your domain model.

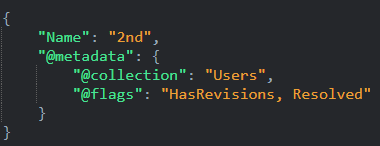

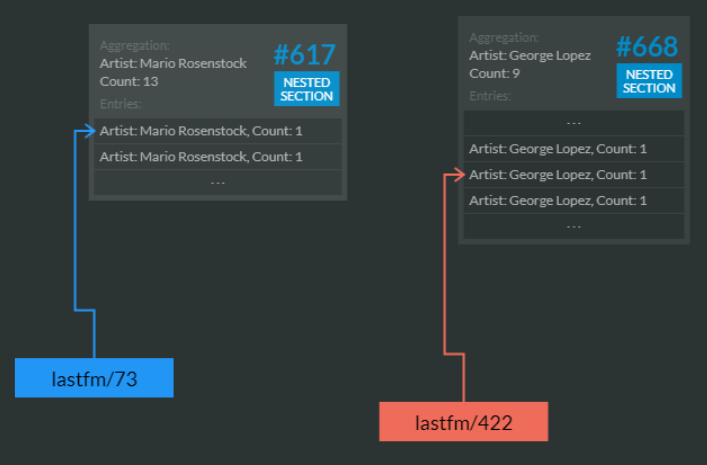

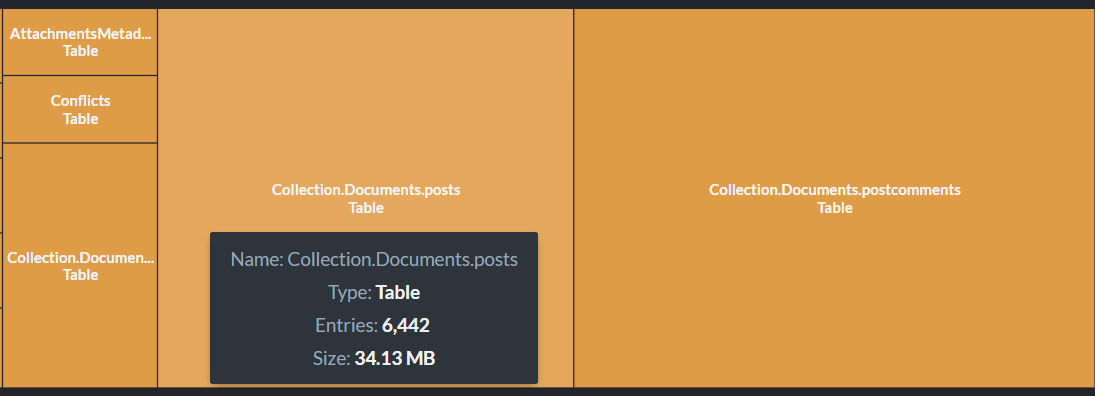

Because of this, with RavenDB 4.0 we moved to automatic conflict resolution. Unless configured otherwise, whenever RavenDB discovers a conflict, it will automatically resolve it (in an arbitrary but consistent manner across the cluster). Here is what this looks like:

Notice the flags? This document is the result of conflict resolution. In this case, we had both 1st and 2nd as conflicting versions, and we chose one of them.
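"Arbitrary but consistent" means every node must end up with the same winner, no matter in which order it received the conflicting versions. The post does not spell out the rule RavenDB uses, so the sketch below makes one up purely for illustration: take the most recently modified version and break ties by node name. The `ConflictedVersion` type, its fields, and `pickWinner` are all assumptions.

```typescript
// Hypothetical shape of a conflicting version; none of these field names
// come from RavenDB.
interface ConflictedVersion {
  node: string;         // node that accepted the write
  lastModified: number; // milliseconds since the epoch
  body: string;
}

// A total order over versions: later timestamp wins, node name breaks ties.
function isLater(candidate: ConflictedVersion, current: ConflictedVersion): boolean {
  if (candidate.lastModified !== current.lastModified) {
    return candidate.lastModified > current.lastModified;
  }
  return candidate.node > current.node;
}

// Because the choice depends only on the versions themselves, every node
// picks the same winner regardless of the order the versions arrived in.
function pickWinner(versions: ConflictedVersion[]): ConflictedVersion {
  let winner: ConflictedVersion | undefined = undefined;
  for (const version of versions) {
    if (winner === undefined || isLater(version, winner)) {
      winner = version;
    }
  }
  if (winner === undefined) {
    throw new Error("no versions to resolve");
  }
  return winner;
}

const firstVersion: ConflictedVersion = { node: "A", lastModified: 100, body: "1st" };
const secondVersion: ConflictedVersion = { node: "B", lastModified: 100, body: "2nd" };

// Two nodes that saw the versions in opposite orders still agree.
const seenOnNodeA = pickWinner([firstVersion, secondVersion]).body; // "2nd"
const seenOnNodeB = pickWinner([secondVersion, firstVersion]).body; // "2nd"
```

Any total order would do here. What matters is that the decision never depends on arrival order or on which node is doing the resolving.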

But didn’t I just finish telling you that RavenDB doesn’t shred your data? The key here is that in addition to the Resolved flag, we also have the HasRevisions flag. In this case, the database doesn’t have revisions defined, but even so, we have revisions for this document. Let us look at them, shall we?

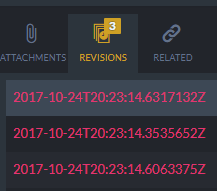

We have three of them:

- Created on Node A
- Created on Node B
- Resolved

Pay special attention to the flags. You can see that we have three revisions here: the two conflicted versions as well as the resolved document. We’ll be reporting these documents in the studio, so an admin can go and take a look and verify that nothing was missed. This also applies to conflict resolution that wasn’t done by arbitrarily choosing a winner.
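The bookkeeping described above can be sketched as follows: resolving a conflict never discards the losing versions, it stores them as revisions next to the resolved one. `Resolved` and `HasRevisions` are the flag names mentioned in this post. The `Conflicted` flag on the losing versions, the types, and `resolveKeepingRevisions` are assumptions made for the sake of the example.

```typescript
// Hypothetical in-memory model of a document and its revisions.
interface Revision {
  body: string;
  flags: string[];
}

interface StoredDocument {
  current: Revision;
  revisions: Revision[];
}

// Resolve a conflict while keeping every conflicting version around.
// `choose` is whatever strategy picks or builds the resolved body.
function resolveKeepingRevisions(
  conflicting: string[],
  choose: (bodies: string[]) => string
): StoredDocument {
  // Each conflicting version becomes a revision ("Conflicted" is an assumed flag name).
  const revisions: Revision[] = conflicting.map(
    (body: string): Revision => ({ body: body, flags: ["Conflicted"] })
  );
  const resolvedBody = choose(conflicting);
  // The resolved version is recorded as a revision as well.
  revisions.push({ body: resolvedBody, flags: ["Resolved"] });
  return {
    current: { body: resolvedBody, flags: ["Resolved", "HasRevisions"] },
    revisions: revisions,
  };
}

// Two conflicting versions in, three revisions out.
const storedDocument = resolveKeepingRevisions(
  ["1st", "2nd"],
  (bodies: string[]): string => bodies.reduce((x, y) => (x > y ? x : y), "")
);
const revisionCount = storedDocument.revisions.length; // 3
```

Since the losing versions are still there, an admin reviewing the document later can see exactly what each node wrote and fix things up if the automatic choice was wrong.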

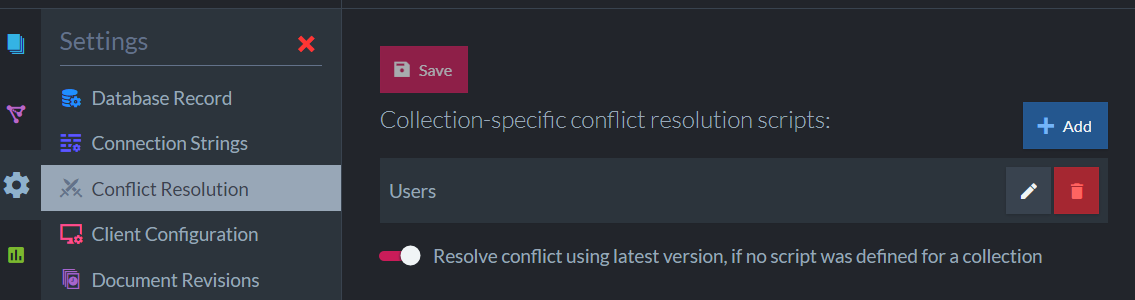

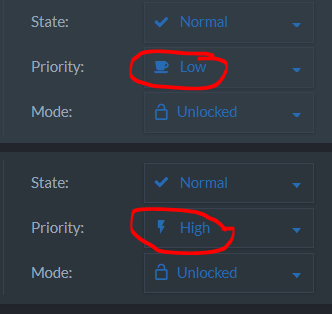

Remember, this is only the default configuration. You can set RavenDB to manual mode, in which case you’ll get an error on accessing a conflicted document and will need to resolve it manually, or you can define a script that would resolve the conflict. The script can be defined on a per-collection basis or globally for the database.

Here is an example of how you can handle conflicts using a script:

Regardless of the way you choose to resolve the conflict, you will still have all the conflicting versions available after the resolution, so if your script missed something, no data has been lost.
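To give a feel for what a resolution script might do beyond picking a winner, here is a rough sketch of merge logic for a hypothetical shopping cart collection. RavenDB's own resolution scripts are written in JavaScript and run on the server. The function below only shows the merge idea: the `Cart` and `CartItem` types, the `mergeCarts` name, and the "receive all conflicting versions, return one document" shape are assumptions, not the exact script contract.

```typescript
// Hypothetical document shape for a shopping cart collection.
interface CartItem {
  product: string;
  quantity: number;
}

interface Cart {
  items: CartItem[];
}

// Look up an item by product name in the merged list so far.
function findItem(items: CartItem[], product: string): CartItem | undefined {
  for (const item of items) {
    if (item.product === product) return item;
  }
  return undefined;
}

// Merge all conflicting carts: keep every product that appears in any
// version, and for products that appear more than once keep the largest quantity.
function mergeCarts(docs: Cart[]): Cart {
  const merged: CartItem[] = [];
  for (const doc of docs) {
    for (const item of doc.items) {
      const existing = findItem(merged, item.product);
      if (existing === undefined) {
        // Copy the item so the conflicting versions are left untouched.
        merged.push({ product: item.product, quantity: item.quantity });
      } else {
        existing.quantity = Math.max(existing.quantity, item.quantity);
      }
    }
  }
  return { items: merged };
}

const cartOnNodeA: Cart = { items: [{ product: "milk", quantity: 1 }] };
const cartOnNodeB: Cart = {
  items: [
    { product: "milk", quantity: 2 },
    { product: "bread", quantity: 1 },
  ],
};
const mergedCart = mergeCarts([cartOnNodeA, cartOnNodeB]);
// mergedCart.items: milk with quantity 2, bread with quantity 1
```

Whether "largest quantity wins" is the right rule depends entirely on your domain, which is exactly why this kind of logic belongs in a per-collection script rather than in a global default.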

The idea is that we want to enable you to deploy a distributed system without too much hassle, but also without taking undue risks or running in a configuration that is only suitable for demos. I think that this is the kind of feature that you would never really notice, until you realize that it just saved you a bunch of sleepless nights.

And as this is written at 00:12 AM, I think that I’ll listen to my own advice, hit the post button and head to bed.

Update: Here is how you configure this in the studio: