Database Building 101: Stable node ids

A few posts ago, I talked about the problem of having unstable ids, in particular, ids that can be reused. That leads to quite a lot of complexity, as anyone who ever had to deal with Lucene document ids knows.

So we are willing to pay something toward stable ids. The question is, what?

One way of doing that is to just store the physical id (unstable) and a virtual id (stable) in a B+Tree (actually, a pair of them, since you’ll need to refer to them back and forth). That means that for the most part, internally to the engine, we’ll use the physical id (with its nice property of having O(1) access time), but externally we’ll expose the stable virtual id (probably sequential numbering, since that is easiest).

Note that I still want to use the physical ids. I’ll discuss exactly why that is important in my next post; for now, let us just say that it is an important component of ensuring high performance for large datasets.

The problem with using a B+Tree is that the cost of finding the virtual <-> physical id mapping is O(logN), which for 10 million nodes and 100 million edges is roughly 23 and 27 respectively. Except that this isn’t the real cost function for a B+Tree.
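As a quick sanity check on the O(logN) figures, the base-2 logarithms can be computed directly. This is only a sketch of the arithmetic; the function name is made up for illustration and models a plain binary search, not a real B+Tree.

```python
import math


def binary_search_steps(item_count: int) -> float:
    """Approximate number of comparisons for a binary search over item_count sorted items."""
    return math.log2(item_count)


for label, count in (("nodes", 10_000_000), ("edges", 100_000_000)):
    print(f"{label}: log2({count:,}) = {binary_search_steps(count):.1f}")
```

That comes out to about 23.3 for the nodes and 26.6 for the edges.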

Assuming that we have 255 items per page, we would actually need to do 4 page lookups, and a total of 54 comparisons to find the right value. For the edges, we would need 5 page lookups and over 60 comparisons. This isn’t an issue on its own, but it is an issue when we are talking about having this kind of cost in the hot path of the application. And this is very likely going to be in the hot path.

Oh, there are ways around it. We can translate back and forth only at the edges of the database, so internally we’ll always use the physical address, and only translate it out when we are done. But that is hard to actually do properly, since you need the virtual address for a whole lot of stuff all over the place.

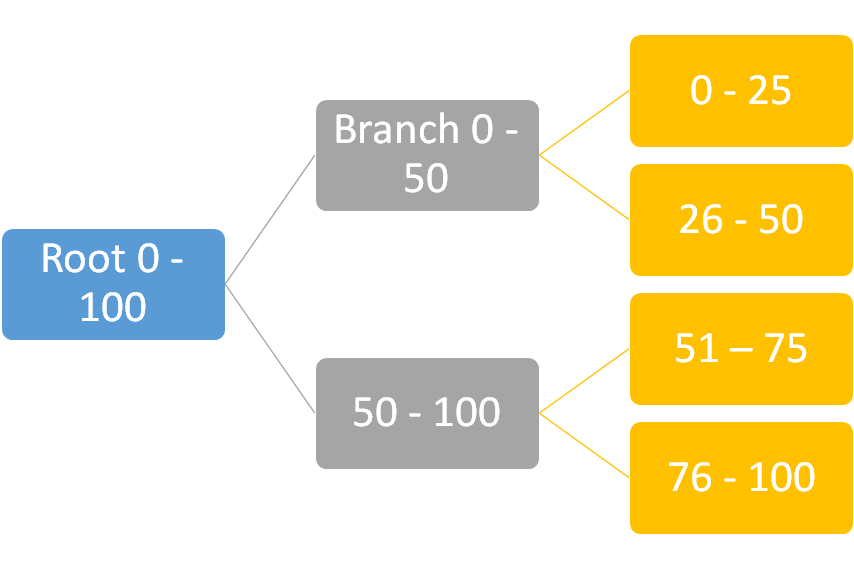

We can steal the idea of page translation tables from the processor. Something like this:

Effectively, we’ll lazily allocate segments of pages and pull them together into a hierarchy. So finding out the physical address of id 84 would involve looking at the root, finding the next page down with a mod operation, and so forth until we find the right value and check there. This has the advantage of being simple, O(1) and obvious. It is also pretty good in terms of space saving, since the virtual id can be “stored” without taking any space (it is the position of the physical id in the “array” we created).
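To make the hierarchy concrete, here is a minimal in-memory sketch of such a translation table. It is not how Voron does it; the class name, the fan-out of 1024 entries per page and the fixed depth of 3 levels are all assumptions picked for illustration, and a real engine would back the pages with its own storage rather than Python lists.

```python
from typing import List, Optional

FANOUT = 1024  # entries per page (assumed value, not from the post)
LEVELS = 3     # fixed depth, so the table can address FANOUT ** LEVELS ids


class TranslationTable:
    """Maps dense virtual ids to physical ids through a fixed-depth page hierarchy."""

    def __init__(self) -> None:
        self.root: List = [None] * FANOUT
        self.pages_allocated = 1  # the root page

    def _leaf(self, virtual_id: int, create: bool) -> Optional[List]:
        if not 0 <= virtual_id < FANOUT ** LEVELS:
            raise IndexError(f"virtual id {virtual_id} is out of range")
        page = self.root
        # Walk from the root toward the leaf, one div/mod per level.
        for level in range(LEVELS - 1, 0, -1):
            slot = (virtual_id // FANOUT ** level) % FANOUT
            child = page[slot]
            if child is None:
                if not create:
                    return None
                # Lazy allocation: a page only exists once an id lands in it.
                child = [None] * FANOUT
                page[slot] = child
                self.pages_allocated += 1
            page = child
        return page

    def set(self, virtual_id: int, physical_id: int) -> None:
        self._leaf(virtual_id, create=True)[virtual_id % FANOUT] = physical_id

    def get(self, virtual_id: int) -> Optional[int]:
        leaf = self._leaf(virtual_id, create=False)
        return None if leaf is None else leaf[virtual_id % FANOUT]


table = TranslationTable()
table.set(84, 7000)            # virtual id 84 lives at physical id 7000
print(table.get(84))           # the stored physical id
print(table.get(85))           # None, nothing stored for this id
print(table.pages_allocated)   # root + one inner page + one leaf page
```

The number of steps depends only on the fixed depth, never on how many ids are stored, which is where the O(1) comes from. The virtual id itself is never written anywhere; it is implied by the position of the slot.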

This has one drawback: there is no way to recover space. Because the index into this data structure is meaningful, we can’t just compact things. Once space is allocated, that is it. Now, to be fair, the cost in size here for all 100 million edges is about 0.75 GB, so not meaningful in the long run, but if we have a busy database that always writes and deletes, we have no way to recover the space.
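The 0.75 GB figure is easy to reproduce if we assume each slot holds an 8 byte physical id (that entry size is an assumption here, and the small overhead of the inner pages is ignored). A rough sketch:

```python
ENTRY_SIZE_BYTES = 8       # assumed size of one physical id
EDGE_COUNT = 100_000_000   # the edge count used in the post


def table_size_gib(entries: int, entry_size: int = ENTRY_SIZE_BYTES) -> float:
    """Size of the leaf level of the table in GiB, ignoring inner pages."""
    return entries * entry_size / 2**30


print(f"{table_size_gib(EDGE_COUNT):.2f} GiB")
```

Since slots are never reclaimed, the number to plug in for a busy database is the highest id ever handed out, not the number of live items.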

The “proper” answer, by the way, is to implement an external hash table. That has O(1) lookups and can grow and shrink as the amount of data changes. I’m not presenting it here mostly because it is something that we haven’t yet had the need to implement in Voron, so it isn’t something that I can just show and move on. Besides, it is fun to explore all the wrong ways of doing something.

More posts in "Database Building 101" series:

- (25 Aug 2016) Graph querying over large datasets

- (24 Aug 2016) Stable node ids

- (22 Aug 2016) High level graph operations

- (19 Aug 2016) Graphs aren’t toys

- (18 Aug 2016) The cost of graph storage

- (17 Aug 2016) The cost of graphing

- (16 Aug 2016) The storage layer

- (15 Aug 2016) Let us graph this for real

Comments

I have a stupid idea here: why not use a raw data section to store the virtual id to physical id mapping? There is no real need for a hash table here since we are creating sequential ids and they are dense, so we only need an "array" (of course it is not in memory but in a memory mapped file). So what we will end up with is 8 * numberOfNodes bytes per graph. Yes, this limits us to 250 million nodes because of the 2GB limitation, but we can have an array of those and navigate to the correct one using the div operation (this is only needed once more than 250 million nodes are used). Now if we have a node with virtual id #513, we go to position 513 * 8, read the actual position on the disk (the physical id) and we are good. This is an O(1) operation to get the mapping and O(1) to get the node (yes, this might be 2 seeks in a file, so it is more expensive than the physical id, but I think it is the best we can do).

Tal, How is this different from the array approach at the end of the post?

It is not :( I didn't understand your description of the solution, but from the comment I see you meant the same thing.

