I mentioned in my previous post that maintaining physical ids is important for performance reasons, but I skipped over exactly why. The short answer is that if I have physical ids, it is much easier to implement locality, and much easier to implement parallel locality.

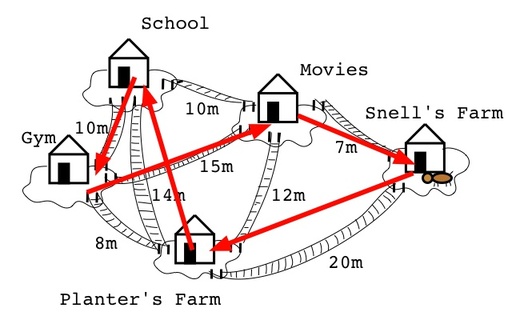

Let us imagine a database whose size is about 100 GB, running on a machine that has 6 GB of RAM. You need to run some sort of computation that traverses the graph, but doing so naively will likely cause us to thrash quite a lot, as we page memory in and out of the disk, only to jump far away in the graph, paging even more, and effectively killing all your performance.

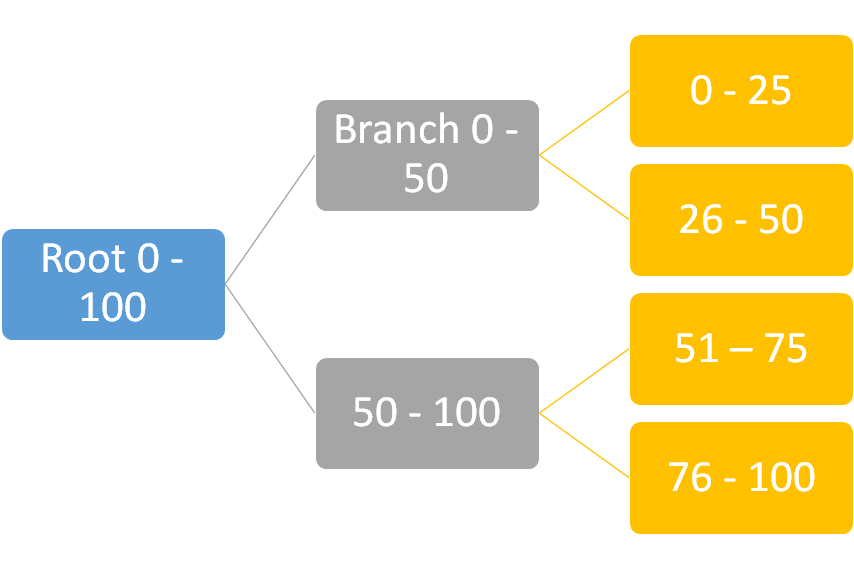

Instead, we can do something like this. Let us imagine that you have a machine with 4 cores, and the previously mentioned setup. You start 4 threads (each marked with a different color in the image) and start processing nodes.

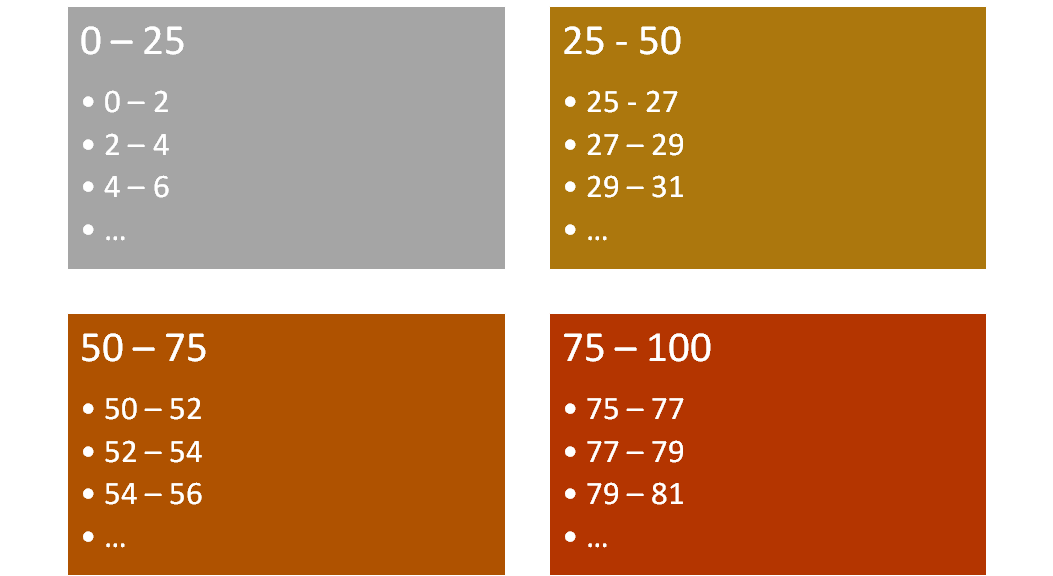

However, there is a trick here. Each thread has a queue, and only ids that fall within the area of responsibility of the thread will arrive there. But we aren’t done: inside a thread we define additional regions, and route requests to process each region into its own queue.
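To make the routing concrete, here is a minimal sketch. It assumes that a physical id is simply a byte offset into the database file, that each thread owns one contiguous quarter of the file, and that regions are fixed-size slices of that quarter. The names `route`, `enqueue`, `AREA_SIZE` and `REGION_SIZE`, and the 256 MB region size, are made up for illustration.

```python
from __future__ import annotations

from collections import defaultdict, deque

FILE_SIZE = 100 * 1024**3      # assumed database size: 100 GB
THREADS = 4                    # one worker thread per core
REGION_SIZE = 256 * 1024**2    # assumed region size: 256 MB

AREA_SIZE = FILE_SIZE // THREADS  # contiguous slice of the file owned by one thread


def route(physical_id: int) -> tuple[int, int]:
    """Map a physical id (assumed to be a byte offset) to (thread, region)."""
    thread = min(physical_id // AREA_SIZE, THREADS - 1)
    region = (physical_id - thread * AREA_SIZE) // REGION_SIZE
    return thread, region


# queues[thread][region] holds the ids waiting to be processed in that region
queues = [defaultdict(deque) for _ in range(THREADS)]


def enqueue(physical_id: int) -> None:
    """Put an id on the queue of the region (and thread) that owns it."""
    thread, region = route(physical_id)
    queues[thread][region].append(physical_id)
```

Because the id alone tells us where the data lives, routing is pure arithmetic and never has to touch the disk. A real implementation would also need thread-safe queues for work handed over from other threads, which this sketch leaves out.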

Finally, within each thread, we process one region at a time. So the idea is that while we are running over a region, we may produce work that will need to run on other regions (or even other threads), but we don’t care, we queue that work and continue emptying the work that exists on our own region. Only once we have completed all work in a particular region will we move to the next one. The whole task completes when, in all threads, there are no more regions with work to be done.
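Here is a rough, single-worker sketch of that loop, using a traversal over a tiny in-memory graph. The `graph` dictionary, the `traverse` function and the region size of 100 ids are all hypothetical, and handing work to other threads is left out. Picking the oldest waiting queue next is just one possible policy.

```python
from collections import defaultdict, deque

REGION_SIZE = 100  # hypothetical: ids 0-99 are region 0, 100-199 region 1, ...


def traverse(graph, start):
    """Visit every node reachable from `start`, draining one region at a time.

    Returns the visit order and the sequence of regions that were drained.
    """
    pending = defaultdict(deque)          # region -> ids waiting in that region
    pending[start // REGION_SIZE].append(start)
    visited, order, region_log = set(), [], []

    while pending:
        region = next(iter(pending))      # the queue that was created earliest
        work = pending[region]
        region_log.append(region)
        while work:                       # stay in this region until it is empty
            node = work.popleft()
            if node in visited:
                continue
            visited.add(node)
            order.append(node)
            for neighbour in graph.get(node, ()):
                if neighbour not in visited:
                    # lands on our own queue, or is deferred to another region
                    pending[neighbour // REGION_SIZE].append(neighbour)
        del pending[region]               # nothing left here, for now
    return order, region_log


graph = {1: [250, 2], 2: [3], 250: [4, 251], 251: [], 3: [], 4: []}
order, region_log = traverse(graph, 1)
print(order)       # [1, 2, 3, 250, 251, 4]
print(region_log)  # [0, 2, 0]
```

Node 250 is discovered first but processed only after region 0 is drained, and region 0 is visited a second time because work in region 2 pointed back into it.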

Note that the idea here is that each thread is working on one region at a time, and that region maps to a section of the database file that was memory mapped. So we keep that area of the page cache alive and well.

When we move between regions, we can hint to the memory manager that we are going to need the next region, etc. We can’t escape the need to process the same region multiple times, because processing in one region may lead us to processing in another, and then back, but assuming we run the regions using least recently accessed, we can take advantage of the stuff remaining in the page cache (hopefully) from the previous run and use that.
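One way to give that hint on a memory mapped file is `madvise`. The sketch below uses Python's `mmap.madvise` (available since Python 3.8, and only on platforms that provide `madvise`) with `MADV_WILLNEED`. The `prefetch_region` helper and the region size are assumptions for illustration, not something a particular database does.

```python
import mmap

REGION_SIZE = 16 * mmap.PAGESIZE  # hypothetical region size, page-aligned


def prefetch_region(mapping, region):
    """Ask the OS to start paging `region` in; return False if unsupported."""
    option = getattr(mmap, "MADV_WILLNEED", None)
    if option is None or not hasattr(mapping, "madvise"):
        return False  # e.g. Windows, or Python older than 3.8
    # The offset must be a multiple of the page size, which holds here
    # because REGION_SIZE is a multiple of mmap.PAGESIZE.
    mapping.madvise(option, region * REGION_SIZE, REGION_SIZE)
    return True
```

A worker would call `prefetch_region(mapping, next_region)` shortly before it finishes its current region. The call is only advice: the OS is free to ignore it, and reading the region works the same way whether or not the hint was honored.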

Which is why the physical location on disk is important.

Note that the actual query that we run is less important. Typical graph queries fall into one of two categories:

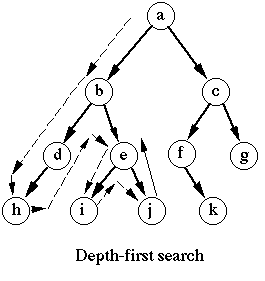

- Some sort of Breadth First Search or Depth First Search and walking through the graph.

- Finding a sub-graph in the larger graph that matches some criteria.

In both cases, we can process such queries using the aforementioned process, and the reduction in random work that the database has to do is big.
