RavenDB & FreeDB: An optimization opportunity

Update: The numbers in this post are not relevant. I include them here solely so you would have a frame of reference. We have done a lot of optimization work, and the numbers are orders of magnitude faster now. See the next post for details.

The purpose of this post is to set up a scenario, see how RavenDB does with it, and then optimize the parts that we don’t like. This post is scheduled to go out about two months after it was written, so anything that you see here is likely already fixed. In future posts, I’ll talk about the optimizations, what we did, and what the result was.

System note: I ran those tests on a year-old desktop with 8 GB of RAM, with all the database activity happening on a single 7200 RPM 300 GB disk. Please don’t get too hung up on the actual numbers. I include them for reference, but real hardware on a production system should kick this drastically higher. Another thing to remember is that this was an active system: while all of those operations were running, I was actively working and developing on the machine. The main point is to give us some sort of a metric about where we are, and to see whether we like this or not.

We keep looking at additional things that we can do with RavenDB, and having a large amount of information to test things with is awesome. Having non-fake data is even awesomer, because fake data is predictable data, while real data tends to be much more… interesting.

That is why I decided to load the entire freedb database into RavenDB and see what is happening.

What is freedb?

freedb is a database to look up CD information using the internet. This is done by a client (a freedb aware application) which calculates a (nearly) unique disc ID for a CD in your CD-Rom and then queries the database. As a result, the client displays the artist, CD-title, tracklist and some additional info.
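To make the "(nearly) unique disc ID" concrete, here is a minimal sketch of the classic CDDB-style disc ID calculation that freedb clients use. The function names and the sample offsets are made up for illustration, and this is not code from any particular client. Offsets are given in CD frames (75 frames per second).

```python
FRAMES_PER_SECOND = 75  # CD audio is addressed in frames, 75 per second


def digit_sum(n: int) -> int:
    """Sum of the decimal digits of n."""
    total = 0
    while n > 0:
        total += n % 10
        n //= 10
    return total


def freedb_disc_id(track_offsets, leadout_offset) -> str:
    """Compute the 8 hex digit disc ID.

    track_offsets: start of each track, in frames (hypothetical input shape).
    leadout_offset: start of the lead-out, in frames.
    """
    starts = [offset // FRAMES_PER_SECOND for offset in track_offsets]
    # First byte: digit sums of the track start times (in seconds), mod 255.
    checksum = sum(digit_sum(s) for s in starts) % 0xFF
    # Middle two bytes: playing time in seconds, from first track to lead-out.
    length = leadout_offset // FRAMES_PER_SECOND - starts[0]
    # Last byte: number of tracks.
    return f"{(checksum << 24) | (length << 8) | len(starts):08x}"


# Made-up three track disc, not a real CD.
example_id = freedb_disc_id([150, 15000, 30000], 45000)
```

Because the ID is built only from track start times, total length and track count, two different discs with the same layout collide, which is why the ID is only nearly unique.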

The nice thing about freedb is that you can download their data* and make use of it yourself.

* The not so nice thing is that the data is in free form text format. I wrote a parser for it if you really want to use it, which you can find here: https://github.com/ayende/XmcdParser
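To give a feel for what "free form text" means here, below is a rough sketch of reading a single xmcd entry. This is not the XmcdParser linked above, just an illustration in Python. It assumes the usual `KEY=value` layout with `#` comment lines, repeated keys that continue a long value, and `DTITLE` holding `Artist / Title`. The sample entry and the `parse_xmcd` name are invented for the example.

```python
from collections import defaultdict


def parse_xmcd(text: str) -> dict:
    """Parse one xmcd entry into a small dict (illustrative only)."""
    fields = defaultdict(str)
    for line in text.splitlines():
        if not line or line.startswith("#"):
            continue  # skip blank lines and comment lines
        key, sep, value = line.partition("=")
        if not sep:
            continue  # not a KEY=value line, ignore it
        fields[key] += value  # a repeated key continues the previous value

    # Assumption: when " / " is missing, artist and title are the same string.
    artist, sep, title = fields["DTITLE"].partition(" / ")
    if not sep:
        title = artist

    track_keys = sorted(
        (k for k in fields if k.startswith("TTITLE") and k[6:].isdigit()),
        key=lambda k: int(k[6:]),
    )
    return {
        "disc_id": fields["DISCID"],
        "artist": artist,
        "title": title,
        "year": fields["DYEAR"],
        "genre": fields["DGENRE"],
        "tracks": [fields[k] for k in track_keys],
    }


# Invented sample entry; note the DTITLE value split over two lines.
SAMPLE = (
    "# xmcd\n"
    "DISCID=08025603\n"
    "DTITLE=Some Artist / Some \n"
    "DTITLE=Album\n"
    "DYEAR=1999\n"
    "DGENRE=Rock\n"
    "TTITLE0=First\n"
    "TTITLE1=Second\n"
)
disc = parse_xmcd(SAMPLE)
```

Real entries are messier than this (inconsistent encodings, missing fields, free text in the extended data fields), which is exactly what makes the data interesting to test with.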

So I decided to push all of this data into RavenDB. The import process took a couple of hours (didn’t actually measure, so I am not sure exactly how much), and we ended up with a RavenDB database with: 3,133,903 documents. Memory usage during the import process was ~100 MB – 150 MB (no indexes were present).

The actual size in RavenDB is 3.59 GB with 3.69 GB reserved on the file system.

Starting the database from cold boot takes about 4 seconds.

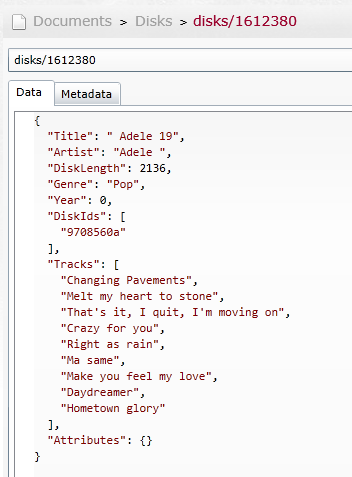

This is what the document looks like:

A full backup of the database took about 3 minutes, with all of the time dedicated to pure I/O.

Doing an export using smuggler (on the local machine, in 128-document batches) took about 18 minutes and resulted in an 803 MB file (not surprising, since smuggler output is a compressed file).

Note that we created this in a completely empty database, so the next step was to actually create an index and see how the database behaves. We created the default Raven/DocumentsByEntityName index, and indexing took 5,870 seconds, so just over an hour and a half. For what it’s worth, this resulted in an on-disk index with a size of 125 MB.

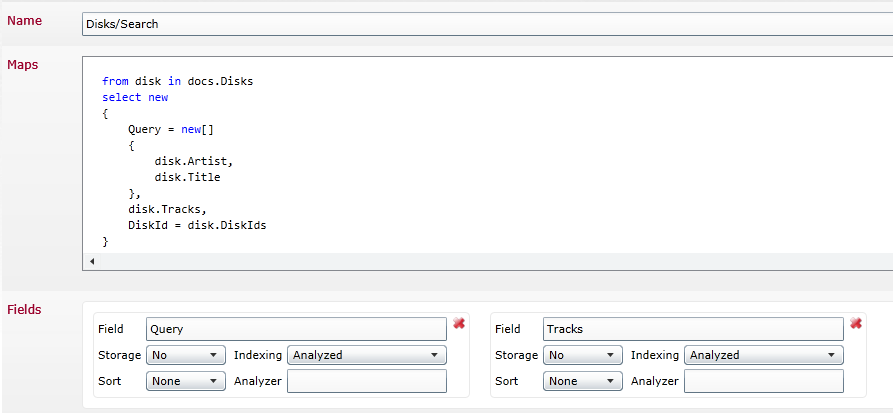

I then tried a much more complex index:

Just to give you some idea, this index gives you full text search support over just about every music CD that was ever made. To be frank, this index scares me, because it means that we have to have an index entry for every single track in the world.

After indexing was completed, we ended up with a 700 MB on-disk presence. Indexing took about 7 hours to complete. That is a lot, but remember what we are dealing with: we indexed 3.1 million documents, but we actually indexed 52,561,894 values (remember, we index each and every track). The interesting bit is that while it took a lot of CPU (full text indexing usually does), memory usage was relatively low: it peaked at about 300 MB and was usually around 180 MB.

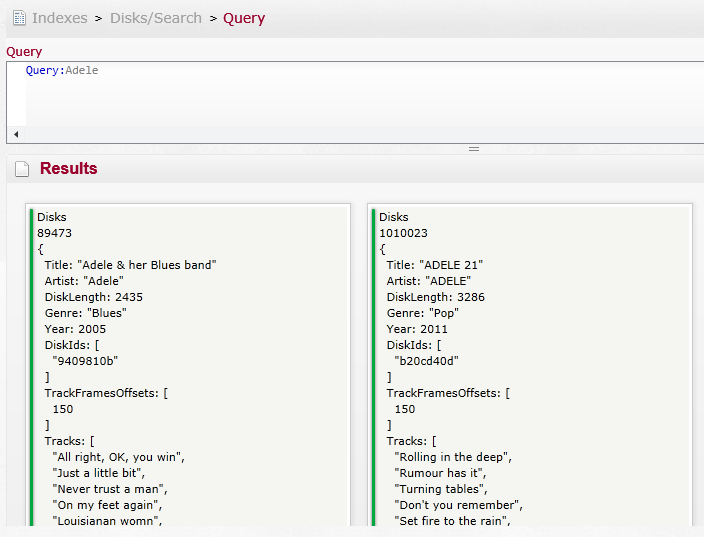

Searching over this index is not as fast as I would like, taking about a second to complete. Then again, the results are quite impressive:

Well, given that this is the equivalent of 52 million records (in this case, literally records), and we are performing full text search, quite nice.

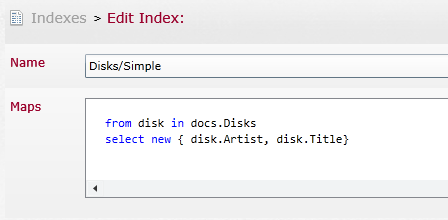

Let us see what happens when we do something a little simpler, shall we?

In this case, we are only indexing 3.1 million documents, and we don’t do full text searches. This index took 2.3 hours to run.

Queries on that index run at a much more satisfactory rate, starting out at 75 ms and dropping to 5 ms very quickly.
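For a frame of reference, the indexing times reported in this post can be turned into rough throughput numbers. The sketch below only does arithmetic on the figures quoted above. The labels "full text index" and "simpler index" are just names I am using here for the second and third indexes.

```python
DOCUMENTS = 3_133_903        # documents in the database
INDEXED_VALUES = 52_561_894  # values indexed by the full text index

# Indexing time in seconds for each index, as reported in the post.
indexing_seconds = {
    "Raven/DocumentsByEntityName": 5_870,
    "full text index": 7 * 3_600,  # about 7 hours
    "simpler index": 8_280,        # 2.3 hours
}

# Documents indexed per second, per index.
docs_per_second = {
    name: DOCUMENTS / seconds for name, seconds in indexing_seconds.items()
}

# The full text index handles many values per document (one per track and more).
values_per_document = INDEXED_VALUES / DOCUMENTS
values_per_second = INDEXED_VALUES / indexing_seconds["full text index"]

for name, rate in docs_per_second.items():
    print(f"{name}: {rate:.0f} documents/second")
print(f"full text index: {values_per_document:.1f} values/document, "
      f"{values_per_second:.0f} values/second")
```

That works out to roughly 534, 124 and 378 documents per second respectively. The full text index is the slowest per document, but at about 16.8 values per document it is actually pushing around 2,086 values per second.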

More posts in "RavenDB & FreeDB" series:

- (17 Apr 2012) An optimization story

- (16 Apr 2012) An optimization opportunity

Comments

COMPARE TO SQL DB? EG. POSTGRESQL?

Not sure if you are going down the route of comparisons.. but these tests would be interesting side-by-side on other document databases such as MongoDB or Redis. You obviously can't compare performance metrics alone when evaluating a product.. but it proves to be a useful metric.

Nathan I suspect you will find many databases faster than Raven in such benchmarks, imho Raven guys concentrate on ease of use and on features rather than speed. Ayende, I don't understand how you get 'index entry for every single track in the world' - from what I see you have a Lucene document for each CD with multivalued Query and Track fields, both of them analyzed - this means you have an index entry for each distinct word in track and disk titles. And there are not so many such words - song names don't use too rich vocabulary... Out of curiosity, could you post the list of top 100 most frequently used words in song titles? Such list should be possible to get from Lucene index..

Interesting stats. I work on an ASP.Net site that currently uses FAST as the search engine. We've had all sorts of issues with it and to put it simply, it's just not reliable enough. So we are considering using RavenDB as a replacement. All our needs seem to be covered and because we are using .Net it would be easy to use RavenDB. However, I have to say that I am a bit concerned with the time indexes take to create and was hoping you could clarify a few things for me.

Imagine this scenario: we currently have 800,000 documents needing to be in RavenDB. We create the indexes before adding the documents so that the indexes are populated as we go. Testing goes great and everything is performing well. Now the problem is that we missed one scenario and there is another index needed that we didn't know about. On production, because of the auto-tuning on RavenDB, when this scenario is encountered the new index will be automatically created, and because of the 800,000 documents it will take a while...

What is going to happen to my search query at this point?

The same goes if there is a code change producing queries with a different query profile but we don't realise that before going to production. I know it's our fault in both cases but I'm just curious of the consequences.

Thanks

Fabien, my understanding is that indexing proceeds in the background and you deal with a slow query in the meantime. Best you can hope for -- except maybe making use of partial indices, and indexing on query, and the like.

Let's assume that you've applied sharding. What would have happened then with the performance? Would indexing time be shortened to total time / instances?

Would that also mean the query of 1sec over 31 million records with 52 million fields can be made much faster when letting multiple instances do the actual work; maybe with a map / reduce on all instances?

Fabien, As I noted in the post, those were PRE optimization number. The numbers now are FAR better. You can see this in today's post. In production, you are likely to see RavenDB keeping up with your workload. If you are creating a new index, RavenDB will split the load between the new index and the current ones, making sure that they are all up to date.

Searches keep being fast, but you might not be able to search the entire data set until the new index caught up. In our testing, even for complex indexing, for your data set size, this should take roughly 5 - 10 minutes.

Fabien, indexing is a background operation. So on a production system, everything stays online and responds to requests while the indexing is done behind the scenes.

To all the others: Re-read the title of this post. There will be optimization, and today's indexing performance is much faster than at the time Oren wrote this post.

Chris, Actually, no. There are no such things as slow queries in RavenDB :-)

Micha, Sharding would split the load, yes. But the numbers you are seeing there are not real numbers. They are PRE optimization numbers. Our current numbers are much faster.

Thanks for the answer Ayende. I'm feeling much better looking at those numbers :)

Daniel, I understand indexing runs in the background and I was not really concerned about the performance of the queries not using the new index. I was just wondering what would happen to the queries needing them. Now I know it will just use a stale index, which is a problem if the process takes hours, not so much when it's a few minutes.
