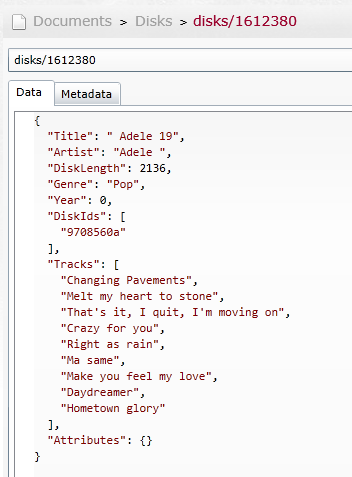

So, as I noted in a previous post, we loaded RavenDB with all of the music CDs in existence (or nearly so): a total of 3.1 million disks and 43 million tracks. We had some performance problems, but we got over them, and I am proud to give you the results:

| | Old | New |
| --- | --- | --- |
| Importing Data | A couple of hours | 42 minutes |
| Raven/DocumentsByEntityName | An hour and a half | 23.5 minutes |
| Simple index over disks | Two hours and twenty minutes | 24.1 minutes |
| Full text index over disks and tracks | More than seven hours | 37.5 minutes |

Tests were run on the same machine, and the database HD was a single 300 GB 7200 RPM drive.
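To put the table in terms of speedup factors, here is a quick sketch of the arithmetic. The conversions to minutes are my reading of the prose timings (90, 140, and a lower bound of 420 for "more than seven hours"); the import row is left out because "a couple of hours" is too vague to convert.

```python
# Old and new timings from the results table, in minutes.
# Assumption: "more than seven hours" is taken at its lower bound (420),
# so the full text speedup is a minimum, not an exact figure.
timings = {
    "Raven/DocumentsByEntityName": (90.0, 23.5),
    "Simple index over disks": (140.0, 24.1),
    "Full text index over disks and tracks": (420.0, 37.5),
}


def speedup(old_minutes, new_minutes):
    """Return how many times faster the new run is than the old one."""
    return old_minutes / new_minutes


speedups = {name: speedup(old, new) for name, (old, new) in timings.items()}

for name, factor in speedups.items():
    print(f"{name}: {factor:.1f}x faster")
```

That works out to roughly 3.8x for Raven/DocumentsByEntityName, 5.8x for the simple index, and at least 11.2x for the full text index.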

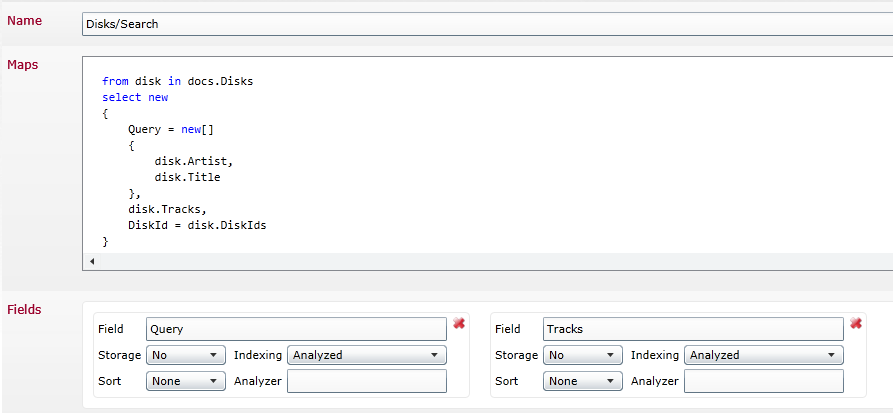

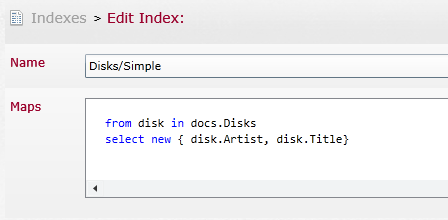

I then decided to take this one step further, and check what would happen when the indexes already existed before the import started. So we created three indexes: Raven/DocumentsByEntityName, one for simple querying over disks, and one for full text search over all disks and tracks.

With 3.1 million documents streaming in, and three indexes (at least one of them decidedly non-trivial), the import process took an hour and five minutes. Even more impressive, the indexing process was fast enough to keep up with the incoming data, so we only had about 1.5 seconds of latency between inserting a document and having it indexed. (Note that we usually see much lower indexing latencies, in the low tens of milliseconds, when we aren’t being bombarded with documents.)
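For a sense of scale, the numbers above translate into a sustained insert rate. This is a back-of-the-envelope sketch that assumes documents arrived at a uniform rate over the whole import, which the measurements here do not confirm; the "documents behind" figure is an estimate under that assumption.

```python
# Figures taken from the paragraph above.
documents = 3_100_000        # documents streamed in
import_seconds = 65 * 60     # one hour and five minutes
indexing_lag_seconds = 1.5   # observed insert-to-indexed latency

# Assumption: a uniform arrival rate across the whole import.
docs_per_second = documents / import_seconds

# Rough number of documents written but not yet indexed at any moment.
docs_behind = docs_per_second * indexing_lag_seconds

print(f"Average insert rate: {docs_per_second:.0f} documents/second")
print(f"Approximate indexing backlog: {docs_behind:.0f} documents")
```

So that is on the order of 800 documents per second, with the indexes trailing by something like 1,200 documents at any given moment.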

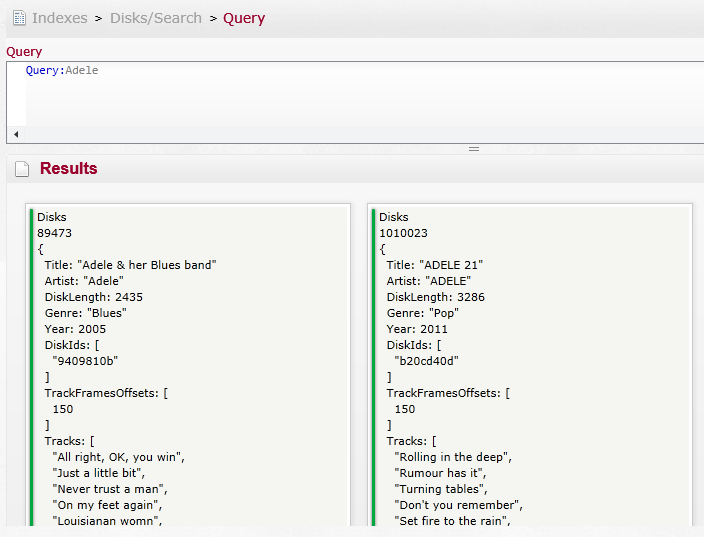

Next up, and something that we did not optimize, was figuring out how costly it would be to query this. I decided to go for the big guns, and tested querying the full text search index.

Testing “Query:Adele” returned a result (from a cold booted database) in less than 0.8 seconds. But remember, this is after a cold boot. So let us see what happens when we issue a few other queries:

- Query:Pearl – 0.65 seconds

- Query:Abba – 0.67 seconds

- Query:Queen – 0.56 seconds

- Query:Smith – 0.55 seconds

- Query:James – 0.77 seconds

Note that I am querying radically different values, so I force different parts of the index to load.

Querying for “Query:Adele” again? 32 milliseconds.

Let us see a few more:

- Query:Adams – 0.55 seconds

- Query:Abrahams – 0.6 seconds

- Query:Queen – 85 milliseconds

- Query:James – 0.1 seconds
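Three of the terms above were queried twice, so we can compare the first and repeated timings directly. A small sketch of that comparison follows; note that the first Adele query is only known to be "less than 0.8 seconds", so its ratio is an upper bound.

```python
# (first query seconds, repeated query seconds) for the terms queried twice.
# Assumption: Adele's first query is taken as 0.8 s, its stated upper bound.
repeated_queries = {
    "Adele": (0.8, 0.032),
    "Queen": (0.56, 0.085),
    "James": (0.77, 0.1),
}

# How many times faster the repeated query was than the first one.
ratios = {
    term: first / repeat
    for term, (first, repeat) in repeated_queries.items()
}

for term, ratio in ratios.items():
    print(f"Query:{term} was {ratio:.1f}x faster the second time")
```

The terms that were queried for the first time in the second batch (Adams, Abrahams) still took 0.55 to 0.6 seconds, in line with the first batch.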

Now here are a few things that you might want to consider:

- We have done no warm up of the database; we just started it up from a cold boot and started querying.

- I actually think that we can do better than this, and this is likely to be the next place we are going to focus our optimization efforts.

- We are doing a query here over 3.1 million documents, using full text search.

- There is no caching involved in the speed increases.

More goodies are coming in.