I have run into this post by John Rush, which I found really interesting, mostly because I so vehemently disagree with it. Here are the points that I want to address in John’s thesis:

1. Open Source movement gonna end because AI can rewrite any oss repo into a new code and commercially redistribute it as their own.

2. Companies gonna use AI to generate their none core software as a marketing effort (cloudflare rebuilt nextjs in a week).

Can AI rewrite an OSS repo into new code? Let’s dig into this a little bit.

AI models today do a great job of translating code from one language to another. We have good testimonies that this is actually a pretty useful scenario, such as the recent translation of the Ladybird JS engine to Rust.

At RavenDB, we have been using AI translation to maintain our client APIs, which are written for multiple languages & platforms. It has been a great help with that.

But that is fundamentally the same as the Java to C# converter that shipped with Visual Studio 2005. That is 2005, not 2025, mind you. The link above is to the Wayback Machine because the original link itself is lost to history.

AI models do a much better job here, but they aren’t bringing something new to the table in this context.

Claude C Compiler

Now, let’s talk about using the model to replicate a project from scratch. And here we have a bunch of examples. There is the Claude C Compiler, an impressive feat of engineering that can compile the Linux kernel.

Except… it is a proof of concept that you wouldn’t want to use. It produces code that is significantly slower than GCC, and its output is not something that you can trust. And it is not in a shape to be a long-term project that you would maintain over the years.

For a young project, being slower than the best-of-breed alternative is not a bad thing. You’ve shown that your project works; now you can work on optimization.

For an AI-generated project, on the other hand, you are in a pretty bad place. The key issue here is long-term maintainability. There is a great breakdown of the Claude C Compiler from the creator of Clang that I highly recommend reading.

The amount of work it would require to turn it into actual production-level code is enormous. I think that it would be fair to say that the overall cost of building a production-level compiler with AI would be in the same ballpark as writing one directly.

Many of the issues in the Claude C Compiler are not bugs that you can “just fix”. They are deep architectural issues that require a very different approach.

Leaving that aside, let’s talk about the actual use case. The Linux kernel’s relationship with its compiler is not a trivial one. Compiler bugs and behaviors are routine issues that developers run into and need to work on.

See the occasional “discussion” on undefined behavior optimizations by the compiler for surprisingly straightforward code.

Cloudflare’s vinext

So Cloudflare rebuilt Next.js in a week using AI. That is pretty impressive, but that is also a lie. They might have done some work in a week, but the result isn’t something that is ready. Cloudflare itself is calling this highly experimental (very rightly so).

They also have several customers using it in production already. That is awesome news, except that within literal days of this announcement, multiple critical vulnerabilities were found in this project.

A new project having vulnerabilities is not unexpected. But some of those vulnerabilities were literal copies of (fixed) vulnerabilities in the original Next.js project.

The issue here is the pace of change and the impact. If it takes an agent a week to build a project and then you throw that into production, how much real testing has been done on it? How much is that code worth?

John stated that this vinext project for Cloudflare was a marketing effort. I have to note that they had to pay bug bounties as a result and exposed their customers to higher levels of risk. I don’t consider that a plus. There is also now the ongoing maintenance cost to deal with, of course.

The key here is that a line of code is not something that you look at in isolation. You need to look at it in its totality: its history, usage, provenance, etc. A line of code in a project that has been battle-tested in production is far more valuable than a freshly generated one.

I’ll refer again to the awesome “Things You Should Never Do” from Spolsky. That is over 25 years old and is still excellent advice, even in the age of AI-generated code.

NanoClaw’s approach

You’ve probably heard about Clawdbot ⇒ Moltbot ⇒ OpenClaw, a way to plug AI directly into everything and give your CISO a heart attack. That is an interesting story, but from a technical perspective, I want to focus on what it does.

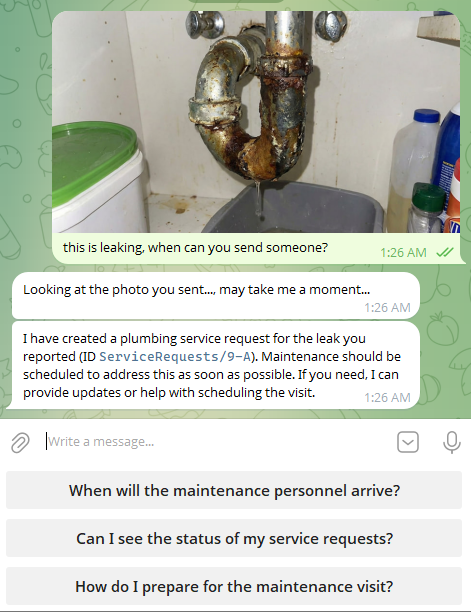

A key part of what made OpenClaw successful was the number of integrations it has. You can connect it to Telegram, WhatsApp, Discord, and more. You can plug it into your Gmail, Notes, GitHub, etc.

It has about half a million lines of code (TypeScript), which were mostly generated by AI as well.

To contrast that, we have NanoClaw with ~500 lines of code. Not a typo, it is roughly a thousand times smaller than OpenClaw. The key difference between these two projects is that NanoClaw rebuilds itself on the fly.

If you want to integrate with Telegram, for example, NanoClaw will use the AI model to add the Telegram integration. In this case, it will use pre-existing code and use the model as a weird plugin system. But it also has the ability to generate new code for integrations it doesn’t already have. See here for more details.

On the one hand, that is a pretty neat way to reduce the overall code in the project. On the other hand, it means that each user of NanoClaw will have their own bespoke system.

Contrasting the OpenClaw and NanoClaw approaches, we have an interesting problem. Both of those systems are primarily built with AI, but NanoClaw is likely going to show a lot more variance in what is actually running on your system.

For example, if I want to use Signal as a communication channel, OpenClaw has that built in. You can integrate Signal into NanoClaw as well, but it will generate code (using the model) for this integration separately for each user who needs it.

A bespoke solution for each user may sound like a nice idea, but it just means that each NanoClaw is its own special snowflake. Just thinking about supporting something like that across many users gives me the shivers.

For example, OpenClaw had an agent takeover vulnerability (reported literally yesterday) that would allow a simple website visit to completely own the agent (with all that this implies). OpenClaw’s design means that it can be fixed in a single location.

NanoClaw’s design, on the other hand, means that for each user, there is a slightly different implementation, which may or may not be vulnerable. And there is no really good way to actually fix this.

Summary

The idea that you can just throw AI at a problem and have it generate code that you can then deploy to production is an attractive one. It is also by no means a new one.

CASE tools used to be the promised way to go about it. The book Application Development Without Programmers was published in 1982, for example. The world has changed since then, but we are still trying to get rid of programmers.

Generating code quickly is easy these days, but that just shifts the burden. The cost of verifying code has become a lot more pronounced. Note that I didn’t say expensive. It used to be the case that writing the code and verifying it were almost the same task. You wrote the code and thus had a human verifying that it made sense. Then there are the other review steps in a proper software lifecycle.

When we can drop 15,000 lines of code in a few minutes of prompting, the entire story changes. The value of a line of code on its own approaches zero. The value of a reviewed line of code, on the other hand, hasn’t changed.

A line of code from a battle-tested, mature project is infinitely more valuable than a newly generated one, regardless of how quickly it was produced. The cost of generating code approaches zero, sure.

But newly generated code isn’t useful. In order for me to actually make use of that, I need to verify it and ensure that I can trust it. More importantly, I need to know that I can build on top of it.

I don’t see a lot of people paying attention to the long-term maintainability of projects. But that is key. Otherwise, you are signing up, upfront, to own a legacy system that no one understands or can properly operate.

Production-grade software isn’t a prompt away, I’m afraid to say. There are still all the other hurdles that you have to clear to mature a project so that it can go all the way to production and evolve over time without exploding costs & complexities.