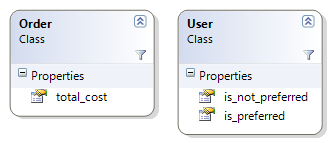

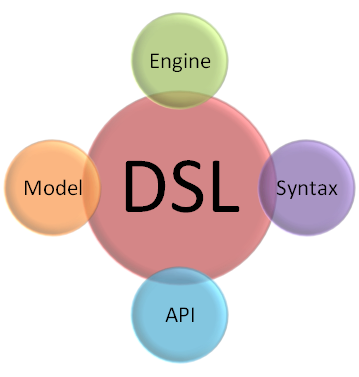

Roughly speaking, a DSL is composed of the following parts:

It should come as no surprise that when we test a DSL, we test each of those components individually. When the time comes to test a DSL, I usually write the following tests:

- CanCompile - This is the most trivial test; it asserts that I can take a known script and compile it.

- Syntax tests - Didn’t we just test that when we wrote the CanCompile() test? Not quite. When I talk about testing the syntax, I am not talking about merely verifying that a script compiles successfully. I am talking about verifying that the syntax we have created is compiled into the correct output. The CanCompile() test is only the first step in that direction. Here is an example of such a test.

- DSL API tests - What exactly is the DSL API? In general, I think of the DSL API as any API that is directly exposed to the DSL. The methods and properties of the anonymous base class are obvious candidates, of course. Anything else that was purposefully built to be used by the DSL also falls into this category. I test those using standard unit tests, without involving the DSL at all: testing in isolation again.

- Engine tests - A DSL engine is responsible for managing the interactions between the application and the DSL scripts. It is the gateway to the DSL in our application, allowing us to hand off policy decisions and frequently changing rules to an external entity. Since the engine is usually just a consumer of the DSL instances, we have two choices when the time comes to create test cases for it. We can write a cross-cutting test, which involves the actual DSL, or we can test only the interaction of the engine with the instances it is given. Since we generally want to test the engine's behavior in invalid scenarios (a DSL script that cannot be compiled, for example), I tend to choose the first approach.
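To make the DSL API tests more concrete, here is a minimal sketch of testing a base class in isolation. QuoteRuleBase, SystemModule, EmptyRule and the Specification/Requires method names are hypothetical, loosely modeled on the QuoteGeneratorRule used later in this section; the point is only that the methods a script would call are exercised directly from C#, with no script compiled at all.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical module model: a name plus the names of the modules it requires.
public class SystemModule
{
    public SystemModule(string name) { Name = name; }
    public string Name { get; }
    public List<string> Requirements { get; } = new List<string>();
}

// Hypothetical sketch of an anonymous base class for quote-generator scripts.
public abstract class QuoteRuleBase
{
    private SystemModule currentModule;

    public List<SystemModule> Modules { get; } = new List<SystemModule>();

    // Would back "specification @name:": registers a module, then runs its block.
    public void Specification(string moduleName, Action block)
    {
        currentModule = new SystemModule(moduleName);
        Modules.Add(currentModule);
        try { block(); }
        finally { currentModule = null; }
    }

    // Would back "requires @name": only valid inside a specification block.
    public void Requires(string moduleName)
    {
        if (currentModule == null)
            throw new InvalidOperationException("'requires' can only be used inside a specification");
        currentModule.Requirements.Add(moduleName);
    }

    // The body of each script would be compiled into an override of this method.
    public abstract void Evaluate();
}

// A do-nothing subclass lets a unit test call the API without any script.
public class EmptyRule : QuoteRuleBase
{
    public override void Evaluate() { }
}
```

In an NUnit fixture, the same calls would sit inside a [Test] method, with Assert.AreEqual checking Modules, and with a second test asserting that Requires outside a specification throws.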

Testing the scripts

We have talked about how we can create tests for our DSL implementation, but we still haven’t talked about how we can test the DSL scripts themselves. Considering the typical scenarios for using a DSL (providing a policy, defining rules, making decisions, driving the application, etc.), I don’t think anyone can argue against the need for tests that verify the scripts actually do what we think they do.

In fact, because we usually use a DSL to define high-level application behavior, we absolutely need to know what it is doing and to protect ourselves from accidental changes.

One of the more important things to remember when dealing with a Boo-based DSL is that its output is just IL. This means that the output is subject to the same advantages and disadvantages as the output of any other IL-based language. In this specific case, it means that we can simply reference the resulting assembly and write a test case directly against it.
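Because a compiled script is an ordinary .NET type, a test can reflect over the assembly the Boo compiler produced. The following is a minimal sketch of a hypothetical helper, not part of any DSL library: it finds every concrete subclass of a given base type in an assembly and instantiates it. RuleBase and SimpleQuoteScript are stand-ins defined here so the example runs on its own; in a real test you would pass in the compiled script assembly instead (for example one obtained with Assembly.LoadFrom, using whatever path your build produces). It also assumes the script types have a public parameterless constructor, which is not true of every DSL base class.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;
using System.Reflection;

// Stand-in for the DSL's base class (hypothetical).
public abstract class RuleBase
{
    public abstract string Describe();
}

// Stand-in for a type the Boo compiler would emit for a script (hypothetical).
public class SimpleQuoteScript : RuleBase
{
    public override string Describe() => "simple";
}

public static class CompiledScriptLoader
{
    // Finds every concrete, non-generic type in the assembly that derives from T
    // and creates an instance of it. Assumes a public parameterless constructor.
    public static List<T> InstantiateAll<T>(Assembly assembly) where T : class
    {
        return assembly.GetTypes()
            .Where(t => typeof(T).IsAssignableFrom(t)
                        && !t.IsAbstract
                        && !t.IsInterface
                        && !t.ContainsGenericParameters)
            .Select(t => (T)Activator.CreateInstance(t))
            .ToList();
    }
}
```

Each returned instance can then be exercised and asserted on like any other object, which is exactly what "writing a test case directly against the assembly" amounts to.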

In most cases, however, we can safely use the anonymous base class to test the behavior of the scripts we build. This gives us a nearly zero-cost way to build our tests. Let us see how we can test this piece of code:

```boo
specification @vacations:
    requires @scheduling_work
    requires @external_connections

specification @scheduling_work:
    return # doesn't require anything
```

We can test this script with the following code:

```csharp
[Test]
public void WhenUsingVacations_SchedulingWork_And_ExternalConnections_AreRequired()
{
    // dslFactory is a field of the test fixture; Create<T>() compiles the script
    // and creates an instance of it, handing the extra argument to its constructor.
    QuoteGeneratorRule rule = dslFactory.Create<QuoteGeneratorRule>(
        @"Quotes/simple.boo",
        new RequirementsInformation(200, "vacations"));

    // Run the script body, which records the modules it specifies.
    rule.Evaluate();

    SystemModule module = rule.Modules[0];
    Assert.AreEqual("vacations", module.Name);
    Assert.AreEqual(2, module.Requirements.Count);
    Assert.AreEqual("scheduling_work", module.Requirements[0]);
    Assert.AreEqual("external_connections", module.Requirements[1]);
}
```

Alternatively, we can use a test DSL to do the same:

```boo
script "quotes/simple.boo"

with @vacations:
    should_require @scheduling_work
    should_require @external_connections

with @scheduling_work:
    should_have_no_requirements
```

Note that creating a test DSL is only worthwhile if you expect to have a large number of scripts written in the tested DSL.
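For a sense of what might sit behind a test DSL like this, here is a minimal sketch of a runtime its keywords could compile down to. The mapping of `with`, `should_require` and `should_have_no_requirements` onto the hypothetical QuoteRuleSpec methods is an assumption, as is representing the evaluated rule as a dictionary from module name to required module names. A real test DSL would also have to compile and evaluate the script named by `script`; that part is left out here.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical runtime for the test DSL. Each keyword is assumed to map to one method:
//   with @name:                  -> With("name", () => { ... })
//   should_require @name         -> ShouldRequire("name")
//   should_have_no_requirements  -> ShouldHaveNoRequirements()
public class QuoteRuleSpec
{
    // Module name -> names of the modules it requires, taken from the evaluated rule.
    private readonly IDictionary<string, List<string>> modules;
    private string currentModule;

    public QuoteRuleSpec(IDictionary<string, List<string>> modules)
    {
        this.modules = modules;
    }

    public void With(string moduleName, Action expectations)
    {
        if (!modules.ContainsKey(moduleName))
            throw new InvalidOperationException($"Expected a module named '{moduleName}', but the script did not define one");
        currentModule = moduleName;
        try { expectations(); }
        finally { currentModule = null; }
    }

    public void ShouldRequire(string requirement)
    {
        if (!CurrentRequirements().Contains(requirement))
            throw new InvalidOperationException($"'{currentModule}' should require '{requirement}'");
    }

    public void ShouldHaveNoRequirements()
    {
        List<string> requirements = CurrentRequirements();
        if (requirements.Count != 0)
            throw new InvalidOperationException($"'{currentModule}' should have no requirements, but has {requirements.Count}");
    }

    // Expectations only make sense inside a "with" block.
    private List<string> CurrentRequirements()
    {
        if (currentModule == null)
            throw new InvalidOperationException("Expectations must appear inside a 'with' block");
        return modules[currentModule];
    }
}
```

Each failed expectation throws, so whatever runs the test DSL scripts can report it like an ordinary failed assertion. The one-method-per-keyword shape is also what keeps such a test DSL cheap to extend once there are enough scripts to justify it.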