Testing Domain Specific Languages

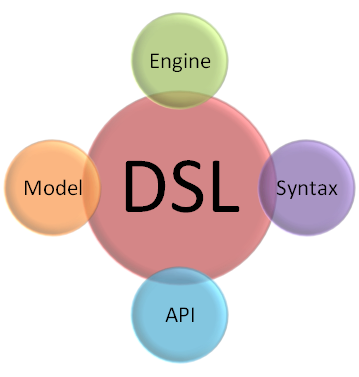

Roughly speaking, a DSL is composed of the following parts:

It should come as no surprise that when we test it, we test each of those components individually. When the time comes to test a DSL, I have the following tests:

- CanCompile - This is the most trivial test: it asserts that I can take a known script and compile it.

- Syntax tests - Didn’t we just test that when we wrote the CanCompile() test? When I talk about testing the syntax, I am not talking about just verifying that a script compiles successfully. I am talking about whether the syntax that we have created is compiled into the correct output. The CanCompile() test is only the first step in that direction. Here is an example of such a test.

- DSL API tests - What exactly is the DSL API? In general, I think about the DSL API as any API that is directly exposed to the DSL. The methods and properties of the anonymous base class are obvious candidates, of course. Anything else that was purposefully built to be used by the DSL also falls into this category. I test those using standard unit tests, without involving the DSL at all. Testing in isolation again.

- Engine tests - A DSL engine is responsible for managing the interactions between the application and the DSL scripts. It is the gateway to the DSL in our application, allowing us to hand off policy decisions and oft-changed rules to an external entity. Since the engine is usually just a consumer of the DSL instances, we have several choices when the time comes to create test cases for the engine. We can perform a cross-cutting test, which involves the actual DSL, or test just the interaction of the engine with the provided instances. Since we generally want to test the engine behavior in invalid scenarios (a DSL script which cannot be compiled, for example), I tend to choose the first approach.
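
To make the CanCompile and engine test categories concrete, here is a minimal sketch in Python rather than the post's C# and Boo. `DslEngine`, `DslCompilationError`, and the script contents are hypothetical names invented for illustration; Python's built-in `compile` stands in for the Boo compiler. It shows a cross-cutting test that drives the engine with real script text, including an invalid script.

```python
class DslCompilationError(Exception):
    """Engine-level error for a script that cannot be compiled (hypothetical)."""


class DslEngine:
    """Toy engine: compiles script text and returns a callable rule."""

    def create(self, name, source):
        try:
            code = compile(source, name, "exec")
        except SyntaxError as exc:
            # Translate the compiler failure into an engine-level error.
            raise DslCompilationError(f"{name}: {exc.msg}") from exc

        def rule(context):
            scope = dict(context)  # the script reads inputs and writes results here
            exec(code, {}, scope)
            return scope

        return rule


def test_can_compile():
    # The most trivial test: a known script compiles and runs.
    rule = DslEngine().create("discount.dsl", "discount = 10 if total > 100 else 0")
    assert rule({"total": 200})["discount"] == 10


def test_engine_reports_invalid_script():
    # Cross-cutting test: the engine is exercised with a script that cannot compile.
    try:
        DslEngine().create("broken.dsl", "discount = = 10")
    except DslCompilationError as exc:
        assert "broken.dsl" in str(exc)
    else:
        raise AssertionError("expected DslCompilationError")


test_can_compile()
test_engine_reports_invalid_script()
```

Going through the real compiler is what makes the invalid-script case testable at all; a test that only hands the engine pre-built instances would never hit that path.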

Testing the scripts

We have talked about how we can create tests for our DSL implementation, but we still haven’t talked about how we can actually test the DSL scripts themselves. Considering the typical scenarios for using a DSL (providing a policy, defining rules, making decisions, driving the application, etc), I don’t think anyone can argue against the need to have tests in place to verify that we actually do what we think we do.

In fact, because we usually use a DSL as a way to define high-level application behavior, there is an absolute need to be aware of what it is doing, and to protect ourselves from accidental changes.

One of the more important things to remember when dealing with Boo-based DSLs is that their output is just IL. This means that the output is subject to all the standard advantages and disadvantages of every other IL-based language. In this specific case, it means that we can just reference the resulting assembly and write a test case directly against it.

In most cases, however, we can safely utilize the anonymous base class as a way to test the behavior of the scripts that we build. This allows us to have a nearly no-cost approach to building our tests. Let us see how we can test this piece of code:

    specification @vacations:
        requires @scheduling_work
        requires @external_connections

    specification @scheduling_work:
        return # doesn't require anything

And we can test this with this code:

    [Test]
    public void WhenUsingVacations_SchedulingWork_And_ExternalConnections_AreRequired()
    {
        QuoteGeneratorRule rule = dslFactory.Create<QuoteGeneratorRule>(
            @"Quotes/simple.boo",
            new RequirementsInformation(200, "vacations"));
        rule.Evaluate();

        SystemModule module = rule.Modules[0];
        Assert.AreEqual("vacations", module.Name);
        Assert.AreEqual(2, module.Requirements.Count);
        Assert.AreEqual("scheduling_work", module.Requirements[0]);
        Assert.AreEqual("external_connections", module.Requirements[1]);
    }

Or we can utilize a test DSL to do the same:

    script "quotes/simple.boo"

    with @vacations:
        should_require @scheduling_work
        should_require @external_connections

    with @scheduling_work:
        should_have_no_requirements

Note that creating a test DSL is only worth it if you expect to have a large number of DSL scripts of the tested language that you want to test.
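
As a rough illustration of what a test DSL runner has to do, the sketch below uses Python and plain text parsing as a stand-in for the Boo-based implementation. `parse_specifications` and `run_test_script` are hypothetical helpers, not part of any real library; they understand only the handful of keywords shown in the scripts above.

```python
import re

SCRIPT = """
specification @vacations:
    requires @scheduling_work
    requires @external_connections

specification @scheduling_work:
    return # doesn't require anything
"""

TEST_SCRIPT = """
script "quotes/simple.boo"

with @vacations:
    should_require @scheduling_work
    should_require @external_connections

with @scheduling_work:
    should_have_no_requirements
"""


def parse_specifications(text):
    """Parse 'specification @name:' blocks into {name: [required names]}."""
    specs, current = {}, None
    for raw in text.splitlines():
        line = raw.split("#", 1)[0].strip()  # drop comments and indentation
        header = re.fullmatch(r"specification @(\w+):", line)
        if header:
            current = specs.setdefault(header.group(1), [])
        elif line.startswith("requires @") and current is not None:
            current.append(line[len("requires @"):])
    return specs


def run_test_script(test_text, specs):
    """Check 'with @name:' expectations against parsed specs; return failures."""
    failures, name = [], None
    for raw in test_text.splitlines():
        line = raw.strip()
        header = re.fullmatch(r"with @(\w+):", line)
        if header:
            name = header.group(1)
        elif line.startswith("should_require @"):
            wanted = line[len("should_require @"):]
            if wanted not in specs.get(name, []):
                failures.append(f"{name} should require {wanted}")
        elif line == "should_have_no_requirements" and specs.get(name):
            failures.append(f"{name} should have no requirements")
    return failures


failures = run_test_script(TEST_SCRIPT, parse_specifications(SCRIPT))
print(failures or "all expectations met")
```

The test script stays a separate artifact: if someone removes a `requires` line from the main script, the runner reports a failure instead of silently following the change.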

Comments

I've always used a different approach that I think works quite nicely.

I usually split my DSL tests in two categories:

Syntax -> AST

and

AST -> Output ( IL / Eval / Whatever )

Since ASTs are trees, it is easy to transform them into a string representation by flattening the tree and outputting each node type in a special way (like a decompiler).

I used this a lot when creating the NPath parser for NPersist.

E.g. if the source was:

    Select Name
    From Customer
    Where Address.StreetName == "foo"

Then I knew that if the parser (and tree flattener) worked correctly, I would get an output like:

    "select Customer.Name from Customer Where (Customer.Address.StreetName == "foo")"

So I could very easily verify that I got the expected AST from the parsed source by simply comparing an expected string with the decompiled string (instead of coding huge AST graphs and then comparing those).
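
A minimal Python sketch of the flattening idea follows. The node classes (`Path`, `Literal`, `Equals`, `Select`) and the `flatten` function are hypothetical and are not NPath's actual types; the parser itself is omitted, so `parsed` stands in for whatever it would return.

```python
from dataclasses import dataclass


@dataclass
class Path:
    parts: tuple  # e.g. ("Customer", "Name")


@dataclass
class Literal:
    value: str


@dataclass
class Equals:
    left: object
    right: object


@dataclass
class Select:
    columns: list
    source: str
    where: object


def flatten(node):
    """Render an AST as a canonical string, one rule per node type."""
    if isinstance(node, Path):
        return ".".join(node.parts)
    if isinstance(node, Literal):
        return f'"{node.value}"'
    if isinstance(node, Equals):
        return f"({flatten(node.left)} == {flatten(node.right)})"
    if isinstance(node, Select):
        columns = ", ".join(flatten(c) for c in node.columns)
        return f"select {columns} from {node.source} where {flatten(node.where)}"
    raise TypeError(f"unsupported node: {node!r}")


# Stand-in for the parser's output for the query above.
parsed = Select(
    columns=[Path(("Customer", "Name"))],
    source="Customer",
    where=Equals(Path(("Customer", "Address", "StreetName")), Literal("foo")),
)

expected = 'select Customer.Name from Customer where (Customer.Address.StreetName == "foo")'
assert flatten(parsed) == expected
```

The assertion compares one string instead of walking a hand-built expected tree, which is the saving the comment describes.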

And in order to test the output of the compiler/evaluator, I simply code a small AST graph and then run my compiler/evaluator over that AST, thus keeping the AST -> Output tests independent from the Syntax -> AST tests.

But I guess the best approach depends highly on what type of DSL you are dealing with, e.g. internal vs. external DSL, how much control you have over the ASTs, etc.

//Roger

Roger,

Your approach works when you care about the parsing step. I don't care about it, because I assume that I get it for free from Boo.

Roger,

How would you test the next level? Beyond parsing.

The DSL to write tests looks very similar to the DSL you want to test. It's basically saying the same thing, at the same level of abstraction.

Wouldn't it make sense to build another "interpreter" for the main DSL that knows how to verify it?

Well it depends on the next step.

If the next step is an AST verifier that checks whether the AST is correct, e.g. whether you are trying to add 123 + "string" or something like that (you might support auto casts, so the AST has to allow different types in an operation), then I would simply hand-code the AST nodes needed to represent the invalid AST structure, call Accept on the root AST node, and pass it my verification visitor.

Then I assert that I get the correct output from the visitor (e.g. a list of broken rules).
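
A rough Python sketch of that verifier test, under the assumption of a tiny hypothetical AST (`Num`, `Str`, `Add`) and a hypothetical `TypeVerifier` visitor that collects broken rules:

```python
from dataclasses import dataclass


@dataclass
class Num:
    value: int

    def accept(self, visitor):
        return visitor.visit_num(self)


@dataclass
class Str:
    value: str

    def accept(self, visitor):
        return visitor.visit_str(self)


@dataclass
class Add:
    left: object
    right: object

    def accept(self, visitor):
        return visitor.visit_add(self)


class TypeVerifier:
    """Visitor that records a broken rule for every mismatched addition."""

    def __init__(self):
        self.broken_rules = []

    def visit_num(self, node):
        return "number"

    def visit_str(self, node):
        return "string"

    def visit_add(self, node):
        left = node.left.accept(self)
        right = node.right.accept(self)
        if "error" in (left, right):
            return "error"  # already reported further down the tree
        if left != right:
            self.broken_rules.append(f"cannot add {left} and {right}")
            return "error"
        return left


# Hand-coded invalid AST for: 123 + "string"
verifier = TypeVerifier()
Add(Num(123), Str("string")).accept(verifier)
assert verifier.broken_rules == ["cannot add number and string"]
```

No parser is involved, so the test can build trees that the real syntax might never produce.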

--

If the next step is an AST optimizer that removes unreachable nodes or shortens constant expressions then I could use the same approach as in my first post.

Just Assert that I get a decompiled string representing the optimized code.
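
For the optimizer case, a small Python sketch could look like this. The node types and the `fold_constants` and `flatten` functions are hypothetical, and constant folding of additions is the only optimization shown.

```python
from dataclasses import dataclass


@dataclass
class Num:
    value: int


@dataclass
class Var:
    name: str


@dataclass
class Add:
    left: object
    right: object


def fold_constants(node):
    """Replace additions of two constants with a single constant node."""
    if isinstance(node, Add):
        left, right = fold_constants(node.left), fold_constants(node.right)
        if isinstance(left, Num) and isinstance(right, Num):
            return Num(left.value + right.value)
        return Add(left, right)
    return node


def flatten(node):
    """Decompile the tree back into a string for easy comparison."""
    if isinstance(node, Num):
        return str(node.value)
    if isinstance(node, Var):
        return node.name
    return f"({flatten(node.left)} + {flatten(node.right)})"


# (1 + 2) + x should be optimized to 3 + x
optimized = fold_constants(Add(Add(Num(1), Num(2)), Var("x")))
assert flatten(optimized) == "(3 + x)"
```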

--

If the next step is an AST -> SQL generator with support for property path traversal and complex joins, then I would run away and scream in terror :-P

(That has to be one of the most complex things I have ever touched... lucky for me, Mats Helander did most of the SQL emitter in NPersist :-P)

--

Testing an evaluator is fairly easy. At least in all the cases I've dealt with, I had an evaluator with methods like "EvalExpression"/"EvalStatement" that take abstract AST expression/statement nodes and then redirect calls to more specific methods, e.g. "EvalAddExpression".

You simply pass a fragment of an AST to those methods and assert that you get the expected result back.

(It can of course be a lot harder depending on what kind of constructs your DSL has, e.g. if you support more general-purpose language features such as defining classes. But I have never created that kind of DSL.)
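
Sketched in Python, with the method names adapted from the ones mentioned above and with hypothetical `Constant` and `AddExpression` nodes, such an evaluator test might look like this:

```python
from dataclasses import dataclass


@dataclass
class Constant:
    value: int


@dataclass
class AddExpression:
    left: object
    right: object


class Evaluator:
    def eval_expression(self, node):
        # Dispatch on the abstract node type to a more specific method.
        if isinstance(node, Constant):
            return node.value
        if isinstance(node, AddExpression):
            return self.eval_add_expression(node)
        raise TypeError(f"unsupported node: {node!r}")

    def eval_add_expression(self, node):
        return self.eval_expression(node.left) + self.eval_expression(node.right)


# Pass a small AST fragment straight to the specific method and assert on the result.
fragment = AddExpression(Constant(2), AddExpression(Constant(3), Constant(4)))
assert Evaluator().eval_add_expression(fragment) == 9
```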

--

When it comes to IL compilation, I've never done any "real" DSL, just demo/experiment compilers, so I've got very limited experience in that area.

In that case it's not possible to test fragments as small as in an evaluator. I've had to emit at least a dynamic method, so that I can call it once emitted and then assert on the output.
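
The same "emit a whole callable, then call it" shape can be sketched in Python. Note the swap: this compiles to Python bytecode through the standard `ast` module and the built-in `compile`, not to IL, and the tuple-based DSL AST and the `compile_expression` helper are hypothetical.

```python
import ast


def to_python(node):
    """Translate a tiny tuple-based DSL AST into a Python AST expression."""
    kind = node[0]
    if kind == "num":
        return ast.Constant(value=node[1])
    if kind == "arg":
        return ast.Name(id=node[1], ctx=ast.Load())
    if kind == "add":
        return ast.BinOp(left=to_python(node[1]), op=ast.Add(), right=to_python(node[2]))
    raise ValueError(f"unsupported node kind: {kind}")


def compile_expression(node, arg_names):
    """Emit a complete callable (a lambda) for the expression and return it."""
    arguments = ast.arguments(
        posonlyargs=[],
        args=[ast.arg(arg=name) for name in arg_names],
        kwonlyargs=[],
        kw_defaults=[],
        defaults=[],
    )
    tree = ast.Expression(body=ast.Lambda(args=arguments, body=to_python(node)))
    ast.fix_missing_locations(tree)  # fill in line/column info required by compile()
    return eval(compile(tree, "<dsl>", "eval"))


# The smallest testable unit is the emitted function: call it and assert on the result.
add_one = compile_expression(("add", ("arg", "x"), ("num", 1)), ["x"])
assert add_one(41) == 42
```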

And just to clarify, if it's not obvious from my mindless blabbering: I'm not a language guy, I only think DSLs are a very interesting topic.

So it's absolutely possible that I'm doing completely idiotic things :-)

Andres,

Yes, that is the point, they are very similar.

But the main DSL may contain logic, and the test DSL will rarely have any.

Roger,

You are working your way up the stack very well.

It is just those things that make me not want to build an external DSL. I skip all those steps and move directly from text to compiled, executable code, which I can test with simple TDD, with no need for complex techniques.

OK, but can't you use the same DSL code to know what to test? Why do you need to create another DSL?

Is it possible to generate two IL outputs from boo using the same DSL code?

The DSLs define metadata, and it's very likely that I will want to use that metadata in a scenario that I did not think of originally.

Because having the test generated from the code isn't really useful.

When it is changed, the test would be changed as well, which is exactly what I don't want.

I want a separate artifact, which will break if the specified behavior changes.

Andres,

In addition to that,

how are you going to test something like:

specification @vacations:

If the test is written at the same level of abstraction as the DSL, then asking the DSL programmer to write tests is asking him to write the same code twice, and I'm not sure that makes a lot of sense. I would rather write two DSL engines, where one can verify the other's behavior. That could be impossible in some cases, and in those cases a manual test would be required.

About the example, I'm not sure what it does, but if you can write the test in the test DSL you described, you can probably infer it.

Thinking out loud, I could infer that the behavior will depend on the value of UserCount, infer the values of UserCount that I need to test with, and generate the equivalent of

    with @vacations
        having UserCount = 501
        should_require @distributed_messaging

Inferring the values will be difficult in non-trivial cases like this. An idea could be to tell the test-interpreter which value combinations are valid, so we'll need a DSL for that:

    test @vacations
        with UserCount = 501, OtherVariable=300
        with UserCount = 409

The test-DSL interpreter should know what the main DSL should output with those values.
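
One way to picture this proposal is a second, independent interpreter that is run over the declared value combinations and compared with the main one. The Python sketch below is only an illustration: both functions are hypothetical, the "more than 500 users" rule is an assumption made up for the example, and OtherVariable is left out for brevity.

```python
def vacations_requirements(user_count):
    """Stand-in for the main DSL script (the > 500 threshold is assumed)."""
    required = ["scheduling_work", "external_connections"]
    if user_count > 500:
        required.append("distributed_messaging")
    return required


def expected_requirements(user_count):
    """Independent 'test interpreter' that states the expectation separately."""
    base = {"scheduling_work", "external_connections"}
    extra = {"distributed_messaging"} if user_count >= 501 else set()
    return base | extra


# The value combinations the test author declares as worth checking.
VALUE_COMBINATIONS = [{"user_count": 501}, {"user_count": 409}]


def find_mismatches(combinations):
    """Run both interpreters over each combination and collect disagreements."""
    mismatches = []
    for values in combinations:
        actual = set(vacations_requirements(**values))
        expected = expected_requirements(**values)
        if actual != expected:
            mismatches.append((values, actual, expected))
    return mismatches


assert find_mismatches(VALUE_COMBINATIONS) == []
```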

Again, I'm not talking about doing this with your Boo approach, as I'm not sure it is suitable for this. It could be done with a custom grammar.

Andres,

I think that we are talking about two different things here.

When we are testing, we are writing things twice. Once in the test, the second in the production code.

That verification gives us a lot of advantages.

Asking the DSL to produce it is like asking the compiler to verify itself. Sure, you can do that, but that is pretty much beside the point.

Yes, when you test you write things twice, but the code you write to test is usually very different than the code you write to implement it.

If the code is basically the same, then I don't see the point of writing it.

But it is not the same, it is a mirror image.
