Today I ran into this Reddit post, detailing how Moq is now using SponsorLink to encourage users to sponsor the project.

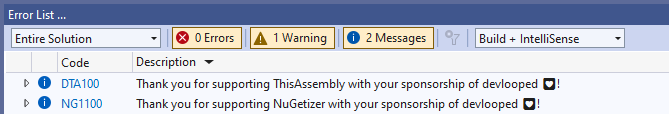

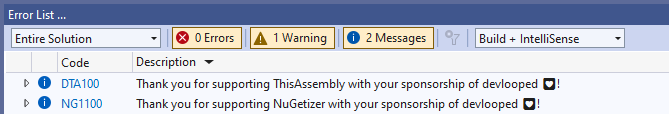

The idea is that if you are using the project, you’ll sponsor it for some amount, which funds the project. You’ll also get something like this:

This has been rolled out for some projects for quite some time, it seems. But Moq is a far more popular project and it got quite a bit of attention.

It is an interesting scenario, and I gave some thought to what this means.

I’m not a user of Moq, just to note.

I absolutely understand the desire to be paid for Open Source work. It takes a lot of time and effort, and the amount of usage people get out of your code compared to the compensation is sometimes ridiculous.

For myself, I can tell you that I made 800 USD out of Rhino.Mocks directly when it was one of the most popular mocking frameworks in the .NET world. That isn’t a sale, that is the total amount of compensation that I got for it directly.

I literally cannot total the number of hours that I spent on it. But OpenHub estimates it as 245 man-years. I… disagree with that estimate, but I certainly put a lot of time there.

From a commercial perspective, I think that this direction is a mistake, primarily because of the economics of software purchases. You can read about the implementation of SponsorLink here. The model basically says that it will check whether the individual user has sponsored the project.

That is… not really how it works. Let’s say that a new developer is starting to work on an existing project. It is using a SponsorLink project. What happens then? That new developer is being asked to sponsor the project?

If this is a commercial project, I certainly support the notion that there should be some payment. But it should not be on the individual developer, it should be on the company that pays for the project.

That leaves aside all the scenarios where this is being used for an open source project, etc. Let’s ignore those for now.

The problem is that this isn’t how you actually get paid for software. If you are targeting commercial usage, you should be targeting companies, not individual users. More to the point, let’s say that a developer wants to pay, and their company will compensate them for that.

The process for actually doing that is atrocious beyond belief. There are tax implications (if they sponsor with $5 / month and their employer gives them a $5 raise, that raise would be taxed, for example), so you need to submit a receipt for expenses, etc.

A far better model would be to have a way to get the company to pay for that, maybe on a per project basis. Then you can detect if the project is sponsored, for example, by looking at the repository URL (and accounting for forks).
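To make the repository URL idea a bit more concrete, here is a minimal sketch in Python. Everything in it is an assumption for illustration: `normalize_repo_url`, `is_sponsored`, the set of sponsored repositories, and the `fork_parents` mapping (fork to upstream) are hypothetical names, not anything SponsorLink provides.

```python
import re


def normalize_repo_url(url: str) -> str:
    """Reduce common git remote URL forms to 'host/owner/repo' (lowercase)."""
    url = url.strip()
    # scp-like syntax: git@host:owner/repo.git
    match = re.match(r"^[\w.-]+@([\w.-]+):(.+)$", url)
    if match:
        host, path = match.group(1), match.group(2)
    else:
        # Strip the scheme (https://, ssh://, ...) and any user info.
        rest = re.sub(r"^[a-zA-Z][a-zA-Z0-9+.-]*://", "", url)
        rest = rest.split("@", 1)[-1]
        host, _, path = rest.partition("/")
    path = path.strip("/")
    if path.endswith(".git"):
        path = path[:-4]
    return f"{host}/{path}".lower()


def is_sponsored(remote_url, sponsored_repos, fork_parents=None):
    """Check the repository, then walk up its (hypothetical) fork chain."""
    fork_parents = fork_parents or {}
    repo = normalize_repo_url(remote_url)
    seen = set()
    while repo and repo not in seen:
        if repo in sponsored_repos:
            return True
        seen.add(repo)
        repo = fork_parents.get(repo)
    return False
```

The point of the sketch is that the check needs nothing about the individual developer, only the remote URL that is already part of the project.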

Note that at this point, we are talking about the actual process of getting money, nothing else about this issue.

Now, let’s get to the reason that this caused much angst for people. The way SponsorLink works is that it fetches your email from the git configuration file and checks whether:

- You are registered as a SponsorLink sponsor

- You are sponsoring this particular project

It does both checks using what appears to be: base62(sha256(email));
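As a rough illustration of what computing such a token looks like, here is a short Python sketch. The base62 alphabet and digit order are my assumptions, and `email_token` is a hypothetical name, so the output will not necessarily match what SponsorLink produces for the same email.

```python
import hashlib

# Assumed alphabet; the ordering SponsorLink actually uses may differ.
ALPHABET = "0123456789ABCDEFGHIJKLMNOPQRSTUVWXYZabcdefghijklmnopqrstuvwxyz"


def base62(data: bytes) -> str:
    """Encode bytes as a base62 string by treating them as a big-endian integer."""
    number = int.from_bytes(data, "big")
    if number == 0:
        return ALPHABET[0]
    digits = []
    while number:
        number, remainder = divmod(number, 62)
        digits.append(ALPHABET[remainder])
    return "".join(reversed(digits))


def email_token(email: str) -> str:
    """base62(sha256(email)): deterministic, so the same email always yields the same token."""
    digest = hashlib.sha256(email.encode("utf-8")).digest()
    return base62(digest)
```

Note that there is no secret involved: anyone who can guess the email can compute the same token.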

If you are already a SponsorLink sponsor, you have explicitly agreed to sharing your email, so not a problem there. So the second request is perfectly fine.

The real problem is the first check, the one that tests whether you are a SponsorLink sponsor in the first place. Let’s assume that you aren’t. What happens then?

Well, there is a request made that looks something like this:

HEAD /azure-blob-storage/path/app/3uVutV7zDlwv2rwBwfOmm2RXngIwJLPeTO0qHPZQuxyS

The server will return a 404 if you are not a sponsor at this point.

The email hash above is my own, by the way. As I mentioned, I’m not a sponsor, so I assume this will return 404. The question is what sort of information is being provided to the server in this case?

Well, there is the hashed email, right? Is that a privacy concern?

It is indeed. While reversing SHA256 in general is not possible, for something like emails, that is pretty trivial. It took me a few minutes to find an online tool that does just that.

The cost is around 0.00045 USD / email, just to give some context. So the end result is that using SponsorLink will provide the email of the user (without their express or implied consent) to the server. It takes a little bit of extra work, but it most certainly does.
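To see why an unsalted hash of an email offers so little protection, consider this toy Python sketch of a dictionary attack. The function names and the candidate lists are made up for illustration; a real tool would simply work from far larger lists of names and domains.

```python
import hashlib
from itertools import product


def sha256_hex(email: str) -> str:
    return hashlib.sha256(email.encode("utf-8")).hexdigest()


def recover_email(target_hash, local_parts, domains):
    """Try every local-part/domain combination until one hashes to the target."""
    for local, domain in product(local_parts, domains):
        candidate = f"{local}@{domain}"
        if sha256_hex(candidate) == target_hash:
            return candidate
    return None


# The candidate space for emails is small and highly structured.
leaked = sha256_hex("jane.doe@example.com")
found = recover_email(
    leaked,
    local_parts=["john", "jane", "jane.doe"],
    domains=["example.org", "example.com"],
)
```

Here `found` ends up holding the original address, because each guess costs only a single hash computation.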

Note that practically speaking, this looks like it hits Azure Blob Storage, not a dedicated endpoint. That means that you can probably configure logging to capture the requests and then gather the information from there. Not sure what you would do with this information, but it certainly looks like this falls under the definition of PII in the GDPR.

There are a few ways to resolve this problem. The first would be to not use email at all, but instead the project repository URL. That may require a bit more work to resolve forks, but would alleviate most of the concerns regarding privacy. A better option would be to just check for an included file in the repository, to be honest. Something like: .sponsored.projects file.

That would include the ids of the projects that were sponsored by this project, and then you can run a check to see that they are actually sponsored. There is no issue with consent here, since adding that file to the repository will explicitly consent for the process.
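Here is a minimal Python sketch of what reading such a file could look like. The format (one project id per line, `#` for comments) and the function names are my own assumptions, since no such file format actually exists.

```python
from pathlib import Path

SPONSOR_FILE = ".sponsored.projects"  # hypothetical file name from the text above


def read_sponsored_ids(repo_root) -> set:
    """Return the project ids listed in the file, one per line, ignoring comments."""
    path = Path(repo_root) / SPONSOR_FILE
    if not path.is_file():
        return set()
    ids = set()
    for line in path.read_text(encoding="utf-8").splitlines():
        line = line.split("#", 1)[0].strip()
        if line:
            ids.add(line)
    return ids


def declares_sponsorship(repo_root, project_id: str) -> bool:
    """True if the repository explicitly lists this project as sponsored."""
    return project_id in read_sponsored_ids(repo_root)
```

The library would still need to verify the listed ids against the sponsorship service, but that request carries a project id that the repository owner chose to publish, not anyone's email.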

Assuming that you still want / need to use the emails, the problem is much more complex. You cannot use the same k-Anonymity process that you can use for passwords. The problem is that a SHA256 of an email is as good as the email itself.

I think that something like this would work, however. Given the SHA256 of the email, you send to the server the following details:

- prefix = SHA256(email)[0 .. 6]

- key = read("/dev/urandom", 32 bytes)

- hash = HMAC-SHA256(key, SHA256(email))

The prefix is the first 6 characters of the SHA256 hash. The key is 32 cryptographically strong random bytes.

The hash is computed by taking the SHA256 and hashing it again using HMAC with the provided key.

The idea is that on the server side, you can load all the hashes that you stored that match the provided prefix. Then you compute the keyed HMAC for all of those values and attempt to check if there is a match.

We are trying to protect against a malicious server here, remember. So the idea is that if there is a match, we pinged the server with an email that it knows about. If we ping the server with an email that it does not know about, on the other hand, it cannot tell you anything about the value.

The first 6 characters of the SHA256 will tell you nothing about the value, after all. And the fact that we use a random key when sending the actual hash to the server means that there is no point trying to figure it out. Unlike trying to guess an email, guessing a hash of an email is likely far harder, to the point that it is not feasible.
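The exchange described above can be sketched in Python roughly as follows. This only illustrates the message flow as I described it and makes no claim about its security properties. The function names, the use of the hex form of the SHA256, and the in-memory set of stored hashes are all assumptions for the sake of the example.

```python
import hashlib
import hmac
import secrets

PREFIX_LEN = 6  # characters of the hex-encoded SHA256 sent in the clear


def build_request(email: str):
    """Client side: returns (prefix, key, hash) to send to the server."""
    email_hash = hashlib.sha256(email.encode("utf-8")).hexdigest()
    key = secrets.token_bytes(32)  # fresh random key for every request
    mac = hmac.new(key, email_hash.encode("ascii"), hashlib.sha256).hexdigest()
    return email_hash[:PREFIX_LEN], key, mac


def server_lookup(stored_hashes, prefix: str, key: bytes, mac: str) -> bool:
    """Server side: recompute the keyed HMAC for every stored hash with this prefix."""
    for stored in stored_hashes:
        if not stored.startswith(prefix):
            continue
        expected = hmac.new(key, stored.encode("ascii"), hashlib.sha256).hexdigest()
        if hmac.compare_digest(expected, mac):
            return True
    return False


# The server stores only the SHA256 of emails that sponsors registered.
sponsors = {hashlib.sha256(b"sponsor@example.com").hexdigest()}
is_known = server_lookup(sponsors, *build_request("sponsor@example.com"))
is_unknown = server_lookup(sponsors, *build_request("stranger@example.com"))
```

In this sketch `is_known` is true and `is_unknown` is false: the server finds a match only among the hashes it already stores.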

Note, I’m not a cryptography expert, and I wouldn’t actually implement such a thing without consulting with one. I’m just writing a blog post with my ideas.

That would at least alleviate the privacy concern. But there are other issues.

SponsorLink is provided as a closed-source, obfuscated library. People have taken the time to de-obfuscate it, and so far it appears to match the documented behavior. But the mere fact that an obfuscated, closed-source library is included in an open-source project raises a lot of red flags.

Finally, there is the actual behavior when it detects that you are not sponsoring this project. Here is what the blog post states will happen:

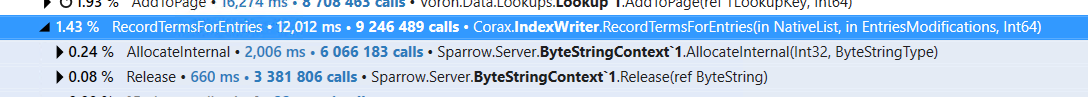

It will delay the build (locally, on your machine, not on CI).

That… is really bad. I assume that this happens on every build (not sure, though). If that is the case, the feedback cycle of "write a test, run it, write code, run a test" is going to hit significant slowdowns.

I would consider this to be a breaking point even excluding everything else.

As I previously stated, I’m all for paying for Open Source software. But this is not the way to do that. There is a whole bunch of friction and not much that can indicate a positive outcome for the project.

Monetization strategies for Open Source projects are complex. Open core, for example, likely would not work for this scenario. Nor would you be likely to get support contracts. The critical aspect is that beyond just the technical details, any such strategy requires a whole bunch of infrastructure around it. Marketing, sales, contract negotiation, etc. There is no easy solution here, I’m afraid.
