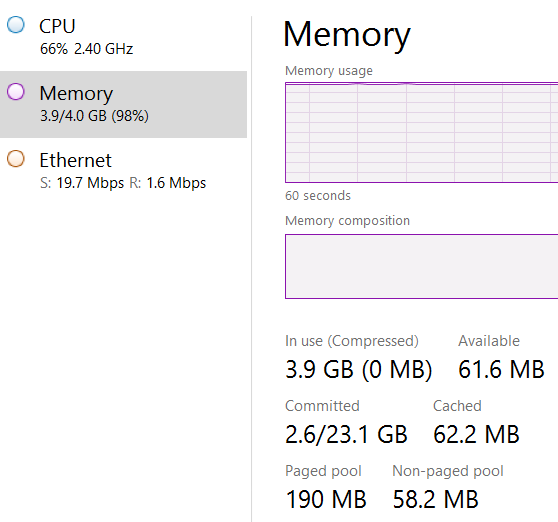

A user reported that when running a set of unit tests against a RavenDB service running on a 1 CPU, 512MB Docker machine instance, they were able to reliably reproduce an out-of-memory exception that would kill the server.

They were able to do that by simply running a long series of pretty simple unit tests. In some cases, this crashed the server.

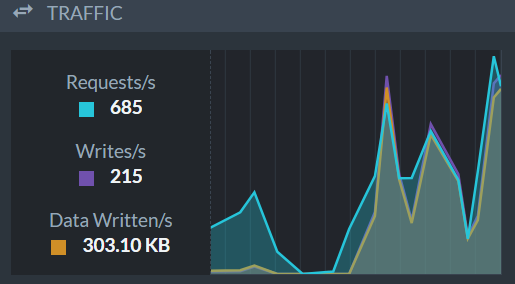

It took a while to figure out what the root cause was. RavenDB unit tests are run on isolated databases, with each test getting its own db. Running a lot of these tests, especially if they are short, will effectively create and delete a lot of databases on the server.

So we were able to reproduce this independently of the unit tests, just by creating and deleting a database in a loop, and the problem became obvious.

Spinning up and tearing down a database are pretty big things. Unit tests aside, this is not something that you’ll typically do very often. But with unit tests, you may be creating thousands of them very rapidly. And it looked like that caused a problem, but why?

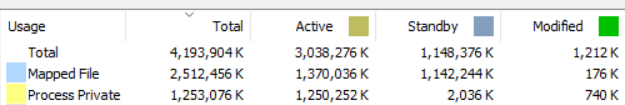

Well, we had hanging references to these databases. There were two major areas that caused this:

- Timers of various kinds that might hang around for a while, waiting to actually fire. We had a few cases where we weren’t actually stopping the timer on db teardown, just checking whether the db was disposed when the timer fired.

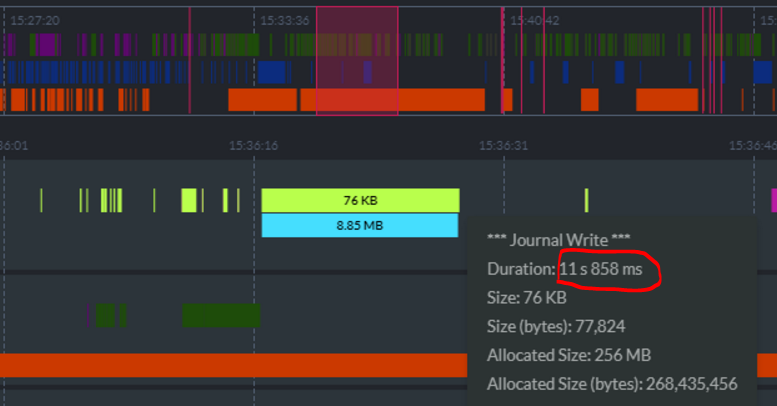

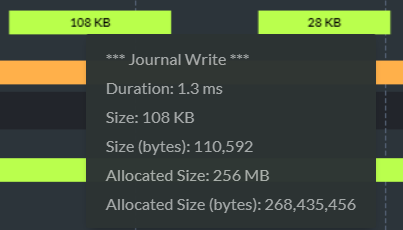

- Queues for background operations, which might be delayed because we want to optimize I/O globally. In particular, flushing data to disk is expensive, so we defer it as late as possible. But we didn’t remove the db entry from the flush queue on shutdown, relying on the flusher to check if the db was disposed or not.
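To make the two cases above concrete, here is a minimal Python sketch of the difference between "check for disposal when the work finally runs" and "unhook everything at teardown". RavenDB itself is written in C#, and none of these names are its real classes: `Database`, `FlushQueue`, `dispose_lazy` and `dispose_eager` are all hypothetical, and the 30 second timer delay is just a placeholder.

```python
import threading


class FlushQueue:
    """Hypothetical global queue of databases waiting for a deferred flush."""

    def __init__(self):
        self._pending = []
        self._lock = threading.Lock()

    def enqueue(self, db):
        with self._lock:
            self._pending.append(db)

    def remove(self, db):
        # Drop every entry that refers to this exact database instance.
        with self._lock:
            self._pending = [d for d in self._pending if d is not db]

    def __len__(self):
        with self._lock:
            return len(self._pending)


class Database:
    """Hypothetical database with one background timer and one queued flush."""

    def __init__(self, name, flush_queue, timer_delay=30.0):
        self.name = name
        self.disposed = False
        self._flush_queue = flush_queue
        # The bound method keeps a strong reference to this instance
        # for as long as the timer is pending.
        self._timer = threading.Timer(timer_delay, self._on_timer)
        self._timer.daemon = True
        self._timer.start()
        flush_queue.enqueue(self)

    def _on_timer(self):
        if self.disposed:
            return  # the "check when fired" approach: correct, but late
        # ... periodic work would go here ...

    def dispose_lazy(self):
        # Only flips the flag; the timer and the queue entry linger
        # until they get around to noticing it.
        self.disposed = True

    def dispose_eager(self):
        # Flips the flag and immediately unhooks both references.
        self.disposed = True
        self._timer.cancel()
        self._flush_queue.remove(self)
```

With `dispose_lazy`, the queue entry and the pending timer survive the teardown; with `dispose_eager`, both are gone by the time the call returns. The disposed check inside `_on_timer` is still worth keeping as a safety net, since a timer can fire just as teardown begins.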

None of these are actually really leaks. In both cases, we will clean up everything eventually. But while that happens, this keeps a reference to the database instance, and prevents us from fully releasing all the resources associated with it.
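The "not a leak, but still holding memory" situation is easy to see with a weak reference. This is a small Python illustration, not RavenDB code: the `Database` class, the `pending_flushes` list and the 1 MB buffer are all made up for the example.

```python
import gc
import weakref


class Database:
    """Hypothetical database instance that owns some memory."""

    def __init__(self, name):
        self.name = name
        self.disposed = False
        self.buffers = bytearray(1024 * 1024)  # stand-in for real resources


# Stand-in for a global background queue that checks `disposed` lazily.
pending_flushes = []

db = Database("test-1")
pending_flushes.append(db)
probe = weakref.ref(db)  # lets us observe the object without keeping it alive

db.disposed = True  # logically the database is gone...
del db
gc.collect()
still_held = probe() is not None  # ...but the queue entry keeps it reachable

pending_flushes.clear()  # the queue finally drops its entry
gc.collect()
released = probe() is None  # only now can the memory be reclaimed
```

Nothing here is lost forever, which is why this does not show up as a classic leak. The instance simply cannot be reclaimed until the last lingering reference goes away.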

Being more explicit about freeing all these resources now, rather than waiting a few seconds and having them released automatically, made a major difference in this scenario. This was only a problem on such small machines because the amount of memory they had was small enough that they ran out before they could start clearing the backlog. On machines with a bit more resources, everything will hum along nicely, because there is enough spare capacity.
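A back-of-the-envelope way to think about this: at a steady rate, the memory tied up in disposed-but-still-referenced databases is roughly the creation rate times how long each one lingers times how much each one holds. The Python sketch below just encodes that arithmetic. The function names are hypothetical, and any numbers you plug in are your own estimates, not measurements from RavenDB.

```python
def backlog_mb(dbs_per_second, linger_seconds, mb_per_db):
    """Approximate memory held by databases that are disposed but not yet released."""
    return dbs_per_second * linger_seconds * mb_per_db


def fits(ram_mb, baseline_mb, dbs_per_second, linger_seconds, mb_per_db):
    """True if the baseline usage plus the lingering backlog stays within RAM."""
    backlog = backlog_mb(dbs_per_second, linger_seconds, mb_per_db)
    return baseline_mb + backlog <= ram_mb
```

For the same made-up workload, `fits(512, ...)` can easily be false while `fits(8192, ...)` is true, which matches what we saw: the behavior was identical everywhere, but only the small instance ran out of room before the backlog cleared. Releasing eagerly effectively drives `linger_seconds` toward zero.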