Why I don’t like the MVC Mini Profiler codebase

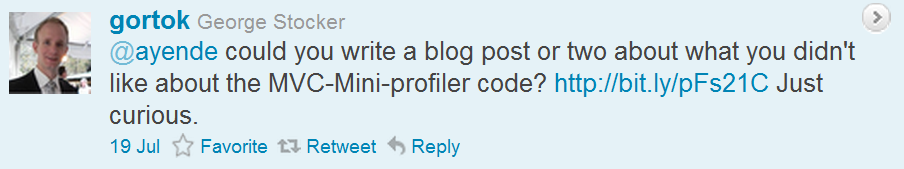

I mentioned in passing that I didn’t like that in the blog post about the MVC Profiler Support for RavenDB.

There were three major reasons for that. First, I don’t like code like this:

This seems to be incredibly invasive, it is really not something that I want to force on my users. Considering how much time I spent building and designing profilers, I have strong opinions about them. And one of the things that I consider as huge selling points for my profilers is that they are pretty much fire and forget. Once you initialize them, you can forget about all the rest.

Obviously, a general purpose profiler like this can’t do that, but I still don’t like it very much. And since we were talking about profiling RavenDB, I saw no reason to go to something like this.

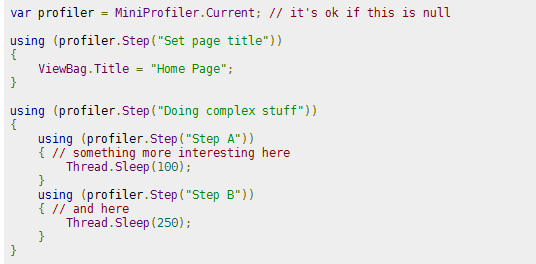

Next, there is a pretty big focus on profiling SQL:

That is pretty meaningless for us when we talk about Profiling RavenDB.

And finally, there was the Stack Trace management. Providing Stack Trace information is incredibly useful for a profiler, and the MVC Mini Profiler supports that, but I didn’t like how it did it. Calling new StackTrace() is pretty expensive, and there are better ways of doing that.

One of the goals for the RavenDB profiler is to allow people to run it in production, same as I am doing right now in this blog, after all.

Mostly, the decision to go and write it from scratch was based on making it easier for us to build something that made sense for our purposes, not try to re-use something that was built for a completely different purpose.

Comments

I think you missed a few points about MVC Mini Profiler;

first: you don't have to add that "invasive" code; if you just want a trace of the SQL code, that will all happen automatically with the connection tracer - no code changes beyond connection setup. This will include stacktrace etc. That code is to help you profile other code - not all of a major system is TSQL. Indeed, at SO we cache like damnation.

second: you state one of your goals is to run it in production. Same here; we run it on the entire network of Stack Exchange sites (including Stack Overflow etc). 24x7. All the time. In production.

The purpose is not different.

Marc, As I understand it, this is only enable for specific cases, not for every page request, which is the case with RavenDB Profiler. As for not having to use the invasive code, I agree, but as it is the very first example... it seems that it is meant to be that. I didn't need a generic profiler for RavenDB, all I needed was something to handle RavenDB scenarios.

I dunno, the stack trace trick is kind of fancy and allows you for pretty awesome optimization, however there is no arguing it is risky and could just break out of the blue.

Considering that we are talking 0.1ms for the typical case this only introduces a 2ms slowdown for a page with say 20 queries which is all you should probably be running if you want to scale.

Also we only instrument for particular users (admins) and for sampling purposes, so impact is minimal.

Regarding the code blocks, that is a subject for a big blog post. You can have a slightly cleaner pattern using attributes and PostSharp style interception - if you are feeling particularly crazy you can attach to the running process once in a while and gather a list of interesting stack traces that seem to be hit frequently and then recompile with the instrumentation in place or jack it in with ICorProfiler. However the fancy means the solution becomes way more brittle.

There is something to be said for having logging in your application, I would argue the the profiling blocks are just another form of logging.

Sam, The problem is that you can get pretty high numbers for a lot of things once you start getting into the hang of it. And 0.1 ms starts getting big pretty fast, once you add things up. Your scenario doesn't need it, but mine did, I have it enabled for all users.

I would have zero problems if you did that via an ActionFilter, for example. I just really don't like having it inside everything.

+1 on logging, it is _important_, but the way you handle it in the sample code? I would call it an anti pattern.

Totally agree we should wire up time in view vs time in action automatically. Ideally we should hook in somehow and get time in each partial as well.

However that only gets us a certain way, often we need to time subsections inside controllers and such to get better visibility of orm overhead and non db work.

Sam, That is actually interesting, why do you need that? Do you have significant non db costs somewhere?

We have a number of scenarios where we query data from places other than a DB, sure. And knowing how they perform is key. Not everything is the DB. For example, some very high volume queries (that aren't a standard flat cache hit scenario) can be served from in-memory structures, but we still need to know how that performs.

Ultimately, anything can become a performance issue if you don't keep a reasonable eye on it. Of course, a simpler answer here would be:

Q: Do you have significant non db costs somewhere? A: If we didn't profile, how could we answer that?

You could say "subtract DB time from total time", but that isn't necessarily enough - some performance issues can exist in the glue between layers. For example, we found significant chunks of time being swallowed by the materializer we were using. Replacing that with "dapper" solved that.

Performance is hugely important to us, and having suitable tools to analyze and fix (with evidence) any performance goes a long, long way beyond just the database.

What about fluent configurations with Lamda expressions and reflection wizadry?

The MP is a great tool, but you need to have a think about how you're going to use it.

One thing I think Sam and the guys could do better is explain that the benefit of the Step calls is it gives you the ability to have very precise fine grained control over what is profiled.

A lot of people seem to miss the point with the MVC mini profiler and the Step calls. They're nothing more than the gateway into the profiler and how you use them is up to you.

If you want to go pouring them wholesale throughout your code then that's up to you but obviously that's going to cause you problems.

If you are going to sprinkle them through your code after careful consideration as to what is going to provide the best benefit to you, then that is probably closer to the approach these guys were trying to encourage.

The point is, that the control is thrown into the developers court.

Contrasting this with NHProf Ayende, the approach you take is to profile everything (which is fine) but if you want to ignore specific queries, you can use this method outlined here: http://nhprof.com/Learn/Usage/IgnoreResumeProfiling

I use this feature a lot because by default, I have a lot of queries that I know I can safely ignore (i.e. cached queries etc) that end up just being noise in the profiler.

The only difference I see in this approach and the MVC mini profiler is that NHProf is opt out and MVC mini profiler is opt int.

I think both tools are great and I use them both, but for different purposes and certainly wouldn't want to go back to writing apps without them.

Name, Huh? What do you mean?

Phil, Are you aware that NH Prof contains a sophisticated filtering engine?

Name == Nick

I was thinking you can use fluent configuration to specify which methods you want to profile via the wonder of lambdas. Behind the scenes this would use reflection to hook into a method call - just before and just after - to measure the time it takes.

I don't know if you can definitely do this, it was more of a question.

Nick, You generally can't do that without a lot of complex infrastructure (proxying, weaving, etc)

Ayende,

Yep use it all the time, particularly to ignore cached quereis :) The reason I use the Ignore / Resume calls is that in our solution we have to interact with a couple of DLL's that were written by another team and I have no control over. I don't really care about profiling the calls made from those DLL's and it's a little tricky to filter them out via the UI so I just ignore them.

I guess I was just trying to emphasise the point that you take an "opt out" approach and they take an "opt in" approach.

If you install the NuGet package for the mini profiler and put the one liner for the includes in your master page, you have essentially enabled the profiler and it basically logs nothing.

You then build up what makes sense for you to profile and in fact, you can often do it in very unobtrusive ways using things like ActionFilters, IoC and hooking into the ViewEngine pipelines makes this all pretty easy and straight forward.

Basically with around 20 lines of code, I can get timings on how long it takes me to render / locate views, execute action methods and it gives me a birdseye view of what's happening in my code without actually having to change the actual code base. This code all sits on the outside and it really is minimal.

@Sam @Marc: MVC Mini Profiler is a great tool! Thank you very much you developing it and giving away for free to us.

Comment preview