Database assisted denormalization

Let us say that I have the homepage of the application, where we display Blogs with their Post count, using the following query:

select dbo.Blogs.Id, dbo.Blogs.Title, dbo.Blogs.Subtitle, (select COUNT(*) from Posts where Posts.BlogId = Blogs.Id) as PostCount from dbo.Blogs

Given what I think thoughts of denormalization, and read vs. write costs, it seems a little wasteful to run the aggregate all the time.

I can always add a PostCount property to the Blogs table, but that would require me to manage that myself, and I thought that I might see whatever the database can do it for me.

This isn’t a conclusive post, it details what I tried, and what I think is happening, but it isn’t the end all be all. Moreover, I run my tests on SQL Server 2008 R2 only, not on anything else. I would like to hear what you think of this.

My first thought was to create this as a persisted computed column:

ALTER TABLE Blogs ADD PostCount AS (select COUNT(*) from Posts where Posts.BlogId = Blogs.Id) PERSISTED

But you can’t create computed columns that uses subqueries. I would understand easier why not if it was only for persisted computed columns, because that would give the database a hell of time figuring out when that computed column needs to be updated, but I am actually surprised that normal computed columns aren’t supporting subqueries.

Given that my first attempt failed, I decided to try to create a materialized view for the data that I needed. Materialized views in SQL Server are called indexed views, There are several things to note here. You can’t use subqueries here either (likely because the DB couldn’t figure which row in the index to update if you were using subqueries), but have to use joins.

I created a data set of 1,048,576 rows in the blogs table and 20,971,520 posts, which I think should be enough to give me real data.

Then, I issued the following query:

select dbo.Blogs.Id, dbo.Blogs.Title, dbo.Blogs.Subtitle, count_big(*) as PostCount from dbo.Blogs left join dbo.Posts on dbo.Blogs.Id = dbo.Posts.BlogId where dbo.Blogs.Id = 365819 group by dbo.Blogs.Id, dbo.Blogs.Title, dbo.Blogs.Subtitle

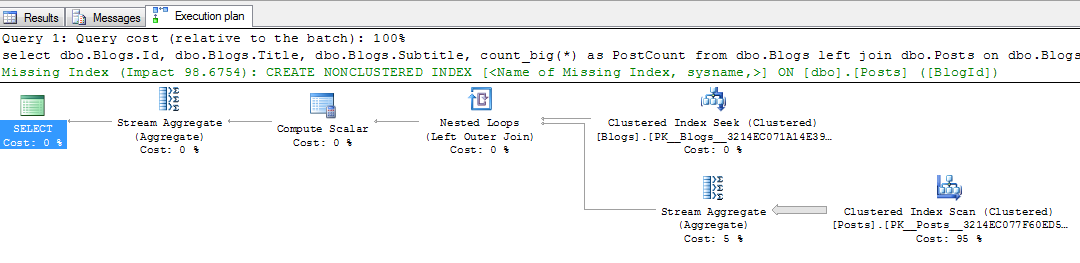

This is before I created anything, just to give me some idea about what kind of performance (and query plan) I can expect.

Query duration: 13 seconds.

And the execution plan:

The suggest indexes feature is one of the best reasons to move to SSMS 2008, in my opinion.

Following the suggestion, I created:

CREATE NONCLUSTERED INDEX [IDX_Posts_ByBlogID] ON [dbo].[Posts] ([BlogId])

And then I reissued the query. It completed in 0 seconds with the following execution plan:

After building Raven, I have a much better understanding of how databases operate internally, and I can completely follow how that introduction of this index can completely change the game for this query.

Just to point out, the results of this query is:

Id Title Subtitle PostCount

----------- --------------------- ---------------------- --------------------

365819 The lazy blog hibernating in summer 1310720

I decided to see what using a view (and then indexed view) will give me. I dropped the IDX_Posts_ByBlogID index and created the following view:

CREATE VIEW BlogsWithPostCount WITH SCHEMABINDING AS select dbo.Blogs.Id, dbo.Blogs.Title, dbo.Blogs.Subtitle, count_big(*) as PostCount from dbo.Blogs join dbo.Posts on dbo.Blogs.Id = dbo.Posts.BlogId group by dbo.Blogs.Id, dbo.Blogs.Title, dbo.Blogs.Subtitle

After which I issued the following query:

select Id, Title, Subtitle, PostCount from BlogsWithPostCount where Id = 365819

This had the exact same behavior as the first query (13 seconds and the suggestion for adding the index).

I then added the following index to the view:

CREATE UNIQUE CLUSTERED INDEX IDX_BlogsWithPostCount ON BlogsWithPostCount (Id)

And then reissued the same query on the view. It had absolutely no affect on the query (13 seconds and the suggestion for adding the index). This make sense, if you understand how the database is actually treating this.

The database just created an index on the results of the view, but it only indexed the columns that we told it about, which means that is still need to compute the PostCount. To make things more interesting, you can’t add the PostCount to the index (thus saving the need to recalculate it).

Some points that are worth talking about:

- Adding IDX_Posts_ByBlogID index resulted in a significant speed increase

- There doesn’t seem to be a good way to perform materialization of the query in the database (this applies to SQL Server only, mind you, maybe Oracle does better here, I am not sure).

In other words, the best solution that I have for this is to either accept the cost per read on the RDBMS and mitigate that with proper indexes or create a PostCount column in the Blogs table and manage that yourself. I would like your critique on my attempt, and additional information about whatever what I am trying to do is possible in other RDMBS.

Comments

"I can always add a PostCount property to the Blogs table, but that would require me to manage that myself" - yep and this will be probably most simple, universal (will work with any database) and most fast (from performance point of view) solution in RDMBS ! And it's very easy to do this actually!

I simply can't accept as solution any query you write in post, sorry, because they simply NOT scale (too many groupings, left joins), looks too complex (i.e. require whole a lot of experienced in DBA) and database specific (i.e. say in MySQL it will be too problematic to made something like this possible) etc...

In the other hand, in most real life situations, you anyway have some kind of vector cache (say in Memcached) for all your blog posts, so it's not a big issue to calculate qty in cache directly and does not go to database at all if your cache valid :) If your cache for some reason become not valid (say you just restart cache or something else), than I don't see any issue to calculate qty of posts in database using simply count query from begging of the post because it will be not so frequently anyway.

So my vote to move it to a bit higher level than database and / or just use most simple solution with denormalization :)

Agree or?

Is it safe to say that on similar big tables (high record count), there should be an index on foreign keys? SQL Server does not do this automatically.

Evereq,

Show me the count that count the quantity in the cache.

Oh, and remember to add 1 ms overhead for every memcached call, see where you get there.

Your other option is to implement a trigger on the Posts table that updates the blog table whenever a post changes. You could then adjust your ORM so the PostCount value on the Blogs table is a read-only field, and that would work.

I'm not a huge fan of triggers personally as I belive they're a good way to "hide" logic, but I thought I'd throw it in there.

@Ayende Rahien.

What I mean is that because you have so big amount of blogs / posts you probably use cache, right? So probably you anyway spend some time to maintain vector cache for each blog posts, right (to display on blog page all latest posts for given blog)?

If so, why not to maintain as well following structure in your cache hashtable:

Key | Value (count of posts)

blog_count_by_id_4594594954 | 54

blog_count_by_id_9695695950 | 21

And if you maintain it in cache, it will take you exactly 1ms (or even less) to get count for blogId = 4594594954 :) which will be 54 in our example :)

Also note that Memcached support "atomic" increment, so you even not need to issue locks etc :)

After working some time with high loaded / huge data in storage systems, I prefer to have all data like this in cache anyway, and go to database only for "backup" when cache become invalid for some reason... Sure this apply only when you build something "big" :) If you have less load (both storage size and amount of transactions per minute) probably I think it's best way to go with simple query and just carefully select indexes in database like you done in post... But think for a minute if you use federation? What will be with your query? etc...

Evereq,

Oh, okay, phew!

I thought you were suggesting scanning the data in the cache to calculate it.

ha! ;-)

P.S. I just not like complex queries really, because of possible federation / partitioning and BAD support of complex queries in such cases (especially with indexing efficiently etc)... And because many projects after some time, probably will start using sharding / federation anyway, I really afraid how you will scale with selected approach in long run... Again we don't speak as I see about few blogs web site :) We speak more as I see in terms of some SaaS solution with whole a LOT of blogs (in your example > 1 milion) and > 20 mil posts that growing :D

I have experimented with indexed views a lot. The automatic matching is very fragile and uncapable

If you add the with (noexpand) hint your query will fly.

I found the following limitations on automatic indexed view maching: Max 2 joins, only simple predicates with rules not fully known to me. Now we have another limitation. Very annoying because those views are so damn powerful. I hope we get stacked indexed views in the next release which are going to rock big time.

If you considered a query with a few more queries, I'd propose saving a denormalized view of your entity (with all the counts, calculated during saving) in another table, each time, you save your basic entity. Then, for query purposes, use the second table.

In my experience, COUNT(*) queries on a properly index field are not too expensive. I suspect that you over-thought this and made this more complicated than it needed to be. Put a NONCLUSTERED index on the BlogID field in your Posts table, and perhaps compute statistics as well. Then, let us know the performance results of your original query:

select

from dbo.Blogs

Good post. Thanks.

Nonclustered index is perfect for calculating such aggregates. You do not need to read actual data, just to count rows. Nonclustered indexes are even faster then clustered for this.

select

from dbo.Blogs

I suppose, this query will be much more slower, because you actually need to read all posts many times (due to clustered vs nonclustered index fragmentation)

like tobi said, use the with(noexpand) hint. the results of the entire view (including the aggregate) was persisted when you created a clustered index. however, by default, the query optimizer will expand the view first, and if the estimated plan it finds falls withing a certain threshold, it will just use it (to save time in the optimizer). by using the "noexpand" hint, you tell the optimizer to skip expanding the query and just use the clustered index.

Its rudimentary SQL to have an index on a table with 20 million rows. I don't understand why you would be trying to do it any other way than have that index. If you're using a SQL database then it should be used as recommended.

evereq, that SQL statement with the grouping is not 'too complex'. People learn that on the first day in a SQL class and its not database specific as long as you use ANSI sql which is what they all can understand.

Unfortunately many people do not know how to get extreme performance from sql server. There is a handful of tricks that seemingly nobody used but that are absolute killers. Materialized views and covering nonclustered indexes (which are equivalent to a second clustered index!) are the most underused features in my opinion.

When you tested this, did you execute dbcc freeproccache and dbcc dropcleanbuffers before each select?

... and add the postcount into IDX_BlogsWithPostCount

managing the count yourself will always lead you into a corner

when considering simple inserts, updated, and deletes managing the count yourself is fine

when you get into bulk operations where some posts will be inserted and some will be deleted... complexity arises

then take into considerations where/when something fails...

i would much rather take the hit for the aggregate, index the tables properly and give myself a chance to enhance or change the criteria for "count" than attempting to manage it myself through some column where no body knows what "count" means

just my two cents

@meisinger - Agreed. Especially since this post doesn't detail the actual performance benchmarks of the original query, or whether simpler strategies like verifying/adding indexes were tried. Premature performance optimization is the root of all kinds of evil.

@Ayende - I use indexed views a bit (i don' t have many, but I use them where I can) because they are abstracting a lot of scehmas into some really useful forms.

ANYWAYS - a massive trap for young players (It's a Trap!) is that indexed views do NOT use the index (WTF?) on SQL SERVER EXPRESS or STANDARD editions without manually specifying WITH (NOEXPAND)

eg.

select Id, Title, Subtitle, PostCount

from BlogsWithPostCount WITH (NOEXPAND)

where Id = 365819

It's one of the 'bonuses' when u pay for an enterprise, plus plus plus edition of SQL SERVER.

Try that query now - 0 secs again?

Proof: "You must use NOEXPAND in all versions of SQL Server other than Developer and Enterprise editions to have SQL Server process a query against an indexed view directly. " MSDN docs: http://msdn.microsoft.com/en-us/library/dd171921(SQL.100).aspx

IT'S A TRAP!

The same doc also says :

"Only Enterprise and Developer editions support automatic query-to-indexed-view matching. Reference the indexed view by name and include the NOEXPAND hint to have the query processor use the indexed view in all other editions."

meisinger speaks the truth.

Don't think instead of SQL Server, never do.

And for missing index hints, these features already exist in SQL Server 2005 but less direct ( DMVs ) or via the dashboard.

That's because the SQL of the view is embedded in the query at the spot where you name the view, so it's not using some special shortcut, because it's a view.

I also agree with others here, that NOEXPAND should be used to trigger the usage of the index.

Ok, here is my take on this - any aggregation (map/reduce) you do in a document database, there is nothing preventing you from doing the same in a relational world.

In our website, we analyze prospect behavior and there might be decisions made on the the number of vsiits etc, so we build separate tables that aggregates the #times somebody has visited, #times they submitted a page, saw terms & conditions etc.

Obviously these are had to compute real time even with proper indexes.

Good example is in the home page you want to show not the #posts, but the #posts per month, or per category...

Also not for such simple aggregations, but data warehouse solutions like analysis services play a huge role in pre-computing these aggregations by different dimensions and allow to drill down extremely fast...

Ultimately all it matters is the right tool for the job :-)

Ajai

@Joe

"that SQL statement with the grouping is not 'too complex'. People learn that on the first day in a SQL class and its not database specific as long as you use ANSI sql which is what they all can understand."

I don't tell "too complex to understand :D" (I see before queries 10 pages long and understand them very well...)... And No, it IS TOO complex to SCALE ones you start using partitioning for example :) and will have 100s millions of records in DB (think how your grouping will work in that case)! Or if you will need to federate some tables from another storage? etc.

About "database specific" - I mean approaches with Materialized Views etc, not just ANSI sql features sure thing :) Try for example to do it in MySQL your hands (because of no build-in support for Materialized Views in MySQL for now!)

Comment preview