Raven & Event Sourcing

I keep trying to work on the replication bundle for Raven, but I keep getting distracted with more interesting stuff.

In this case, I kept coming back to several discussions that I had with people who want to use Raven for storing events, and were thinking about how to go from a stream of events to a complete aggregate. I kept thinking that Raven should already be able to handle that. And indeed it can, quite easily, it turns out.

Raven is already capable of running operations over a stream of documents to produce a value, so going from there to an event stream producing an aggregate is easy. The only problem was that we needed to support external views. That was easy enough to do, so let me show what we have now.

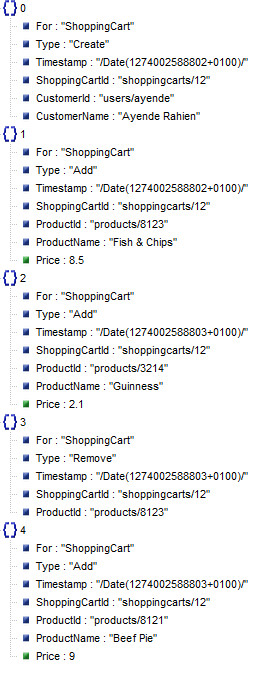

Let us assume that we have the events shown on the right stored in Raven. As you can see, this is a stream of events for a shopping cart. What we want is to go from there to an actual shopping cart.

We define the following view:

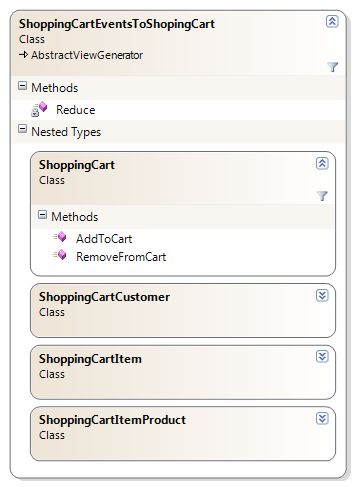

I am showing the class diagram here so you can see all the types that are involved. Note that ShoppingCart has AddToCart and RemoveFromCart methods, which have the typical implementation.
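Since the class diagram is only an image, here is a minimal sketch of what those types might look like. Only `ShoppingCart`, `ShoppingCartCustomer`, `AddToCart` and `RemoveFromCart` come from the post; `ShoppingCartItem`, the `Items` list, the `Quantity` field, the string ids and the add/remove policies are my assumptions about what a "typical implementation" means.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

public class ShoppingCartCustomer
{
    public string Id { get; set; }
    public string Name { get; set; }
}

// Hypothetical line item type; the real one is only visible in the class diagram.
public class ShoppingCartItem
{
    public string ProductId { get; set; }
    public string ProductName { get; set; }
    public decimal Price { get; set; }
    public int Quantity { get; set; }
}

public class ShoppingCart
{
    public string Id { get; set; }
    public ShoppingCartCustomer Customer { get; set; }
    public List<ShoppingCartItem> Items { get; private set; }

    public ShoppingCart()
    {
        Items = new List<ShoppingCartItem>();
    }

    // Assumed policy: adding a product that is already in the cart bumps its quantity.
    public void AddToCart(string productId, string productName, decimal price)
    {
        var item = Items.FirstOrDefault(x => x.ProductId == productId);
        if (item != null)
        {
            item.Quantity++;
            return;
        }
        Items.Add(new ShoppingCartItem
        {
            ProductId = productId,
            ProductName = productName,
            Price = price,
            Quantity = 1
        });
    }

    // Assumed policy: removing a product drops the whole line, whatever its quantity.
    public void RemoveFromCart(string productId)
    {
        Items.RemoveAll(x => x.ProductId == productId);
    }
}
```

The important property for what follows is that both methods only mutate the cart in memory, so replaying the same events in the same order always produces the same cart.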

Now, let us look at the actual view code:

    [DisplayName("Aggregates/ShoppingCart")]
    public class ShoppingCartEventsToShopingCart : AbstractViewGenerator
    {
        public ShoppingCartEventsToShopingCart()
        {
            MapDefinition = docs => docs.Where(document => document.For == "ShoppingCart");
            GroupByExtraction = source => source.ShoppingCartId;
            ReduceDefinition = Reduce;

            Indexes.Add("Id", FieldIndexing.NotAnalyzed);
            Indexes.Add("Aggregate", FieldIndexing.No);
        }

        private static IEnumerable<object> Reduce(IEnumerable<dynamic> source)
        {
            foreach (var events in source
                .GroupBy(@event => @event.ShoppingCartId))
            {
                var cart = new ShoppingCart { Id = events.Key };
                foreach (var @event in events.OrderBy(x => x.Timestamp))
                {
                    switch ((string)@event.Type)
                    {
                        case "Create":
                            cart.Customer = new ShoppingCartCustomer
                            {
                                Id = @event.CustomerId,
                                Name = @event.CustomerName
                            };
                            break;
                        case "Add":
                            cart.AddToCart(@event.ProductId, @event.ProductName, (decimal)@event.Price);
                            break;
                        case "Remove":
                            cart.RemoveFromCart(@event.ProductId);
                            break;
                    }
                }
                yield return new
                {
                    cart.Id,
                    Aggregate = JObject.FromObject(cart)
                };
            }
        }
    }

Several interesting things are happening here:

- The display name is the name of the index.

- In the constructor, we define the map part as filtering for events for the shopping cart.

- We will create a shopping cart per shopping cart id, so we specify the group by extraction method. Raven will use that to optimize updates.

- Note the indexes definition: we want the id to be indexed as is (not analyzed), like a primary key, and the aggregate data to be stored but not indexed for searching.

Now, let us talk about the interesting bits, the Reduce function.

That function should be pretty easy to follow, I think. We are getting a stream of events, grouping them by their shopping cart id. Then, for each shopping cart, we sort the events by date, and proceed to build the aggregate.

Finally, we return the data so Raven will store it in the index.
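If you want to play with the reduce logic without a Raven server, the same group / sort / fold shape can be sketched with plain LINQ over an in-memory list. This is not the view itself: `CartEvent`, `CartSnapshot` and `CartReducer` are hypothetical typed stand-ins for the dynamic documents and the real `ShoppingCart`, and the aggregate is cut down to a customer name and a list of product ids.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

// Hypothetical typed stand-in for the dynamic event documents.
public class CartEvent
{
    public string ShoppingCartId { get; set; }
    public string Type { get; set; }          // "Create", "Add" or "Remove"
    public DateTime Timestamp { get; set; }
    public string CustomerName { get; set; }  // used by "Create"
    public string ProductId { get; set; }     // used by "Add" and "Remove"
}

// Hypothetical, stripped-down aggregate.
public class CartSnapshot
{
    public string Id { get; set; }
    public string CustomerName { get; set; }
    public List<string> ProductIds { get; private set; }

    public CartSnapshot()
    {
        ProductIds = new List<string>();
    }
}

public static class CartReducer
{
    public static IEnumerable<CartSnapshot> Reduce(IEnumerable<CartEvent> source)
    {
        // One group, and therefore one aggregate, per shopping cart id.
        foreach (var events in source.GroupBy(e => e.ShoppingCartId))
        {
            var cart = new CartSnapshot { Id = events.Key };

            // Replay the events oldest first, applying each one to the cart.
            foreach (var e in events.OrderBy(x => x.Timestamp))
            {
                switch (e.Type)
                {
                    case "Create":
                        cart.CustomerName = e.CustomerName;
                        break;
                    case "Add":
                        cart.ProductIds.Add(e.ProductId);
                        break;
                    case "Remove":
                        cart.ProductIds.Remove(e.ProductId);
                        break;
                }
            }
            yield return cart;
        }
    }
}
```

Feeding `CartReducer.Reduce` a shuffled list of events for two carts yields two snapshots, each built only from its own events in timestamp order. One thing worth knowing: `Enumerable.OrderBy` is a stable sort, so in this in-memory sketch two events with the same timestamp keep their input order. Whether that input order is meaningful is a separate question.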

The result, by the way, is this:

I think this is cool.

Using this approach, Raven will automatically keep the aggregate up to date with the event streams coming in. Furthermore, the aggregate will only be recomputed when a change happens, so accessing it is very cheap.
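To illustrate why reads are cheap, here is a toy, in-memory sketch of the idea: pay the reduce cost on write, for the affected group only, and serve reads from a stored result. This is emphatically not how Raven implements it (Raven indexes in the background, which is where staleness comes from, while this sketch updates synchronously). `IncrementalAggregates`, `Append`, `TryGet` and `ReduceCalls` are all names I made up for the example.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

// Hypothetical helper: keeps one stored aggregate per group key.
public class IncrementalAggregates<TEvent, TAggregate>
{
    private readonly Func<TEvent, string> groupBy;
    private readonly Func<IEnumerable<TEvent>, TAggregate> reduce;
    private readonly Dictionary<string, List<TEvent>> eventsByKey =
        new Dictionary<string, List<TEvent>>();
    private readonly Dictionary<string, TAggregate> aggregates =
        new Dictionary<string, TAggregate>();

    // Counts how often the reduce function ran, to make the cost visible.
    public int ReduceCalls { get; private set; }

    public IncrementalAggregates(
        Func<TEvent, string> groupBy,
        Func<IEnumerable<TEvent>, TAggregate> reduce)
    {
        this.groupBy = groupBy;
        this.reduce = reduce;
    }

    // Write path: store the event, then re-reduce only the group it belongs to.
    public void Append(TEvent e)
    {
        var key = groupBy(e);
        List<TEvent> events;
        if (!eventsByKey.TryGetValue(key, out events))
        {
            events = new List<TEvent>();
            eventsByKey[key] = events;
        }
        events.Add(e);
        aggregates[key] = reduce(events);
        ReduceCalls++;
    }

    // Read path: a dictionary lookup, with no reduce work at all.
    public bool TryGet(string key, out TAggregate aggregate)
    {
        return aggregates.TryGetValue(key, out aggregate);
    }
}
```

The group key function plays the same role here as `GroupByExtraction` does in the view: it tells the store which aggregate a new event touches, so the other groups can be left alone.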

Finally, if we have a storm of events on a particular shopping cart, we can choose whether to wait and see it in its most recent version, or get a potentially stale view of it really fast.

Comments

this is something like couchdb materialized views?

This is really cool and tips my balances in favor of RavenDB against NH again.

I have one remark though: You should mention that you violate OCP voluntarily to keep the code simple and that in production the switch statement should be replaced by an extensible approach, i.e visitor or strategy patterns.

Uriel,

All of Raven's indexes are similar to materialized views

Markus,

Yeah, pretty much. There is a much more extensive example, which does it in a much nicer manner, that is currently being worked on.

This is just to show how things are working

So what is the difference between this and a regular raven index?

Uriel,

It isn't, really. It is just a different way to build the index, that is all.

I'd be interested in what amount of information in the materialized view/index will be recalculated if the underlying "table" changes. Say, we were to use raven for logging all of our http requests and we had an index "count of requests per day". Would the index be updated incrementally?

In which process does this view-generator run? Since raven needs to keep the index up to date, I'm assuming it has to run in raven's process?

If so, do you have something like a plugin/extension system where you drop some dll somewhere or how does it work?

How would you define this index using ravens webinterface?

I have to add, that I never tried raven or looked at the code and so I don't have a lot of knowledge of its internal structures besides the post of yours. I'm just curious ;-)

Beautiful.

Yes, this code is beautiful, really beautiful. And my sql oriented brain almost blew up when I tried to understand the process.

Tobi,

Yes, the index is updated on every new commit, and it is updated in an efficient incremental manner.

In your case, it would only update the current day.

Thomas,

This runs in Raven's process.

Yes, Raven has a plugin mechanism, drop a dll to Plugins/, and everything works.

Defining indexes via the web interface:

www.ravendb.net/documentation/docs-http-indexes

Could you comment a bit more on why you prefer using raven in a document oriented way instead of in a relational way? It seems to me that it would result in less code if you were to keep everything normalized and dry. I know that performance might not be that good but disregarding that I think it would be a superior approach. You would just define indexes for all access paths to the data that you have so it still would be lightning fast. (you might notice that development speed is my major concern because I do not develop apps with huge data sizes. This is certainly a common scenario that might justify a dedicated post... ;-) ).

And I want to add that this series is really starting to get my attention! This project is certainly having a pragmatic lead.

Tobi,

Try writing something like this on a relational backend.

The performance difference would be pretty big.

Moreover, the amount of effort that you'll have to go through to get things done is extremely non trivial.

Then try to add three new events, and see what happens.

Ok, I get it for event processing, but I am more interested in the day to day work of users, orders, products... Some of us still have to get their hands dirty with boring stuff to pay the rent ;-)

Tobi,

Take a look at my other posts on the subject.

Look at the NoSQL category

That's a pretty sweet and elegant example, hard to get it done with any less effort.

Though I'm not sure about modifying the Raven Process with custom application logic .dll's - seems like a pretty fragile/state-full approach that would cause some pain during deployment, versioning, backing up, upgrading etc.

Just trying to work out how efficient this solution is since I can't see the optimal code-path, i.e. when a user adds an Item to an existing cart does it rebuild the entire aggregate for all users' shopping carts or just the one modified? or is that what the 'GroupByExtraction' lambda is for?

Demis

Versioning is actually pretty easy, you just maintain the old index and deploy a new dll with the new index under a different name.

The code needs to be written to be able to handle all users, but in practice, what we are doing is only re-executing the documents for the current user, not for all users.

The reason that the code is written this way is that we want to allow ourselves the option to do major optimizations down the road, where we can just shove data through this without having to do sorting up front.

Oren,

Looks good but generally I would run far far away from using TimeStamps to order and would instead use version numbers.

Cheers,

Greg

to be clear the problem is actually shown in your sample events. you have two with the same timestamp (create/add) and providing deterministic ordering in such a case is problematic.

Greg,

Agreed. Version numbering with Raven is a bit interesting, basically you need a separate document to do the versioning, but it is pretty easy all around.
