Slaying relational hydras (or dating them)

Sometimes client confidentiality can be really annoying, because the problem sets & suggested solutions that come up are really interesting. That said, since I am interesting in having future clients, it is pretty much a must have. As such, the current post represent a real world customer problem, but probably in a totally different content. In fact, I would be surprised if the customer was able to recognize the problem as his.

That said, the problem is actually quite simple. Consider a dating site, where you can input your details and what you seek, and the site will match you with the appropriate person. I am going to ignore a lot of things here, so if you actually have built a dating site, try not to cringe.

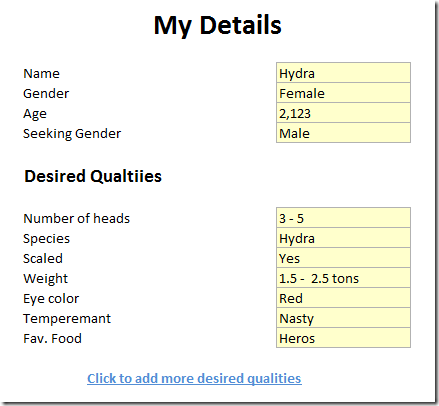

At the most basic level, we have two screens, the My Details screen, where the users can specifies their stats and their preferences:

And the results screen, which shows the user the candidate matching their preferences.

There is just one interesting tidbit, the list of qualities is pretty big (hundreds or thousands of potential qualities).

Can you design a relational model that would be a good fit for this? And allow efficient searching?

I gave it some thought, and I can’t think of one, but maybe you can.

I’ll follow up on this post in a day or two, showing how to implement the problem using Raven.

Comments

I think a correctly indexed attribute value pair model would work. You could use a few strategies for the search like multiple pass reducing constraint match - or just plain old aggregate function to see how many attributes in common.

The nice thing about the av pair model is that as long as you think through your search and data entry - nothing says that the list of qualities has to stay constant. It could change over time without affecting the integrity of previous entries.

You of course pay a price for the av pair model in mapping data to - well - pretty much anything. You will need adapter if you use things like mvc or databinding code in general. But it does serve the purpose.

J

Joe,

Try to show me SQL that make a search work.

select * from people p inner join qualities q on q.personid=p.id and q.attribute = 'species' and q.value = 'Hydra'

Erik,

You need to match on all the possible qualities, not on just one of them.

Right, so then it turns into a where clause with a series of and'd or or'd search terms, depending on what you're wanting to match.

select * from people p inner join qualities q on q.personid=p.id

where

(q.attribute = 'heads' and q.value >= '3' )

and (q.attribute = 'heads' and q.value <= '5' )

and (q.attribute = 'species' and q.value = 'Hydra' )

and (q.attribute = 'scales' and q.value = 'Yes' )

and ...

It's not very elegant, but no EAV problem is when you're stuck in traditional RDBMS.

en.wikipedia.org/wiki/Entity-attribute-value_model

Erik,

You will never return anything using this approach.

q.attribute - 'heads' and q.attributes = 'species' are mutually exclusive.

Convert the attributes to categories, like

Gender_Male

Species_Hydra

Eyes_Red

and build a Lucene index on all people, including these words.

select mq.MemberID, count(*) as CountOfMatchingAttributes

from MemberDesiredQualities mdq

join MemberQualities mq on mdq.QualityID = mq.QualityID and mdq.ValueMin <= mq.Value and mdq.ValueMax >= mq.Value

where mdq.MemberID = 1000

group by mq.MemberID

order by mq.MemberID

MemberQualities: QualityID, MemberID, Value

MemberDesiredQualities: MemberID, QualityID, ValueMin, ValueMax

Both tables clustered uniquely on the first two columns. Query returns instantly all members with at leas one matching attibute plus the count of matching attributes.

Rafal,

That isn't relational solution.

Oh, sh*t, the task was to design a RELATIONAL model...

Tobi,

Hat tip, that is a really nice solution.

You really shouldn't be solving this with a SQL database (unless you're using something like Postgis to represent this as a multidimensional space). This is really a multi-dimensional graph problem at it's heart.

Tobi,

Thinking about this, what about if I don't have just ranges, but direct matches?

Eye color = Red, for example.

Or a single quality with multiple values (Fav. Food: Heroes, Witches).

Maybe this isn't what you're looking for, but in most cases (and I'm not only talking about dating sites) what you want isn't "someone with the most exact matches" but "someone with the closest match to each of the attributes." Consider it a multi-dimensional map and you want the closest point.

If my dream girl is blonde with blue eyes and a size L breast, I'd probably rather have a blonde girl with bluish-green eyes and a size M breast (1 match, two very close attributes) than a blonde with blue eyes and no breast (2 matches, one very far attribute).

What is normally done is you give a weight and a desired value to each attribute (the weight acts somewhat like the opposite of a standard deviation). Then for each object you calculate the weighted distance from the object of your desires and you choose the top ones. How would you index that in a relational database?

Maybe you can separate the multidimensional map into sectors. A 3-headed beast with red eyes is sector 6485, and a blonde with a really big breast is sector 20316.

You'd have the following table

Name, Type, Eye color, Sector

Blondie, Human, Blue, 20316

Hydra, Hydra, Red, 6485

Than, you choose all the sectors that are close enough to the desired value (probably the exact one and one sector to each side so you'd have 3^(number of attributes) sectors), and only make an approximate calculation for each of the values in your narrowed-per-sector resultset. There are some more ways you can optimize the sector-choosing, and the calculation, but that's the main idea.

Ayende, according to the execution plan that I got with a few million test-data rows sql server does not use the predicate "mdq.ValueMin <= mq.Value and mdq.ValueMax >= mq.Value" in its index seeks. It should not do any harm to change this predicate to anything at all.

For multiple data types you could create multiple nullabe columns or multiple tables.

select p.Id, COUNT(*) from people p inner join qualities q on q.personid=p.id

where

(q.attribute = 'heads' and q.value >= '3' )

or (q.attribute = 'heads' and q.value <= '5' )

or (q.attribute = 'species' and q.value = 'Hydra' )

or (q.attribute = 'scales' and q.value = 'Yes' )

group by p.Id

order by p.Id desc

returns people and how many things they have in common.

Posting as AC because I'm suspecting something is horribly wrong with this solution if you didn't come up with it :-)

You could add a marker ro switch between range match and value match, e.g.

...

join MemberQualities mq on (mdq.QualityID = mq.QualityID and mdq.ValueMin <= mq.Value and mdq.ValueMax >= mq.Value and mq.IsRangeMatch = 1) or (mdq.Value = mq.Value and mq.IsRangeMatch = 0)

....

If you need multiple matches just have more than one "quality" for choices, e.g. a "favourite food 1" and a "favourite food 2", both of which have the same qualityID. Watch the number of matches though, if another member had red eyes and liked eating heroes and witches you'd get three matches, where you may be expecting two. Add the quality to the group by to help with this - the rowcount would then give you the number of matching qualities.

You can't have a fixed set of values like this:

mdq.ValueA = mq.ValueB or mdq.ValueB = mq.ValueB

Because you need to cross match A with B, not too bad if there are only two choices, but it soon gets ugly:

mdq.ValueA = mq.ValueA or mdq.ValueA = mq.ValueB

or mdq.ValueA = mq.ValueC or mdq.ValueB = mq.ValueA

or mdq.ValueB = mq.ValueB or mdq.ValueB = mq.ValueC

or mdq.ValueC = mq.ValueA or mdq.ValueC = mq.ValueB

or mdq.ValueC = mq.ValueC

nasty!

configurator, you could use my solution for this. In addition to the count(*) predicate, you add

sum(Value - MinValue) as Difference

Then you can calculate the average difference or other metrics of closeness.

Erik has the right idea as a starting point, but you'd have to use subqueries or temp tables instead of the join. It's not that hard to build the query dynamically. The hard part would be getting it to perform.

is the matching criteria relatively static or dynamic ?

i.e. - do I fill out what qualities I like on a search page or do I fill them out once in a long while in an account preferences page ?

Where am I going with this ? - if the search itself is relatively static, then you might want to precalculate matches and save/search those....

Yoni,

Assume that it is dynamic enough to cause problems

Proposes adjustment of Tobi's solution to allow for multiple values in both desired and actual member qualities. And I would treat single values as a range with same ValueMin and ValueMax values.

select mq.MemberID, count(mq.QualityID) as CountOfMatchingAttributes

from MemberDesiredQualities mdq

join MemberQualities mq

on mdq.QualityID = mq.QualityID

and (mdq.ValueMin between mq.ValueMin and mq.ValueMax

or mdq.ValueMax BETWEEN mq.ValueMin and mq.ValueMax)

where mdq.MemberID = 1000

group by mq.MemberID, mq.QualityID

order by mq.MemberID

Small correction in grouping logic:

select mq.MemberID, count(DISTINCT mq.QualityID) as CountOfMatchingAttributes

from MemberDesiredQualities mdq

join MemberQualities mq

on mdq.QualityID = mq.QualityID

and (mdq.ValueMin between mq.ValueMin and mq.ValueMax

or mdq.ValueMax BETWEEN mq.ValueMin and mq.ValueMax)

where mdq.MemberID = 1000

group by mq.MemberID

order by mq.MemberID

What about this?

Catalog : CatalogID, Prompt, Type, etc

Person : PersonID, FirstName, LastName, etc

Member : PersonID, MemberSince, Status, etc

PersonStringAttributes : PersonID, CatalogID, Value

PersonDateAttributes : PersonID, CatalogID, Value

PersonTextAttributes : PersonID, CatalogID, Value

DesiredStringAttributes : PersonID, CatalogID, MinValue, MaxValue

DesiredDateAttributes : PersonID, CatalogID, MinValue, MaxValue

DesiredTextAttributes : PersonID, CatalogID, MinValue, MaxValue

Now you have a few possible ways to return the resulting data. You can combine the separate asynch returns on the middle tier. You can have a view on top of your data structure.

...this is a transcript of our conversation to date...

2010/3/11 Joe Cincotta xxxx@spiralglobal.com

There would be 2 tables.

ID as GUID

other columns for the user

ID as GUID

UserID as GUID (FK)

Attribute as String

Value as String

My thought was that I would do a select where (attribute=x and value=y) or (attribute=z and value=a) etc etc for each attribute in the source profile. Then you would group by the result by the UserID - using an aggregate function to order by the most results on a UserID to the least. This would provide you the best matches first and the least further down.

I would have to spend more time than I have to give the precise SQL, but this at least gives my idea. It would use a fair amount of processing power performing an aggregate SQL function if you are talking about thousands of attributes.

On 12/03/2010, at 2:17 AM, Ayende Rahien xxxx@ayende.com wrote:

The problem is that this becomes expensive very quickly. You can't use indexing, for example.

2010/3/11 Joe Cincotta xxxx@pixolut.com

You could create some artificial indices. Like, for example - every time you 'save' a user profile you make a checksum. Create a checksum table which looks like

CHECKSUM

ID as Guid

UserID as Guid (foreign key)

Checksum as varchar or byte - could use a nice big crypto SHA hash as the checksum so there are no collisions.

When we do a save on the user profile we concat all attributes and values together and then hash them use a big (1024 bit?) SHA hash.

We can index this table easily and then exact match searches get waaaaay faster. One entry per user instead of per attribute.

You could also use a variation of this to cluster results - like

Do the above strategy but make several tables for the attribute clusters which are important. For example maybe everyone wants exact matches to 'gender, marital status, and age' - we make a checksum table for those attributes which gives us a shortlist for the more complex aggregate function.

The more 'checksum' tables the more funky you can get. You essentially can segment data and control performance of the search. The place you pay performance hit is on the create or update operations. This would be deemed acceptable.

J

On 12/03/2010, at 7:06 PM, Ayende Rahien xxxx@ayende.com wrote:

At which point, I am better off with a non relational option, it is simpler to follow, faster to program with and faster to execute.

On Fri, Mar 12, 2010 at 8:23 AM, Joe Cincotta xxxx@pixolut.com wrote:

If that's an option then, sweet. But you end up with other issues - do you use a non- relational data store? Does it provide the measured scalability of SQL? It's not just about the language you choose - it's about reliability. What would you suggest as an alternative to Sql?

J

Joe,

Yes, I do, and yes, it does.

But I can't really answer that question without having more information, such as what the actual need is.

...ende...

Ayende, my software is heavily using metade, or so called "client properties".

The real part of this is getting data validation and consistency if not defined.

But there is no major issue, just take a look at sharepoint, it's exactly the design principle used by moss.

Comment preview