The Tale of the Lazy Architect

So, there is an interesting debate in the comments of this post, and I thought that it might be a good time to talk again about the way I approach building and architecting systems.

Let me tell you a story about a system I worked on. It started as a Big Project for a Big Company, and pretty much snowballed, gaining more and more features and requirements as time went by. I started out as the architect and team lead for this project, and I still consider it to be one of the best projects that I have worked on, and the one that I hold up as the standard to compare others against.

Not that it didn’t have its problems. For one, I wasn’t able to put my foot down hard enough, and we used SSIS on this project. After that project, however, I made a simple decision: I am never going to touch SSIS again. You can’t pay me enough to do this (well, you can pay me to do migrations away from SSIS).

Anyway, I am getting sidetracked. I was on the project for 9 months, until its 1.0 release. At that point, we were over-delivering on the spec, we were on schedule and actually under budget. The codebase was around 130,000 lines of code and contained a huge amount of functionality. I was then moved to a horrible, nasty project that ended with me quitting after the first deliverable. The team lead was changed and a few new people were added. In the next 6 months, the code size doubled, velocity remained more or less fixed (and high), and the team released to production on schedule with very few issues.

I lost touch with the team for a while, but when I reconnected with them, they had switched the entire team again, this time bringing it fully in house. They were still working on it, and a code review that I did revealed no significant deterioration in the codebase. In fact, I was able to go in and look at pieces that were written years after I left the project, and follow the logic and behavior as if no time had passed and the team had never changed.

Oh, and just to make things even more interesting, there were no tests for the entire thing. Not a single one. I did mention that we did frequent releases and had a low number of bugs, right? Yes, I am painting a rosy picture, but I did say that I consider this to be the best project that I was on, now didn’t I?

The question arises: how did this happen? The thing that was responsible for this, more than anything else, was the overall architecture of the system.

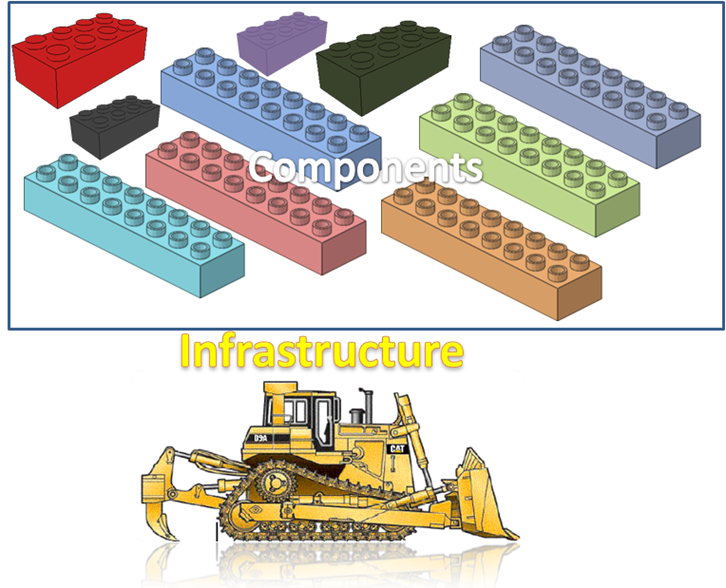

Broadly, it looks like this:

Except that this image is not to scale, here is an image that should give you a better idea about the scales involved:

That tiny red piece there at the bottom? That is the application infrastructure. Usually, we are talking about very few classes. In NH Prof’s case, for example, there are fewer than five classes that compose the infrastructure for the main functionality (listener, bus, event processor, and probably another one or two, if you care).

The entire idea was based around something like this:

We had provided a structure for the way that the application was built. The infrastructure was aware of this structure, enforced it and used it. The end result was that we ended up with a lot of “boxes”, for lack of a better word, where we could drop functionality, and the infrastructure would just pick it up. For example, adding a new page with all new functionality usually required only a few things:

- Create the physical page & markup

- Create the controller for the page

- Optional: Create associated page specific markup

- Optional: Create associated page specific Json WebService
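To make the "drop it in the box and it gets picked up" idea concrete, here is a minimal sketch in Python (the original project was .NET, so this is an illustration, not the project's code). It assumes a convention where any class inheriting from a base controller is registered under a page name derived from its class name. The names `AbstractController.registry`, `SearchController`, and `dispatch` are all hypothetical.

```python
from typing import Dict, Type


class AbstractController:
    """Hypothetical base class: every subclass registers itself on definition."""

    registry: Dict[str, Type["AbstractController"]] = {}

    def __init_subclass__(cls, **kwargs):
        super().__init_subclass__(**kwargs)
        # Assumed convention: "SearchController" serves the "search" page.
        name = cls.__name__
        if name.endswith("Controller"):
            name = name[: -len("Controller")]
        AbstractController.registry[name.lower()] = cls

    def handle(self) -> str:
        raise NotImplementedError


class SearchController(AbstractController):
    """Adding a page means adding a class like this one; nothing else changes."""

    def handle(self) -> str:
        return "search results"


def dispatch(page: str) -> str:
    """Infrastructure entry point: find the controller for a page and run it."""
    controller_cls = AbstractController.registry[page]
    return controller_cls().handle()
```

With this kind of convention, `dispatch("search")` finds `SearchController` without any registration code being edited, which is the "add code, rarely change code" property in miniature.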

If new functionality was required in the application core itself, it was usually segregated to one of a few functional areas (external integration, business services, notifications, data), and we had checklists for those as well. It was all wired up in such a way that the steps to get something working were:

- Create new class

- Start using new class (no new allowed, expose as ctor parameter)

- Done
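The "no new allowed, expose as ctor parameter" rule is constructor injection backed by a container. The sketch below is a deliberately tiny, hypothetical container written in Python for illustration (a reader comment further down mentions that the real project was wired through Windsor). It assumes dependencies are declared as type-annotated constructor parameters; `Container`, `AuditLog`, and `NotificationService` are made-up names.

```python
import inspect


class Container:
    """Hypothetical minimal container: builds objects from constructor type hints."""

    def __init__(self):
        self._instances = {}

    def resolve(self, cls):
        # Reuse an existing instance so every consumer shares the same component.
        if cls in self._instances:
            return self._instances[cls]
        params = inspect.signature(cls.__init__).parameters
        # Recursively resolve every annotated constructor parameter.
        deps = {
            name: self.resolve(param.annotation)
            for name, param in params.items()
            if name != "self" and param.annotation is not inspect.Parameter.empty
        }
        instance = cls(**deps)
        self._instances[cls] = instance
        return instance


class AuditLog:
    def __init__(self):
        self.entries = []

    def record(self, message: str) -> None:
        self.entries.append(message)


class NotificationService:
    # The new class never constructs AuditLog itself; it only asks for it.
    def __init__(self, log: AuditLog):
        self.log = log

    def notify(self, user: str) -> None:
        self.log.record(f"notified {user}")
```

Calling `Container().resolve(NotificationService)` builds the service and its `AuditLog` dependency without either class knowing how the other is created, so "start using new class" really is the last step.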

The end result was that within each feature box, if you weren’t aware of the underlying structure, it would look like a mess. There was no organization at the folder (or namespace) level, because that wasn’t how we thought about those things. What we had was a feature based organization, and a feature usually spanned several layers of the application and dealt with radically different parts.

Note: Today, I would probably discard this notion of layering and go with a slightly different organization pattern for the code, but at the time, I had a strict divide between different parts of the application.

Anyway, because we worked with it that way, the way that we actually approached source code organization was at the feature level. A feature usually spanned all parts of the application and had to be dealt with at all layers. So let us take the notion of tracking down a feature, and what composed it. We usually started from the outer shell (UI) and moved inward, by simply looking at the referenced . A key part of that was the ability to utilize R#’s capabilities for source code browsing.

I’ll talk a bit more about this in a future post, because it is not really important for this one.

What is important is that we had two very distinct attributes to the system:

- You rarely had to change code. Most of the time, you added code.

- There was a well defined structure to the application’s features.

The end result was that we produced a lot of code, all to the same pattern, more or less, and all of it was isolated from the other code at the same level.

It worked, through several years of development, several personnel changes and at least two complete team changes. I just checked, and the team has added new features since the last time I visited the site.

Comments

It seems that you are somehow using the Mediator pattern. From Wikipedia: Objects no longer communicate directly with each other, but instead communicate through the mediator. This reduces the dependencies between communicating objects, thereby lowering the coupling.

Personally I rely extensively on this pattern (like for example to simplify communication between the numerous VisualNDepend panels).

Notice that the wikipedia definition of Mediator comes with the word 'dependencies' and 'coupling'. 2 essential concepts IMHO.

As far as I understood, you care only for interaction/coupling between ‘lego boxes’ and the Mediator/Infrastructure. But coupling between ‘lego boxes’ themselves is not in your scope, it is out of control. This coupling can evolve wildly, with no care for cyclic dependencies and layering. There is no vertical nor horizontal association between features/technical layer and namespaces/folders. Maybe I misunderstood, am I right?

If (and only if) I understood well, I disagree on this assertion fundamentally. While I am a big fan of evolutionary design, I refuse no design at all. No design at all in the sense, there is no obvious partitioning of the code (horizontal partitioning by feature, vertical partitioning by technical layer).

Interestingly enough, I wrote a post 4 months ago about the benefit of combining evolutionary design with a Directed Acyclic Graph (DAG).

codebetter.com/.../...cyclic-componentization.aspx

The conclusion is that: keeping a code base levelized discards the need for most design decisions. Good design is implicitly and continuously maintained. Iterations after iterations, the design will evolve seamlessly toward something continuously flawless and unpredictable. Like in traditional building architecture, the structure won't collapse.

At that point you might ask what are the benefits of such DAG structures? The answer is Divide and Conquer, the translation of the Discourse of Method of Descartes applied to software design.

On divide:

Types are partitioned into relatively small groups of types named components. Artifacts to represent these groups are made obvious in the code itself (namespace/folder/assemblies…). The graph of static dependencies between classes is cohesive. There is no wild evolution of dependencies between groups of types: each static dependency has been well thought out, and a constant goal is to keep these static dependencies minimal and acyclic (this is my understanding of the low coupling/high cohesion buzzword).

On Conquer:

Related types are physically near. I don’t need any sophisticated tool like R# or NDepend to identify and isolate a group of related types. I just need to open a folder in Solution Explorer. Then I can work immediately on this group of types because it is isolated from the rest of the program. During this work (bug fix, refactoring, code review, development of automatic tests…) I will have to cope with incoming and outgoing static dependencies anyway. But the pain is reduced to a minimum because coupling between groups of types is minimal, acyclic and often abstract.

I would call it pub/sub vs. mediator, but yes, I would agree with that.

There is rarely, if ever, coupling between the lego pieces in the same box. When inter-component communication is needed, it tends to happen using the event aggregator pattern.

True, I don't really worry about this too much, simply because in this architectural style it tends not to happen.

The mess that I was referring to was that I don't bother to segregate different components in the same box. In other words, all controllers are going to be in the same place.

The one part that sucked was when I had to maintain one component state (in essence, the location of a search page with all its options) in all the other related components (to support a feature that would allow Back To Search). That was yucky, I'll admit.

Great post Ayende. A bit off the beaten path but still quite good. I've had similar experiences which I have come to refer to as the "boring architecture". These architectures where you can just drop functionality in boxes and make it work are out there and, from my experience, they work in favor of collective project success and not individual developer success (esteem, ego, etc). For this reason, these architectures don't always get a lot of exposure and aren't as popular with the alpha-geek developers.

As a big ice hockey fan, I think of your architecture as analogous to the Soviet system of ice hockey. The system produced the best hockey teams in the world for 20 or 30 years and was able to sustain across multiple generations of Soviet ice hockey players. It succeeded by providing a structure where you could drop a player into the system like one of the lego pieces you illustrated. The success of more individualistic styles of hockey (and software engineering) depends entirely upon the talent and makeup of the team at a particular point in time.

Analogous to your post yesterday on the NHibernate codebase where you say you use automated metrics as tracer bullets and focus on other metrics, team focused sports systems focus on macro-metrics (games won, gold medals, etc) and not micro-metrics (goals per player, shots on goal). Sounds like you made one of the most important architectural tradeoffs on your project, trading short-term sexiness for long-term stability. It's remarkable how difficult it is for most architects to make this concession.

Hey I'm glad that you decided to blog about the importance of system architecture, as I think its importance has been lost over the current fad of TDD architecture.

Isolated component development is also how I approach enterprise software development with my current open source 'web services stack' http://code.google.com/p/servicestack.

That is, to create a new web service you just need to create three classes: the request and response DTOs (using POCO DataContracts) and the 'Service Handler' that handles that request. That's it. There is no need to modify or touch any existing classes to integrate your new services, and the framework takes care of making it instantly discoverable and callable via SOAP 1.1, SOAP 1.2, JSON, XML, REST, etc.

The important part is the isolation. Each service encapsulates business logic, and you could potentially end up with hundreds of web services. It is important that your architecture never prohibits the addition of new or modification of existing services.

You shouldn't need to know about how any of the other services work nor should it be possible for your service to affect the behaviour of the existing ones. Unit testing isolated components is naturally easy as you will only need to mock the dependencies that service needs and not other services dependencies that might otherwise be coupled.

What's your beef with SSIS or is that a story for another day ?

SSIS

http://ayende.com/wiki/I%20Hate%20SSIS.ashx

Then how do you explain the very high coupling between types of NH? Please, notice that I use the word 'types' here, not 'namespaces'.

This very high coupling is a reality. Please compare these 2 dependency matrices:

The 1456 classes of NH: Each black cell represents 2 classes entangled (mutually using each other). At a glance, I would say that 40% of classes are entangled.

codebetter.com/.../TypesDSM.jpg

The 1399 classes of NDepend: The matrix is almost triangular. The few black cells around the diagonal show that we allow entangling inside a namespace.

codebetter.com/.../NDependTypesDSM.jpg

Comparing code bases is a delicate exercise because ego is involved. So please, forget about the ego and let's focus on facts. The only point here is to demonstrate that in the real world, if one doesn't care about dependencies, the code base becomes spaghetti (the word 'entangled' is more politically correct). The other point, essential to me, is that the opposite is true: by constantly caring for layering, you can fight spaghetti efficiently.

If I understand well, there is a Controllers namespace/assembly that contains controller classes only. Which means that you are segregating types by their layer: there is a controller layer. Then I have a question: do you require that controllers must sit (directly or indirectly) above the DB, or do you grant developers the freedom to invoke controllers from the DB?

Patrick,

This isn't a story about NHibernate.

When you wrote in the NH post that you'd write a post about your vision of structuring, I thought that you were applying this vision to NH. I misunderstood.

I am still concerned about your 2 assertions because they contradict everything I have seen in my programming life. IMHO there is no such thing as a code base whose structure cleans itself. That's like the bunch of electrical wires behind my desk: if I don't care for them, they'll end up entangled.

Also, I would like to know your opinion on that Controllers namespace/assembly I mentioned in my previous post.

Hi Ayende,

I also worked on the project you described above. I was brought in to maintain the application after your team had left, and I completely agree with everything you've said. I think BizTalk and SSIS were 90% of the pain, for me, at least. Just remembering the codebase, it definitely has your personal stamp all over it. Aside from the excellent architecture you had laid down, I think there were severe performance issues due to too many inefficient NHibernate criteria queries and front-end web service calls.

Haifeng,

Given a chance, I would completely rip out a lot of the data handling code, absolutely.

Much of the problem is that we tried to do futures there but it wasn't fully baked yet.

I really don't like the stuff that we did with Multi Criteria there. Future queries would just make a lot of that pain disappear.

Another issue that we had there that related to perf was caching and data organization.

I objected to starting to use caching upfront, and I think that even now, because we didn't provide that option in the infrastructure, caching is not used or is rarely used.

I know that quite some time was spent on making DB indexes to make things work faster.

Another problem was that we basically always queried the same model.

We probably should have gone with specific data stores for specific needs, but again, the SSIS & BizTalk crap was forced on us, and I wasn't able to make enough noise to make them go away.

Like all projects, I can probably do a good postmortem and find a lot of stuff to improve there.

But from an architecture point of view, it really solidified the way that I am thinking about building software.

Ayende,

I think I've read you write about these subjects before. I'm convinced that you're designing a better breed of applications using this model, and I've gotten a scent of what you mean by 'infrastructure' in the enhancements you helped make to Jeremy Miller's "Build Your Own Cab". However, you mostly lose me in the level of abstraction you use to discuss the infrastructure.

I would really love to see you write an example application...a ToDo List manager or some such...using these principles, and make it concrete for those of us that aren't already following your patterns. Alternatively, is this kind of thin infrastructure something that would be useful as a general purpose application foundation framework? Rhino.Application?

Thanks for your blog. I end up agreeing with almost everything you advocate--three months later when I finally understand it.

The architecture was most definitely a high-end solution. During my stay in Israel, we didn't even notice windsor.boo was wiring everything up until my last day. Things just seemed to mysteriously work with a very small amount of code :). Given the critical nature of this project, supporting thousands of customers and internal users, it clearly demonstrated that large enterprise applications can run very successfully using the Rhino suite of open source frameworks and utilities.

There are 2 possible paths from your post:

First path, you invented a new way to structure the code, something I've never heard about personally. As people said on your blog they find it efficient.

In that case you should develop your ideas in more depth and lay claim to the Turing award, because discarding the notion of components and modularity in software would be something ground-breaking.

Everything you are writing advocates against modularity, layering and componentization (on this we agree, right?). For a good reference to the modern conception of a component I am referring to, I would advise:

www.amazon.com/.../0201178885

The second possible path is that what you advocate leads to very highly coupled code (as I suspect, especially when looking at the code of NH, which you worked on, if I understood well).

Then the readers who are following your ideas just need to care for their infrastructure layer. You give them a solid reason to not componentize their code, not care for dependencies, not care for layering, not care for modularity. IMHO, doing so they will end up with the nightmare of spaghetti code. Due to the very high number of people who are following your ideas, the responsibility would be pretty high.

Let us not have this kind of discussion, please.

Hm?! My entire design philosophy is based on the notion of independent components.

The NH architectural decisions were usually made by the Java guys. We have made some modifications, but in general, we have stuck quite close to what they did.

There are two lines of conversation here, one of them about the NH codebase, the second about my approach to software.

They are not identical, although the latter was certainly influenced by the former.

Again, no.

I don't care for layering, but I do care for the rest.

I place a lot of emphasis on the notion of components that work together, but I don't bother to make this an in-your-face decision. It is a fallout of the way the infrastructure guides the application.

When someone gives me the impression of arguing against componentization, it is hard not to become ironic. But anyway, I apologize for this.

The word 'component' doesn't appear even once in your post.

Do you have any kind of component definition to refer to?

If you don't group types by namespace/folders/assemblies, are you materializing components somehow?

How can you define component boundaries if you don't care for dependencies except the ones with the infrastructure layer?

Can a class belong to 2 components?

It seems that in your approach components are made implicit by your philosophy. On a project where several developers are working with turnover, where design decisions are forgotten very quickly because no simple rules are there to enforce them, IMHO it is impossible that it doesn't end up as entangled/unmaintainable code.

"Can a class belong to 2 components?"

I think this is the key. What I understand from the article is that a feature F is built using new code most of the time and/or based on existing code already inside the codebase, and together these form the code for feature F, which IMHO you could see as a 'component'. Am I correct?

Otherwise indeed it's a bit of a blur what 'component' really means in the context of the article as the definition is already overloaded to death.

My question about the article is that by adding code most of the time, isn't it so that you repeat yourself a lot? Otherwise, re-use would be more appropriate instead of adding.

This is for the rest of the blog readers, Patrick and I are currently in a side channel communication, in which I am trying to get an accurate picture from NDepend about what is going on there. This is an indication of my relative inexperience with NDepend, not about NDepend's capabilties.

I'll probably answer in a separate post about this.

Oh, there is a controllers namespace. For that matter, in the specific project that I am talking about, we actually have a Controllers assembly.

I would probably collapse that into a single assembly now, though.

Controllers is a box. Inside we have well defined components (controllers) that interact with other components in other boxes in well defined ways.

Other than that, I don't really care.

I wouldn't call this a layer, though. Finders, for example, is another box, which is logically at the same elevation as Controllers. A layer assumes a hierarchy that doesn't really exist.

As to that, no, I don't require it.

And yes, there are actually scenarios in which a database action will call controller level code. That happened fairly rarely, but it was important for several data integrity scenarios.

Well, that is not quite true. What actually happened was that we pushed out a notification that the controllers were responsible for handling.
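As a rough illustration of pushing out a notification instead of calling the controller directly, here is a minimal event aggregator sketch in Python. It is not the project's code: `EventAggregator`, `OrderDeleted`, `OrderRepository`, and `OrderController` are hypothetical names, and the real system was .NET. The point is only that the data-level component knows about the event type, not about whoever handles it.

```python
from collections import defaultdict
from dataclasses import dataclass
from typing import Callable, DefaultDict, List


class EventAggregator:
    """Hypothetical in-process pub/sub hub keyed by event type."""

    def __init__(self) -> None:
        self._handlers: DefaultDict[type, List[Callable]] = defaultdict(list)

    def subscribe(self, event_type: type, handler: Callable) -> None:
        self._handlers[event_type].append(handler)

    def publish(self, event: object) -> None:
        # Copy the list so handlers may subscribe or publish while we iterate.
        for handler in list(self._handlers[type(event)]):
            handler(event)


@dataclass
class OrderDeleted:
    order_id: int


class OrderRepository:
    """Data-level component: it publishes, and never references a controller."""

    def __init__(self, events: EventAggregator) -> None:
        self._events = events

    def delete(self, order_id: int) -> None:
        # (the actual storage work would happen here)
        self._events.publish(OrderDeleted(order_id))


class OrderController:
    """Controller-level component: it reacts to the notification."""

    def __init__(self, events: EventAggregator) -> None:
        self.invalidated: List[int] = []
        events.subscribe(OrderDeleted, self.on_order_deleted)

    def on_order_deleted(self, event: OrderDeleted) -> None:
        self.invalidated.append(event.order_id)
```

Both classes depend only on the aggregator and the event type, so there is no static dependency from the repository to the controller even though controller code runs as a result of a data operation.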

Then let me rephrase: boxes are a set of one (or very few) types of components. Inside, the lego pieces that I referred to are all components.

Yes, type.

That is, you are a controller if you inherit from AbstractController, you are a service if you inherit from AbstractScriptService, etc.

No, but a component may be made up of several classes.

Um, you did notice the story that I am talking about here, right? The fact that there was turnover, and the fact that design decisions not only weren't forgotten, but were still in place years after the original team had left?

Are you familiar with the fallacy of reuse?

In the end, it leads to more complex code, and very brittle code at that. Change one thing and you touch everything.

About the feature stuff, I'll post a separate post about it.

With a codebase with several 100KLoC which is multiple years old, yes, I know :)

But there's an interesting point about re-use: if you build elements in such a way that they represent generic knowledge, (e.g. algorithms which are general, well known, or at least generic within the application domain), re-use is beneficial and should be promoted: re-use in that way also puts the pressure on writing code using general algorithms instead of self-invented ones. It's often easy to refactor a piece of code in a somewhat generic method so it's re-used by multiple methods. However, that's often short-sighted: it's often better to look at the algorithm behind the method and refactor it in such a way that it implements a generic variant of the algorithm so it can be re-used whenever you want and doesn't have to change (as the algorithm is general/generic). Example of this is object graphs vs. the usage of a graph structure + its algorithms.

Yes, that can be a possibility. The question then is, how do you conquer DRY ? As that seems to be out of the window then. (I'm again testing you about what you preach and what you do, if that wasn't clear. I'm not advocating DRY as a principle here, I just want to know why you preach DRY but at the same time advocate a way of organizing code which conflicts with DRY)

Ok, then you are making explicit components. That was not clear when you wrote:

I suppose that the frontier of a component is the interface presented by its base type, meaning that concrete impl shouldn't call each others across components boundaries.

Then we agree on the importance of explicitly defining components (me by grouping types, you by inheriting from a type). It seems that we still disagree on the importance of layering (i.e. having a directed acyclic graph of static dependencies between components) but actually when you wrote:

... it seems that the infrastructure notification trick to invoke the 'controller' layer from the DB is a way to statically decouple calls from the DB to controllers. Which means that, in the end, you are using some sort of abstraction to preserve layering and avoid unnatural things like a direct call from the DB to a controller. The abstractions being the infrastructure layer interfaces.

That can happen, but it is... icky. I would generally avoid that.

One scenario where this tends to happen is when you have a recursive component, though.

For example, you have a controller that is orchestrating a bunch of other controllers. See my next post for what I mean in more details.

I am using a lot of abstractions, yes. I just don't think that I am doing this for layering purposes.

Or, to be rather more exact, I suppose my main beef is with the UI->BL->DAL layering scheme, not with layering in general.

But I tend to think about software more like stacked boxes than a set of horizontal layers, anyway. Within the box, have fun.

Outside the box, you want to consider what you are doing carefully.

Then we agree :o)

Within the box, have fun => High cohesion inside a component

Outside the box, careful => Low coupling between components

Patrick,

Not quite, because a box is not a component. A box is a set of components.

@Frans

But as your argument is fallacious it comes off as trying to make yourself sound smart by employing yet another straw man attack.

This smacks of False Dichotomy, Fallacy Of The General Rule and Oversimplification.

False Dichotomy as you say that you either always obey DRY or you write bad code. Overgeneralizing code to avoid repetition can lead to issues just as copying and pasting code can.

Fallacy Of The General Rule as you imply that DRY is law and laws must never be broken under any circumstances. Stating that all chairs have 4 legs does not mean that a three legged stool is not a chair.

Oversimplification as you imply that Oren has stated "You must always enforce DRY!" and yet here he has stated that having similar code in two locations is OK, and that because of these mutually exclusive statements all SOLID programming principles must be wrong. As the saying goes, make things as simple as possible, but no simpler. It must be left to each programmer in the context of their program to determine if it is best to violate DRY or any other principle. But realizing the reasons for applying DRY can allow you to make that decision and understand the ramifications of it.

Interesting read, thank you.

I think that having some sample code and a little more details would help to better understand your intentions and approach to architecture. Pictures of lego blocks are cool, but do not provide a lot of insight, I guess. Because when you hear "no layering" it definitely raises a lot of questions that would be answered with simple example otherwise.

Plus it would alleviate the need to explain yourself to Frans and Patrick to the extent that is happening here (and give them less ammo for attacking you).

But I know that this sort of requests (code, code, code) is a common one in your posts.

@iLude

"But as your argument is fallacious it comes off as trying to make yourself sound smart by employing yet another straw man attack."

Oh dear.

Let me put it differently then: if you preach DRY and at another time suggest an architecture which on paper clearly conflicts with DRY (as code is almost never re-used, only added, so repeating yourself is a 100% chance), why are you preaching DRY as it conflicts with the architecture one should use? If you shouldn't repeat yourself, you shouldn't repeat yourself.

You make it sound like "Yes, DRY, but if you do it in the way I do it, it's ok".

Yes, you are smarter than me, iLude (wtf is wrong with posting with your real name?), because I don't see the logic in that.

@Frans

Principles such as SOLID and DRY are not laws that must be obeyed at all times lest you go to hell. They are principles that you should understand and apply where they make sense. I'm confused as to why that distinction is hard for you to grasp. Overgeneralizing to avoid copy and paste code can lead to issues, and in such cases it may be acceptable to violate DRY. The key is that you should understand and be able to defend why you did it.

This is a complete and utter misrepresentation of anything and everything said to this point. No one ever said that they do not reuse code. Oren's architecture is made up of components. Those components provide behavior. If you need a behavior, you use the component that provides it (see IOC). If a component does not provide the exact behavior you need, you create one that does. This can sometimes lead to a violation of DRY. But it makes the system easier to maintain because the components providing the behaviors have simple, focused code. I would assume that you have read Evans, but if not you are going to have to. Otherwise you are going to have a more difficult time understanding the big picture that Oren and others are discussing.

Please see en.wikipedia.org/.../Don%27t_repeat_yourself There is no contradiction, if you are facing one, check your premise! (props to Ayn Rand)

Frans, I apologize if I came off as a smart ass it was not my intention. As I stated I am aware of who you are and what you've done and I very much respect that. But often times your arguments do not place you in the best of light due to the fact that they are logically flawed.

Nothing really, and if you follow the link in my name and click About Me it's right there in the first sentence. That page also contains a bit about where iLude came from.

Um, Frans.

You know that I don't post under my real name, right?

"You know that I don't post under my real name, right?"

Yes, but your name is right there, on this same page.

"They are principles that you should understand and apply where they make sense. I'm confused as to why that distinction is hard for you to grasp. Overgeneralizing to avoid copy and paste code can lead to issues and in such cases it maybe acceptable to violate DRY. The key is that you should understand and be able to defend why you did it."

The point is: 'when they make sense' is subjective, and thus the principle can be tossed out the window. To avoid clones in your code is good because there is research to back that up. "When it makes sense" isn't something you can back up with any research other than your own gut feeling.

Let's be blunt, because you are too: I am fed up with gut-feeling driven software engineering principles. 'when it makes sense' is not something you can base your decisions on, because it's different for everybody. The worst part of that is that if you PROMOTE any of these gut-feeling driven principles as something you should practise, you are actually a fraud: no way in hell is anyone going to be able to apply the principle at the right moment, because 'when it makes sense' is not known. Yes, of course: when it makes sense for the person who promotes the principle, however not when that person is bashing other people's code.

"But often times your arguments do not place you in the best of light due to the fact that they are logically flawed."

If you judge my ability to reason then it's indeed true: you're smarter than me. And that's the last of it.

ps: if I have to click somewhere to read someone's name, I won't do that. Fill in your name in the 'Name' textbox, so people know who you are. Anyway, have fun promoting your handwaving science.

When you can give me the formulas for building robust software, I'll listen.

Software Engineering is about as much art as an engineering practice.

Worse, there are so many aspects that trying to follow a single set of rules is nearly impossible.

Worse yet is a ruleset that doesn't explicitly contain a context, unless you are careful to apply it correctly.

"When you can give me the formulas for building robust software, I'll listen. Software Engineering is about as much art as an engineering practice."

I wouldn't go so far as calling it art, but I'll say it's both science and experience, so trying to sell the idea that some set of principles has to be followed is bullshit, unless you do what you tell others: obey these principles. Don't take this lightly: there's no excuse for dropping any of these principles yourself if you preach to others to use them. The people who listen in and read about these principles think that by obeying them their software will become better. However, if the people who preach these principles also say that they are not always the best way to go, when is that exactly, and more importantly: how are the listeners going to judge for themselves when and when not to apply these principles?

So: either follow your own advice and do what you tell others, or stop telling others what to do.

Formulas for building robust software? Let's give it a try:

1. Think first. Doing is for later.

2. Analyse what you need. Try to find generic, proven algorithms and data structures which might be what you need, and build on top of them.

3. Prove your algorithms first. This is not that hard and doesn't require any code.

4. Implement the algorithms/data structures as designed. Then check whether you implemented the algorithm correctly. This is doable by simply looking at what you wrote. How you implement it is dictated by the algorithm, not by some blog post.

5. Test the implementation of what you wrote. As the algorithm is already proven, the tests prove the implementation. If you want, you can also start with this before 'implement algorithms' by implementing it using mocking and tests. That's not the point; the point is that the code is the end result, not the start.
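As a small illustration of the steps above, here is a sketch in Python using binary search, a generic and long-proven algorithm. The choice of algorithm, the function names and the test data are assumptions made for the example. The invariant in the docstring is the part that would be argued on paper first; the code follows from it, and the checks at the end only verify that the implementation matches the design.

```python
from typing import Optional, Sequence


def binary_search(items: Sequence[int], target: int) -> Optional[int]:
    """Return an index of target in sorted items, or None if absent.

    Invariant (argued before any code is written): if target is
    present, its index lies in the half-open range [lo, hi).
    The range shrinks on every iteration, so the loop terminates.
    """
    lo, hi = 0, len(items)
    while lo < hi:
        mid = (lo + hi) // 2
        if items[mid] < target:
            lo = mid + 1   # target, if present, is to the right of mid
        elif items[mid] > target:
            hi = mid       # target, if present, is to the left of mid
        else:
            return mid
    return None            # the range is empty, so target is absent


def check_implementation() -> None:
    """Tests that check the implementation against the design."""
    data = [1, 3, 5, 7, 9, 11]
    for index, value in enumerate(data):
        assert binary_search(data, value) == index
    for missing in (0, 4, 12):
        assert binary_search(data, missing) is None
    assert binary_search([], 1) is None


check_implementation()
```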

Think about it, in your head you're likely following these steps every day. Seasoned software engineers can do some of these steps very quickly without a lot of problems, as they either already know how to solve problems in a generic way, or know how to quickly implement any algorithm as they are well skilled in the code/platform used.

See, no principle needed. The problem with these principles is: they're telling the carpenter how to hold the hammer, that the screw's sharp side should be placed against the wood, not the other side. However, the really important question to ask is not how to drill the screw into the wood, but why the screw has to be there. That's answered by taking a couple of steps back and reasoning about why one would put a screw there.

Code without theoretical base is code you can toss away: it has no reason to be there. You don't learn that by applying a truckload of principles, you learn that by thinking about what you're doing and why.

You have your reasons why the code you wrote / ported is the way it is. I'm perfectly fine with that. What I find silly is that you go against proven scientific research. That shows you're closing yourself off to new knowledge. Instead, one should be open to new knowledge; you never know when it will be of any benefit. But I think you already knew that.

Agreed!

Context, context, context.

Yes, of course. The problem is that you can't really teach _thinking_. That is what we have experience for.

I think this goes back to the whole apprentice concept, though.

Well said Frans.

Speaking of False Dichotomy, I think this is something that Frans is trying to avoid for the general audience. Personally, I think both Frans and Oren know how to build solid software. The problem is that both of them have established a large following in the community. Without Frans's counterarguments, many of Oren's followers will blindly follow his advice without understanding the underlying context. Worse yet, they now have an established authority to refer to, hence the False Dichotomy.

I like Oren's short, to-the-point posts. I also enjoy Frans's counterarguments. They complement each other nicely and provide a more balanced view for the general audience.

@Frans

Database normalization is a form of DRY. It is well researched and has scientifically proven value. But there are times when it makes sense to denormalize your database. Understanding when it's OK to and not to is a matter of experience. Using that experience to solve a problem is referred to as Critical Thinking. And Critical Thinking has just as much research backing it as any other form of scientific research. If you would like to accuse me of PROMOTING critical thinking, well then yes I am.
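For anyone following along, a minimal sketch of the trade-off using Python's built-in sqlite3 module. The schema is made up for the example (the `customers`, `orders` and `order_report` tables are assumptions, not anything from a real system). It shows what denormalizing buys and costs: the reporting table needs no join, but it goes stale when the single source of truth changes.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
    -- Normalized: the customer name is stored exactly once.
    CREATE TABLE customers (id INTEGER PRIMARY KEY, name TEXT NOT NULL);
    CREATE TABLE orders (
        id INTEGER PRIMARY KEY,
        customer_id INTEGER NOT NULL REFERENCES customers(id),
        total REAL NOT NULL
    );
    -- Denormalized reporting copy: the name is repeated on every row.
    CREATE TABLE order_report (
        order_id INTEGER PRIMARY KEY,
        customer_name TEXT NOT NULL,
        total REAL NOT NULL
    );
    INSERT INTO customers VALUES (1, 'Acme');
    INSERT INTO orders VALUES (10, 1, 99.0), (11, 1, 25.0);
    INSERT INTO order_report VALUES (10, 'Acme', 99.0), (11, 'Acme', 25.0);
""")

# Renaming the customer is a single-row update in the normalized tables.
conn.execute("UPDATE customers SET name = 'Acme Corp' WHERE id = 1")

normalized_names = conn.execute(
    "SELECT DISTINCT c.name FROM orders o "
    "JOIN customers c ON c.id = o.customer_id"
).fetchall()

# The denormalized copy still holds the old name until someone remembers
# to update it as well. That is the price paid for join-free reads.
report_names = conn.execute(
    "SELECT DISTINCT customer_name FROM order_report"
).fetchall()

print(normalized_names)
print(report_names)
```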

Why is doing for later? This sounds suspiciously principled to me. By your logic any type of engineering prototype would be unnecessary as you have already proved your solution correct before you ever began.

The simple fact is very few software projects have a perfectly defined end goal. The design specification cannot be done entirely upfront. DDD and SOLID principles provide a set of tools for dealing with the inevitable design changes that will arise during the course of building an application.

No one doubts that the measurements are mathematically valid. But those measurements do not show anything useful in the case of NH. Namespace scoping is but one way of structuring code, but it is far from the only way. So stating that a code base is poorly structured because it fails metrics that measure structure using namespaces is a faulty conclusion if that code base is structured using some other form.
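A toy sketch in Python of why the level at which you measure matters. The type names and dependencies below are entirely made up for illustration; they are not NHibernate's real types and not the output of any real metrics tool. Here the type-level graph has no cycle, while the same edges collapsed to namespaces do form one.

```python
from typing import Dict, Set

# Hypothetical type-level dependencies: "Namespace.Type" -> types it uses.
TYPE_DEPS: Dict[str, Set[str]] = {
    "Engine.Loader": {"Mapping.Column"},
    "Mapping.Table": {"Engine.Session"},
    "Engine.Session": set(),
    "Mapping.Column": set(),
}


def namespace_of(type_name: str) -> str:
    return type_name.rsplit(".", 1)[0]


def lift_to_namespaces(type_deps: Dict[str, Set[str]]) -> Dict[str, Set[str]]:
    """Collapse type-level edges into namespace-level edges."""
    result: Dict[str, Set[str]] = {}
    for source, targets in type_deps.items():
        ns = namespace_of(source)
        result.setdefault(ns, set())
        for target in targets:
            target_ns = namespace_of(target)
            if target_ns != ns:
                result[ns].add(target_ns)
    return result


def has_cycle(graph: Dict[str, Set[str]]) -> bool:
    """Depth-first search for a cycle in a directed graph."""
    visiting: Set[str] = set()
    done: Set[str] = set()

    def visit(node: str) -> bool:
        if node in done:
            return False
        if node in visiting:
            return True  # reached a node that is still on the current path
        visiting.add(node)
        found = any(visit(nxt) for nxt in graph.get(node, ()))
        visiting.discard(node)
        done.add(node)
        return found

    return any(visit(node) for node in graph)


print(has_cycle(TYPE_DEPS))                      # type-level view
print(has_cycle(lift_to_namespaces(TYPE_DEPS)))  # namespace-level view
```

Neither view is wrong; they answer different questions, which is why the conclusion drawn from the namespace view depends on whether namespaces are how the code base is actually organized.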

I'm sorry to hear that. I have to believe you may find something of value there.

And if I put Michael Glenn there, does that tell you if I'm a programmer living in PA (which I am), or maybe I'm the programmer living in Canada, or perhaps the basketball coach.... It's a fairly common name. Oren uses Ayende on other sites. You taking so much issue with it is petty and distracts from the important discussions we are having.

Frans and Patrick need to depart to their own blogs.

Their voices here are so loud and distracting, others don't have a chance to make conversation about the actual topic.

f*ck it, I'll post it in 2 parts. Anyway, last posts on this matter.

"Yes, of course. The problem is that you can't really teach _thinking_. That is why we have experience for.

I think this goes back to the whole apprentice concept, though."

You can teach thinking, it just requires a break away from the vast amount of different things a lot of people try to tell others, which are all focussed around 'doing' instead of 'thinking': Every time someone tries to explain something and a piece of code is shown it's a missed chance: the code implies the thought process is already over.

"Database normalization is a form of DRY. It is well researched and has scientifically proven value. "

Database normalization is actually a result of applying a well thought-out process: projecting a higher level abstract entity model onto a relational database. This automatically results in 3rd normal form, and if you're clever 5th. As 3rd is in general the most economical, people don't dig much further.

"But there are times when it makes sense to denormalize your database. Understanding when its OK to and not to is a matter of experience. Using that experience to solve a problem is referred to as Critical Thinking. [...] If you would like to accuse me of PROMOTING critical thinking, well then yes I am."

I'm not accusing you of anything other than pointing at me with all kinds of name calling. Denormalization is indeed a process that's sometimes introduced, which could lead to redundant data and should be applied with care. However, you apparently misunderstood me: I'm not saying you should never abandon DRY, I'm saying that if you preach and live by a principle, that principle shouldn't be abandoned, as it's unclear to the people you preach to when THEY should take that same decision. If it's left to the person applying the principle, it's not a principle anymore, but a hint.

"Why is doing for later? This sounds suspiciously principled to me. By your logic any type of engineering prototype would be unnecessary as you have already proved your solution correct before you ever began. "

You seem to think that I promote waterfall of some sort. But I didn't talk about a whole system, did I? What I'm talking about is per feature or subpart of a feature if you will. And yes, doing is for later. You don't engineer software behind the keyboard.

part 2

"The simple fact is very few software projects have perfectly defined end goal. The design specification can not be done entirely upfront. DDD and SOLID principles prove a set of tools for dealing with the inevitable design changes that will arise during the course of building an application."

I didn't say anything about a whole system. What do you think, that I designed LLBLGen Pro first in a big fat document and then started writing all the different subsystems? No, not at all. I designed it per feature, like 'recursive saves'. You can perfectly prototype these kinds of things, with tests if you want.

Anyway, try what I talked about above. Not one system as a whole, but feature for feature. Document what you thought of PRIOR to writing code. It's very easy, and these are often small pieces of text. What you should document are the alternatives you've analysed (hence the analysis), which one you've chosen and _why_. That's it. After that, start coding the feature. I bet the coding is straightforward and quick. And it works, I can assure you. It works because you're facing the real problems directly. You can't mess your way through it to the next milestone, you have to actively think first, reason about what you're going to do. This is very time efficient because you avoid coding crap which won't work, and you also get more insight into the problem at hand. Not system wide waterfall mess, but in small chunks.

"... So stating that a code base is poorly structured because it fails metrics that measures structure using namespaces, is an faulty conclusion if that code base is structured using some other form."

Sure: say you have a codebase C and you can look at it in, say, 3 ways: X, Y and Z. Looking at it from X, it looks great. If you look at it from Y and Z, however, it looks more and more like a pile of goo that should be fixed pronto. The thing here is that the Z way of looking at it still suggests that a lot of classes are coupled. It might be logical from viewpoint X, but that doesn't make the coupling go away. What you are likely after is: is viewpoint Z that important, if X reveals that everything is OK? That's a question that's obviously subjective and has a million answers. Murphy's Law tells us that ignoring Z might be a gamble which could bite back at the worst possible moment, but that moment could be so far in the future that it's worth a shot. :)

iLude, the debate on this has now moved here and is focused on the NH types inter-dependencies, not the namespaces inter-dependencies.

ayende.com/.../...-ndash-skimming-the-surface.aspx

@Ian Thompson:

I totally agree with you.

@Frans and @Patrick:

Your constant arguing and attempts to attack Oren are getting very annoying - you are no longer constructive or convincing. You sound like kids in a kindergarten. You can go and bitch in your blogs and your audience will gladly listen to your whining - in the end, if people read you, then they for some reason think you are worth their time. I was going to read up on your blogs but changed my mind after all this annoying and repetitive noise here. So, please, let's wrap this arguing up and move on.
