Architecting Twitter

There are some interesting discussions about the way Twitter is architected. Frankly, I think that having routine outages over such a long period is a sign of either negligence or incompetence. The problem isn't that hard, for crying out loud.

With that in mind, I set out to try architecting such a system. As far as I am concerned, Twitter can be safely divided into two separate applications: the server side and the client side. The web UI, by the way, is considered part of the client.

The server responsibilities:

- Accept twits from the client. This includes:

  - Analyzing the message content (@foo should go to foo, #tag should be tagged, etc.)

  - Forwarding the message to all the followers of the particular user

- Answer queries about twits from people that a certain person is following

- Answer queries about a person

- It should scale

- Clients are assumed to be badly written
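
The message analysis item in the list above can be sketched in a few lines. This is a minimal sketch, not how Twitter does it: the `analyze` function, the two regular expressions, and the rule that names and tags are runs of letters, digits, or underscores are all assumptions made for illustration.

```python
import re

# Assumed syntax: @name and #tag are runs of letters, digits, or underscores.
# The lookbehind keeps email addresses such as a@b.com from counting as mentions.
MENTION = re.compile(r"(?<!\w)@(\w+)")
HASHTAG = re.compile(r"(?<!\w)#(\w+)")


def analyze(text):
    """Return the users a message addresses and the tags it carries."""
    return {
        "mentions": sorted({m.lower() for m in MENTION.findall(text)}),
        "tags": sorted({t.lower() for t in HASHTAG.findall(text)}),
    }
```

The result is what the write side would use to decide which extra timelines a message should land in.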

The client responsibilities:

- Display information to the user

- Pretty slicing & dicing of the data

Obviously, I have the benefit of knowing in advance that the system needs to scale, but let us leave this aside.

I am going to ignore the client; I don't care much about that bit. For the server, it turns out that we have a fairly simple way of handling things.

We will split it into several pieces, and deal with each of them independently. The major ones are read, write and analysis.

There isn't much need to deal with analysis. We can handle that on the backend, without really affecting the application, so we will start with processing writes.


Twitter, like most systems, is heavily skewed toward reads. In addition to that, we don't really need instant responsiveness. It is acceptable for a new message to take a few moments before it is visible to all the followers.

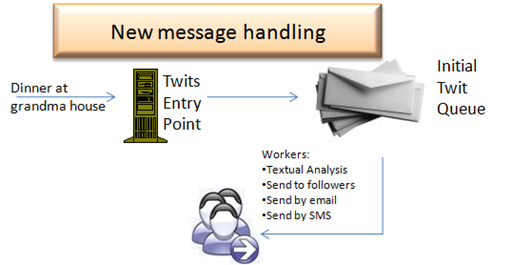

As such, the process of handling new twits is fairly simple. A gateway server will accept the new twit and place it in a queue. A set of worker servers will take the new twits out of the queue and start processing them.

There are several ways of doing that, either by distributing load by function or by simple round robin. Myself, I tend toward round robin unless there is a process that is significantly slower than the others, or that has extra requirements (sending email may require opening a port in the firewall, so it cannot run on just any machine, only on machines dedicated to it).
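
The gateway and worker split can be sketched with an in-process queue. This is only a sketch under stated assumptions: `run_workers` and `handle` are hypothetical names, threads stand in for separate worker servers, and a real deployment would use a durable message queue. Note that workers pulling from one shared queue is a competing-consumers arrangement rather than a strict round robin, although it spreads load in much the same way.

```python
import queue
import threading


def run_workers(messages, handle, worker_count=3):
    """Push messages through a queue drained by worker_count threads."""
    inbox = queue.Queue()

    def worker():
        while True:
            msg = inbox.get()
            if msg is None:          # sentinel: no more work for this worker
                inbox.task_done()
                return
            try:
                handle(msg)          # the slow part happens off the gateway
            finally:
                inbox.task_done()

    threads = [threading.Thread(target=worker) for _ in range(worker_count)]
    for t in threads:
        t.start()
    for msg in messages:             # the gateway only enqueues and returns
        inbox.put(msg)
    for _ in threads:                # one sentinel per worker
        inbox.put(None)
    inbox.join()
    for t in threads:
        t.join()
```

Because the gateway does nothing but enqueue, a slow worker delays delivery without making the client wait.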

The process of handling a twit is fairly straightforward. As I mentioned, we are heavily skewed toward reads, so it is worth taking more time when processing a write to make sure that a read is as simple as possible.


This means that our model should support the following qualities:

- Simple - Reading the timeline should involve no joins and no complexity whatsoever. Preferably, it should involve a query that uses a clustered index and that is it.

- Cacheable - There should be as few factors as possible affecting the data that we need to handle.

- Shardable - The ability to split the work into multiple databases would mean that we will be able to scale out very easily.

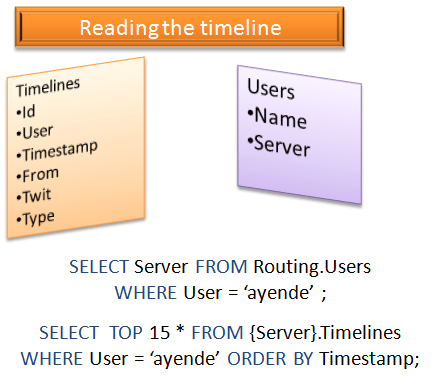

As such, the model on the right seems like a good one (obviously this is heavily oversimplified, but it works as an example).

This means that in order to display the timeline for a particular user, we will need to perform exactly two queries. The first goes to the routing database, to find out which server this user's data is sitting on. The second performs a trivial select on the timelines table.
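
The two-query read can be sketched with SQLite standing in for the real databases. Everything named here is an assumption for illustration: the `routes` and `timelines` tables, their columns, the shard names, and `read_timeline`. The `WITHOUT ROWID` clause makes SQLite store rows in primary key order, which is the closest it gets to a clustered index.

```python
import sqlite3

# Hypothetical layout: one routing database plus one database per shard.
routing = sqlite3.connect(":memory:")
routing.execute("CREATE TABLE routes (user_id TEXT PRIMARY KEY, shard TEXT)")

shards = {"db1": sqlite3.connect(":memory:"), "db2": sqlite3.connect(":memory:")}
for db in shards.values():
    # owner leads the primary key, so one user's timeline is a single range scan.
    db.execute(
        "CREATE TABLE timelines ("
        " owner TEXT, posted_at INTEGER, author TEXT, body TEXT,"
        " PRIMARY KEY (owner, posted_at, author)) WITHOUT ROWID"
    )


def read_timeline(user_id, limit=20):
    """Return (author, body) rows for user_id, newest first."""
    # Query 1: ask the routing database which shard holds this user.
    row = routing.execute(
        "SELECT shard FROM routes WHERE user_id = ?", (user_id,)
    ).fetchone()
    if row is None:
        return []
    # Query 2: a trivial select on that shard, with no joins.
    return shards[row[0]].execute(
        "SELECT author, body FROM timelines"
        " WHERE owner = ? ORDER BY posted_at DESC LIMIT ?",
        (user_id, limit),
    ).fetchall()
```

The routing lookup is also an obvious candidate for caching, since a user's shard rarely changes.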

What this means, in turn, is that the process for writing a new twit can be described using the following piece of code:

    followers = GetFollowersFor(msg.Author)
    followers.UnionWith(GetRepliesToIn(msg.Text))
    for follower in followers:
        DirectPublish(follower, msg)

DirectPublish would simply locate the appropriate server and insert a new message, that is all.
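
A runnable version of that write path might look like the following. It is a sketch under loud assumptions: plain dictionaries stand in for the follower store, the routing table, and the shard databases, and every name in it (`FOLLOWERS`, `ROUTES`, `SHARDS`, `publish`, and the helper functions) is hypothetical. Mentions of unknown users are silently skipped, which is a choice made here and not something the post specifies.

```python
import re
from collections import defaultdict

# Hypothetical in-memory stand-ins for the follower store, routing table, and shards.
FOLLOWERS = {"ayende": {"tobin", "casey"}}
ROUTES = {"tobin": "db1", "casey": "db2", "mike": "db1"}
SHARDS = defaultdict(lambda: defaultdict(list))   # shard -> owner -> messages


def get_followers_for(author):
    return set(FOLLOWERS.get(author, ()))


def get_replies_to_in(text):
    # Users addressed with @name inside the message body.
    return {name.lower() for name in re.findall(r"(?<!\w)@(\w+)", text)}


def direct_publish(follower, msg):
    shard = ROUTES.get(follower)
    if shard is None:                  # unknown user: nothing to write
        return
    SHARDS[shard][follower].append(msg)


def publish(author, text):
    """Write one copy of the message into every recipient's timeline."""
    recipients = get_followers_for(author) | get_replies_to_in(text)
    msg = (author, text)
    for follower in recipients:
        direct_publish(follower, msg)
    return recipients
```

Each write touches exactly one row on one shard, which is why the loop needs no coordination between recipients.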

If we take a pathological case of someone who has 10,000 followers, what this means is that each time this person publishes a new twit, the writer section of the application will have to go and write the message 10,000 times. Ridiculous, isn't it?

Not really. This model allows us to keep contention very low, since we don't need any complex coordination. It scales easily by adding servers as needed, and even if several such pathological users suddenly start sending a high volume of messages, the application as a whole is not really affected. That is quite aside from the fact that inserting 10,000 rows is not really a big deal, especially since we are splitting the work across several servers.

But if it really bothered me, I would designate separate machines for handling such high volume users. That would ensure that regular traffic on the site can still flow while some machine in the back of the data center is slowly processing the big volume. In fact, I would probably decide that it is worth my time to use bulk insert techniques for those users.
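
One way to picture the bulk insert idea is to group a large fan-out by destination shard first, so each shard receives one batch instead of thousands of single-row writes. The function below is a hypothetical sketch: `plan_bulk_publish` and the shape of the `routes` mapping are assumptions, and the actual write is left out.

```python
from collections import defaultdict


def plan_bulk_publish(followers, routes, msg):
    """Group one message's fan-out into a single batch of rows per shard."""
    batches = defaultdict(list)
    for follower in followers:
        shard = routes.get(follower)
        if shard is not None:          # skip followers with no known shard
            batches[shard].append((follower, msg))
    return dict(batches)
```

With 10,000 followers spread evenly over four shards, this yields four batches of 2,500 rows each. Each batch could then be sent in one round trip, for example through a DB-API `executemany` call or the database's own bulk load facility.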

All of that said, we now have a system where the database end is trivially simple (probably because the problem, as shown in this post, is trivial; I am pretty sure that the real world is more complex, but never mind that). Scaling out the writing part is a matter of adding more workers to process more messages. Scaling out the database is a matter of putting more boxes in the data center, nothing truly complex. Scaling the read portion is a good place for judicious use of caching, and the model lends itself well to that.

Now, feel free to tell me where I am off base...

Comments

Personally, I'm just happy it's a free service, with no ads. The fact that it goes down all the time is neither here nor there to me. Like blogs, the information is asynchronous and disparate. I can see why others are frustrated though.

That said, a service that doesn't crash would be an order of magnitude nicer, and it's not every day that free advice gets given out, so hopefully the guys at Twitter are able to take advantage of your point of view.

This is a really informative post, it's great to see your perspective on it. I'm looking forward to seeing responses to this.

2 implementation questions if I may, as I've not done much distributed stuff...

Do you have a favourite way of implementing sharding?

Would you use linked databases and actually use the server name in the sql, or would clients get the appropriate server name from the routing server and make a separate connection?

Cheers

I like your architecture. It's hard to make too many assumptions about twitter itself, but this is a nice design for a service like twitter.

Given the large number of users, with each user having essentially a different timeline to see, instead of caching (which would be very fragmented - there's probably not a lot of "reusable" stuff to cache, is there?) I'd be experimenting with pre-computing the content. Someone tweets? Don't write it to a database, just append the HTML there and then to the user's HTML file. Got web services? Append it to the XML file your web service is returning. Then you don't wear the overhead of database inserts at all, and you don't need indexes.

To the point.

de-normalising the DB for the actual use (reads vs. writes) is 100% correct.

Doing async computations while keeping http-response quick is 100% correct (when a "processing" response is expected)

I suspect Twitter is a classic case of letting the technology drive the business ... someone with Rails experience had a good idea, they implemented it in the technology they knew, they got funding, they expanded it the way they knew how to ... and then they got where they are ...

Twitter is a pretty simple system, it isn't complicated, it doesn't do very much, and as your post clearly shows, a few hours of thought could probably re-architect most of the problems out ... but then it seems the guys at Twitter are tied to a technology they picked, and the approach that technology gives them, or surely they would have rewritten most of it by now ...

@Casey

I wonder if they just mis-judged their scaling efforts and used memcached + replication instead of message queues + sharding? Rails would work well with either AFAIK.

@Tobin

I have no idea what they did use, but I suspect that they don't have a well architected solution, but a big ball of mud, otherwise they would have solved their problems long ago - whether by using queues+shards under rails, or by using some other technological solution ... technology is not important, only the architecture is.

A good architecture would have allowed them to quickly adapt to their new found success, a bad architecture will submerge them deeper and deeper in the mud as they grow.

As Oren points out, even with some fairly simple thought applied, a large amount of the problems Twitter have would go away.

Tobin,

For myself, I would want to use the lowest tech solution possible. In this case, the data is naturally sharded, and there is no need for cross DB operations.

This means that I would simply create a new connection and work from there.

The less I have to rely on the infrastructure, the better. That reduces the amount of unusual stuff going on, reduces the amount of configuration needed, and ensures that I don't have to have a homogenous mix of servers.

@Ayende

Cheers for the answers!

@Casey

Yeah, looks like they put in lots of instrumentation, then added memcaching and replication. From what I've read, this is often a first port of call for Rails scalability. However, I think you're right, they focused on scaling the wrong architecture rather than finding a better one.

Great post...

What are the other routing options you considered, DNS, algorithm? Would be great if Udi would chime in on the messaging bits.

Please do more on Architecting.

Mike,

I don't think that I follow. What routing options are you talking about?

1) Have you tried some simple benchmarks to measure the performance of the architecture? I think the pinch would be felt with real numbers. What would the performance be if you doubled the users and then doubled them again?

2) Slightly off topic -- what do you use to create your diagrams?

Ayende,

DNS - username.twitter.com = server1.twitter.com

Algorithm - A routing table based on characters in the name.

1/ This architecture should be usable for just about any number of users. The only scaling out approach would be to introduce additional DB servers and additional writers. There isn't any single place with load.

2/ Powerpoint & Paint

Mike,

I don't think that either should be used.

There is a sharding table, which maps a user to a particular DB, and that is all.

You allocate users to databases in round robin fashion, with a cap on how much you allow per DB, perhaps, but that is all.
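
As an illustration of that allocation rule, here is a minimal sketch. The `ShardAllocator` class, its in-memory `routes` dictionary (standing in for the sharding table), and the behavior when every database is full are all assumptions added for the example.

```python
import itertools


class ShardAllocator:
    """Assign users to databases round robin, with a per-database cap."""

    def __init__(self, shard_names, cap):
        self.cap = cap
        self.counts = {name: 0 for name in shard_names}
        self.routes = {}                       # the sharding table: user -> DB
        self._cycle = itertools.cycle(list(shard_names))

    def assign(self, user_id):
        if user_id in self.routes:             # existing users never move
            return self.routes[user_id]
        for _ in range(len(self.counts)):      # try each database at most once
            shard = next(self._cycle)
            if self.counts[shard] < self.cap:
                self.counts[shard] += 1
                self.routes[user_id] = shard
                return shard
        raise RuntimeError("all databases are at capacity; add another one")
```

Adding capacity then means registering one more database; existing users stay where they are, so nothing has to be rebalanced.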

Hi All,

Interestingly, no-one is considering aspects other than technology and architecture. Surely, architecture and technology are very important, but real world projects are funded by real world money (consider money somewhat loosely - personal time, fame, personal satisfaction) and developed by developers who have different skills and abilities - and deadlines.

All projects I worked on had serious constraints in the mentioned "resources". You are constrained by the technologies known by your developers, which again affects architecture, since if you want to use something that is not "common" knowledge you need training etc. etc. Then once you start, you develop a prototype, and managers say: oh, you already have an almost ready product. We always agree that a prototype is a prototype and should be discarded, but at the end of the day it is not you who is going to decide on that but someone who is not really in technology.

Then you enter production and half of the team is heavy on support, and the other half is reassigned to other projects and we start all over again.

Sorry if this wasn't technical enough, just wanted to point out that there are other things that are at least as important as architecture whether you like it or not.

Btw, the article is cool, and as people asked already, why not write more on architecture?

Cheers,

Mihailo

Mihailo,

Yes, there are constraints to that. But when you are faced with routine downtimes, this is bad. This means that your priority should be to fix this, now.

All of that said, the outlined architecture isn't really hard to build.

About architecture, I write on that when I find interesting things to write about.

Feel free to send me topics.

What do you guys think of using Amazon SimpleDB and Amazon's queueing service (for building something like Twitter)?

Regarding tagging a message, how would you implement that in such a simple setup? One point you made above was that no joins should happen when reading the timeline. Would you put the tags for a message in a varchar field and perform a text search on that field or use a relationship between message and tag? Surely a properly indexed join would be faster than the comparison on a text field.

James,

You do that as part of the processing of an incoming message.

Really good stuff, nice to see a discussion of a real world example.

On the negligence/incompetence angle, if they truly had only 3 engineers and one operations staff in late 2007 (as reported) then I don't find it that surprising that they weren't able to get the time to rethink their architecture.

Dare Obasanjo also had an interesting post on this topic: http://www.google.com/reader/view/#search/twitter/2/feed%2Fhttp%3A%2F%2Ffeeds.feedburner.com%2FCarnage4life
