Writing high performance code despite C#

Consider the following C code snippet:

This code cannot be written in C#. Why? Because you can’t use ‘+’ on bool, and you can’t cast bools. So I wrote this code, instead:

And then I changed it to be this code:

Can you tell why I did that? And what is the original code trying to do?

For that matter (and I’m honestly asking here), how would you write this code in C# to get the best performance?

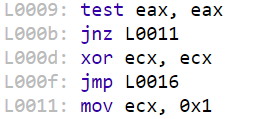

Hint:

Comments

I suppose I would use InlineIL.Fody.

What is the expected distribution of

dw?To make it look like C (but like C code, we always run all tests):

var symbol = (dw > 0x000000FF ? 1 : 0) + (dw > 0x0000FFFF ? 1 : 0) + (dw > 0x00FFFFFF ? 1 : 0);

ocoanet ,Interesting solution. I wouldn't have thought about going that route.

svick,Most of the time, pretty small.

Simon,The issue isn't how it looks, the problem is how it _works_.In the C version, there are no branches, in the C# version, there are a bunch of them.In general, for high perf code, you want to have branch free code, since that allows the CPU a lot more freedom with how the code is executed.

At the IL level, bool is an integer type, you can even use bool for an enum underlying type. The standard does not specify that true is 1, it only states that false is 0. Yet, I suppose that the machine code generated by my sample code is similar to the machine code generated by your C code snippet.

You can't cast a bool to an uint directly, but you can use the FieldOffsetAttribute (and StructLayoutAttribute) to force a bool field to overlap with an uint field. Now you can set the bool fields and then add up the uint fields.

Zero branches, but I don't know the performance characteristics.

Also keep in mind that using this struct the other way around will not allow you to convert a random number into a 1 or 0:

First of all, your altered version is interesting - if counter-intuitive. I looked at the JITed code and it is really the most compact one can possibly achieve.

So you speculatively assign the result eeach time, and with 1/2 probability, that's already it and we're done. Any other way to put it seems to produce more jumps.

For you interest, just to have a proof-of-concept, here is an algorithm without branching. I doubt it will be faster than the branching one because it is quite heavier, but in some funny scenarios it might rather help to not disturb the prediction and cache lines. The example is unit-tested and should really be correct.

And this yields

There's a new shiny intrinsic for this in .NET Core 3:

Lzcnt.LeadingZeroCountalong with a wrapper:BitOperations.LeadingZeroCount.Here's an implementation I came up with:

This is very efficient compared to other solutions so far.

I wrote benchmarks for all solutions suggested here, and here are the results:

The disassembly of the

Lzcntbenchmark method is:Lucas,I'm really impressed by this. That is an awesome approach to solving this cleanly and efficently.

Comment preview