The importance of redundancy

This Sunday, our monitoring systems sent us an urgent ping. A customer instance in RavenDB Cloud was unresponsive. Looking into the issue, we found the following:

- RavenDB on that instance was inaccessible from the outside world.

- Azure’s metrics reported that the system was fine.

- Admins were unable to SSH into the machine.

- We were unable to run commands on the machine.

The instance was part of a cluster, and the cluster had automatically failed over all work to the remaining active nodes. I don’t believe that the customer using this cluster was even aware that this happened.

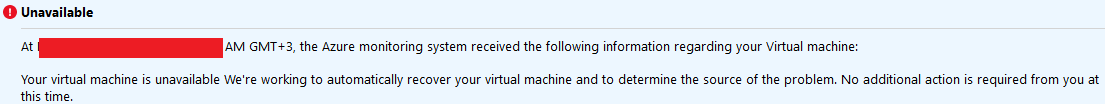

We reached out to Azure support, and we got the following messages:

- There was a platform issue which has been resolved.

- Engineers determined that instances of a backend service became unhealthy, preventing requests from completing. Engineers determined that the issue was self-healed by the Azure platform.

There was no general Azure outage on Sunday; this is just the normal state of things. Some backend thingamajig broke, and an instance went down. You might look at 99.999% availability numbers and find them impressive, but remember that they apply to the system as a whole, not to each individual instance.

For one of our instances, we had several hours of downtime. It all depends on your point of view. At scale, failure is not something that you can avoid; it is absolutely going to happen, and you need to manage it.
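To make the “whole system” point concrete, here is a rough back-of-the-envelope sketch. It assumes a 365-day year and a hypothetical fleet of 1,000 instances (not an actual RavenDB Cloud figure), and the function name is illustrative only. A 99.999% target allows only about five minutes of downtime per instance per year, but summed across the fleet the same target permits dozens of instance-hours, easily enough to absorb one instance being down for several hours.

```python
# Back-of-the-envelope downtime budget for an availability target.
# Assumptions: a 365-day year and a hypothetical 1,000-instance fleet.
MINUTES_PER_YEAR = 365 * 24 * 60  # 525,600 minutes, ignoring leap years


def downtime_budget_minutes(availability: float, instances: int = 1) -> float:
    """Total instance-minutes of downtime per year allowed by `availability`."""
    return (1.0 - availability) * MINUTES_PER_YEAR * instances


single = downtime_budget_minutes(0.99999)                   # one instance
fleet = downtime_budget_minutes(0.99999, instances=1_000)   # whole fleet

print(f"one instance: {single:.2f} minutes/year")
print(f"1,000-instance fleet: {fleet / 60:.1f} instance-hours/year")
```

In other words, the five nines hold for the fleet even if all of that budget lands on a single unlucky machine.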

In this case, RavenDB Cloud isn’t reliant on a single instance. Instead, a RavenDB cluster inside of RavenDB Cloud is distributed among multiple availability zones (or availability sets on Azure, if zones aren’t available in the region) to maximize survivability of the nodes in the cluster. And with the way RavenDB is designed, even a single node being up can mask most of the failures.
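A minimal sketch of why spreading nodes across zones helps, under the simplifying assumption that nodes in different zones fail independently. The round-robin placement, the node and zone names, and the 0.1% per-node unavailability figure are all hypothetical; this is not how RavenDB Cloud actually assigns nodes.

```python
from itertools import cycle


def place_nodes(nodes: list[str], zones: list[str]) -> dict[str, str]:
    """Hypothetical round-robin placement: node i goes to zone i mod len(zones)."""
    return {node: zone for node, zone in zip(nodes, cycle(zones))}


def prob_any_node_up(per_node_unavailability: float, node_count: int) -> float:
    """Chance that at least one node is up, ASSUMING independent failures."""
    # The cluster is fully down only if every node is down at the same time.
    return 1.0 - per_node_unavailability ** node_count


placement = place_nodes(["A", "B", "C"], ["zone-1", "zone-2", "zone-3"])
print(placement)

# Hypothetical: each node is unavailable 0.1% of the time.
print(prob_any_node_up(0.001, 1))  # a single node
print(prob_any_node_up(0.001, 3))  # three nodes in separate zones
```

Real failures are often correlated (a shared backend service, as in this incident, or a region-wide problem), so the independence assumption gives an optimistic bound; putting nodes in separate zones is an attempt to make it hold more closely.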

To be honest, I didn’t expect to run into such issues so soon after launching RavenDB Cloud, but we did plan for it, and I’m happy to say that in this instance, everything worked. Even under an unpredictable failure scenario, everything kept on ticking.

Usually when I write such posts, I’m doing a postmortem to analyze what we did wrong. In this case, I wanted to highlight the same process that we run when stuff actually goes the way we expect it to.

This makes me very happy.

Comments

Reminds me of Rachel-by-the-bay's “Your nines are not my nines” from last week.