RavenDB 4.1 Features: Cluster wide ACID transactions

One of the major features coming up in RavenDB 4.1 is the ability to do a cluster wide transaction. Up until this point, RavenDB’s transactions were applied at each node individually, and then sent over to the rest of the cluster. This follows the distributed model outlined in the Dynamo paper. In other words, writes are important, so always accept them. This works great for most scenarios, but there are a few cases where the user might wish to explicitly choose consistency over availability. RavenDB 4.1 brings this to the table in what I consider to be a very natural manner.

This feature builds on the already existing compare exchange feature in RavenDB 4.0. The idea is simple. You can package a set of changes to documents and send them to the cluster. This set of changes will be applied to all the cluster nodes (in an atomic fashion) if they have been accepted by a majority of the nodes in the cluster. Otherwise, you’ll get an error and the changes will never be applied.
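To make the majority rule concrete, here is a minimal sketch in Python. It is a toy in-memory model and not the RavenDB client API: `ToyCluster`, `Node`, `submit` and `catch_up` are hypothetical names, and the way an offline node replays accepted transactions when it comes back is my simplification of what a consensus log would do.

```python
from dataclasses import dataclass, field


@dataclass
class Node:
    """One cluster member in a toy model (hypothetical, not a RavenDB type)."""
    name: str
    online: bool = True
    applied: int = 0                      # how many log entries this node has applied
    documents: dict = field(default_factory=dict)


class ClusterUnavailable(Exception):
    """Raised when fewer than a majority of the nodes can accept the transaction."""


class ToyCluster:
    def __init__(self, names):
        self.nodes = [Node(name) for name in names]
        self.log = []                     # accepted transactions, in order

    def submit(self, changes):
        """changes maps a document id to its new body, or to None for a delete."""
        reachable = sum(1 for node in self.nodes if node.online)
        majority = len(self.nodes) // 2 + 1
        if reachable < majority:
            # Rejected: nothing is logged, so no node will ever apply it.
            raise ClusterUnavailable(
                f"{reachable} of {len(self.nodes)} nodes reachable, need {majority}")
        self.log.append(dict(changes))
        for node in self.nodes:
            if node.online:
                self.catch_up(node)

    def catch_up(self, node):
        """Apply every accepted transaction the node has not seen yet, in order."""
        for changes in self.log[node.applied:]:
            for doc_id, body in changes.items():
                if body is None:
                    node.documents.pop(doc_id, None)
                else:
                    node.documents[doc_id] = body
            node.applied += 1


cluster = ToyCluster(["A", "B", "C"])
cluster.nodes[2].online = False
cluster.submit({"users/1": {"Name": "Arava"}})    # 2 of 3 nodes is still a majority
cluster.nodes[1].online = False
try:
    cluster.submit({"users/2": {"Name": "Oscar"}})  # only 1 of 3 nodes is reachable
except ClusterUnavailable as error:
    print("rejected:", error)
```

The point of the sketch is the ordering of the checks: the majority test happens before anything is written, so a rejected transaction leaves no trace on any node.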

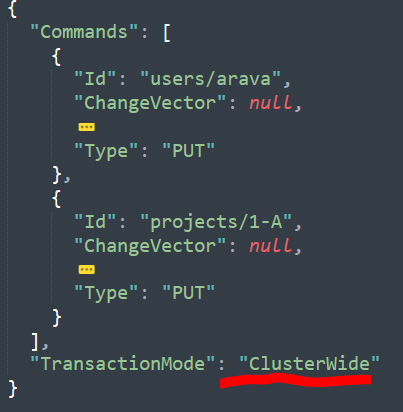

Here is the command that is sent to the server.

RavenDB ensures that this transaction will only be applied after a majority confirmation. So far, that is nice, but you could do pretty much the same thing with write assurance, a feature RavenDB has had for over five years. Where it gets interesting is the fact that you can make the operations in the transaction conditional. They will not be executed unless a certain (cluster wide) state has an expected value.

Remember that I said that cluster wide transactions build upon the compare exchange feature? Let’s see what we can do here. What happens if we want to state that a user’s name must be unique, cluster wide? Previously, we had the unique constraints bundle, but that didn’t work so well in a cluster and was removed in 4.0. Compare exchange was meant to replace it, but it was hard to use it with document modifications, because you didn’t have a single transaction boundary. Well, now you do.

Let’s see what I mean by this:

As you can see, we have a new command there: “ClusterTransaction.CreateCompareExchangeValue”. This is adding another command to the transaction. A compare exchange command. In this case, we are saying that we want to create a new value named “usernames/Arava” and set its value to the document id.

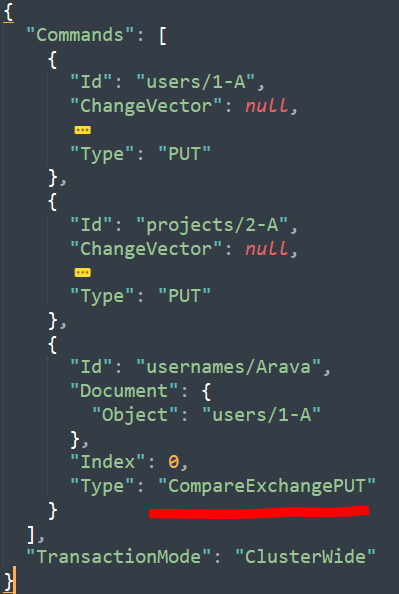

Here is the command that is sent to the server:

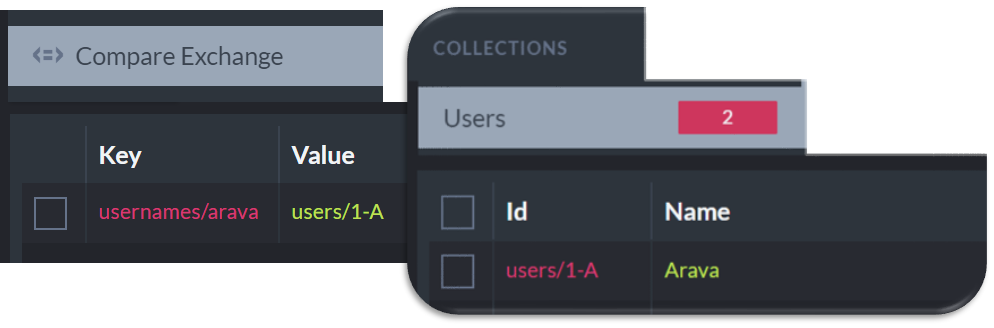

At this point, the server will accept this transaction and run it through the cluster. If a majority of the nodes are available, it will be accepted. This is just like before. The key here is that we are going to run all the compare exchange commands first. Here is the end result of this code:

We add both the compare exchange and the document (and the project document not shown) here as a single operation.

Here is the kicker. What happens if we run this code again?

You’ll get the following error:

Raven.Client.Exceptions.ConcurrencyException: Failed to execute cluster transaction due to the following issues: Concurrency check failed for putting the key 'usernames/Arava'. Requested index: 0, actual index: 1243

Nothing is applied and the transaction is rolled back.
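The check-then-apply behavior can be sketched in a few lines of Python. This is a single-process toy model under my own assumptions, not RavenDB's implementation or client API: `ToyClusterState`, `execute`, `register_user` and `ConcurrencyError` are hypothetical names, and the index is just a counter that grows with every accepted transaction. An expected index of 0 stands for "this key must not exist yet".

```python
class ConcurrencyError(Exception):
    """Toy stand-in for the concurrency failure described above."""


class ToyClusterState:
    """Stand-in for state that has already been agreed on by the cluster."""

    def __init__(self):
        self.compare_exchange = {}   # key -> (index, value)
        self.documents = {}          # document id -> body
        self.last_index = 0          # grows with every accepted transaction

    def execute(self, cmpxchg_puts, document_puts):
        """cmpxchg_puts is a list of (key, value, expected_index) tuples."""
        # Phase 1: run every compare exchange check before touching anything.
        for key, _value, expected in cmpxchg_puts:
            actual = self.compare_exchange.get(key, (0, None))[0]
            if actual != expected:
                raise ConcurrencyError(
                    f"Concurrency check failed for putting the key '{key}'. "
                    f"Requested index: {expected}, actual index: {actual}")
        # Phase 2: all checks passed, so the values and documents go in together.
        self.last_index += 1
        for key, value, _expected in cmpxchg_puts:
            self.compare_exchange[key] = (self.last_index, value)
        self.documents.update(document_puts)


def register_user(state, doc_id, name):
    """Reserve the user name and store the user document as one unit."""
    state.execute(
        cmpxchg_puts=[(f"usernames/{name}", doc_id, 0)],
        document_puts={doc_id: {"Name": name}},
    )


state = ToyClusterState()
register_user(state, "users/1", "Arava")        # the name is free, so this succeeds
try:
    register_user(state, "users/2", "Arava")    # same name, different document
except ConcurrencyError as error:
    print(error)
```

Because the failed check raises before phase 2 starts, the second call leaves both the compare exchange values and the documents exactly as they were.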

In other words, you now have a way to provide a consistent concurrency check cluster wide, even in a distributed system. We made sure that a common scenario like uniqueness checks would be trivial to implement. The feature allows you to do in-transaction manipulation of the compare exchange values and ensures that document changes will only be applied if all the compare exchange operations (and you can have more than one) have passed.

We envision this being used for uniqueness, of course, but also for high value operations where consistency is more important than availability. A good example would be creating an order for a seat in a play. Multiple customers might try to purchase the same seat at the same time, and you can use this feature to ensure that you don’t double book it*. If you manage to successfully claim the seat, your order document is updated and you can proceed. Otherwise, the whole thing rolls back.
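Here is a rough sketch of the seat scenario in Python, extended to an order that claims several seats at once. It is an illustration under my own assumptions: `book_seats` and `SeatTaken` are hypothetical names, plain dictionaries play the role of the compare exchange values and the order documents, and the ordering that the cluster would provide is replaced by everything running in one process.

```python
class SeatTaken(Exception):
    """Raised when at least one requested seat already belongs to another order."""


def book_seats(claims, orders, order_id, customer, seats):
    """Claim every seat in `seats` for `order_id`, or claim none of them.

    claims: seat key -> order id (stands in for compare exchange values)
    orders: order id -> order document
    """
    keys = [f"seats/{seat}" for seat in seats]
    # Check all the claims first, mirroring "compare exchange commands run first".
    taken = [key for key in keys if key in claims]
    if taken:
        raise SeatTaken(f"already claimed: {', '.join(taken)}")
    # Every seat is free, so the claims and the order document are written together.
    for key in keys:
        claims[key] = order_id
    orders[order_id] = {"Customer": customer, "Seats": list(seats)}


claims, orders = {}, {}
book_seats(claims, orders, "orders/1", "alice", ["A1", "A2"])
try:
    # A2 is gone, so A3 must not be claimed and orders/2 must not be written.
    book_seats(claims, orders, "orders/2", "bob", ["A2", "A3"])
except SeatTaken as error:
    print(error)
```

The second order fails as a whole: seat A3 stays free for the next customer instead of being held by a half-finished booking.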

This can significantly simplify workflows where you might have a failure mid-operation, by giving you a transactional guarantee across the whole cluster.

A cluster transaction can only delete or put documents; you cannot use a patch. This is because the result of the cluster transaction must be self contained and repeatable. A document modified by a cluster transaction may also take part in replication (including external replication). In fact, documents modified by cluster transactions behave just like normal documents. However, conflicts between documents modified by cluster transactions and modifications that weren’t made by a cluster transaction are always resolved in favor of the cluster transaction’s modifications. Note that there can never be a conflict between modifications made by cluster transactions. They are guaranteed proper sequence and ordering by the nature of running them through the consensus protocol.

* Yes, I know that this isn’t how it actually works, but it is a nice example.

More posts in "RavenDB 4.1 Features" series:

- (22 Aug 2018) MongoDB & CosmosDB Migration Wizards

- (04 Jul 2018) This document is included in your subscription

- (03 Jul 2018) Detailed query timing details

- (02 Jul 2018) Of course I know ya, dude

- (29 Jun 2018) Running RavenDB embedded

- (26 Jun 2018) Can you explain that choice?

- (20 Jun 2018) Cluster wide ACID transactions

- (19 Jun 2018) Explain that choice

- (22 May 2018) Highlighting

- (11 May 2018) Counting my counters

- (10 May 2018) JavaScript Indexes

- (04 May 2018) SQL Migration Wizard

Comments

Does it mean that up until this point RavenDB 4.* confirmed writes after flushing them to only one of the nodes of the cluster? In other words, there was no quorum confirming the write?

Scooletz, Up until this point, all versions of RavenDB (going back to 1.0) have always confirmed the write on a single node. We added the ability to ensure that a write is resident on multiple nodes in 2013, see: https://ayende.com/blog/161985/ravendb-clusters-write-assurances

What this feature brings is the fact that if this fails, the write doesn't go through on any of the nodes, whereas before we would always first accept the write, then distribute it.
