Rejection, dejection and resurrection, oh my!

Regardless of how good your software is, there is always a point where we can put more load on the system than it is capable of handling.

One such case is when you are firing about a hundred requests per second, regardless of whether the previous requests have completed, while at the same time throttling the I/O so we can’t complete the requests fast enough.

What happens then is known as a convoy. Requests start piling up, and as more and more work waits to be done, we fall further and further behind. The typical way this ends is that you run out of resources completely. If you are using a thread per request, you end up with all your threads blocked on some lock. If you are using async operations, you start consuming more and more memory as you hold the async state of each request until it is completed.
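To make the pile-up concrete, here is a tiny back-of-the-envelope simulation. The arrival rate of 100 requests per second comes from the scenario above; the completion rate of 60 per second is purely an assumed number standing in for "throttled I/O", and `backlog_over_time` is a hypothetical helper, not anything from our codebase.

```python
def backlog_over_time(arrival_rate, service_rate, seconds):
    """Return the number of pending requests at the end of each second."""
    backlog = 0
    history = []
    for _ in range(seconds):
        # Clients keep sending regardless of whether earlier requests completed.
        backlog += arrival_rate
        # The throttled I/O only lets this many requests complete per second.
        backlog -= min(backlog, service_rate)
        history.append(backlog)
    return history


print(backlog_over_time(100, 60, 5))  # [40, 80, 120, 160, 200]
```

The backlog grows by the difference between the two rates every second, and nothing in the system brings it back down unless someone notices and reacts.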

We put a lot of pressure on the system, and we want to know that it responds well. And the way to do that is to recognize that there is a convoy in progress and handle it. But how can you do that?

The problem is that you are currently in the middle of processing a set of operations in a transaction. We can obviously abort it, and roll back everything, but the problem is that we are now in the second stage. We have a transaction that we wrote to the disk, and we are waiting for the disk to come back and confirm that the write is successful while already speculatively executing the current transaction. And we can’t abort the transaction that we are currently writing to disk, because there is no way to know at what stage the write is.

So we now need to decide what to do, and we chose the following set of behaviors. When running a speculative transaction (a transaction that is run while the previous transaction is being committed to disk), we observe the amount of memory that is used by this transaction. If the amount of memory being used is too high, we stop processing incoming operations and wait for the previous transaction to come back from the disk.
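A minimal sketch of that rule might look like the following. This is not our actual implementation: `run_speculative`, the `(name, size)` shape of an operation, and the byte budget are all assumptions made for illustration, and a `threading.Event` stands in for the disk confirming the previous write.

```python
import threading


def run_speculative(operations, memory_limit_bytes, previous_commit_done):
    """Apply operations until the memory budget is hit, then wait for the disk.

    operations: iterable of (name, size_in_bytes) pairs (hypothetical shape).
    previous_commit_done: threading.Event set once the prior write is confirmed.
    Returns (applied, deferred) lists of operation names.
    """
    operations = list(operations)
    applied = []
    used_bytes = 0
    for index, (name, size) in enumerate(operations):
        if used_bytes + size > memory_limit_bytes:
            # The speculative transaction holds too much memory: stop taking
            # work and block until the previous commit comes back from disk.
            previous_commit_done.wait()
            deferred = [op_name for op_name, _ in operations[index:]]
            return applied, deferred
        used_bytes += size
        applied.append(name)
    return applied, []
```

With a 100 byte budget and three 40 byte operations, the first two are applied and the third is deferred until the previous write is confirmed.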

At the same time, we might still be getting new operations to complete, but we can’t process them. At this point, once we have waited long enough for it to be worrying, we start proactively rejecting requests, telling the client immediately that we are in a timeout situation and that they should fail over to another node.
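The rejection logic can be sketched roughly like this. Everything here is hypothetical: the `OverloadGate` class, the `handle` function, the response fields, and the idea of a single "worry" threshold in seconds are assumptions for illustration, not the real server code.

```python
import time


class OverloadGate:
    """Tracks how long we have been stalled waiting on the disk."""

    def __init__(self, max_wait_seconds, clock=time.monotonic):
        self.max_wait_seconds = max_wait_seconds  # assumed "worrying" threshold
        self.clock = clock
        self.stalled_since = None

    def mark_stalled(self):
        # Remember only the moment the stall began, not later repeats.
        if self.stalled_since is None:
            self.stalled_since = self.clock()

    def mark_recovered(self):
        self.stalled_since = None

    def should_reject(self):
        if self.stalled_since is None:
            return False
        return self.clock() - self.stalled_since >= self.max_wait_seconds


def handle(gate, request):
    """Decide what to tell the client about an incoming request."""
    if gate.should_reject():
        # Fail fast so the client can go to another node right away.
        return {"status": "rejected", "reason": "timeout", "hint": "failover"}
    if gate.stalled_since is not None:
        # Stalled, but not for long enough to worry: hold the request.
        return {"status": "queued", "request": request}
    return {"status": "accepted", "request": request}
```

The point of rejecting immediately, rather than letting the request sit until it times out on its own, is that the client learns about the problem while it still has time to try another node.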

The key problem is that I/O is, by its nature, highly unpredictable, and may be impacted by many things. On the cloud, you might hit your IOPS limits and see a drastic drop in performance all of a sudden. We considered a lot of ways to actually manage it ourselves, by limiting what kind of I/O operations we’ll send at each time, queuing and optimizing things, but we can only control the things that we do. So we decided to just measure what is going on and react accordingly.

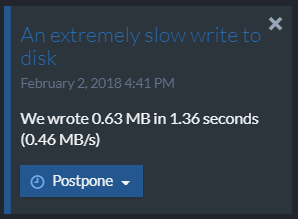

Beyond being proactive to incoming requests, we are also making sure that we’ll surface these kind of details to the user:

Knowing that the I/O system may be giving us this kind of response can be invaluable when you are trying to figure out what is going on. And we made sure that this is very clearly displayed to the admin.
