Overloading the Windows Kernel and locking a machine with RavenDB benchmarks

While benchmarking RavenDB, we ran into several instances where the entire machine would freeze for a long time, becoming completely unresponsive.

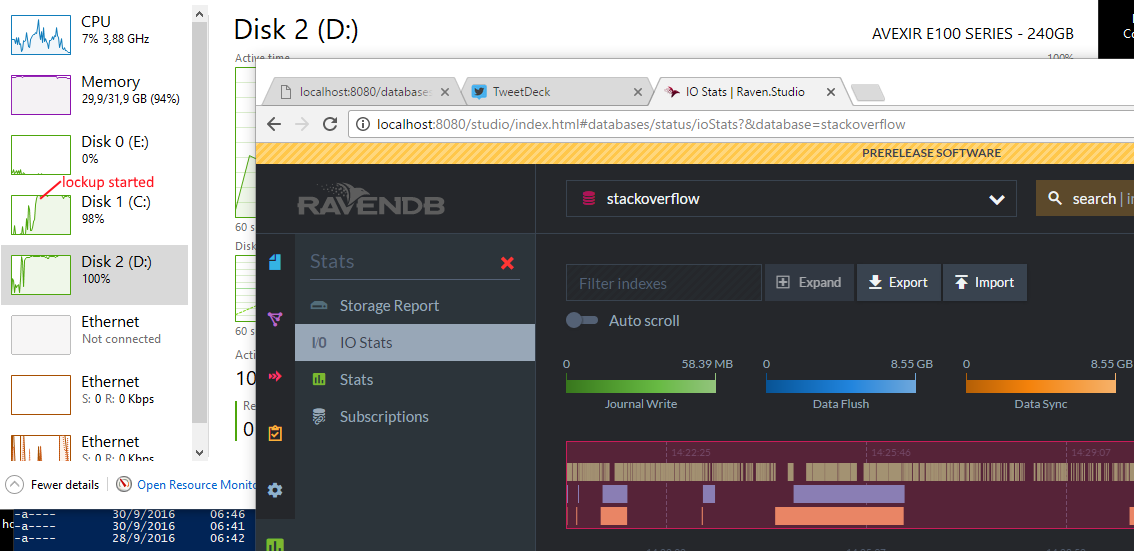

This has been quite frustrating to us, since a frozen machine makes it kind of hard to figure out what is going on. But we finally figured it out, and all the details are right there in the screenshot above.

What you can see is us running our current benchmark, importing the entire StackOverflow dataset into RavenDB. Drive C is the system drive, and drive D is the data drive that we are using to test RavenDB’s performance.

Drive D is actually a throwaway SSD. That is, an SSD that we use purely for benchmarking and not for real work. Given how hard we work the drive, we expect it to die eventually, so we don’t want to trust it with any other data.

At any rate, you can see that, due to a different issue entirely, we are now seeing data syncs in excess of 8.5 GB. So basically, we wrote 8.55 GB of data very quickly into a memory mapped file, and then called fsync. At the same time, we started increasing our scratch buffer usage, because calling fsync on 8.55 GB can take a while.

Scratch buffers are a really interesting thing. They were born because of Linux’s crazy OOM design, and are basically a way for us to avoid paging. Instead of allocating memory on the heap like normal, which would then subject us to paging, we allocate a file on disk (marked as temporary and delete-on-close) and then we mmap the file. That gives us a way to guarantee that Linux will always have a place to page out any of our memory.

This also has the advantage of making it very clear how much scratch memory we are currently using, and on Azure / AWS machines, it is easier to place all of those scratch files on the fast temp local storage for better performance.
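The scratch buffer idea can be sketched roughly as follows. This is a minimal Python illustration, not RavenDB's actual code (RavenDB is written in C#). The function name `allocate_scratch` and its parameters are hypothetical, and it assumes that a temporary file extended with `truncate` is good enough as backing storage for the example.

```python
import mmap
import tempfile


def allocate_scratch(size_bytes, directory=None):
    """Return (buffer, backing_file) for a file-backed scratch buffer.

    Hypothetical helper for illustration only.
    """
    # TemporaryFile is removed automatically once it is closed, which
    # mirrors the "temporary & delete on close" marking described above.
    backing = tempfile.TemporaryFile(dir=directory)
    # Extend the file to the requested size. On many file systems this
    # creates a sparse file, so a real implementation may want to
    # preallocate the space explicitly (assumption, not shown here).
    backing.truncate(size_bytes)
    # Map the whole file. Pages evicted from this buffer can be written
    # back to the backing file rather than to swap.
    buffer = mmap.mmap(backing.fileno(), size_bytes)
    return buffer, backing


if __name__ == "__main__":
    buf, backing = allocate_scratch(1024 * 1024)
    buf[0:5] = b"hello"      # use it like ordinary memory
    print(len(buf))          # scratch usage is simply the file size
    buf.close()
    backing.close()          # the file disappears here
```

Passing a `directory` on fast local temp storage is how the placement mentioned above could be expressed in this sketch.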

So we have a very large fsync going on, a large number of memory mapped files, a lot of activity (that modifies some of those files) and memory pressure.
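For readers who want to see the write-then-sync pattern in isolation, here is a small Python sketch. It is an assumption-laden illustration, not how RavenDB does it: `write_and_sync` is a hypothetical name, the payload is tiny, and it assumes a non-empty payload. In CPython, `mmap.flush()` uses `msync` on POSIX and `FlushViewOfFile` on Windows, and `os.fsync()` asks the OS to push the file's buffers to the device.

```python
import mmap
import os
import time


def write_and_sync(path, payload):
    """Write payload through a memory mapping, then force it to disk.

    Returns the number of seconds spent in the sync calls.
    Hypothetical helper; payload must be non-empty.
    """
    with open(path, "w+b") as f:
        f.truncate(len(payload))              # size the file before mapping
        with mmap.mmap(f.fileno(), len(payload)) as view:
            view[:] = payload                 # dirty the mapped pages
            start = time.perf_counter()
            view.flush()                      # write dirty pages back to the file
            os.fsync(f.fileno())              # ask the OS to persist the file
            return time.perf_counter() - start
```

With a few KB the sync returns almost immediately. With several GB of dirty pages, as in the benchmark, the time spent in those two calls is where the drive stays busy.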

That forces the Kernel to evict some pages from memory to disk, to free something up. And under normal conditions, it would do just that. But here we run into a wrinkle: the memory we want to evict belongs to a memory mapped file, so the natural thing to do would be to write it back to its original file. This is actually what we expect the Kernel to do for us, and while for scratch files this is usually a waste, for the data file this is exactly the behavior that we want. But that is beside the point.

Look at the image above: we are supposed to be using only drive D, so why is C so busy? I’m not really sure, but I have a hypothesis.

Because we are currently running a very large fsync, I think that drive D is not currently processing any additional write requests. The “write a page to disk” operation has pretty strict runtime requirements; it can’t just wait for the I/O to return whenever that might be. Consider the fact that you can open a memory mapped file over a network drive: I think it is very reasonable that the Kernel would have a timeout mechanism for this kind of I/O. When the pager sees that it can’t write to the original file fast enough, it shrugs and writes those pages to the local page file instead.

This turns an otherwise very bad situation (very long wait / crash) into a manageable situation. However, with the amount of work we put on the system, that effectively forces us to do heavy paging (on the order of GBs), and that in turn leads to a machine that appears to be locked up due to all the paging. So the fallback error handling is actually causing this issue by trying to recover, at least that is what I think.

When examining this, I wondered if this can be considered a DoS vulnerability, and after careful consideration, I don’t believe so. This issue involves using a lot of memory to cause enough paging to slow everything down. The fact that we are getting there in a somewhat novel way isn’t opening us to anything that wasn’t there already.

Comments

Depending on how stuck things are, could you hit the "Open Resource Monitor" link in Task Manager and get a quick idea of which process (probably "System") is using C: and which files it's reading/writing? It'd also be interesting, albeit with Heisenberg effects, to run procmon for a brief moment or two during the complete system slowdown.

I used it the other day to determine a problem with a FoxPro driver's configuration { yep... :( } seeing it read sequential 4 KB blocks, one at a time, from a really large file accessed via a UNC path, completely ignoring the index. Tweaking some options made that all better :)

That theory cannot be correct, because memory mapped files do not add to the commit charge but page file usage does. This would invalidate the commit charge system (which guarantees that, unlike on Linux, allocated memory is never pulled away).

Also, I have not read about anything like that in Windows Internals but I might have forgotten.

Why would Windows not just write some non-memory mapped data to the page file instead to make room?!

Over the years I have found many problematic behaviors in the Windows memory management policies. Seeing such a screw-up does not surprise me, but the explanation is likely different.

You could find out by looking at the page out rate or at the IO rate of the page file.

tobi, I absolutely agree that this makes no sense, and my theory that this can happen because the I/O to the actual drive is saturated, leading Windows to write to the page file, is just that, a theory. Note that the only thing that is happening while this is going on is a lot of memory work on memory mapped files on the other drive, so the C drive should have no work.

" is just that, a theory"

IMHO 'hypothesis' is a better match here.

Does Raven work on Linux? It would be interesting to see what happens there.

You should try running this benchmark on an Azure shared VM - if it locks it there will be a very quick fix from Microsoft.

Are you mapping only portions of the scratch file into your file mapping region? Or are you unmapping parts of the file during the test but not closing the file mapping handle fast enough? If yes this: https://aloiskraus.wordpress.com/2017/02/26/the-mysterious-lost-memory-which-belongs-to-no-process/ could be the reason.

Alois, this is very interesting. We are using mmap here, but the scratch files we use should be cleared almost immediately, and they aren't backed by the page file, but by a physical file on another drive.

Bob, yes, we work on Linux, and we intend to run the same tests there, but we already figured out what we did to pressure the I/O system and removed that, so it might give different results.

The Windows Performance Analysis toolkit might provide some insights as to what is actually happening.
