The beauty of fully utilized machine

System administrators like to see graphs with server utilization sitting at the very low end of the scale. That means they don’t need to worry about spikes, capacity, or anything much: they are way over-provisioned, and that means less waking up at night.

That works very well, until you hit a real spike, hit some sort of limit, and then have to scramble to upgrade your system while under fire, so to speak. [I have plenty of issues with the production team behavior as described in this post, but that isn’t the topic for this post.]

So one of the things that we regularly test is a system being asked to do something that is beyond its limits. For example, we have 6 indexes running at the same time, indexing at different speeds, over a dataset that is 57GB in size.

The idea is that we will force the system to do a lot of work that it could typically avoid by having everything in memory. Instead, we’ll be forced to page data, and we need to see how we behave under such a scenario.

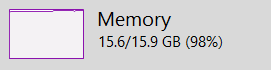

Here is what this looks like from the global system behavior.

If you show this to most admins, they will feel faint. That usually means Something Is About To Break Badly.

But things are slightly better when we look at the details:

So what do we have here? We have a process that, at the time of running it, has mapped about 67 GB of files and has allocated 8.5 GB of RAM. However, only about 4.5 GB of that is actively used, and the rest of the working set is actually the memory mapped files. That leads to an interesting observation: if most of your work is local and transient (so you scan through sections of the file, like we do during indexing), the operating system will load those pages from disk and keep them around until there is memory pressure, at which point it will look at all of those nice pages that are just sitting there, unmodified and with a source on disk.

That means that the operating system can immediately discard them without having to page them out, so they are very cheap. Oh, we’ll still need to load the data from disk into them, but we’ll have to do that anyway, since we can’t fit the entire dataset into memory.
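To make the "clean pages are cheap" point concrete, here is a minimal sketch of scanning a file through a read-only memory map. It is an illustration only, not RavenDB code: the helper names `scan_mapped_file` and `make_sample_file`, the chunk size, and the 1 MB sample file are all assumptions made up for the example.

```python
import mmap
import os
import tempfile

PAGE = mmap.PAGESIZE  # OS page size, commonly 4096 bytes


def scan_mapped_file(path, chunk_size=16 * PAGE):
    """Read a file through a read-only memory map, one chunk at a time.

    The pages touched here are never modified, so under memory pressure
    the OS can drop them without writing anything to the page file.
    """
    total = 0
    with open(path, "rb") as f:
        with mmap.mmap(f.fileno(), 0, access=mmap.ACCESS_READ) as view:
            for offset in range(0, len(view), chunk_size):
                # Slicing touches the mapped pages; the OS loads them on demand.
                chunk = view[offset:offset + chunk_size]
                total += sum(chunk)
    return total


def make_sample_file(size_bytes):
    """Create a throwaway file so the example is self-contained (hypothetical helper)."""
    fd, path = tempfile.mkstemp()
    with os.fdopen(fd, "wb") as f:
        f.write(bytes([1]) * size_bytes)
    return path


sample_path = make_sample_file(1024 * 1024)
checksum = scan_mapped_file(sample_path)
os.remove(sample_path)
```

The scan never copies the whole file into process-owned memory. It only ever holds one chunk at a time, and the rest lives in pages that the operating system is free to keep or discard.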

So that means that our allocation strategy basically goes something like this:

- Ignore the actual free space that the operating system reports.

- Instead, take into account the private working set and compare it to the actual working set.

The private working set is what goes into the page file. It mostly consists of managed memory and whatever unmanaged allocations we have to do during the indexing. So by comparing the two, we can tell how much of the used memory is actually used by memory mapped files. We are careful to ensure that we leave about 25% of the system memory to the memory mapped files (otherwise we’ll do a lot of paging), but that still gives us leave to use quite a lot of memory to speed things up, and we can negotiate between the threads to see who is faster (and thus deserves more memory).
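The arithmetic behind that strategy can be sketched roughly as follows. This is a simplified illustration under stated assumptions, not the actual RavenDB implementation: the `MemorySnapshot` type, the function names, the proportional split between indexes, and the sample numbers are all hypothetical. Only the 25% reserve comes from the text above.

```python
from dataclasses import dataclass

GB = 1024 ** 3
MAPPED_FILE_RESERVE_PERCENT = 25  # share of RAM left to memory mapped files (from the post)


@dataclass
class MemorySnapshot:
    total_physical: int       # bytes of RAM installed
    working_set: int          # bytes resident for the process, mapped files included
    private_working_set: int  # bytes backed by the page file (heap, unmanaged allocations)


def mapped_file_bytes(snap):
    """Part of the working set that is just memory mapped file pages."""
    return snap.working_set - snap.private_working_set


def private_budget_remaining(snap):
    """How much more private memory we could allocate while keeping the reserve."""
    ceiling = snap.total_physical * (100 - MAPPED_FILE_RESERVE_PERCENT) // 100
    return max(0, ceiling - snap.private_working_set)


def split_budget(budget, throughput_by_index):
    """Assumed negotiation policy: faster indexes get a proportionally larger share."""
    total = sum(throughput_by_index.values())
    if total == 0:
        share = budget // max(1, len(throughput_by_index))
        return {name: share for name in throughput_by_index}
    return {name: budget * rate // total for name, rate in throughput_by_index.items()}


# Made-up numbers, purely for illustration.
snap = MemorySnapshot(total_physical=16 * GB, working_set=12 * GB, private_working_set=4 * GB)
mapped = mapped_file_bytes(snap)
budget = private_budget_remaining(snap)
shares = split_budget(budget, {"fast_index": 300, "slow_index": 100})
```

Note that the free memory reported by the operating system never appears in the calculation. The only inputs are the two working set figures and the installed RAM.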

Comments

Did you find this to be stable? The working set can be reduced to near zero while still not actually discarding any pages. For example if you call EmptyWorkingSet or whatever it is called all pages move from WS to the "standby list" but they are still in memory. (I don't understand what that standby list is good for, btw.) Who knows what else causes similar behavior.

It seems dangerous to equate working set contents with actively used data.

Tobi, The working set is what I'm currently working on. It means that unless there is memory pressure, we won't be dropping stuff from it. The standby list is the pages that can be taken with no extra work. Basically, when the system needs a page, it will:

- See if there is any free

- See if there is any in the standby

- See if there is any in the active working set that hasn't been modified

And only if none of those is available will it write a page to disk.
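The lookup order described above can be sketched as a toy model. It only mirrors the sequence of checks listed in this comment. It is not a description of how the Windows memory manager is actually implemented, and the `PageSource` enum and `find_page` function are hypothetical names.

```python
from enum import Enum


class PageSource(Enum):
    FREE = "free page"
    STANDBY = "standby list page"
    CLEAN_WORKING_SET = "unmodified working set page"
    WRITE_TO_DISK = "modified page, written to disk first"


def find_page(free_pages, standby_pages, clean_working_set_pages):
    """Return where the next page would come from, cheapest option first."""
    if free_pages > 0:
        return PageSource.FREE
    if standby_pages > 0:
        return PageSource.STANDBY
    if clean_working_set_pages > 0:
        # Clean pages have a copy on disk, so they can be dropped without a write.
        return PageSource.CLEAN_WORKING_SET
    # Only when every cheaper option is exhausted does a write become necessary.
    return PageSource.WRITE_TO_DISK


first_choice = find_page(free_pages=0, standby_pages=12, clean_working_set_pages=40)
last_resort = find_page(free_pages=0, standby_pages=0, clean_working_set_pages=0)
```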

Working set is the actively used data, that is the definition.

What's the average CPU usage when the memory is at 98%?

@Pop currently around 68%. It is difficult to know without a proper understanding of the actual retired instructions or without access to the BIOS to disable hyperthreading. When we reach the time to do that kind of optimization we will definitely look if that 68% is actually CPU bound or not.

@Federico as long as using more memory leads to using more CPU, then the system behaves as expected. Still, using 98% memory just for indexing work is rather worrying. What if Raven is not used on a dedicated machine? What if there are other services on that machine? IIS stops processing requests when memory is this low. I know memory usage can be limited in Raven, but it is very unusual for Windows services to do this.

Pop Catalin, Notice what the commit size is vs. the total memory used on the system. RavenDB in this case allocated just 8GB while processing, the rest is actually working set on mmap files that can be dropped easily.

In our tests, under heavy load, we saw no major impact on performance.

That sounds like a fragile thing as long as you do not lock the working set like SQL server does. If someone overcommits the machine then the OS will force a pageout of all pageable memory which could bring your running indexes down or cause timeouts because now 6 indexes are concurrently hitting the disk hard.

@Alois We have safeguards in place to handle those scenarios. Indexes will not overcommit to bigger batches if they start getting low-memory notifications. If you are processing way more than you can actually handle (memory-wise), you will be getting page faults no matter what system you are using. The degradation in performance in those cases cannot be fixed if your batches are already the smallest possible. No timeouts can happen if 6 indexes are concurrently hitting the disk, because paging is one thing and the fsync is another entirely. We have limited the fsync impact (by not doing it at all until required) without losing ACID properties; that guarantees that index writes won't overcommit the IO system. Also, we do not flush multiple indexes at once, which also diminishes the random IO access patterns. Strictly speaking, v4.0 has a write queue to deal with that.

Alois, The whole point is that we allow the OS to manage that. We are intentionally not going to lock things, so that the OS can free those pages. We also have monitoring for the amount of memory we have in use and will start backing off long before we'll need to fear paging.

All of that said, if you ask the machine to do more than it can, then paging is one of the only ways to go about it.
