Time series feature design: Querying over large data sets

What happens when you want to do an aggregation query over a very large data set? Let us say that you have 1 million data points within the range you want to query, and you want to get a rollup of all the data in the range of a week.

As it turns out, there is a relatively simple solution for optimizing this and maintaining a relatively constant query speed, regardless of the actual data set size.

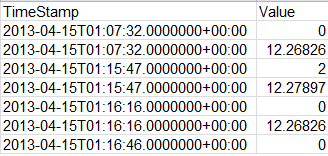

Time series data has the great benefit of being easily aggregated. Most often, the data looks like this:

The catch is that you have a lot of it.

The set of aggregations that you can do over the data is also relatively simple: mean, max, min, standard deviation, etc.
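To make that concrete, here is a minimal sketch of a running summary that supports those aggregations. The `Summary` class and its field names are made up for illustration, and the standard deviation is the population one, computed from the sum and the sum of squares (simple, but not the most numerically robust approach).

```python
import math
from dataclasses import dataclass


@dataclass
class Summary:
    """Running aggregate over a set of values (hypothetical name, for illustration)."""
    count: int = 0
    total: float = 0.0
    total_sq: float = 0.0
    minimum: float = math.inf
    maximum: float = -math.inf

    def add(self, value: float) -> None:
        # Each new value only touches a handful of counters.
        self.count += 1
        self.total += value
        self.total_sq += value * value
        self.minimum = min(self.minimum, value)
        self.maximum = max(self.maximum, value)

    @property
    def mean(self) -> float:
        return self.total / self.count

    @property
    def std_dev(self) -> float:
        # Population standard deviation: sqrt(E[x^2] - E[x]^2).
        variance = self.total_sq / self.count - self.mean ** 2
        return math.sqrt(max(variance, 0.0))


s = Summary()
for v in [2, 4, 4, 4, 5, 5, 7, 9]:
    s.add(v)
print(s.mean, s.minimum, s.maximum, s.std_dev)
```

The useful property is that none of these need the raw values once the counters have been updated.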

The time ranges are also pretty fixed, and the nice thing about time series data is that the bigger the range you want to go over, the bigger your rollup window is. In other words, if you want to look at things over a week, you would probably use a day or hour rollup. If you want to see things over a month, you will use a week or a day; over a year, you’ll use a week or a month; and so on.
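As a rough sketch of that rule of thumb, a query layer could pick the rollup window from the size of the requested range. The function name and the exact thresholds below are assumptions for illustration, loosely following the examples above.

```python
from datetime import timedelta


def pick_rollup_window(query_range: timedelta) -> timedelta:
    """Pick a rollup window for a query range (hypothetical thresholds)."""
    if query_range <= timedelta(days=7):
        return timedelta(hours=1)    # up to a week: hourly rollups
    if query_range <= timedelta(days=31):
        return timedelta(days=1)     # up to a month: daily rollups
    if query_range <= timedelta(days=366):
        return timedelta(weeks=1)    # up to a year: weekly rollups
    return timedelta(days=30)        # beyond that: roughly monthly


print(pick_rollup_window(timedelta(days=7)))
print(pick_rollup_window(timedelta(days=30)))
print(pick_rollup_window(timedelta(days=365)))
```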

Let us assume that aggregation runs at 10,000 operations per second (just some number I pulled because it is round and nice to work with; the real number is likely several orders of magnitude higher). Say we have to run this over a set that is 1 million data points in size, with the data being entered on a per-minute basis. At that rate, we are going to wait 100 seconds, almost two minutes, for the reply. But there is really no need to actually go over all those data points one by one.

What we can do is prepare the rollups ahead of time, on an hourly basis. That turns the million per-minute data points into just over 16 thousand hourly summaries (1,000,000 / 60), and results in a query that runs in under 2 seconds. If we also do a daily rollup, we move from having a million data points to fewer than a thousand.
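Here is a small sketch that runs those numbers. It generates 1 million per-minute points, rolls them up by hour and by day, and estimates the scan time at the made-up rate of 10,000 operations per second. The `rollup` helper and the (count, sum) layout are assumptions for illustration, not an actual storage format.

```python
from collections import defaultdict

POINTS = 1_000_000        # one data point per minute, as in the text
OPS_PER_SECOND = 10_000   # the round, made-up aggregation rate from the text


def rollup(points, window_seconds):
    """Group (timestamp_seconds, value) pairs into fixed windows.

    Each window keeps [count, sum], which is enough to answer a mean query.
    """
    buckets = defaultdict(lambda: [0, 0.0])
    for ts, value in points:
        bucket = buckets[ts - ts % window_seconds]  # key = start of the window
        bucket[0] += 1
        bucket[1] += value
    return dict(buckets)


raw = [(minute * 60, 1.0) for minute in range(POINTS)]
hourly = rollup(raw, 3600)
daily = rollup(raw, 86400)

for name, n in [("raw", len(raw)), ("hourly", len(hourly)), ("daily", len(daily))]:
    print(f"{name}: {n} entries, ~{n / OPS_PER_SECOND:.2f}s to scan")
```

With these inputs there are 16,667 hourly entries and 695 daily ones, so the estimated scan time drops from 100 seconds to under 2 seconds, and then to well under a tenth of a second.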

Actually maintaining those computed rollups would probably be a bit complex, but it won’t be any more complex than how we compute map/reduce indexes in RavenDB (this is a special, and simplified, case of map/reduce). And the result would be instant query times, even on very large data sets.
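To give a feel for what maintaining the rollups might involve, here is a deliberately simplified in-memory sketch. It is not how RavenDB computes map/reduce indexes; the `RollupStore` class is hypothetical, it only handles appends (no updates, deletes, or persistence), and it assumes query ranges are aligned to the rollup window.

```python
from collections import defaultdict

HOUR = 3600
DAY = 86400


class RollupStore:
    """Hypothetical store that keeps hourly and daily rollups current on every append."""

    def __init__(self):
        # window start (seconds) -> [count, sum]
        self.hourly = defaultdict(lambda: [0, 0.0])
        self.daily = defaultdict(lambda: [0, 0.0])

    def append(self, ts, value):
        # The "map" step assigns the point to a window; the "reduce" step
        # folds it into that window's running totals.
        for window, table in ((HOUR, self.hourly), (DAY, self.daily)):
            entry = table[ts - ts % window]
            entry[0] += 1
            entry[1] += value

    def mean(self, start, end, window=HOUR):
        """Mean over [start, end), reading only rollup entries, never raw points."""
        table = self.hourly if window == HOUR else self.daily
        count, total = 0, 0.0
        for key in range(start - start % window, end, window):
            entry = table.get(key)  # .get avoids creating empty windows
            if entry is not None:
                count += entry[0]
                total += entry[1]
        return total / count if count else None


store = RollupStore()
store.append(0, 10.0)
store.append(60, 20.0)
store.append(3600, 30.0)
print(store.mean(0, 2 * HOUR))       # mean of all three points
print(store.mean(0, DAY, window=DAY))
```

The query cost here depends on the number of windows in the range, not on the number of raw data points, which is the whole point of the exercise.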

More posts in "Time series feature design" series:

- (04 Mar 2014) Storage replication & the bee’s knees

- (28 Feb 2014) The Consensus has dRafted a decision

- (25 Feb 2014) Replication

- (20 Feb 2014) Querying over large data sets

- (19 Feb 2014) Scale out / high availability

- (18 Feb 2014) User interface

- (17 Feb 2014) Client API

- (14 Feb 2014) System behavior

- (13 Feb 2014) The wire format

- (12 Feb 2014) Storage
