In this post, we are going to talk about the actual design for a time series feature, from a storage perspective. For the purpose of the discussion, I am going to assume the low level Voron as the underlying storage engine.

We are going to need to store the data in a series. And the actual data is going to be relatively ordered (time based, usually increasing).

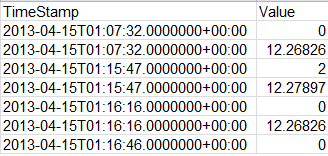

As such, I am going to model the data itself as a set of Voron trees, one tree per series. The data in each tree is composed of a key and a value, each 8 bytes long.

- Key (8 bytes): the number of 100-nanosecond intervals since Jan 1, 0001, which can represent times up to Dec 31, 9999.

- Val (8 bytes): 64-bit double-precision floating point number.

There can be no duplicate values for a specific time in a series. Since the key's resolution is one ten-millionth of a second, that should be granular enough, I think.
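To make the 16-byte entry layout concrete, here is a minimal sketch of how a key and a value could be turned into bytes. The `EntryEncoding` class and its method names are hypothetical, and the big-endian key is my assumption: it makes a byte-by-byte comparison of two keys agree with chronological order, which is what an ordered tree needs.

```csharp
using System;
using System.Buffers.Binary;

// Hypothetical helper, not part of Voron: encodes one time series entry
// as an 8-byte key and an 8-byte value.
public static class EntryEncoding
{
    // Key: DateTime.Ticks (100-nanosecond intervals since Jan 1, 0001).
    // Assumption: big-endian, so comparing keys byte by byte sorts them by time.
    public static byte[] EncodeKey(DateTime time)
    {
        var buffer = new byte[8];
        BinaryPrimitives.WriteInt64BigEndian(buffer, time.Ticks);
        return buffer;
    }

    public static DateTime DecodeKey(ReadOnlySpan<byte> key)
    {
        return new DateTime(BinaryPrimitives.ReadInt64BigEndian(key));
    }

    // Value: the raw IEEE 754 bits of the double. The byte order only has to
    // be fixed, since values are never compared as bytes.
    public static byte[] EncodeValue(double value)
    {
        var buffer = new byte[8];
        BinaryPrimitives.WriteInt64LittleEndian(buffer, BitConverter.DoubleToInt64Bits(value));
        return buffer;
    }

    public static double DecodeValue(ReadOnlySpan<byte> value)
    {
        return BitConverter.Int64BitsToDouble(BinaryPrimitives.ReadInt64LittleEndian(value));
    }
}
```

Because ticks are never negative, the signed 64-bit value and its unsigned byte representation sort the same way.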

Reading from the storage will always be done on a series basis. The idea is to essentially use the code from this post, but to simplify to a smaller key. At 16 bytes per entry, we can store roughly 250 data points in each Voron page, which should give us some really good performance for both reads and writes.

Note that we need to provide a storage API for bulk writes, since there are some systems that would require it. Those can either be systems with high refresh rates (a whole set of sensors with very short refresh intervals) or, more likely, import scenarios. The import scenario can be either a one-time event (moving to a new system), or something like a nightly batch process where we get the aggregated data from multiple sources.

For our purposes, we aren’t going to distinguish between the two.

We are going to provide an API similar to:

- void Add(IEnumerable<Tuple<string, IEnumerable<Tuple<DateTime, double>>>> data);

This ugly method can handle updates to multiple series at once. In human speak, this is an enumerable of updates to a series, where each update is the time and the value for that time. From the storage perspective, this creates a single transaction where all the changes are added at once, or not at all. It is the responsibility of higher level code to balance the number of calls against the in-flight transaction data and the size of the transactions.

Adding data to a series that doesn’t exist will create it.
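The semantics of the bulk write can be sketched with an in-memory stand-in. `InMemorySeriesStore` is a hypothetical class that uses one sorted dictionary per series in place of a Voron tree, and the "transaction" is simulated by staging every change before applying any of them. That is an assumption made for illustration, not how Voron transactions work.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical in-memory stand-in for the storage, for illustration only.
public class InMemorySeriesStore
{
    // One sorted map per series, standing in for one tree per series.
    private readonly Dictionary<string, SortedDictionary<long, double>> _series =
        new Dictionary<string, SortedDictionary<long, double>>();

    public void Add(IEnumerable<Tuple<string, IEnumerable<Tuple<DateTime, double>>>> data)
    {
        // Stage everything first: if enumerating the input throws,
        // nothing has been applied yet.
        var staged = new List<Tuple<string, long, double>>();
        foreach (var update in data)
        {
            foreach (var point in update.Item2)
                staged.Add(Tuple.Create(update.Item1, point.Item1.Ticks, point.Item2));
        }

        // "Commit": apply all staged changes in one go.
        foreach (var change in staged)
        {
            if (!_series.TryGetValue(change.Item1, out var tree))
            {
                // Adding to a series that doesn't exist creates it.
                tree = new SortedDictionary<long, double>();
                _series[change.Item1] = tree;
            }
            // Same timestamp again: the new value replaces the old one.
            tree[change.Item2] = change.Item3;
        }
    }

    public int Count(string series)
    {
        return _series.TryGetValue(series, out var tree) ? tree.Count : 0;
    }

    public double? Get(string series, DateTime time)
    {
        if (_series.TryGetValue(series, out var tree) && tree.TryGetValue(time.Ticks, out var value))
            return value;
        return null;
    }
}
```

Staging the whole batch in memory is exactly the in-flight cost that the calling code has to weigh against the number of calls it makes.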

We also assume that series names consist of printable Unicode characters and are up to 256 bytes long (UTF-8).

The read API is going to be composed of:

- IEnumerable<Tuple<DateTime, double>> ScanRange(string series, DateTime start, DateTime end);

- IEnumerable<Tuple<DateTime, double[]>> ScanRanges(string []series, DateTime start, DateTime end);

Anything else would have to be done at a higher level.
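Here is a rough sketch of what the two scan methods could return, again over an in-memory stand-in (`InMemorySeriesReader` is a hypothetical name). Several details are assumptions on my part, since the signatures alone don't pin them down: both range bounds are treated as inclusive, `ScanRanges` emits one row per timestamp seen in any of the series, and a series with no value at that timestamp gets `double.NaN`.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

// Hypothetical in-memory reader, for illustration only.
public class InMemorySeriesReader
{
    private readonly Dictionary<string, SortedDictionary<long, double>> _series;

    public InMemorySeriesReader(Dictionary<string, SortedDictionary<long, double>> series)
    {
        _series = series;
    }

    public IEnumerable<Tuple<DateTime, double>> ScanRange(string series, DateTime start, DateTime end)
    {
        if (!_series.TryGetValue(series, out var tree))
            yield break;

        // A real tree would seek directly to 'start'; this stand-in just skips.
        foreach (var entry in tree)
        {
            if (entry.Key < start.Ticks) continue;
            if (entry.Key > end.Ticks) yield break; // keys are sorted, so we can stop early
            yield return Tuple.Create(new DateTime(entry.Key), entry.Value);
        }
    }

    public IEnumerable<Tuple<DateTime, double[]>> ScanRanges(string[] series, DateTime start, DateTime end)
    {
        // Assumption: one row per timestamp found in any series,
        // with NaN where a series has no value at that time.
        var rows = new SortedDictionary<long, double[]>();
        for (var i = 0; i < series.Length; i++)
        {
            foreach (var point in ScanRange(series[i], start, end))
            {
                if (!rows.TryGetValue(point.Item1.Ticks, out var row))
                {
                    row = Enumerable.Repeat(double.NaN, series.Length).ToArray();
                    rows[point.Item1.Ticks] = row;
                }
                row[i] = point.Item2;
            }
        }

        foreach (var row in rows)
            yield return Tuple.Create(new DateTime(row.Key), row.Value);
    }
}
```

This version buffers all the rows before returning them, which keeps the sketch short. A streaming merge over the sorted series would avoid holding the whole range in memory.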

There is no dedicated facility for updates. You can just add again at the same time with a new value, which replaces the old one. While this is supported, it isn’t something that is expected.

Deleting data can be done using:

- void Delete(string series, DateTime start, DateTime end);

- void DeleteAll(string series);

The first option will delete all the items in the range. The second will delete the entire tree, and is probably going to be much faster. We are probably better off checking whether the requested range covers the min / max keys of the series’ tree, and falling back to dropping the whole tree if it does. An explicit DeleteAll will also delete all the series tags, while an implicit one, such as Delete(“series-1”, DateTime.MinValue, DateTime.MaxValue), will delete the series’ tree but keep the series tags.
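The range check and the difference between the explicit and implicit full delete can be sketched as follows. `InMemorySeriesDeleter` is a hypothetical in-memory stand-in with public fields to keep it short, and the inclusive bounds are my assumption.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

// Hypothetical in-memory stand-in, for illustration only.
public class InMemorySeriesDeleter
{
    public readonly Dictionary<string, SortedDictionary<long, double>> Trees =
        new Dictionary<string, SortedDictionary<long, double>>();

    public readonly Dictionary<string, HashSet<string>> Tags =
        new Dictionary<string, HashSet<string>>();

    public void Delete(string series, DateTime start, DateTime end)
    {
        if (!Trees.TryGetValue(series, out var tree) || tree.Count == 0)
            return;

        // Keys are sorted, so the first and last keys bound everything in the tree.
        // (A real tree would read them directly instead of enumerating.)
        var min = tree.Keys.First();
        var max = tree.Keys.Last();
        if (start.Ticks <= min && max <= end.Ticks)
        {
            // The range covers the whole series: drop the tree, but keep the tags.
            Trees.Remove(series);
            return;
        }

        // Otherwise remove only the entries inside the range.
        var doomed = tree.Keys.Where(k => k >= start.Ticks && k <= end.Ticks).ToList();
        foreach (var key in doomed)
            tree.Remove(key);
    }

    public void DeleteAll(string series)
    {
        Trees.Remove(series);
        Tags.Remove(series); // the explicit call also removes the tags
    }
}
```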

A series can have tags attached to it. Those can be any string up to 256 bytes (UTF-8). By convention, they would usually be in the form of “key:val”.

Series can be queried using:

- IEnumerable<string> GetSeries(string start = null);

- IEnumerable<string> GetSeriesBy(string tag, string start = null);

- IEnumerable<string> GetSeriesTags(string series);

Series can be tagged using:

- void TagSeries(string series, string []tags);

There can be no duplicate tags.
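A minimal sketch of the tagging calls, assuming an in-memory index (`InMemorySeriesTags` is a hypothetical name). I am guessing at the meaning of the `start` parameter here: the sketch treats it as the series name to start listing from, inclusive and in ordinal order, which would let a caller page through results. This stand-in also only knows about series that have been tagged.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;
using System.Text;

// Hypothetical in-memory tag index, for illustration only.
public class InMemorySeriesTags
{
    // Sorted by ordinal name so that listing order is stable.
    private readonly SortedDictionary<string, HashSet<string>> _tags =
        new SortedDictionary<string, HashSet<string>>(StringComparer.Ordinal);

    public void TagSeries(string series, string[] tags)
    {
        // Validate everything before changing anything.
        foreach (var tag in tags)
        {
            if (Encoding.UTF8.GetByteCount(tag) > 256)
                throw new ArgumentException("Tags are limited to 256 bytes (UTF-8): " + tag);
        }

        if (!_tags.TryGetValue(series, out var existing))
        {
            existing = new HashSet<string>(StringComparer.Ordinal);
            _tags[series] = existing;
        }

        foreach (var tag in tags)
            existing.Add(tag); // a set, so a duplicate tag is simply ignored
    }

    public IEnumerable<string> GetSeries(string start = null)
    {
        // Assumption: 'start' is an inclusive lower bound on the series name.
        return _tags.Keys.Where(name => start == null || string.CompareOrdinal(name, start) >= 0);
    }

    public IEnumerable<string> GetSeriesBy(string tag, string start = null)
    {
        return GetSeries(start).Where(name => _tags[name].Contains(tag));
    }

    public IEnumerable<string> GetSeriesTags(string series)
    {
        if (!_tags.TryGetValue(series, out var tags))
            return Enumerable.Empty<string>();
        return tags.OrderBy(t => t, StringComparer.Ordinal);
    }
}
```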

In summary, the interface we intend to use for storage would look roughly like the following:

    public interface ITimeSeriesStorage
    {
        void Add(IEnumerable<Tuple<string, IEnumerable<Tuple<DateTime, double>>>> data);

        IEnumerable<Tuple<DateTime, double>> ScanRange(string series, DateTime start, DateTime end);

        IEnumerable<Tuple<DateTime, double[]>> ScanRanges(string []series, DateTime start, DateTime end);

        void Delete(string series, DateTime start, DateTime end);

        void DeleteAll(string series);

        IEnumerable<string> GetSeries(string start = null);

        IEnumerable<string> GetSeriesBy(string tag, string start = null);

        IEnumerable<string> GetSeriesTags(string series);

        void TagSeries(string series, string []tags);
    }

Data sizes – assume 1 value per minute per series, which gives us an update rate of 1,440 updates per day, or 525,600 per year. That means that for 100,000 sensors (not an uncommon amount) we need to deal with 52,560,000,000 data items per year. At 16 bytes per item, this comes to roughly 841 GB of raw data per year, before any page or tree overhead. Assuming 1 value per second, we get 86,400 values per day and 31,536,000 per year for each sensor, so 3,153,600,000,000 values per year for the 100,000 sensors, which will use about 50 TB of raw data. These are sizable numbers, although still workable for the data volume that we are talking about here.
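The arithmetic is easy to redo for other sensor counts and refresh rates. The sketch below (`SeriesSizing` is a hypothetical helper) multiplies the number of entries by the 16 bytes that each entry takes (8-byte key plus 8-byte value). It assumes a 365-day year and ignores page headers and tree overhead, so it gives a lower bound on the size on disk.

```csharp
using System;

// Hypothetical helper for back-of-the-envelope sizing.
public static class SeriesSizing
{
    public const int BytesPerEntry = 16; // 8-byte key + 8-byte value

    public static long EntriesPerYear(long sensors, long valuesPerDayPerSensor)
    {
        return sensors * valuesPerDayPerSensor * 365;
    }

    // Raw payload only: no page headers, no tree overhead.
    public static long RawBytesPerYear(long sensors, long valuesPerDayPerSensor)
    {
        return EntriesPerYear(sensors, valuesPerDayPerSensor) * BytesPerEntry;
    }
}
```

For the two scenarios above, the calls would be `SeriesSizing.RawBytesPerYear(100000, 1440)` for one value per minute and `SeriesSizing.RawBytesPerYear(100000, 86400)` for one value per second.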

Next, we’ll discuss what this is all going to look like over the wire…