After spending so much time building my own protocol, I decided to circle back to TLS itself and see whether I could get the same result with it that I got on my own. As a reminder, here is what we achieved:

Trust is established between nodes in the system via a back channel, not Public Key Infrastructure (PKI). For example, I can have:

On the client side, I can define something like this:

Server=northwind.database.local:9222;Database=Orders;Server Key=6HvG2FFNFIifEjaAfryurGtr+ucaNgHfSSfgQUi5MHM=;Client Secret Key=daZBu+vbufb6qF+RcfqpXaYwMoVajbzHic4L0ruIrcw=
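To make the shape of that connection string concrete, here is a minimal sketch of how a client might take it apart, written in Python for brevity. The `parse_connection_string` helper is hypothetical and not part of any library. It assumes only `;`-separated `Name=Value` pairs and Base64-encoded keys, as in the example above.

```python
import base64

def parse_connection_string(text):
    """Split 'Name=Value;Name=Value' pairs into a dict (hypothetical helper)."""
    options = {}
    for part in text.split(";"):
        if not part.strip():
            continue
        # Split on the first '=' only: Base64 values end with '=' padding.
        name, _, value = part.partition("=")
        options[name.strip()] = value.strip()
    return options

conn = parse_connection_string(
    "Server=northwind.database.local:9222;Database=Orders;"
    "Server Key=6HvG2FFNFIifEjaAfryurGtr+ucaNgHfSSfgQUi5MHM=;"
    "Client Secret Key=daZBu+vbufb6qF+RcfqpXaYwMoVajbzHic4L0ruIrcw="
)

# Both keys decode to 32 raw bytes, small enough to live inline.
server_key = base64.b64decode(conn["Server Key"])
client_secret = base64.b64decode(conn["Client Secret Key"])
```

Splitting on the first `=` matters here, because the Base64 padding at the end of each key is also an `=` character.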

Can we achieve this using TLS? At first glance, that doesn’t seem to be possible. After all, TLS requires certificates, but we don’t have to give up just yet. One of the (new) options for certificates is Ed25519, which is a key pair scheme that uses 256-bit keys. That is also similar to what I have used in my previous posts, under the covers. So the plan is to do the following:

- Generate key pairs using Ed25519 as before.

- Distribute the knowledge of the public keys as before.

- Generate a certificate using those keys.

- During the TLS handshake, trust only the keys that we were explicitly told to trust, disabling any PKI checks.

That sounds reasonable, right? Except that I failed.

To be more exact, I couldn’t generate a valid X509 certificate from an Ed25519 key pair. Using .NET, you can use the CertificateRequest class to generate certificates, but it only supports RSA and ECDsa keys. Safe sizes for those types are probably:

- RSA – 4096 bits (2048 bits might also be acceptable) – key size on disk: 2,348 bytes.

- ECDsa – 521 bits - key size on disk: 223 bytes.

The difference between those and the 32-byte key for Ed25519 is pretty big. It isn’t much in the grand scheme of things, for sure, but it matters. The key issue (pun intended) is that this is large enough to make it awkward to use the value directly. Consider the connection string I listed above. The keys we use there are small enough that we can just write them inline (the simplest and most obvious thing to do). The keys for either of the more commonly used RSA and ECDsa are too big for that.
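A quick back-of-the-envelope sketch of what those sizes mean once the key is written as Base64 text. It assumes the sizes quoted above are raw byte counts; the `base64_length` helper is just for illustration.

```python
import base64

def base64_length(raw_bytes):
    """Length of the padded Base64 text for a value of raw_bytes bytes."""
    return len(base64.b64encode(bytes(raw_bytes)))

# Sizes taken from the list above (assumed to be raw byte counts).
sizes = {"Ed25519": 32, "ECDsa (521 bits)": 223, "RSA (4096 bits)": 2348}

for name, size in sizes.items():
    print(f"{name}: {size} bytes -> {base64_length(size)} Base64 characters")
```

Under that assumption, the Ed25519 key fits in 44 characters, while the ECDsa and RSA keys need 300 and 3,132 characters respectively.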

Here is an ECDsa key, for example:

MIHcAgEBBEIBuF5HGV5342+1zk1/Xus4GjDx+FR rbOPrC0Q+ou5r5hz/49w9rg4l6cvz0srmlS4/Ysg H/6xa0PYKnpit02assuGgBwYFK4EEACOhgYkDgYY ABABaxs8Ur5xcIHKMuIA7oedANhY/UpHc3KX+SKc K+NIFue8WZ3YRvh1TufrUB27rzgBR6RZrEtv6yuj 2T2PtQa93ygF761r82woUKai7koACQZYzuJaGYbG dL+DQQApory0agJ140T3kbT4LJPRaUrkaZDZnpLA oNdMkUIYTG2EYmsjkTg==

And here is an RSA key:

MIIJKAIBAAKCAgEApkGWJc+Ir0Pxpk6affFIrcrRZgI8hL6yjXJyFNORJUrgnQUw i/6jAZc1UrAp690H5PLZxoq+HdHVN0/fIY5asBnj0QCV6A9LRtd3OgPNWvJtgEKw GCa0QFofKk/MTjPimUKiVHT+XgZTnTclzBP3aSZdsROUpmHs2h4eS9cRNoEnrC1u YUzaGK4OeQNLCNi1LyB6I33697+dNLVPoMJgfDnoDBV12KtpB6/pLjigYgIMwFx/ Qyx9DhnREXYst/CLQs8S/dmF+opvghhdhiUUOUwqGA/mIIbwtnhMQFKWCQXEk7km 5hNg/fyv/qwqvTkqQTZkJdj0/syPNhqnZ9RurFPkiOwPzde8I/QwOkEoOXVMboh4 Ji3Y6wwEkWSwY/9rzUK2799lzTmZlvUu2ZxNZfKxQ84vmPUCvP288KXOCU4FxIUX lujBu7aXUORtQE9oZxBSxqCSqmCEb7jGwR3JOpFlUZymK7W0jbY4rmfZL8vcDYdG r0msuXD+ggVjYzpHI7EH5MtQXYJZ2aKan5ZpSL/Lb0HsjkDLrsvMi+72FcwXH+5P Q1E30uxs5y9xOTSqff9T9x6KPAOwIpmrv4Bc3J0NgEgWiKxG9nM1+f8FkKlCRino rrF9ZrC+/l/vc67xye+Pr1tLvEFT5ARu/nR1JH/Lv/CsAU9y51wOPqD6dQUCAwEA AQKCAgBJseTWWcnitqFU8J62mM94ieCL8Q3WYZlP7Zz38lfySeCKeZRtWa/zsozm XEQY0t7+807pHPLs0OhMHlFv1GQKj09Wg4XvWWgqvLOSucC7QZ6cLfNUoUNhCxGp dbnAKGuXN9wwx7NBBljl5V4Ruf//UgxRw7YuklWk0ZjoUSrGGDX3siOtaZ17Nxwf NAB8qWKWwzSgquUmEH+kr4HeZorSRfC/+ntEUaa6y5T28g7Vosb4NYgLxJqiN3te 3B0yY6O3N4bZkyQ6TEblSdua7LCsPUCjbdi6LlZg664RDQqIcVATkwzVC14A95Mj tjkzqzU5ttxpkmP21cHdX6847QcpERgQ7NzAbjrU5UH8aBOsetaZo/1yDr5U13ah YcAq9XX6tLeAA0rUsnXKAWBQswtWIU0jXBuRRSE7xDXv+82SWEoPqZMSAv77p+uc AeogN+zzZPPet/AOERKLcGC9WoC/HT7q/H3zFAsRPoKY6qMfLFntdosc0lmRxvHv b9NXBzKdDuOiUXhdRMhL5Yld8ivvHuwRnPfcZycplSFrA9E5xo/S3RQj+Re9L0yR 8tNzjl+lcgtk8Q0CSJl6eW2Fjja5ZrvDD8qL97+WFqHR7LTTqZ7TmiT7u1MXW1Il wTuccWCQ85BzxpRbyzPXLdsxMgPCmjicX/23+srOXAk2z42bOQKCAQEAzM1Mocnd w0uoETHZH0VX29WaKVqUAecGtrj+YNujzmjLy2FPW10njgBfZgkVQETjFxUS9LBZ xv/p6fCio3NgXh3q7O/kWLxojuR8JB7n4vxoKGBwinwzi1DHp37gzjp/gGdr4mG9 8b7UeFJY8ZPz0EoXcPr3TL+69vOoLieti/Ou9W7HbpDHXYLKclFkJ0d/0AtDNaM7 kCNvI7HgC5JvCCOdGatmbB09kniQjtvE4Wh4vOg/TtH1KoKGXbC8JnjHNRjJtgqU 1mhbq36Eru8iOVME9jyHAkSPqphqeayEUdeP3C1Bc2xmrlxCQALZrAfH37ZWcf44 UuOO5TMnf5HTLwKCAQEAz9F59/xlVDHaaFpHK6ZRTQQWh6AVBDKUG2KDqRFAGQik 6YqQwJFGSo1Z+FjXzidGEHkqH6KyGtSxS6dTgqqfTC96P1rdrBab5vgdXpfSa/0S Qke2sH3eZ1vWJe95AD7AuVfsN/6IXIBHP5fWjXthGuo6U3vkkNjdjJGNxjfuMuug 
SbxjjVV6kZI6gwX2gfTQDKUT+yRjEnqGAyCcFeZXwWGryF1IseOFaNB2ATVKSqn9 oXI7AaI3ZRX3SyfOfyo3TaZEXabS1tfEg4JwIGNpx8WvRxb/X7WZi46be8u0ya4L BDJ6ZIOBf7lpvaI1Dr3dzCuPqjGQ3V/xPwGy5D8+CwKCAQEAuFVUUw6pjn0LIabX QQEd6hzgq7X+H5Q8A7yQIMewMTk7rKvCTH6U+oe1VdZ5DSazqvPp4tjThXyTol9X U3ymUS/mYiotQf0asvpODgjPOAttCGJ9CPhvQEaN3WEioBwg5IaxoMnOt8bF4CJm MdG0ElaNsMACVE8BzgJS7nACEURcxkVWNVsURkNRSgGd/oipLqzkamOoWby67MrN 2DyNuSqs3QzbnBXZdHsVya9fDm8EtSroyF3Lp95hZ/SJ9KqiylSsQTBW9IBrefjf HcDY8fWaMrMZ5V2mXarfsvInCq7VqhwFnAkGhos9ifXGy8MZEG9CcUmakmiFFiCr vXOYOwKCAQADL9Yr/F3dbapIwWGoBLPod3CVAdpwpwnoZZlZRV9zQtOslShlG5U1 XXeMvGgKzEVhyUnhFFCg4rQZUeaQ8Wbh9zRrtkwB8JLRduqUYcWjTE00YP8nM7bu ZNUi3cpAO7Ye4X9I2Ilkyb7N9dkfcE3r6L2ePB8kLX8wQacn7AGmHEDoAJCSQUZQ 5yooijXehk+OchWdW1B9nw1hDOX33AFqgMHun6eWusN3+QJmQFf0TykJicPn4YHx 9eVF7MVY49/XO/5+ZSmEi+iCj8SCaqPboWdvsqWV5SYGotg1jMkn8phOpyuDURTy TXiWpN8la7n0AJMCbCIpkugTLEZ/A41DAoIBAAr73RhOZWDi40D6g+Z2KLHMtLdn xHMEkT0bzRZYlr0WGQpP/GPKJummDHuv/fRq2qXhML7yh7JK8JFxYU94fW2Ya1tx lYa5xtcboQpBLfDvvvI4T4H1FE4kXeOoO46AtZ6dFZyg3hgKlaJkR+pFPLr5Aeak w9+6UCK8v72esoKzCMxQzt3L2euYRt4zTKL3NnrgS7i5w56h2UvP1rDo3P0RVoqc knS1ToamVL2JaPnf/g+gUUVZyya9pyu9RP8MIcd1cvnxZec8JaN89WWnsA2JJbPw stYBnWMvLFabPtPXVcsLrWMEmLFI2yn+fU4YTviwRSs/SrprXDdsqZO2xd8=

Note that in both cases, we are looking at the private key only. As you can imagine, this isn’t really something viable. We will need to store that separately, load it from a file, etc.

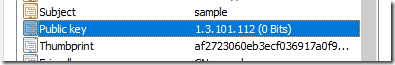

I tried generating Ed25519 keys using the built-in .NET API as well as the Bouncy Castle one. Bouncy Castle is a well-known and very useful cryptographic library, and it supports Ed25519. I spent quite some time trying to get it to work. You can see the code here. Unfortunately, while I’m able to generate a certificate, it doesn’t appear to be valid. Here is what this looks like:

Using RSA, however, did generate viable certificates, and didn’t take a lot of code at all:

We store the actual key in a file, and we generate a self-signed certificate on the fly. Great. I did try to use the ECDsa option, which generates a much smaller key, but I ran into severe issues there. I could generate the key, but I couldn’t use the certificate. I ran into a host of issues around permissions, somehow.

You can try to figure out more details from this issue. What I took from it is that in order to use ECDsa on Windows, I would need to jump through hoops. And I don’t know if Ed25519 will even work, or how to make it work.

As an aside, I posted the code to generate the Ed25519 certificates. If you can show me how to make it work, that would be great.

So we are left with using RSA, with the largest possible key. That isn’t fun, but we can make it work. Let’s take a look at the connection string again, what if we change it so it will look like this?

Server=northwind.database.local:9222;Database=Orders;Server Key Hash=6HvG2FFNFIifEjaAfryurGtr+ucaNgHfSSfgQUi5MHM=;Client Key=client.key

I marked the pieces that were changed. The key observation here is that I don’t need to hold the actual public key here, I just need to recognize it. I can do that by simply storing the SHA256 hash of the public key, which ensures that I always have the same length, regardless of what key type I’m using. For that matter, I think that this is something that I want to do regardless, because if I do manage to fix the other key types, I could still use the same approach. All values in SHA256 will hash to the same length, obviously.
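A small sketch of the fixed-length property, in Python. The byte strings here are placeholders standing in for encoded public keys of different sizes, not real keys, and `key_hash` is a hypothetical helper name.

```python
import base64
import hashlib

def key_hash(public_key_bytes):
    """Base64-encoded SHA-256 digest of the encoded public key."""
    digest = hashlib.sha256(public_key_bytes).digest()
    return base64.b64encode(digest).decode("ascii")

# Placeholder byte strings standing in for public keys of different sizes.
small_key = bytes(range(32))        # 32 bytes, roughly Ed25519-sized
large_key = bytes(range(256)) * 2   # 512 bytes, roughly RSA-sized

small_hash = key_hash(small_key)
large_hash = key_hash(large_key)

# Both hashes are 44 Base64 characters, whatever the input size.
print(len(small_hash), len(large_hash))
```

The identifier in the connection string therefore keeps the same shape no matter which key type ends up behind it.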

After all of that, what do we have?

We generate a key pair and store it, and we let the other side know about the public key hash as the identifier. Then we dynamically generate a certificate with the stored key. Let’s say that we do that once per startup. That certificate is going to be different each run, but we don’t actually care. We can safely authenticate the other side using the (persistent) key pair by validating the public key hash.
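The validation step can be sketched as follows, in Python. This is an illustration under assumptions, not the code from this post: `is_trusted_peer` is a hypothetical helper, and pulling the public key bytes out of the peer’s certificate during the handshake is left out. Note that it is the public key that gets hashed, not the whole certificate, since the certificate changes on every run.

```python
import base64
import hashlib
import hmac

def is_trusted_peer(peer_public_key, expected_hash_b64):
    """Return True if the peer's public key matches the hash we were given.

    peer_public_key: the encoded public key taken from the peer certificate
    (extraction is not shown here).
    expected_hash_b64: the Base64 SHA-256 hash distributed via the back channel.
    """
    actual = hashlib.sha256(peer_public_key).digest()
    expected = base64.b64decode(expected_hash_b64)
    # Constant-time comparison of the two digests.
    return hmac.compare_digest(actual, expected)

# Placeholder key bytes, standing in for a real public key.
known_key = b"placeholder public key bytes"
pinned_hash = base64.b64encode(hashlib.sha256(known_key).digest()).decode("ascii")

accepted = is_trusted_peer(known_key, pinned_hash)
rejected = is_trusted_peer(b"some other key", pinned_hash)
```

Because only the key is pinned, regenerating the certificate at startup doesn’t require redistributing anything.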

Here is what this will look like in code from the client perspective:

And here is what the server is doing:

As you can see, this is very similar to what I ended up with in my secured protocol, but it utilizes TLS and all the weight behind it to achieve the same goal. A really important aspect of this is that we can actually connect to the server using something like openssl s_client -connect, which can be really nice for debugging purposes.

However, the weight of TLS is also an issue. I failed to create Ed25519 certificates, which was my original goal. I couldn’t get it to work using ECDsa certificates and had to use RSA ones with the biggest keys. It is clear that a lot of those issues are because we are running on a particular operating system, which means that this protocol is still subject to the whims of the environment. I have also not done everything that is required to ensure that there will not be any remote calls as part of the TLS handshake in this case. That can actually be quite complex to ensure, to be honest. Given that these are self-signed (and pretty bare-bones) certificates, there shouldn’t be any, but you know what they say about assumptions.


The end goal is that we are now able to get roughly the same experience using TLS as the underlying communication mechanism, without dealing with certificates directly. We can use standard tooling to access the server, which is great.

Note that this doesn’t address something like browser access, which will not be trusted, obviously. For that, we have to go back to Let’s Encrypt or some other trusted CA, and we are back in PKI land.

![image_thumb1[1] image_thumb1[1]](https://ayende.com/blog/Images/Open-Live-Writer/3888eb7042bd_129BA/image_thumb1[1]_thumb.png)

![image_thumb3[1] image_thumb3[1]](https://ayende.com/blog/Images/Open-Live-Writer/3888eb7042bd_129BA/image_thumb3[1]_thumb.png)

![image_thumb2[1] image_thumb2[1]](https://ayende.com/blog/Images/Open-Live-Writer/3888eb7042bd_129BA/image_thumb2[1]_thumb.png)