In RavenDB 5.2 we made a small but significant change to how RavenDB processes cluster wide transactions. A cluster wide transaction in RavenDB prior to the 5.2 release would look like this:

A cluster wide transaction in RavenDB allows you to mix document writes with compare exchange values. The difference between the two is that documents are stored at the database level, while compare exchange values are stored at the cluster level.

As such, a cluster wide transaction can only fail if the compare exchange values don't match the expected values. To put it bluntly, optimistic concurrency on a document within a cluster wide transaction is not going to work (in fact, we'll throw an error if you try). Unfortunately, that can be tricky to understand, and we have seen users in the field who made the wrong assumptions about how cluster wide transactions interact with documents.
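To make that failure rule concrete, here is a minimal in-memory sketch. It is not RavenDB code and does not use the RavenDB client; `ToyCompareExchange` and its methods are hypothetical names, and the only thing it models is the rule described above: the transaction is rejected if, and only if, a compare exchange value is not at the index the caller expected.

```python
class ToyCompareExchange:
    """Hypothetical stand-in for cluster-level compare exchange values."""

    def __init__(self):
        self._values = {}     # key -> (index, value)
        self._next_index = 0  # grows with every accepted write

    def get(self, key):
        # Index 0 means "this key does not exist yet".
        return self._values.get(key, (0, None))

    def commit(self, expected, writes):
        """Apply `writes` only if every key in `expected` is at the given index."""
        for key, index in expected.items():
            if self.get(key)[0] != index:
                return False  # someone else changed the value: reject everything
        for key, value in writes.items():
            self._next_index += 1
            self._values[key] = (self._next_index, value)
        return True


cluster = ToyCompareExchange()

# Two callers race to reserve the same username; both expect the key to be absent.
first = cluster.commit({"usernames/ayende": 0}, {"usernames/ayende": "users/1"})
second = cluster.commit({"usernames/ayende": 0}, {"usernames/ayende": "users/2"})
owner = cluster.get("usernames/ayende")[1]
```

In this toy model the first reservation is accepted and the second is rejected. Note that nothing here validates documents, which is exactly the gap the rest of this post is about.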

The most common mistake that we saw was that people expected changes to documents to be validated as part of the cluster wide transaction. However, RavenDB is a multi-master database, so changes can come from cluster wide transactions, normal transactions, and replication from isolated locations. That makes it challenging to match the expected behavior. Leaving the behavior as it is, however, means that there is a potential pitfall for users to stumble into. That isn't where we want to be, so we fixed it.

Let’s consider the following piece of code:

Notice the code on line 8: we are creating a reservation for the username by allocating a document id for the new document. That is the kind of code that would work when running on a single server, but not in a cluster. The change we made in RavenDB 5.2 means that we are now able to make code this simple work across the cluster in a consistent and usable manner.

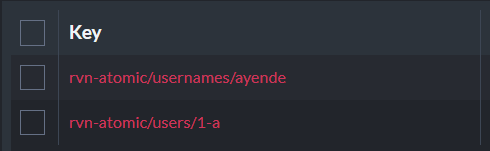

Behind the scenes, RavenDB creates these guard compare exchange values for the documents involved. They are used to validate the state of the transaction across the cluster.
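As a rough mental model of what a guard does, consider the toy below. It is a sketch under stated assumptions, not RavenDB's implementation: `ToyCluster`, `cluster_wide_put`, and `ConcurrencyError` are hypothetical names, and the guard is modeled simply as one counter per document id that every cluster wide write must match and then advance.

```python
class ConcurrencyError(Exception):
    """Raised by the toy model when a write is based on stale state."""


class ToyCluster:
    """Hypothetical model: one guard index per document id."""

    def __init__(self):
        self.guards = {}      # document id -> guard index
        self.documents = {}   # document id -> (body, guard index at write time)
        self._raft_index = 0  # stand-in for the cluster-wide commit index

    def load(self, doc_id):
        # Returns the body plus the guard index the reader observed (0 = absent).
        return self.documents.get(doc_id, (None, 0))

    def cluster_wide_put(self, doc_id, body, seen_index):
        # The write is accepted only if the guard is where the reader saw it.
        if self.guards.get(doc_id, 0) != seen_index:
            raise ConcurrencyError(doc_id)
        self._raft_index += 1
        self.guards[doc_id] = self._raft_index
        self.documents[doc_id] = (body, self._raft_index)
        return self._raft_index


cluster = ToyCluster()
_, seen = cluster.load("usernames/ayende")  # not there yet, so seen == 0
cluster.cluster_wide_put("usernames/ayende", {"owner": "users/1"}, seen)

try:
    # A second writer that also believed the document was absent.
    cluster.cluster_wide_put("usernames/ayende", {"owner": "users/2"}, 0)
    second_write_rejected = False
except ConcurrencyError:
    second_write_rejected = True
```

The point of the model is that the caller never touches a compare exchange value directly. Writing the document is enough, and the guard bookkeeping happens on its behalf.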

The change vectors of the documents are also modified, like so: "RAFT:2-XiNix+yo0E+sArbTyoLLyQ,TRXN:559-bgXYYHaYiEWKR2mpjurQ1A".

The idea is that the TRXN notation gives us the relevant compare exchange value index, which we can use to ensure that the cluster wide state of the document matches the expected state at the time of the read.
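For illustration, the sample change vector above can be pulled apart with a few lines of string handling. This is only a sketch: the `TAG:number-id` layout is inferred from that single sample string, and `parse_change_vector` and `trxn_index` are hypothetical helpers rather than part of any RavenDB client.

```python
def parse_change_vector(change_vector):
    """Split a change vector string into {tag: (number, id)} entries.

    Assumes each comma-separated entry looks like TAG:number-id,
    as in the sample shown in this post.
    """
    entries = {}
    for part in change_vector.split(","):
        tag, rest = part.strip().split(":", 1)
        number, node_id = rest.split("-", 1)  # split on the first dash only
        entries[tag] = (int(number), node_id)
    return entries


def trxn_index(change_vector):
    """Return the number carried by the TRXN entry, or None if there is none."""
    entry = parse_change_vector(change_vector).get("TRXN")
    return entry[0] if entry else None


sample = "RAFT:2-XiNix+yo0E+sArbTyoLLyQ,TRXN:559-bgXYYHaYiEWKR2mpjurQ1A"
parsed = parse_change_vector(sample)
index = trxn_index(sample)  # 559 for the sample above
```

With the index in hand, a reader of the document knows which version of the guard it observed, which is what allows the later write to be validated against the cluster wide state.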

In short, you can skip using compare exchange values directly in cluster wide transactions. RavenDB will understand what you mean, create the relevant entries, and manage them for you. That also works when you mix cluster wide and regular transactions.

A common scenario for the code above is that you'll include the usernames/ayende reservation document whenever you modify the user's name, and that will always use a cluster wide transaction. However, when modifying the user without changing the user name, you can skip the cost of a cluster wide transaction.