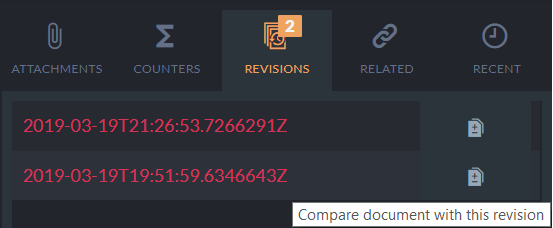

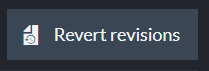

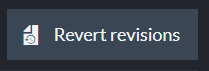

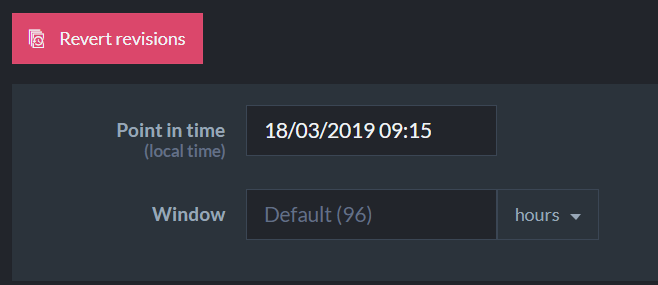

The feature outlined in this post is hidden behind a small button in a relatively obscure part of the RavenDB Studio (Database > Settings > Document Revisions). You can see what it looks like on the right. Despite its unassuming appearance, this is a pretty important feature. Revision Revert is a feature that we wish no one would ever use, though, which makes it an interesting one.

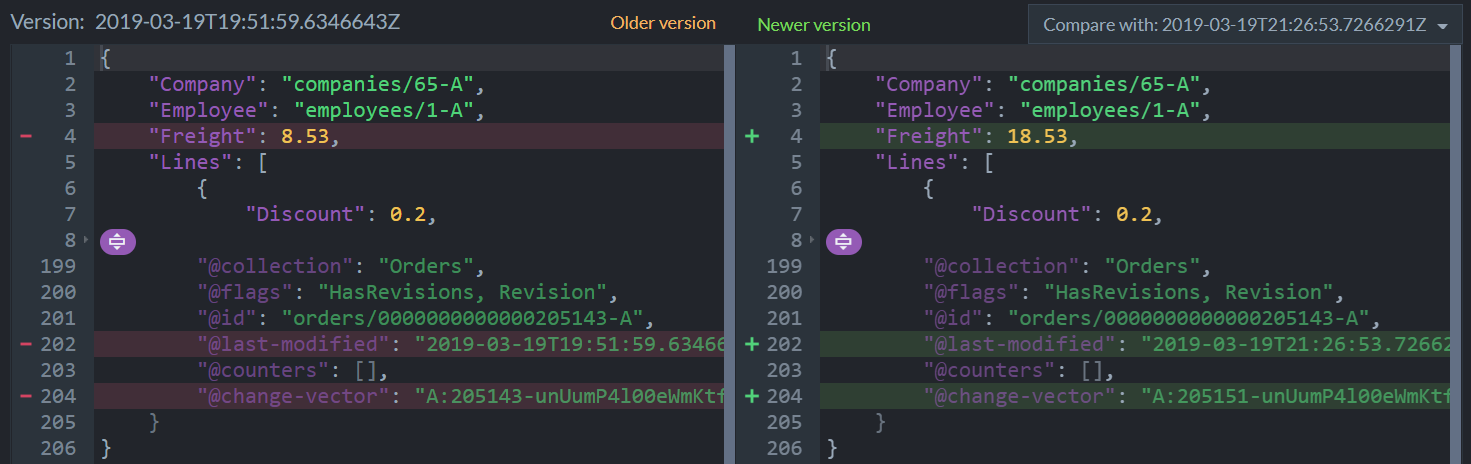

Revision Revert allows you to take your entire database back to a particular moment in time. Document changes will be undone, deleted documents will be restored, new documents will be removed, etc.
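To make those three cases concrete, here is a minimal sketch of the decision for each document. It is not RavenDB's implementation: the `Revision` class, the `state_at` function, and the convention that a `None` body stands for a delete are all assumptions made up for this illustration.

```python
from dataclasses import dataclass
from datetime import datetime, timezone
from typing import Dict, List, Optional


@dataclass
class Revision:
    doc_id: str
    modified_utc: datetime
    body: Optional[dict]  # assumption: None stands for a delete revision


def state_at(revisions: List[Revision], cutoff_utc: datetime) -> Dict[str, Optional[dict]]:
    """Map each document id to its body as of the cutoff; None means 'should not exist'."""
    target: Dict[str, Optional[dict]] = {}
    for rev in sorted(revisions, key=lambda r: r.modified_utc):
        if rev.modified_utc <= cutoff_utc:
            # The newest revision at or before the cutoff wins.
            target[rev.doc_id] = rev.body
        else:
            # Only seen after the cutoff: the document is new and gets removed.
            target.setdefault(rev.doc_id, None)
    return target


def at(hour: int, minute: int) -> datetime:
    return datetime(2019, 3, 1, hour, minute, tzinfo=timezone.utc)


history = [
    Revision("users/1", at(9, 0), {"name": "Ann"}),
    Revision("users/1", at(10, 5), {"name": "Oops"}),  # bad update, will be undone
    Revision("users/2", at(9, 0), {"name": "Bob"}),
    Revision("users/2", at(10, 6), None),              # accidental delete, will be restored
    Revision("users/3", at(10, 7), {"name": "Eve"}),   # created after the cutoff, will be removed
]

reverted = state_at(history, cutoff_utc=at(10, 0))
```

With a cutoff of 10:00, `users/1` goes back to its earlier body, `users/2` comes back from the dead, and `users/3` is marked for removal.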

So far, this isn’t a surprising feature; being able to restore to a point in time is something that many other databases offer. How is this feature different? In most systems, a point in time restore requires you to… well, restore. In a large database, that can take a lot of time. Revision Revert is an alternative to that. Instead of restoring from scratch, it utilizes the revisions feature in RavenDB to allow you to just hit the time machine button and go back to the desired time.

The common use case for that is immediately after the “Oops” moment. You have run a query without specifying a where clause, deployed a bad version of your app that removed important fields, etc.

Revision Revert is an online operation; you don’t need to take the database down. In fact, you can still serve reads while the process is going on. Since you’ll typically need to go back only a relatively short period, this is a very quick process.

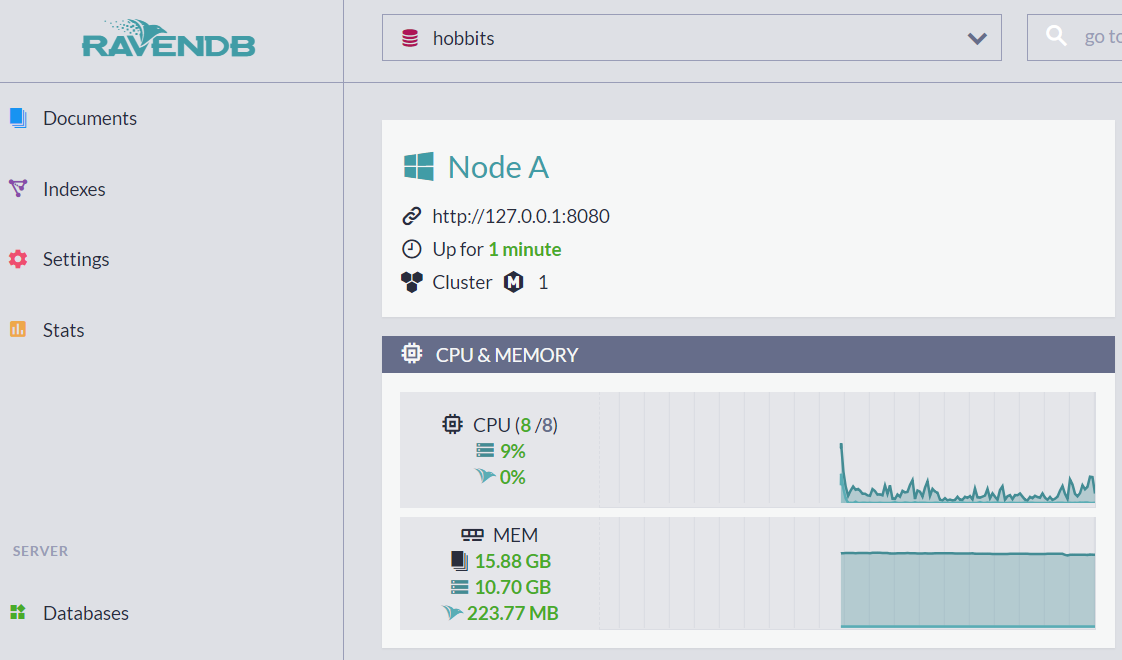

In a distributed system, the admin will invoke this process on one of the nodes in the system and the reverts will be applied on that node and then replicated from there to all the other nodes in the system. We have made every attempt to make what is likely to be a pretty stressful event as easy as possible.

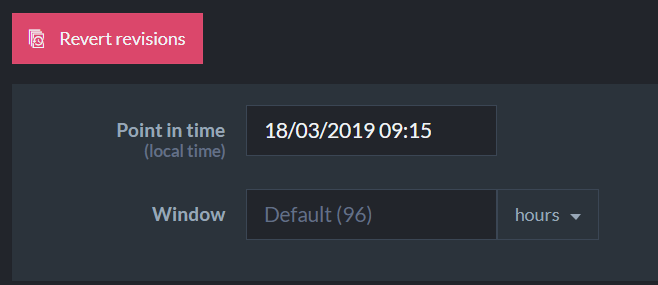

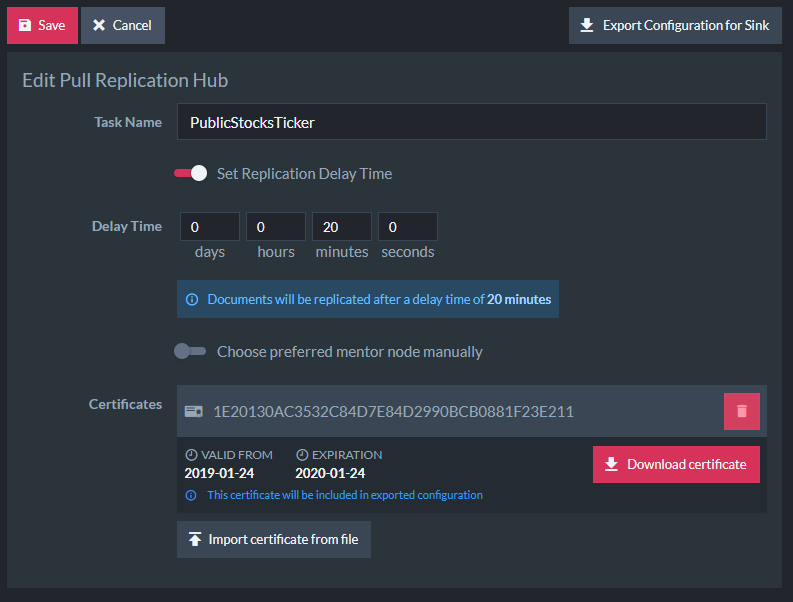

You might have noticed the Window configuration in the screen above. What is that about?

To be honest, this is something that we expect most users to never really care about. It is there for correctness’ sake in a distributed environment. Let’s dig a little deeper into this feature.

The first thing we need to talk about is time. The point in time that we’ll restore to is specified in the user’s local time. This is converted into UTC internally and used to compute the cutoff point for the revert. In a distributed system, it is possible (even likely) that different machines on the network will have different clocks. (Note that while RavenDB will work just fine and do the Right Thing if your nodes have different timezones, we have found that really confusing. Better to keep all nodes on the same timezone and clock sync system.)
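As a small illustration of that conversion, here is a sketch using only the Python standard library. The `to_utc_cutoff` helper and the fixed UTC offset are assumptions for the example; the point is simply that the cutoff used for comparison is a UTC instant, whatever the wall clock on the admin's machine says.

```python
from datetime import datetime, timedelta, timezone


def to_utc_cutoff(local_time: datetime, utc_offset_hours: float) -> datetime:
    """Interpret a naive wall-clock time at the given UTC offset and return it in UTC."""
    local_zone = timezone(timedelta(hours=utc_offset_hours))
    return local_time.replace(tzinfo=local_zone).astimezone(timezone.utc)


# An admin at UTC-5 picks 14:30 local time as the point to go back to.
cutoff = to_utc_cutoff(datetime(2019, 3, 1, 14, 30), utc_offset_hours=-5)
print(cutoff.isoformat())  # 2019-03-01T19:30:00+00:00
```

Every revision timestamp is then compared against this single UTC value, which is why the timezone of each node does not matter but the accuracy of each node's clock does.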

This means that one problem for this feature is that changes happening on two machines at the same time may have different timestamps (these are in UTC; the local time is not relevant). You need to take that into account when using Revision Revert, since the timestamp is what RavenDB uses to decide what stays and what goes.

The second problem is that just because two updates happened at the same time, it doesn’t mean that we learned about them at the same time. What I mean here is that two changes that happened at the same time on different machines may have reached a particular node at very different times. That is where the Window option comes into play. We scan the revisions log for all changes to the system, and we scan them in the order that we learned about them. By default, we’ll go back 4 days until we are sure that there aren’t any revisions that we got out of order and missed.
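Here is a rough sketch of why the window matters. It is not RavenDB's actual code: the log layout, the `scan_for_revert` function, and the exact stop condition are assumptions for illustration. The log is kept in arrival order and scanned from the newest arrival backwards, stopping at the first revision whose timestamp is older than the cutoff minus the window.

```python
from datetime import datetime, timedelta, timezone
from typing import List, Tuple

LogEntry = Tuple[str, datetime]  # (document id, revision timestamp in UTC)


def scan_for_revert(log: List[LogEntry], cutoff_utc: datetime, window: timedelta) -> List[str]:
    """Return ids of documents changed after the cutoff, scanning newest arrival first."""
    stop_before = cutoff_utc - window
    to_revert: List[str] = []
    for doc_id, modified_utc in reversed(log):
        if modified_utc < stop_before:
            break  # old enough that we assume nothing relevant arrived before it
        if modified_utc > cutoff_utc and doc_id not in to_revert:
            to_revert.append(doc_id)
    return to_revert


def at(hour: int, minute: int) -> datetime:
    return datetime(2019, 3, 1, hour, minute, tzinfo=timezone.utc)


# Arrival order on this node; orders/3 was written at 09:58 elsewhere but replicated late.
log = [
    ("orders/1", at(10, 0)),
    ("orders/2", at(10, 5)),
    ("orders/3", at(9, 58)),
    ("orders/4", at(10, 10)),
]

no_window = scan_for_revert(log, cutoff_utc=at(10, 3), window=timedelta(0))
with_window = scan_for_revert(log, cutoff_utc=at(10, 3), window=timedelta(minutes=10))
```

Without a window, the scan stops as soon as it hits the late-arriving `orders/3` and never sees that `orders/2` changed after the cutoff. With a 10 minute window, it keeps going and picks up both `orders/4` and `orders/2`.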

A few additional things about this feature. Obviously, it requires that you have revisions enabled (and have enough revisions capacity to go back far enough in time, naturally). It supports live restores and operates nicely in a distributed environment. Note that if you are doing a Revision Revert and not all your documents have revisions enabled, only those that have revisions will be reverted.

Currently, we apply this revert globally. We are considering allowing you to select specific collections to revert, but I’m not sure how useful that would be in practice.