Usually when we talk about authentication, we think about how we authenticate a user, but in this case, we mean authenticating the encryption itself. You might think that this is something a cryptographer needs to do to prove a new algorithm, but it actually refers to something quite different.

Consider the following encrypted cookie: {"Qdph":"Ruhq","Dgplq":"Q"}

This was encrypted using Caesar’s cipher, with the secret key 3. Because it is encrypted, no one can figure out what is written inside it (let’s assume that this is the case and that this is actually a high-security method; showing how things actually work with bits is too cumbersome).

The problem is that we handed an opaque block to the user (who is not to be trusted) and we will get it back at some later point in time. This is great, except for the part where the user might modify the data. Now, sure, they don’t know what the encryption key is, but let’s assume that they have a good idea about the structure of the data, which is something like:

{"Name": <user name>, "Admin": <N / Y>}

Given this knowledge, I can start mutating the end of the encrypted buffer. Because the decryption of the data is a pure transformation function, it doesn’t matter to it that the data has changed, and it will “decrypt” it just fine.

Now, in many cases that would decrypt to something totally wrong. Changing the encrypted value to be: {"Qdph":"Ruhq","Dgplq":"R"} will give us a decrypted value of “Admin”: “O”, which is obviously not valid and will cause an error. But all I have to do is keep trying until I send a modified encrypted value that decrypts to “Admin”: “Y” (with a key of 3, that happens when the last letter is “B”).
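To make the attack concrete, here is a minimal sketch in Python of the toy scheme described above. The helper names (`caesar`, `server_is_admin`, `forge`) are made up for illustration, and the "server" simply trusts whatever decrypts into the expected format. Note that the attacker code never touches the key; it only resubmits modified cookies and watches the result.

```python
import json
import string

KEY = 3  # the "secret" key from the example; only the server uses it

def caesar(text: str, shift: int) -> str:
    """Toy cipher: rotate ASCII letters by `shift`, leave everything else alone."""
    out = []
    for ch in text:
        if ch in string.ascii_uppercase:
            out.append(chr((ord(ch) - ord("A") + shift) % 26 + ord("A")))
        elif ch in string.ascii_lowercase:
            out.append(chr((ord(ch) - ord("a") + shift) % 26 + ord("a")))
        else:
            out.append(ch)
    return "".join(out)

def server_is_admin(cookie: str) -> bool:
    """Server side: decrypt, check the format, and trust the result."""
    data = json.loads(caesar(cookie, -KEY))
    if data["Admin"] not in ("Y", "N"):
        raise ValueError("invalid cookie")
    return data["Admin"] == "Y"

def forge(cookie: str):
    """Attacker side: mutate the flag character until the server accepts it."""
    pos = len(cookie) - 3  # the single character before the closing '"}'
    for guess in string.ascii_uppercase:
        candidate = cookie[:pos] + guess + cookie[pos + 1:]
        try:
            if server_is_admin(candidate):
                return candidate
        except ValueError:
            continue  # decrypted to garbage, try the next letter
    return None

cookie = caesar('{"Name":"Oren","Admin":"N"}', KEY)
forged = forge(cookie)
print(cookie)
print(forged)
```

At most 26 attempts are needed here, and nothing in the decryption step can tell the forged cookie apart from one the server issued.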

This works because, in many cases, developers assume that if a value was properly decrypted and has the proper format, it is known to be valid. This is not the case, and there have been many real-world attacks on such systems.

The solution is to add, as part of the encryption algorithm itself, a step where we verify a signature on the data. This signature is computed using the secret key, so if the data was modified and you don’t have the secret key, you will not be able to fix the signature, and the decryption process will fail. In other words, we have authenticated that the value was indeed encrypted using the secret key, and wasn’t modified by a third party somewhere along the way.
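As a rough sketch of the idea, the snippet below bolts an HMAC-SHA256 tag onto the same toy Caesar cipher (an encrypt-then-MAC arrangement). This is only an illustration: `MAC_KEY`, `seal` and `unseal` are hypothetical names, the cipher is still a toy, and real systems typically use an AEAD construction such as AES-GCM or ChaCha20-Poly1305 that does both jobs in a single primitive.

```python
import hashlib
import hmac

SHIFT = 3                             # the toy Caesar key from the example
MAC_KEY = b"hypothetical-mac-secret"  # assumption: a secret only the server knows

def caesar(text: str, shift: int) -> str:
    """Toy cipher: rotate ASCII letters, leave everything else alone."""
    def rot(ch: str, base: int) -> str:
        return chr((ord(ch) - base + shift) % 26 + base)
    return "".join(
        rot(c, 65) if "A" <= c <= "Z" else rot(c, 97) if "a" <= c <= "z" else c
        for c in text
    )

def tag_for(ciphertext: str) -> str:
    """Keyed tag over the ciphertext; cannot be recomputed without MAC_KEY."""
    return hmac.new(MAC_KEY, ciphertext.encode(), hashlib.sha256).hexdigest()

def seal(plaintext: str) -> str:
    ciphertext = caesar(plaintext, SHIFT)
    return ciphertext + "." + tag_for(ciphertext)

def unseal(sealed: str) -> str:
    ciphertext, _, tag = sealed.rpartition(".")
    # Verify the tag BEFORE decrypting, using a constant-time comparison.
    if not hmac.compare_digest(tag.encode(), tag_for(ciphertext).encode()):
        raise ValueError("authentication failed: cookie was modified")
    return caesar(ciphertext, -SHIFT)

sealed = seal('{"Name":"Oren","Admin":"N"}')
tampered = sealed.replace('"Q"}', '"B"}')  # the same mutation the attacker tried

print(unseal(sealed))
try:
    unseal(tampered)
except ValueError as err:
    print(err)
```

The untouched cookie round-trips, while the tampered one is rejected before any decryption happens, so the attacker no longer gets to probe the decrypted format at all.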

There has been a case where we wrote to a temporary file without also doing authenticated encryption and a case where we validated a hash manually while also using authenticated encryption. Unfortunately, they did not balance each other out, so we had to fix it. Luckily, it was a pretty easy fix.

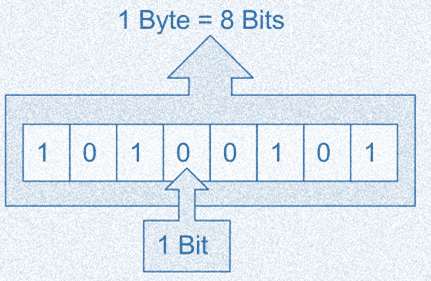

This issue in the RavenDB Security Report is pretty simple. When we generate a certificate, we need to generate a certificate serial number. We were using a random number that is 64 bits in length, but that is too small. The problem is the birthday attack: for a 64-bit number, you only need about 5 billion attempts to have an even chance of generating a collision. In modern cryptography, that is actually a very low security threshold.
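The 5 billion figure falls out of the standard birthday approximation: for a space of N equally likely values, a collision becomes likely with probability p after roughly sqrt(2 · N · ln(1 / (1 − p))) random draws. Here is a small sketch that evaluates it; the function name is made up, and the 128-bit line is only there for comparison, not a statement about what the fix used.

```python
import math

def birthday_attempts(bits: int, probability: float = 0.5) -> float:
    """Approximate number of random draws from a 2**bits space
    before a collision occurs with the given probability."""
    space = 2 ** bits
    return math.sqrt(2 * space * math.log(1 / (1 - probability)))

print(f"64-bit:  {birthday_attempts(64):.3e}")   # about 5.06e9
print(f"128-bit: {birthday_attempts(128):.3e}")  # about 2.17e19
```

Doubling the number of bits squares the amount of work needed for a collision, which is why a wider random serial number moves this well out of reach.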
