The low-level trie challenge is to store a trie inside a 32KB buffer and support read, write and delete operations on it. The catch is that you can keep no data about the trie other than the buffer itself. The full implementation is available on GitHub.

Because of that, you need to think very differently about how you approach the solution. Here is the header file with the main trie operations:

You’ll note that the only field that I have on the class is a 32KB buffer. All the data is stored inside it. In order to handle that, we are going to treat this buffer as our main memory and “allocate” our data inside it. I guess we could do this by using C++ allocators, but that seems very heavyweight and I don’t think it would work very well for this scenario. Instead, we define the following basic structures:

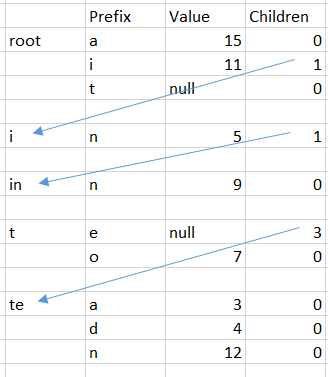

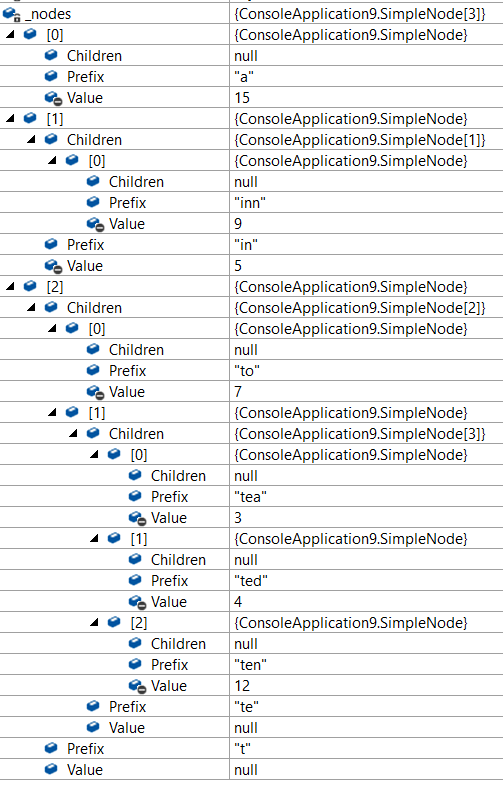

The trie header is located at the very beginning of the buffer and contains the basic information about the trie. Each node then has its own node header, which describes that specific entry. In particular, the offset fields in the node_header_info are actually pointers into the buffer itself, and the next_alloc field in the trie_header_info is used to “allocate” additional memory inside the buffer.
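To make the layout concrete, here is a minimal sketch of what such structures might look like. Only `trie_header_info`, `node_header_info`, `next_alloc` and `children_offset` come from the text; every other field, the `load`/`store` helpers and the choice of 16-bit offsets are assumptions for illustration, not the actual definitions.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <cstring>

constexpr std::size_t kBufferSize = 32 * 1024;  // 32KB, as in the challenge

// Sits at offset 0 of the buffer.
struct trie_header_info {
    std::uint16_t next_alloc;  // offset of the next free byte (named in the text)
    std::uint16_t items;       // hypothetical: number of stored keys
    std::uint16_t used_bytes;  // hypothetical: bytes that are still reachable
};

// One per node. A 16-bit offset is enough to address any byte of a 32KB buffer.
struct node_header_info {
    std::uint16_t key_offset;       // hypothetical: where the key bytes live
    std::uint16_t children_offset;  // where the child-offset array lives (named in the text)
    std::uint8_t  key_size;         // hypothetical
    std::uint8_t  children_count;   // hypothetical
};

// Hypothetical helpers: copy a struct out of / into the buffer by offset.
// memcpy sidesteps alignment problems, since nothing in the buffer is aligned.
template <typename T>
T load(const std::uint8_t* buf, std::uint16_t offset) {
    assert(offset + sizeof(T) <= kBufferSize);
    T out;
    std::memcpy(&out, buf + offset, sizeof(T));
    return out;
}

template <typename T>
void store(std::uint8_t* buf, std::uint16_t offset, const T& value) {
    assert(offset + sizeof(T) <= kBufferSize);
    std::memcpy(buf + offset, &value, sizeof(T));
}
```

With helpers like these, "following a pointer" is just `load<node_header_info>(buf, some_offset)`.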

The idea is that whenever we need more memory, we just take it from the top of the buffer, until we run out of room. Here is how I add the very first entry:

I’m forcing it into a specific well known place in the buffer (line 4), and then I handle all the rest of the memory assignments from there. The same holds true when we start getting into the nested structure. Each entry has an array of offsets that point to its children, and that array is pointed to by the children_offset value.
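The allocation scheme described above is essentially a bump allocator. The following is a rough sketch of the idea, not the code from the post: `bump_buffer`, `kHeaderSize` and `kRootOffset` are hypothetical names, the header is reduced to just `next_alloc`, and the sketch assumes allocations grow upward from the lowest unused offset.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <cstring>

constexpr std::size_t kBufferSize = 32 * 1024;
// In this sketch the header is only the 2-byte next_alloc value.
constexpr std::uint16_t kHeaderSize = sizeof(std::uint16_t);
// Hypothetical well-known place for the first entry: right after the header.
constexpr std::uint16_t kRootOffset = kHeaderSize;

struct bump_buffer {
    std::uint8_t data[kBufferSize];

    std::uint16_t next_alloc() const {
        std::uint16_t v;
        std::memcpy(&v, data, sizeof v);
        return v;
    }

    void set_next_alloc(std::uint16_t v) { std::memcpy(data, &v, sizeof v); }

    // Empty buffer: the first allocation will land exactly at kRootOffset.
    void reset() {
        std::memset(data, 0, sizeof data);
        set_next_alloc(kHeaderSize);
    }

    // Hands out `size` bytes and bumps next_alloc. Returns false when the
    // buffer is full, which is the point where the caller would defrag.
    bool allocate(std::uint16_t size, std::uint16_t* offset) {
        const std::uint16_t next = next_alloc();
        assert(next <= kBufferSize);
        if (size > kBufferSize - next) return false;
        *offset = next;
        set_next_alloc(static_cast<std::uint16_t>(next + size));
        return true;
    }
};
```

Nothing is ever freed individually here; stale regions simply stay behind until the whole buffer is compacted.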

When we need to add a new child to a node, we don’t modify the old array. Instead, we allocate a completely new one, add the new value and sort the whole thing.

The sorting thing is actually fun, because it means that when I’m reading from the trie, I can reduce the amount of work that I have to do per level.
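As an illustration of that copy-and-sort step, here is a small sketch. It assumes that the first key byte of each node is stored at the node's own offset, that the children array is a plain run of 16-bit offsets, and that the caller has already checked there is room in the buffer. The name `add_child` and its signature are hypothetical.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <cstring>

constexpr std::size_t kMaxChildren = 256;  // at most one child per byte value

// Builds a NEW children array containing the old entries plus new_child,
// sorted by each child's first key byte. The old array is left untouched
// (it becomes garbage). Returns the offset of the new array.
std::uint16_t add_child(std::uint8_t* buf, std::uint16_t& next_alloc,
                        std::uint16_t old_array, std::size_t old_count,
                        std::uint16_t new_child) {
    assert(old_count < kMaxChildren);

    // Work on a stack copy so unaligned buffer contents are never aliased.
    std::uint16_t children[kMaxChildren];
    std::memcpy(children, buf + old_array, old_count * sizeof(std::uint16_t));
    children[old_count] = new_child;
    const std::size_t count = old_count + 1;

    // Assumption: buf[child_offset] holds the child's first key byte.
    std::sort(children, children + count,
              [buf](std::uint16_t a, std::uint16_t b) { return buf[a] < buf[b]; });

    const std::uint16_t new_array = next_alloc;
    const std::size_t bytes = count * sizeof(std::uint16_t);
    std::memcpy(buf + new_array, children, bytes);
    next_alloc = static_cast<std::uint16_t>(next_alloc + bytes);
    return new_array;
}
```

The parent node would then update its `children_offset` to the returned value. The cost is that every insert leaks the previous array, which is what makes the later defrag step necessary.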

Here is how I read from the trie:

We first try to find a match in the trie, starting from the root node. The find_match method is then called to check whether the current node is a match and, if not, whether we can go further. It does so by comparing the key to the stored value and, if there is a partial match, recursing into the next level.
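The per-node decision can be sketched as a three-way comparison. This is only an approximation of what find_match might do: it assumes each node stores a fragment of the key, and the `match_result` enum and `match_node` function are hypothetical names.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <cstring>

enum class match_result {
    exact,    // the node's fragment is exactly what is left of the key
    descend,  // the fragment is a proper prefix: continue in a child
    miss      // the fragment and the key diverge: the key is not in the trie
};

// Compares the key fragment stored in one node with the remaining lookup key.
match_result match_node(const std::uint8_t* node_key, std::size_t node_len,
                        const std::uint8_t* key, std::size_t key_len) {
    assert(node_key != nullptr && key != nullptr);
    if (key_len < node_len || std::memcmp(node_key, key, node_len) != 0) {
        return match_result::miss;
    }
    return key_len == node_len ? match_result::exact : match_result::descend;
}
```

On `descend`, the caller would skip `node_len` bytes of the key and use the next byte to pick which child to recurse into.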

A fun part happens inside the find_child method, where we do a sorted search over the children array to find the node with the matching starting byte.
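Because the children array is kept sorted, that search can be a binary search. Here is a sketch under the same assumptions as before: the array holds 16-bit offsets, and the first key byte of each child is stored at the child's own offset. The signature is a guess, and offset 0 is used as "not found" on the assumption that the trie header occupies it.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <cstring>

// Binary search over a sorted array of child offsets.
// Returns the offset of the child whose first key byte equals `wanted`,
// or 0 when there is no such child.
std::uint16_t find_child(const std::uint8_t* buf, std::uint16_t array_offset,
                         std::size_t count, std::uint8_t wanted) {
    assert(buf != nullptr);
    std::size_t lo = 0;
    std::size_t hi = count;  // search the half-open range [lo, hi)
    while (lo < hi) {
        const std::size_t mid = lo + (hi - lo) / 2;
        std::uint16_t child;
        std::memcpy(&child, buf + array_offset + mid * sizeof(std::uint16_t),
                    sizeof child);
        const std::uint8_t first = buf[child];  // assumed first key byte
        if (first == wanted) return child;
        if (first < wanted) {
            lo = mid + 1;
        } else {
            hi = mid;
        }
    }
    return 0;
}
```

With at most 256 children per node, this takes no more than 9 probes per level instead of a linear scan.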

The whole idea is that because I’m using constrained memory, I can make do with very little actual work to manage the memory. I’m just using and discarding it all the time. But I am keeping track of where I can next allocate memory, and how much of the buffer is in use. When I hit the memory limit, I’m going to defrag the trie. It looks like this:

There is quite a lot going on in there, but the basic idea is that we are going to copy the buffer to a temporary buffer, reset our own buffer (this all happens in the first 4 lines), and then we are going to traverse the data in the temp buffer and insert it into the real buffer directly. One of the things that is most important here is that we properly calculate the size of the children array for each node.
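The copy-and-rebuild approach can be sketched on a stripped-down node layout. This is not the defrag code from the post: the `node` struct, `kRoot`, `alloc` and `copy_node` are all hypothetical, keys and values are reduced to a single byte, and the header is only a 2-byte `next_alloc`. What it does show is the general shape: snapshot the buffer, reset it, then walk the snapshot and re-allocate each children array at exactly the size it needs.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <cstring>
#include <vector>

// Hypothetical, simplified node: 4 bytes, no padding.
struct node {
    std::uint16_t children_offset;  // offset of the array of child offsets
    std::uint8_t  children_count;
    std::uint8_t  key_byte;         // stand-in for the real key/value data
};

constexpr std::uint16_t kRoot = 2;  // root sits right after the 2-byte next_alloc

// Bump allocation inside dst; next_alloc lives in the first two bytes.
static std::uint16_t alloc(std::uint8_t* dst, std::uint16_t size) {
    std::uint16_t next;
    std::memcpy(&next, dst, sizeof next);
    const std::uint16_t offset = next;
    next = static_cast<std::uint16_t>(next + size);
    std::memcpy(dst, &next, sizeof next);
    return offset;
}

// Copies the node at src_off (in the snapshot) to dst_off (in the live
// buffer), then does the same for each child, depth first.
static void copy_node(const std::uint8_t* src, std::uint16_t src_off,
                      std::uint8_t* dst, std::uint16_t dst_off) {
    node n;
    std::memcpy(&n, src + src_off, sizeof n);
    const std::uint16_t old_children = n.children_offset;
    // The new array is sized for exactly children_count entries.
    n.children_offset = n.children_count
        ? alloc(dst, static_cast<std::uint16_t>(n.children_count * sizeof(std::uint16_t)))
        : 0;
    std::memcpy(dst + dst_off, &n, sizeof n);
    for (std::size_t i = 0; i < n.children_count; ++i) {
        std::uint16_t child_src;
        std::memcpy(&child_src, src + old_children + i * sizeof child_src, sizeof child_src);
        const std::uint16_t child_dst = alloc(dst, sizeof(node));
        std::memcpy(dst + n.children_offset + i * sizeof child_dst, &child_dst, sizeof child_dst);
        copy_node(src, child_src, dst, child_dst);
    }
}

void defrag(std::uint8_t* buf, std::size_t size) {
    assert(size >= kRoot + sizeof(node));
    const std::vector<std::uint8_t> snapshot(buf, buf + size);  // temporary copy
    std::memset(buf, 0, size);                                  // reset the live buffer
    const std::uint16_t next = kRoot + sizeof(node);            // root keeps its fixed place
    std::memcpy(buf, &next, sizeof next);
    copy_node(snapshot.data(), kRoot, buf, kRoot);
}
```

Since only reachable data is copied, the rebuilt buffer can never need more room than the original did, so the allocations during the rebuild cannot overflow.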

I could probably have done more here:

- Ensure that the data is aligned

- Merge nodes that have only a single child and no value of their own

And probably a lot of other stuff, but this has been a fun experiment.