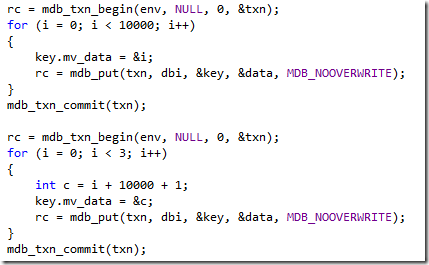

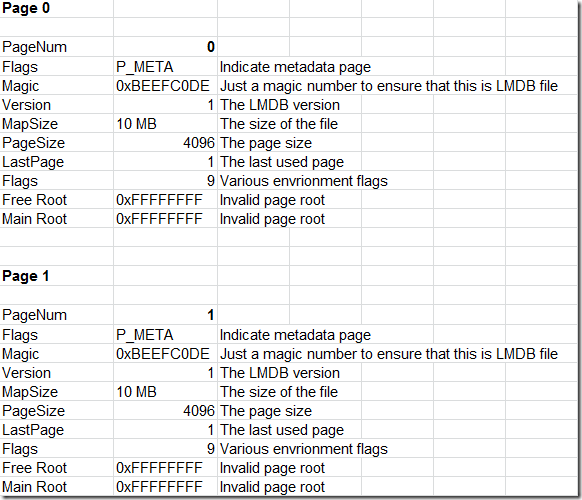

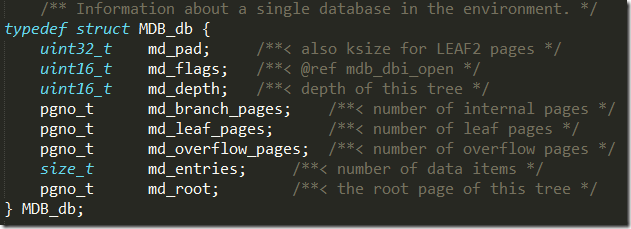

As mentioned, the data in LMDB is actually stored in pages. I am currently tracking through the process of adding a new item to the database. The first thing that happens is that we allocate a leaf page. I think that I’ll have to go over how pages are allocated now, which should explain a lot about how LMDB reuses disk space.

A new page is allocated by first finding the oldest transaction that is still active. This is how LMDB implements MVCC. Basically, any free page that is older than every active transaction is eligible for reuse. The first db (a better term would be btree, or maybe table) contains the free pages, and we search there first for an available page. If we can’t find one, we take a page from the end of the file instead. This happens in mdb_page_alloc.
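To make the reuse rule concrete, here is a minimal sketch of that decision. It is not LMDB's code: the types and the names free_entry, alloc_env, oldest_active and toy_page_alloc are all made up for illustration, and the free list is a flat array rather than a btree.

```c
#include <assert.h>
#include <stddef.h>

typedef unsigned long pgno_t;
typedef unsigned long txnid_t;

/* Hypothetical free-list record: one page, and the transaction that freed it. */
typedef struct {
    txnid_t freed_by;
    pgno_t  pgno;
    int     used;      /* set once the page has been handed out again */
} free_entry;

typedef struct {
    free_entry *free_list;
    size_t      free_count;
    pgno_t      next_pgno;  /* first page number past the end of the file */
} alloc_env;

/* Oldest snapshot still in use; falls back to `current` when nobody is reading. */
static txnid_t oldest_active(const txnid_t *readers, size_t n, txnid_t current)
{
    txnid_t oldest = current;
    for (size_t i = 0; i < n; i++)
        if (readers[i] < oldest)
            oldest = readers[i];
    return oldest;
}

/* Reuse a page freed before the oldest active transaction, else grow the file. */
static pgno_t toy_page_alloc(alloc_env *env, txnid_t oldest)
{
    assert(env != NULL);
    for (size_t i = 0; i < env->free_count; i++) {
        free_entry *e = &env->free_list[i];
        if (!e->used && e->freed_by < oldest) {
            e->used = 1;
            return e->pgno;
        }
    }
    return env->next_pgno++;  /* nothing reusable: take the end of the file */
}
```

With readers at transactions 8 and 12, a page freed by transaction 5 can be reused, but a page freed by transaction 9 cannot, because the reader at 8 might still be looking at it.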

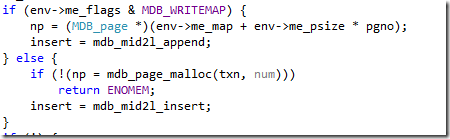

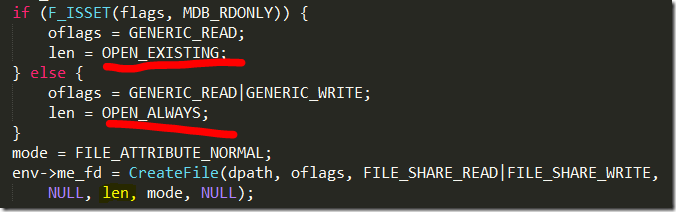

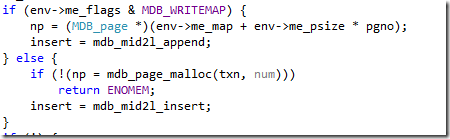

A very interesting aspect is that LMDB allows direct memory writes (with the additional risk of corrupting the db if you mess up the data). This is done in the following code:

If the options allow direct memory writes, you get a pointer into the mmap file. Otherwise, LMDB will allocate a page (or reuse one that has already been allocated).
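As a rough sketch of that branch (my own toy version, not the LMDB source; buf_env, page_buffer and the single spare buffer are assumptions made for the example):

```c
#include <assert.h>
#include <stddef.h>
#include <stdlib.h>

typedef struct {
    int            write_map;  /* hypothetical flag: hand out pointers into the map */
    unsigned char *map;        /* start of the memory-mapped file */
    size_t         page_size;
    void          *spare;      /* one previously allocated buffer kept for reuse */
} buf_env;

/* Returns the memory the caller should write page `pgno` into. */
static void *page_buffer(buf_env *env, unsigned long pgno)
{
    assert(env != NULL && env->page_size > 0);
    if (env->write_map)
        return env->map + pgno * env->page_size;  /* write straight into the file */
    if (env->spare != NULL) {                     /* reuse an earlier allocation */
        void *p = env->spare;
        env->spare = NULL;
        return p;
    }
    return malloc(env->page_size);                /* fresh buffer, caller frees it */
}
```

In the direct case a stray write lands in the database file itself, which is where the corruption risk comes from. In the other case the write goes to a private buffer that still has to be flushed to the file later.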

I am not really sure what is going on with insert yet. It is a function pointer (a delegate, in C# terms), and it selects which behavior will happen later on. I am just not sure what those two different functions do yet.

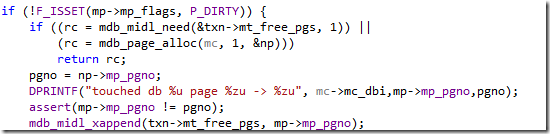

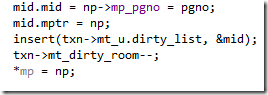

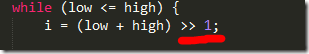

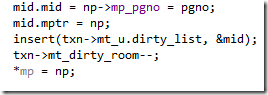

I think I got it, the key is here:

You can see that we use the insert method to add the mid variable to the dirty list. So if we give you a direct pointer, we append it to the list. But if we allocate a page for you, we need to add it in order.

mdb_mid2l_insert adds an item to the list at the appropriate location to keep the list sorted. I think this is done so that when we actually write the dirty pages to disk using file I/O, we do so in ascending file order, which should give us good seek times from the disk. A new page has 4,084 bytes available for it (12 bytes are taken by header data).
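The difference between the two behaviors is easier to see in a small standalone version. This is a sketch under my own assumptions, not the real mdb_mid2l_* code: dirty_entry, dirty_list, dirty_append, dirty_insert and choose_add are invented names, and the list is a fixed-size array.

```c
#include <assert.h>
#include <stddef.h>

#define DIRTY_MAX 64

typedef struct {
    unsigned long pgno;  /* page number, the sort key */
    void         *ptr;   /* where the page's bytes live */
} dirty_entry;

typedef struct {
    dirty_entry items[DIRTY_MAX];
    size_t      count;
} dirty_list;

/* Append: constant time, no ordering guarantee. */
static int dirty_append(dirty_list *dl, dirty_entry e)
{
    assert(dl != NULL);
    if (dl->count == DIRTY_MAX) return -1;
    dl->items[dl->count++] = e;
    return 0;
}

/* Insert: binary search for the slot, shift the tail, keep pgno ascending. */
static int dirty_insert(dirty_list *dl, dirty_entry e)
{
    assert(dl != NULL);
    if (dl->count == DIRTY_MAX) return -1;
    size_t lo = 0, hi = dl->count;
    while (lo < hi) {
        size_t mid = lo + (hi - lo) / 2;
        if (dl->items[mid].pgno < e.pgno) lo = mid + 1;
        else hi = mid;
    }
    if (lo < dl->count && dl->items[lo].pgno == e.pgno) return -2;  /* duplicate */
    for (size_t i = dl->count; i > lo; i--)
        dl->items[i] = dl->items[i - 1];
    dl->items[lo] = e;
    dl->count++;
    return 0;
}

/* The function pointer picks one behavior up front, as described above. */
typedef int (*dirty_add_fn)(dirty_list *, dirty_entry);

static dirty_add_fn choose_add(int direct_writes)
{
    return direct_writes ? dirty_append : dirty_insert;
}
```

Adding pages 30, 10 and 20 through dirty_insert leaves the list as 10, 20, 30, so a later flush can walk it front to back and write in ascending file order.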

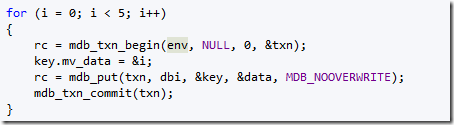

A database is actually created the first time data is written to it. The root page is allocated and recorded, and then the data is added to the page.

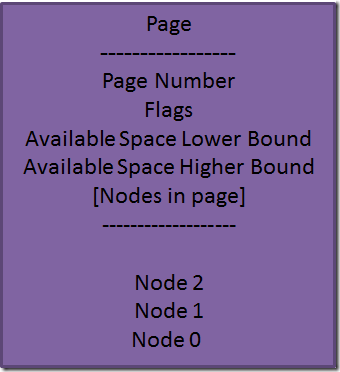

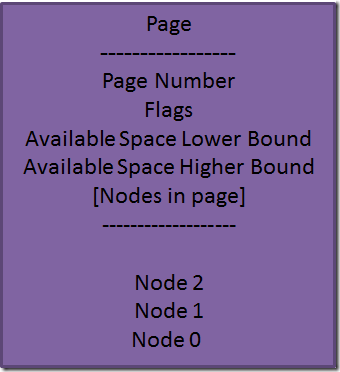

As you might recall, data inside a page is kept in a sorted list. But remember that the data is also stored on the page, and there is the whole notion of overflow pages, so I think that I am going to have to draw a picture to figure out what is going on.

This is more or less how it works, but I don’t think that I really get it. Note that there are two collections here. The first is the positions of the nodes in the page, and the second is the nodes themselves. The reason we have this complexity is that we need to maintain both of them efficiently, without too much copying. Therefore, the list of node positions sits at the beginning of the page and grows toward the end, while the actual nodes are written from the end of the page and grow toward the beginning. The page is full when you can’t fit anything between those two lists.
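Writing the layout out as code helped me more than the picture did. This is a toy model, not LMDB's page structure: slot_page, page_add and friends are invented for the example, the lower and upper bounds are kept outside the byte array for simplicity, and the 12 byte header is just the figure quoted earlier.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>
#include <string.h>

#define PAGE_SIZE   4096
#define HEADER_SIZE 12    /* header size quoted in the text */

typedef struct {
    uint16_t lower;             /* end of the offset list (grows toward the end) */
    uint16_t upper;             /* start of the node area (grows toward the start) */
    uint8_t  bytes[PAGE_SIZE];
} slot_page;

static void page_init(slot_page *p)
{
    memset(p->bytes, 0, PAGE_SIZE);
    p->lower = HEADER_SIZE;
    p->upper = PAGE_SIZE;
}

static size_t page_free(const slot_page *p)  { return (size_t)(p->upper - p->lower); }
static size_t page_count(const slot_page *p) { return (p->lower - HEADER_SIZE) / sizeof(uint16_t); }

/* Offset of the i-th node, in sorted order. */
static uint16_t slot_get(const slot_page *p, size_t i)
{
    uint16_t off;
    memcpy(&off, p->bytes + HEADER_SIZE + i * sizeof off, sizeof off);
    return off;
}

/* Store `node` at the end of the free space and record its offset at index `at`. */
static int page_add(slot_page *p, size_t at, const void *node, uint16_t len)
{
    size_t n = page_count(p);
    assert(at <= n);
    if (page_free(p) < (size_t)len + sizeof(uint16_t))
        return -1;                                   /* the two lists would collide */
    p->upper = (uint16_t)(p->upper - len);
    memcpy(p->bytes + p->upper, node, len);          /* node bytes never move again */
    uint8_t *slots = p->bytes + HEADER_SIZE;
    memmove(slots + (at + 1) * 2, slots + at * 2, (n - at) * 2);  /* shift 2-byte offsets only */
    memcpy(slots + at * 2, &p->upper, 2);
    p->lower = (uint16_t)(p->lower + 2);
    return 0;
}
```

The point of the split is in page_add: keeping things sorted only ever shifts the small offsets, while the node bytes stay where they were first written.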

A node, by the way, is the LMDB internal name for a key (and maybe data). But I think that I might have an error here, because I don’t see the code to actually add nodes to the page in a sorted fashion, so it might be doing a linear search over the nodes. I am now tracking through the code for adding a second value into a database, and I think that I have to be wrong about linear node search. The code I was looking at was invoked in the new db creation section, so it might be taking shortcuts that aren’t generally available.

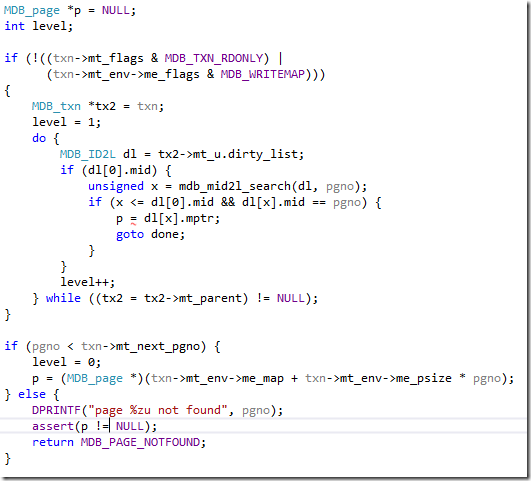

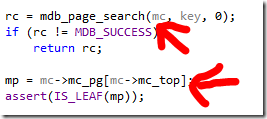

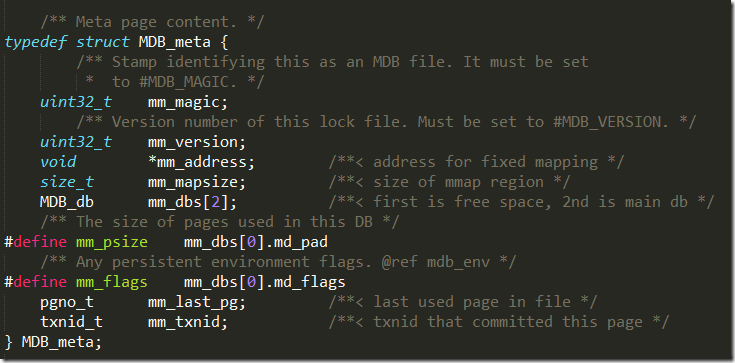

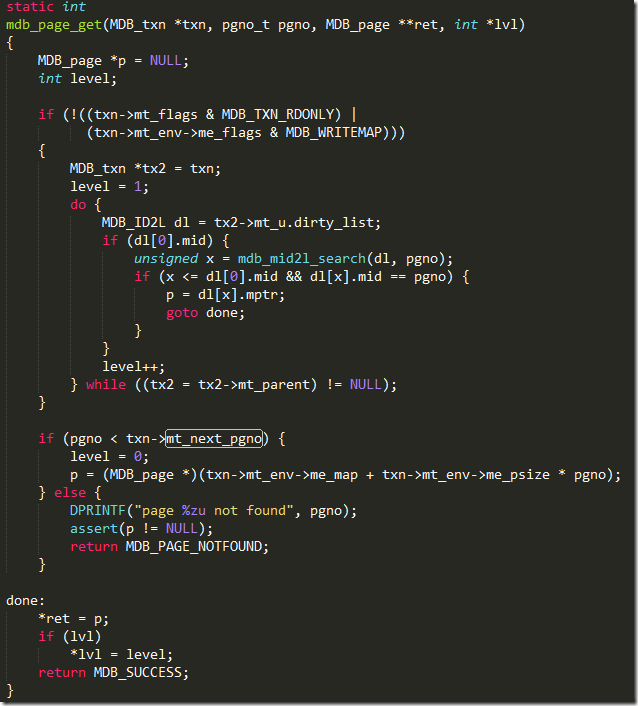

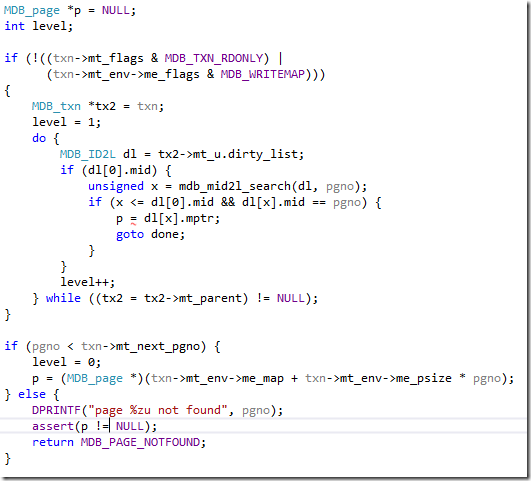

At any rate, the logic for adding an item goes like this… first we need to find the appropriate page. We do this by starting from the root and asking for the actual page, which gets us to mdb_page_get, where we have the following:

The really interesting part here is that each transaction has a dirty list of pages, so if you modified a page, you’ll get your own version, even before the data was committed. Otherwise, we will just hand you the current version. This is quite nice.
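Stripped of everything else, the lookup order looks something like the sketch below. The names (dirty_page, view_txn, toy_page_get) and the array of strings standing in for the mapped file are my own simplifications, not what mdb_page_get actually operates on.

```c
#include <assert.h>
#include <stddef.h>

typedef struct {
    unsigned long pgno;
    const char   *data;   /* this transaction's private copy of the page */
} dirty_page;

typedef struct {
    const dirty_page   *dirty;        /* sorted by pgno; empty for readers */
    size_t              dirty_count;
    const char *const  *committed;    /* stand-in for the shared, committed pages */
    size_t              page_count;
} view_txn;

/* Prefer the transaction's own modified copy, fall back to the committed page. */
static const char *toy_page_get(const view_txn *txn, unsigned long pgno)
{
    assert(txn != NULL);
    size_t lo = 0, hi = txn->dirty_count;
    while (lo < hi) {                          /* binary search the dirty list */
        size_t mid = lo + (hi - lo) / 2;
        if (txn->dirty[mid].pgno < pgno) lo = mid + 1;
        else hi = mid;
    }
    if (lo < txn->dirty_count && txn->dirty[lo].pgno == pgno)
        return txn->dirty[lo].data;
    if (pgno >= txn->page_count)
        return NULL;                           /* no such page */
    return txn->committed[pgno];
}
```

A writer that has modified page 1 gets its own copy back, while a reader asking for the same page number still sees the committed one.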

And then we get to the already familiar mdb_page_search_root function, and I can confirm that the nodes in a page are actually sorted, which only makes sense. I am not sure where that happens yet, but I have a feeling that I am going to see it in a bit.

Okay, I am going to go on a bit of a rant here, mostly against C, to be honest.

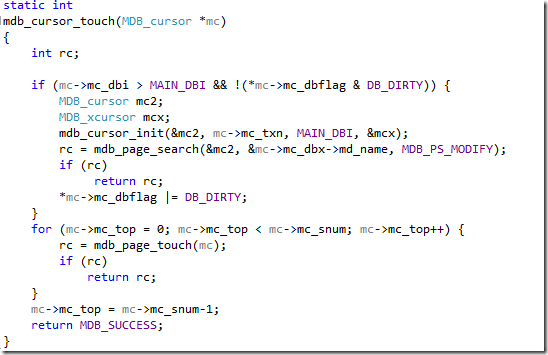

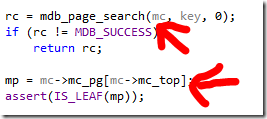

Take a look at this code. mdb_page_search does something, but what it does is mutate the state of the cursor (mc), and you are then going to access mc->mc_pg[mc->mc_top] to get the actual result you wanted. To make things more interesting, there is also mc->mc_ki, which is the index of the node inside the page. And it drove me crazy, because I just couldn’t see the association between those three values, especially because I am so not used to this type of code that I never even considered this as a possibility.

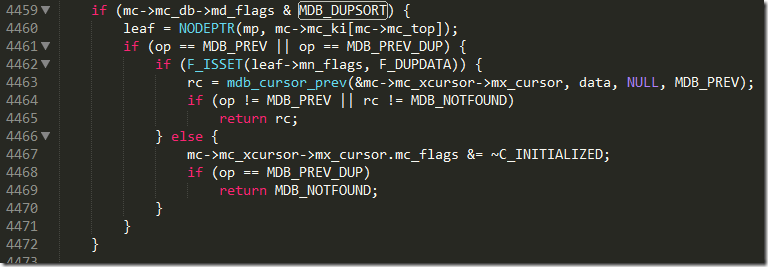

At any rate, now I know how it gets to insert things in the right order. When doing mdb_page_search, it calls mdb_node_search, which does the appropriate setup to tell the next call where it needs to actually do the insert to keep things sorted. I am currently in mdb_cursor_put, which is thrice cursed and really hard to follow. A 400+ line method with gotos galore.
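Here is the pattern boiled down to a toy, so the relationship between the three cursor values is visible. The struct fields mirror the mc_pg, mc_ki and mc_top names above, but everything else (key_page, toy_cursor, toy_node_search, integer keys) is an assumption made for illustration.

```c
#include <assert.h>
#include <stddef.h>

#define STACK_DEPTH 32

typedef struct {
    const int *keys;    /* sorted keys, standing in for the nodes of a page */
    size_t     nkeys;
} key_page;

typedef struct {
    const key_page *pg[STACK_DEPTH];  /* pages from the root down to the current one */
    size_t          ki[STACK_DEPTH];  /* index of the node within each of those pages */
    unsigned        top;              /* which stack entry is the current page */
} toy_cursor;

/* Returns 1 on an exact match. The real result is the side effect:
 * ki[top] is left pointing at the match, or at the slot where the key belongs. */
static int toy_node_search(toy_cursor *mc, int key)
{
    assert(mc != NULL && mc->pg[mc->top] != NULL);
    const key_page *pg = mc->pg[mc->top];
    size_t lo = 0, hi = pg->nkeys;
    while (lo < hi) {
        size_t mid = lo + (hi - lo) / 2;
        if (pg->keys[mid] < key) lo = mid + 1;
        else hi = mid;
    }
    mc->ki[mc->top] = lo;
    return lo < pg->nkeys && pg->keys[lo] == key;
}
```

Searching a page holding 10, 20, 40 for the key 30 returns 0 and leaves ki[top] at 2, which is exactly the index a following insert needs in order to keep the page sorted.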

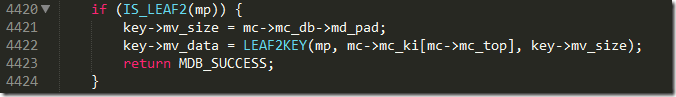

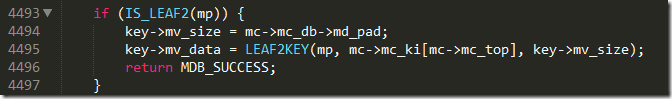

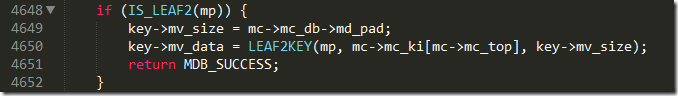

But I am in the section where we are actually going to be writing a new value (the new_sub: goto label, if you care), and the code is pretty straightforward from there.

Next up, I want to see how it handles a page split, but that is a subject for another post.
