In the process of working on RavenDB 4.0, we are going over our code and looking for flaws, both in the actual implementation and in the design of the API. The idea is to clear away the things that we know are bad in practice. That leads us to today’s topic: subscriptions.

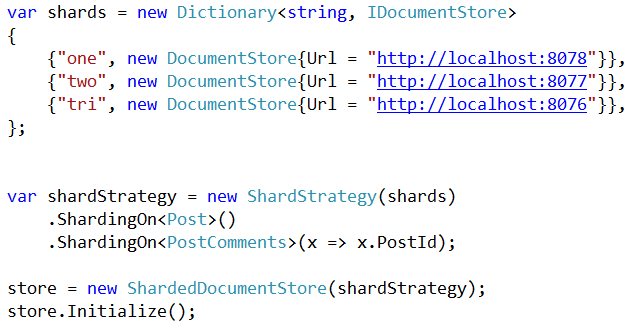

Subscriptions are a way to ask RavenDB to give us, in a reliable way, all documents that match certain criteria. Here is what the code looks like in RavenDB 3.5:

You typically write this in some form of background processing / job style. The key aspect here is that RavenDB is responsible for sending the data to the subscription, and making sure that you will not lose any updates. So the code is resilient to client errors, connection errors, database restarts, etc.

However, the topic today isn’t actually subscriptions, it is their API. In practice, we noticed several deficiencies in the API above. To start with, the subscription actually started in an async manner, so opening the subscription and subscribing to it might actually be racy (it is possible to subscribe and start getting documents from the server before you attach your delegate to handle those documents). We had to write a bit of code to handle that scenario, and it was complex in the presence of adding / removing subscriptions when the subscription was live.

The other problem was that subscriptions are reliable. This means that if there is an error, the subscription will handle it. A disconnect from the server will automatically reconnect, and as far as the caller is concerned, nothing has really changed. It isn’t aware of any network errors or recovery that the subscription is doing.

Which is all well and good, until you realize that your connection string is wrong, and the subscription is going to retry forever, trying to connect to the wrong location. We have a way to report errors to the calling code, but in most cases, the caller doesn’t care, because the subscription is going to handle it anyway. So we have a problem. How do we balance the need to handle errors and recover internally with the need to let the caller know about our state?

We currently have the OnError() method we’ll call on the caller to let it know about any errors that we have, but that is not really helping it. The caller doesn’t have a good way to know if we can recover or not, and asking the caller to implement an error recovery policy is something that we don’t really want to do. This is complex and hard to do, and not something that the client should do.

What we ended up with is the following. First, we changed the API so you can’t just add / remove observers to a subscription. You create a new subscription, you attach all the observers that you want, and then you explicitly demarcate the stage in which the subscription starts. Having such an explicit stage frees us from the concurrency and race conditions that we previously had to handle. But it also gives us a chance to actually report something explicit to the calling code.
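To make the explicit start stage concrete, here is a minimal sketch in Python. It is a toy model and not the RavenDB client API: the `Subscription` class, its `subscribe` and `start` methods, and the in-memory list standing in for the server are all assumptions made for illustration. The point it shows is that once observers can only be attached before the start, there is no window in which documents arrive with nobody listening.

```python
import asyncio


class Subscription:
    """Toy model (hypothetical): observers may only be attached before start()."""

    def __init__(self, source):
        self._source = source      # any iterable of documents, a stand-in for the server
        self._observers = []
        self._started = False

    def subscribe(self, observer):
        if self._started:
            # Rejecting late observers removes the attach-while-live race entirely.
            raise RuntimeError("cannot attach observers after start()")
        self._observers.append(observer)

    async def start(self):
        self._started = True
        for doc in self._source:
            for observer in self._observers:
                observer(doc)
            await asyncio.sleep(0)  # yield control, as a real network read would


async def demo():
    received = []
    sub = Subscription(["orders/1", "orders/2"])
    sub.subscribe(received.append)  # attach everything first...
    await sub.start()               # ...then explicitly start
    try:
        sub.subscribe(print)        # too late: the subscription is live
        late_attach_rejected = False
    except RuntimeError:
        late_attach_rejected = True
    return received, late_attach_rejected
```

Running `asyncio.run(demo())` delivers both documents to the observer attached before the start, and the late `subscribe` call is refused rather than silently racing with delivery.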

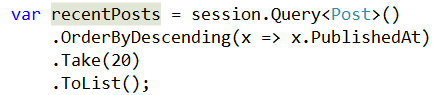

Let’s look at code, then discuss it:

The idea is that if you wait on the task returned from StartAsync, which is very natural to do, you can tell whether the first connection attempt was successful or not. Then you can make a determination on what to do next. If there is an error, you can dispose the subscription and report it upward, or you can let it recover automatically. This still doesn’t cover some scenarios, unfortunately. We also report all errors to the subscribers, which can make their own determination there.
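The behavior described above can be modeled roughly as follows. This is a Python sketch under stated assumptions, not the actual client code: `Subscription`, `start`, `dispose`, and the `connect` coroutine passed in are hypothetical names. The awaitable returned by `start` reflects only the first connection attempt, while the background worker keeps retrying until the caller disposes the subscription.

```python
import asyncio


class Subscription:
    """Toy model (hypothetical): start() reports the first connection attempt."""

    def __init__(self, connect):
        self._connect = connect        # assumed coroutine function that opens the connection
        self._first_attempt = None
        self._worker = None
        self.attempts = 0

    async def start(self):
        self._first_attempt = asyncio.get_running_loop().create_future()
        self._worker = asyncio.create_task(self._run())
        await self._first_attempt      # raises if the first attempt failed

    async def _run(self):
        while True:
            self.attempts += 1
            try:
                await self._connect()
            except ConnectionError as error:
                if not self._first_attempt.done():
                    self._first_attempt.set_exception(error)
                await asyncio.sleep(0.01)  # keep retrying in the background
                continue
            if not self._first_attempt.done():
                self._first_attempt.set_result(None)
            return                     # a real client would now stream documents

    async def dispose(self):
        self._worker.cancel()
        await asyncio.gather(self._worker, return_exceptions=True)


async def demo():
    async def bad_connect():
        raise ConnectionError("wrong server URL")

    sub = Subscription(bad_connect)
    try:
        await sub.start()
        outcome = "connected"
    except ConnectionError:
        outcome = "first connection failed"
        await sub.dispose()            # or skip this and let it keep retrying
    return outcome
```

The caller gets one clear decision point right after the start: give up and report the error upward, or leave the subscription alone and let it recover.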

The problem is that there are some errors that we just can’t recover from. If a user’s password has changed, eventually we’ll recycle the connection, and get an unauthorized error. There is nothing that the subscription can do to fix that, but at the same time, there is very little chance for it to know that (a bad password is easy, but a firewall rule blocking access is very hard to distinguish from the db being down, and in either case there is nothing that the subscription can do).

We are thinking about adding some sort of limit, something like: if we retried a certain number of times, or for a certain duration, and couldn’t recover, we’ll report it through an event / method that must be registered (otherwise we’ll just throw it upward and maybe crash the process). The idea is that in this case, we need someone to pay attention, and an unhandled exception (if you didn’t register to catch it) would be appropriate.
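One way to picture that proposal is the following Python sketch. Everything in it is an assumption for the sake of discussion, since the feature is not implemented: `connect_with_limit`, `SubscriptionGaveUp`, `on_give_up`, and the default limits are made-up names and values. It retries until either an attempt count or a time budget runs out, then hands the failure to a registered handler, and raises if nobody registered one.

```python
import time


class SubscriptionGaveUp(Exception):
    """Hypothetical error: the subscription exhausted its retry budget."""


def connect_with_limit(connect, max_attempts=5, max_seconds=30.0,
                       on_give_up=None, delay=0.0):
    deadline = time.monotonic() + max_seconds
    last_error = None
    attempts = 0
    while attempts < max_attempts and time.monotonic() < deadline:
        attempts += 1
        try:
            return connect()
        except ConnectionError as error:
            last_error = error
            time.sleep(delay)          # a real client would likely back off here
    failure = SubscriptionGaveUp(f"no connection after {attempts} attempts")
    failure.__cause__ = last_error     # keep the underlying error for diagnostics
    if on_give_up is None:
        # Nobody registered a handler, so fail loudly instead of retrying forever.
        raise failure
    on_give_up(failure)
    return None


def always_fail():
    # Stand-in for a connection that can never succeed (e.g. bad credentials).
    raise ConnectionError("unauthorized")


# With a handler registered, the failure is reported to it.
reported = []
connect_with_limit(always_fail, max_attempts=3, on_give_up=reported.append)

# Without a handler, the failure propagates to the caller.
try:
    connect_with_limit(always_fail, max_attempts=3)
    raised = False
except SubscriptionGaveUp:
    raised = True
```

The design choice here is that silence is not an option: either someone opted in to handle the failure, or the process hears about it as an exception.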

I would like to get some feedback on the idea, before going ahead and implementing it.