I run across this post by Damien Katz, and was immediately intrigued. That has several reasons. The last time I did a deep review of Damien’s code was about a decade ago, when I went over the CouchDB source code, in an attempt to learn Erlang. What end up happening was that I wrote RavenDB.

Note: This is pretty much my thoughts as I’m going through the code, it isn’t meant to be a guide or anything except a record of what went through my head as I read the code.

Another reason is that this is obviously a subject matter that is near & dear to my heart. Searching is something that we do all the time, and I would dearly love to read some code that isn’t Lucene to do so. Finally, the code is written in Rust, which I have some passing interest at, so I consider this a win all around. You can find the project site here and the code is here. I’m reviewing 48d808375e541eca383826ea928272976bce064d.

Some things that are obvious from the get go, just by skimming through the docs. This is a small project, less than 10,000 lines of code. That is great, since it means that I can review it in one sitting. I also don’t really care that much for the parser and format that they do, I’m mostly interested in how they are storing and searching the data. The storage is done via RocksDB, which is interesting, because it impose certain limitations on the solution (in particular, single key space) so it is interesting how the data is stored. Most importantly, I really want to see how this is handling updates, which is what I find to be the hardest problem for search impl.

As usual, I’m going to start reading code in file name order, without any relation to the current structure of the code, and jump around from there.

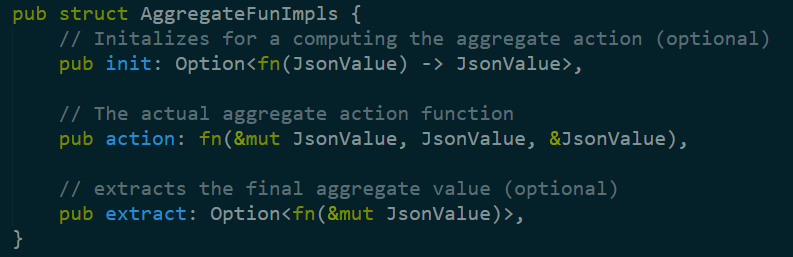

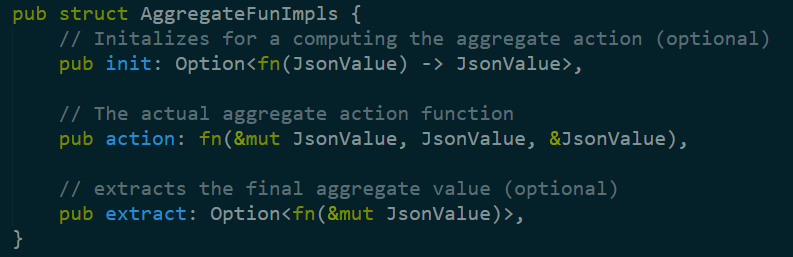

In aggregates.rs, we find this piece of code, which allows to run an aggregation. The init / action / extract are the set of operations that happen in the aggregation process.

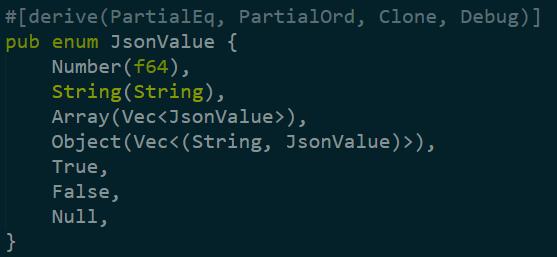

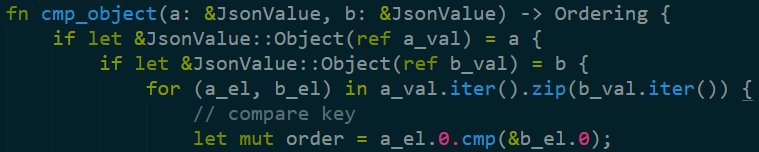

Right now I don’t know what JsonValue is, but it is interesting to read just from the signatures. The action function can get a reference to a mutable instance, an immutable instance and a reference to an immutable instance. I’m assuming that the mutable instance is the one that is being modified to hold the aggregated value.

The aggregation itself is implemented by defining an enum that has a get_fun_impls method that initialize the AggregateFunImpls based on the specific aggregation value required.

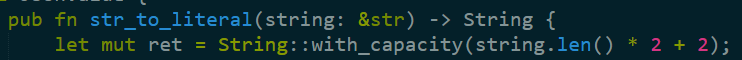

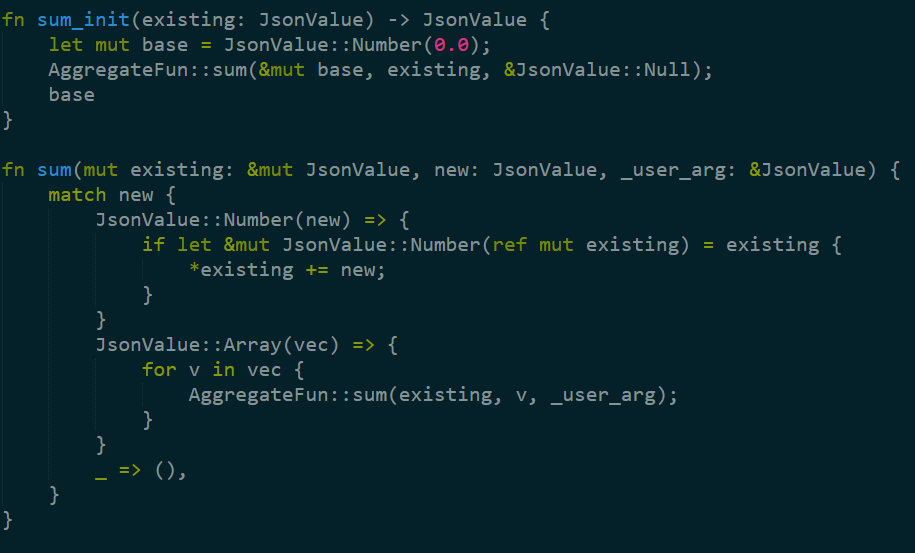

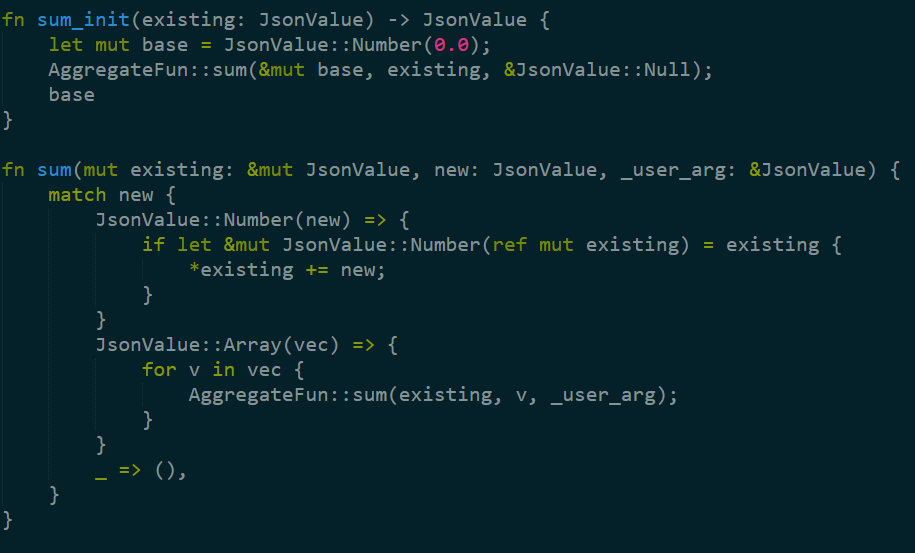

Sum is defined as two functions, sum_init and sum, without any final step. The actual implementation is:

That is fun to read, because it pretty simple, although the syntax in the if let line is really confusing to me (first time in the code, non Rust dev). Basically, it says that we’ll take the JsonValue instance, convert it from a JsonValue to a number instance, and then set it to the same variable name as the original one.

If I was writing it in C it would probably look like:

JsonValue* existing = …;

int* existing = extract_number_(existing);

existing += new;

That is leaving aside the fact that the “new” that is used here and the “new’ in the function arguments are not the same (well, they are conceptually, but not the same type, etc). I find this confusing, but I know that this is fairly common in Rust code.

The rest of the file is pretty similar constructs for other aggregation operations, and the next is error.rs, which is just boilerplate code that Rust requires from anything. The next file is filters.rs, which seems really interesting, but it doesn’t make sense at the current stage of the code, because it refers to a lot of other things that I haven’t checked yet, so moving to the next one, index.rs. Hopefully that will start the juicy bits.

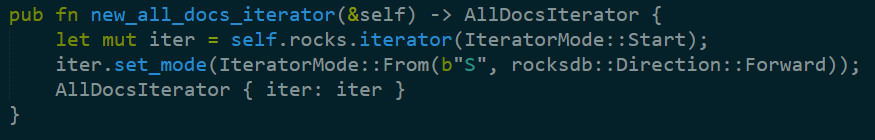

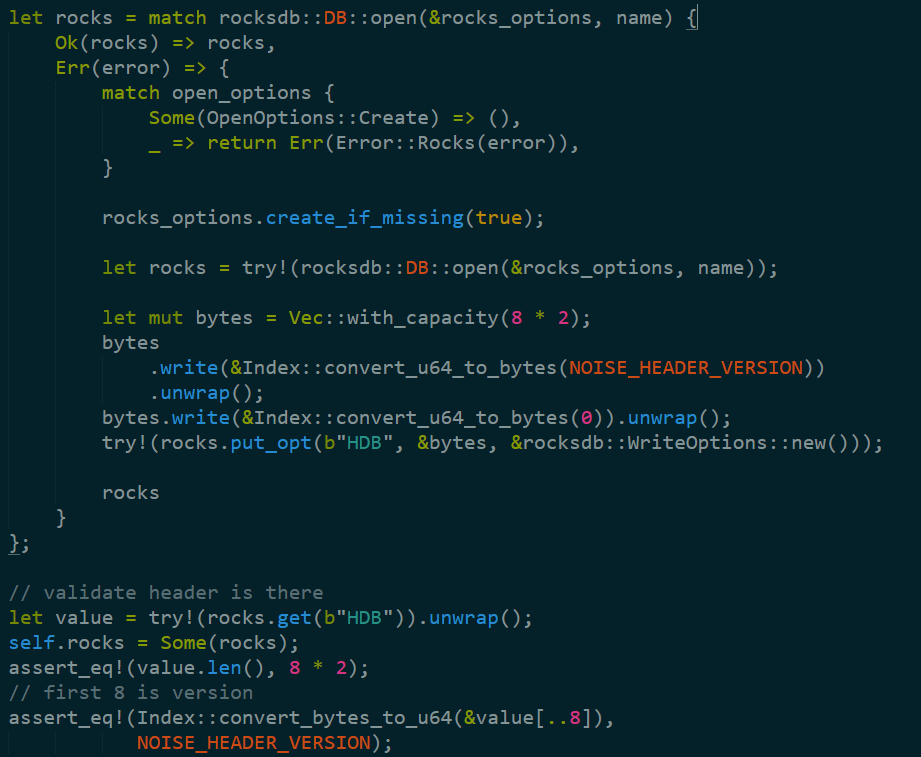

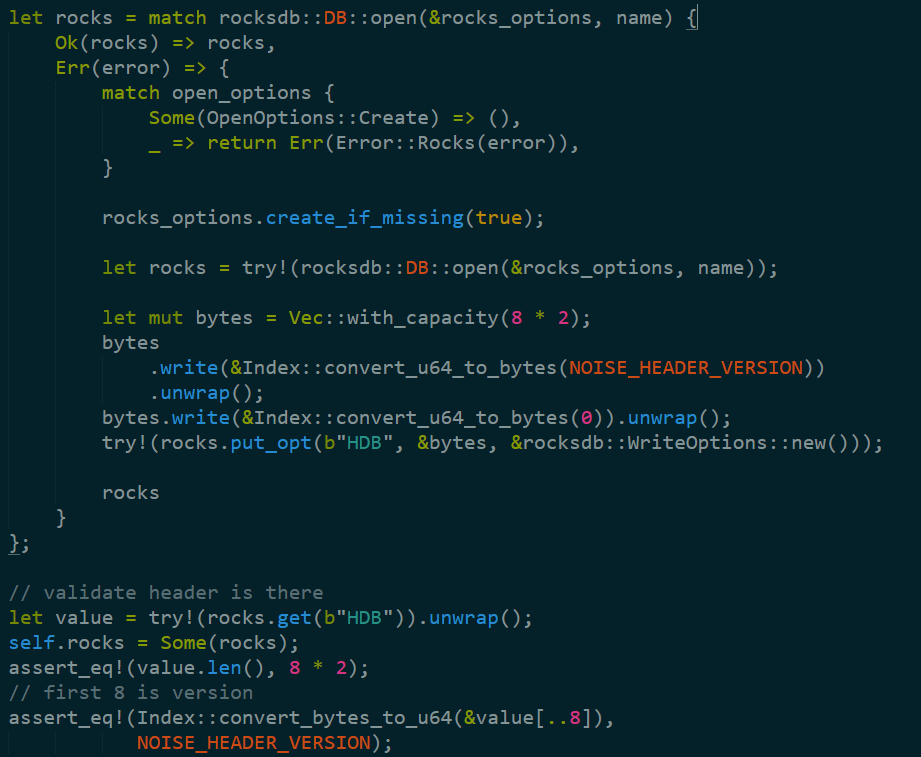

Indeed, the interesting bits start when we call open, where we open up a rocksdb instance and start playing around. There is some interesting error handling here:

In particular, note that if the error failed because the database didn’t exists, it is created, and a header version is added. I wholeheartedly approve, because such early planning will pay dividends down the road. It is important to note that assert statements in Rust are going to be there for release. Only debug_assert will be removed in release builds.

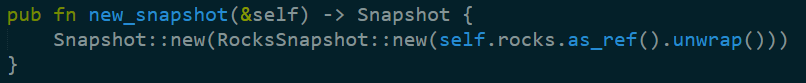

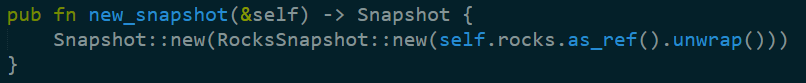

The index has the ability to create a snapshot, based on the RocksDB Snapshots. I wrote a blog post on how that works on LevelDB (which RocksDB is based on).

I do wonder about the unwrap call here. If I understand correctly, this would cause a panic if I’m access an instance that isn’t open. That might be bad if you have a query hitting the server during shutdown, I’m guessing. I assume that this is oversight because it make the code easier to consume.

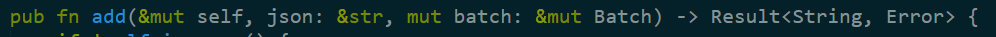

The add method there is where stuff really start to happen.

This is quite interesting, because you pass it a json string, and a batch (a Noise concept that basically wraps a RocksDB batch).

Actually, thinking about this, there is something that might be trippy here. RocksDB allow concurrent batches, but while it has support for optimistic concurrency, I’m guessing (at this point it is a guess) that this isn’t used by Noise, and that can lead to severe problems if you are trying to add to the index from concurrent threads. This is important because parallel indexing is pretty much the first optimization step that anyone will try, and Lucene calls it out explicitly as a best practice.

The json is parsed by a class called shredder, I’m assuming that this is the JSON parser used by Noise.

The json is parsed by a class called shredder, I’m assuming that this is the JSON parser used by Noise.

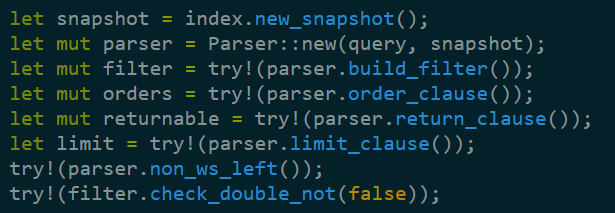

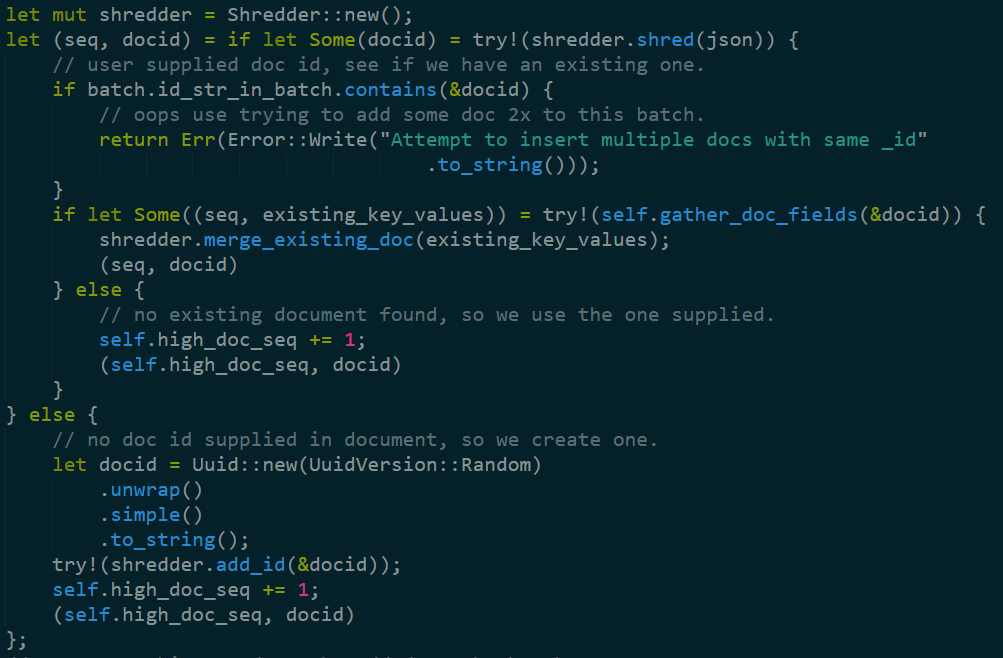

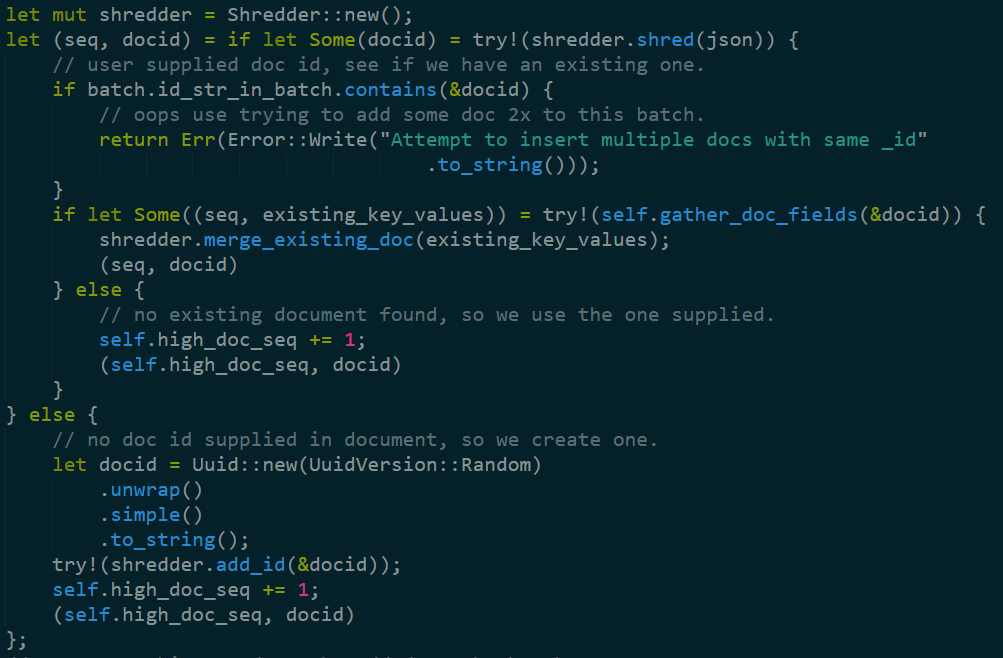

The actual behavior is quite interesting.

We create a new shredder, then set it on the JSON string. The “let … if let” syntax gave me a pause for a bit. It is elegant, in a crazy sort of way.

But the really interesting bit here is that this code says quite a lot about the way Noise works. First, we now know that Noise has stable document ids, since we can see it being used when we check if the document is already in the index. That means that there is update support, which is pretty cool. Although I do wonder why the values are merged back in. For deletion, or are they just creating a large piece?

If there is no id, Noise uses a guid. I would usually call out that this might be a problem (because down the line, given enough work, it will create a lot of compaction work around the files), but I’m suspecting that not specifying an id is going to be a pretty rare occurrence, so that isn’t important.

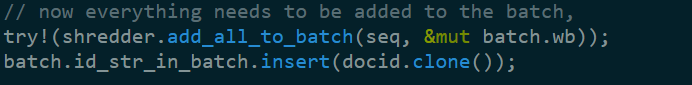

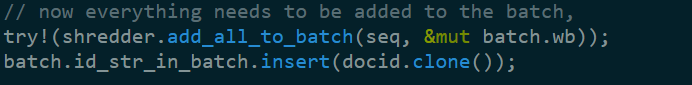

Finally, we add it to the batch:

That means tat the shredder is probably a very important piece in the system. I’ll continue to read the index code for now and see what I can figure out, before jumping to that code.

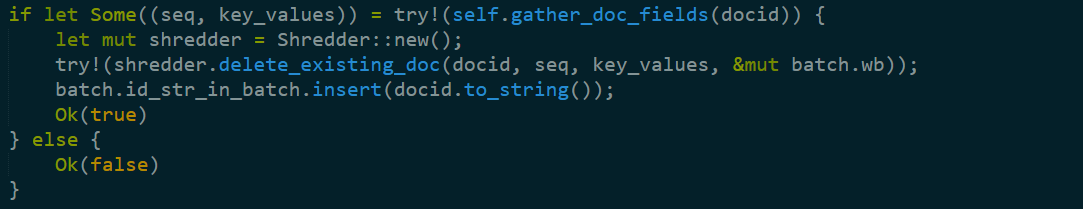

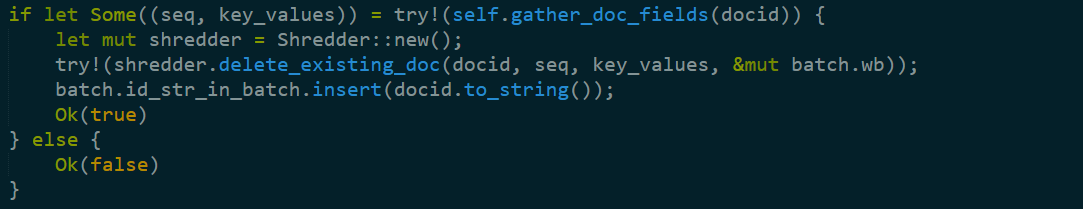

Next, we have delete, and the key part of that method is:

So we have delete support, and comparing that to the update process is very interesting. They are different, which means that updates aren’t just delete/insert. That is interesting.

Next we have gather_doc_fields, which gets a string document id. What happens from there is interesting. Let us assume that our document id is “users/1”.

Noise searches the RocksDB for a value named “Iusers/1” <- Note that I prefix, I assume to stand for id. That happens in fetch_seq. If there is such a key, its value would be a string representation of int64, which is parsed and returned. That seq is the internal sequence number, I’m assuming. And I’m guessing it is used for most operations. Let us assume that the seq number returned in 1234.

gather_doc_fields then do another RocksDB search for “V1234#”. This is done via a KeyBuilder, which Noise is using to generate keys based on the depth of the search, I’m guessing. Haven’t really looked into that yet.

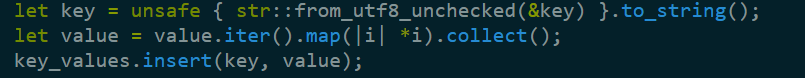

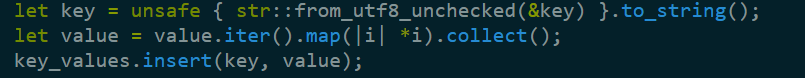

The code to collect the keys for a document sent me into a bit of a wild goose chase, in the sense that I couldn’t figure out what was going on here:

RocksDB is a key/value database, it doesn’t support multiple values per key. Took me a while to figure out that this is a (I’m guessing inefficient) way to to clone the value from the database owned memory to memory controlled by the Noise.

The next method, flush, just update the internal data (version and last sequence number) and submit the write batch. That concludes it as far as I’m concerned, unless something really strange is going on, indexing in Noise must be done in a single threaded manner. Note that there isn’t anything wrong with that (this is what we ended up doing in RavenDB 4.0), but it is something that needs to be stated out load, because it is contrary to the expected practice. Then again, I haven’t seen anything on indexing speed or any sign of benchmarking yet, maybe the team didn’t get there at this point.

The last thing on this file that is of interest are the RocksDB management functions. The compaction_filter – Will keep any key that starts with K or C, but if the value doesn’t start with them, then the value is interpreted as int32, and if it is 0, it is discarded. This is interesting, because HDB (the header for the db) key is not one of the protected values, and so the reason it isn’t thrown out is that Noise is using little endian value here, and accidently the int64 value (1 in this case) is interpreted as non zero int32. I’m assuming that the same holds true for the rest of values, but it seems more like accidental behavior. There is also the fact that we already know that there are some values (sequence numbers) whose value is a stringified int64. That also will pass the “not zero int32”, but even if it works, it looks wrong.

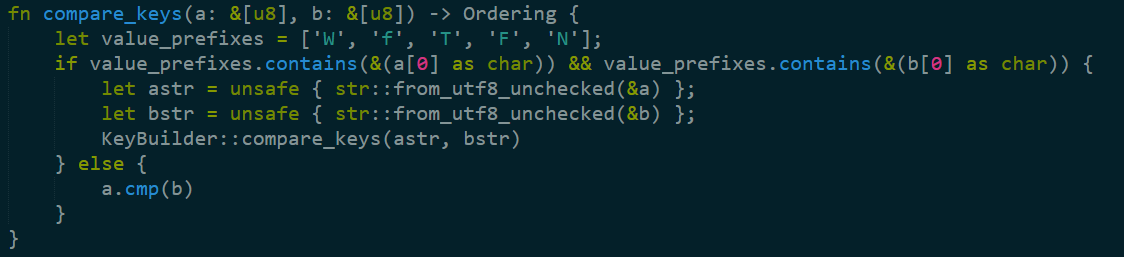

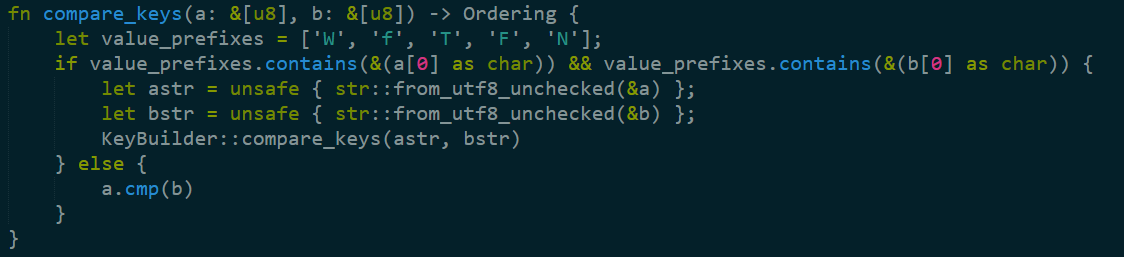

So basically, we have a few more key prefixes, which have a certain meaning, yet unknown. But if they are those values, they should be compared using the KeyBuilder comparison, otherwise, just compare them as is. I don’t like the single letter prefixes. RocksDB uses prefix compression, so I don’t think that using more readable prefix would cost anything.

Finally, we have the sum_merge, which is called by RocksDB when you make a Merge call. This is an interesting approach to handling updates, and what this basically does in the case of Noise is that it allow to merge multiple calls to the same value and return the sum total of them. I’m assuming that the value used here is the ref count or some such. Given the interplay between sum_merge and compaction_filter.

That is it for the index.rs file. There are actually tests there for the index, which is interesting. I don’t like having tests in the same file, but I think it is common in Rust.

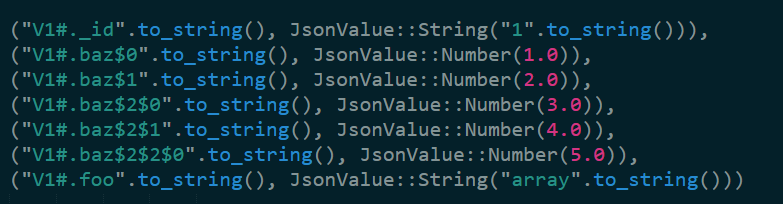

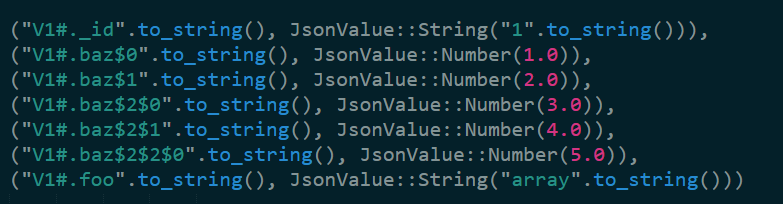

The only thing of note there is that we find some interesting information about the format of the keys:

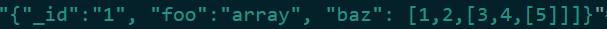

The document:

Is translated into the following:

That is the job of the KeyBuilder, I think, but I’ll leave digging into that to another post.

After the last three parts, I think that I have a good high level understanding of what is going on in the Noise codebase, as a reminder, I’m reviewing 48d808375e541eca383826ea928272976bce064d.

After the last three parts, I think that I have a good high level understanding of what is going on in the Noise codebase, as a reminder, I’m reviewing 48d808375e541eca383826ea928272976bce064d.