When we started writing RavenDB, the idea of a collection was just “a way to say that those documents are roughly similar”. We were deep in the schemaless nature of the system, and it made very little sense at the time to split different documents. By having all documents in the same location (and by that, I mean that they were all effectively stored in the same physical format, and the only way to tell the difference between a User document and an Order document was by reading their metadata), we were able to do some really cool things. Indexes could operate over multiple collections easily, replication was simple, exporting documents was a very natural operation, etc.

Over time, we learned by experience that most of the time, documents in separate collections are truly separate. They are processed differently, behave differently, and users expect to be able to operate on them differently. This is mostly visible when users have a large database and try to define an index on a small collection, and are surprised when it can take a while to index. The fact that we need to go over all the documents (because we can’t tell them apart before we read them) is not something that is in the mental model for most users.

We have worked around most of that by utilizing our own indexing structure. The Raven/DocumentsByEntityName index is used to do quite a lot. For example, we are often able to optimize the “small collection, new index” scenario using Raven/DocumentsByEntityName, deletion and patching of collections through the studio use it, etc.

In RavenDB 4.0 we decided to see what it would take to properly segregate collections. As it turned out, this is actually quite hard to do, because of the etag property.

The etag property of RavenDB goes basically like this: Etags are numbers (128 bits in RavenDB up to 3.x, 64 bits in RavenDB 4.0 and onward) that are always increasing, and each document change will result in a higher etag being generated. You can ask RavenDB to give you all documents since a particular etag, and by continually doing this, you’ll get all documents in the database, including all updates.
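To make the etag property concrete, here is a minimal sketch in Python. It is a toy model and not RavenDB's API: the `EtagLog` class, its `put` and `documents_after` methods, and the `read_everything` helper are all names made up for this illustration.

```python
class EtagLog:
    """Toy model of a store where every write gets a new, higher etag."""

    def __init__(self):
        self._last_etag = 0
        self._etag_of = {}  # document key -> etag of its latest version
        self._docs = {}     # document key -> latest document body

    def put(self, key, doc):
        # Every change, including an update to an existing key, gets a fresh etag.
        self._last_etag += 1
        self._etag_of[key] = self._last_etag
        self._docs[key] = doc
        return self._last_etag

    def documents_after(self, etag, take=2):
        """Return up to `take` (etag, key, doc) tuples whose etag is above `etag`."""
        newer = sorted((e, k) for k, e in self._etag_of.items() if e > etag)
        return [(e, k, self._docs[k]) for e, k in newer[:take]]


def read_everything(store):
    """Page through the store by repeatedly asking for 'everything after etag X'."""
    last_seen, seen = 0, []
    while True:
        batch = store.documents_after(last_seen)
        if not batch:
            return seen
        seen.extend(batch)
        last_seen = batch[-1][0]  # resume from the highest etag seen so far
```

Because an update moves the document to a new, higher etag, a reader that remembers the last etag it saw will pick up every change just by asking again from that point.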

This property is the key for quite a lot of stuff internally. Replication, indexing, subscriptions, exports, the works.

But putting documents in separate physical locations means that we won’t have an easy way to scan through all of them. In RavenDB 3.0, we effectively have an index of [Etag, Document], and the process of getting all documents after a particular etag is extremely simple and cheap. But if we segregate collections, we’ll need to merge the information from multiple locations, which can be non-trivial, and has a complexity of O(N log N).
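As a rough illustration of what that merge would look like, here is a Python sketch. It assumes each collection keeps its own list of `(etag, key)` entries already sorted by etag; the `documents_after` function and the sample data are hypothetical and are not RavenDB code.

```python
import heapq


def documents_after(etag, collections):
    """Merge per-collection etag streams into one stream ordered by etag.

    `collections` maps a collection name to a list of (etag, key) tuples,
    each list already sorted by etag.
    """
    # Drop the entries each collection has at or below the requested etag.
    tails = [
        (entry for entry in entries if entry[0] > etag)
        for entries in collections.values()
    ]
    # heapq.merge lazily interleaves the sorted streams, keeping one
    # pending entry per collection in a heap.
    return list(heapq.merge(*tails, key=lambda entry: entry[0]))


collections = {
    "Users": [(1, "users/1"), (4, "users/2")],
    "Orders": [(2, "orders/1"), (3, "orders/2"), (5, "orders/3")],
}
changes = documents_after(2, collections)
```

Every consumer that wants the global ordering (replication, export) would have to pay for this merge on every read, which is the cost a single [Etag, Document] index avoids.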

There is also the issue of the document keys namespace, which is global (so you can’t have a User with the document key “users/1” and an Order with the document key “users/1”).

Remember the previous post about choosing Voron as our storage engine for RavenDB 4.0? This is one of the reasons why. Because we control the storage layer, we were able to come up with an elegant solution to the problem.

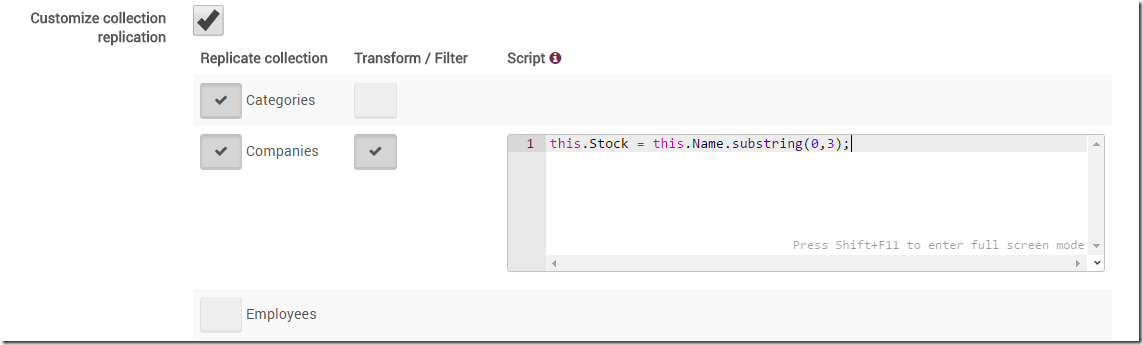

Each of our collections is going to be stored in a separate physical structure, with its own location on disk, its own indexes, etc. But at the same time, all of those separate collections are also going to share a pair of indexes (document key and document etag). In this manner, we effectively index the document etag twice. First, it is indexed in the global index, alongside all the other documents in the database, regardless of which collection they are in. This index will be used for replication, exports, etc. Then it is indexed again in a per collection index, which is what we’ll use for indexing, patch by collection, etc. In the same manner, the document key index is going to allow us to look up documents by their key without needing to know which collection they are in.

Remember, those indexes actually store an id that gives us O(1) access to the data, which means that processing them is going to be incredibly cheap.
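The layout described above can be sketched in a few lines of Python. This is only a model of the idea, not Voron's actual structures: the `SegregatedStore` class and all of its method names are invented for the example, plain dictionaries stand in for the storage indexes, and rejecting a key that already exists in another collection is a choice made for this sketch.

```python
class SegregatedStore:
    """Documents live behind a storage id; three indexes point at that id."""

    def __init__(self):
        self._last_etag = 0
        self._next_id = 0
        self._data = {}           # storage id -> (collection, key, etag, doc)
        self._by_key = {}         # global index: document key -> storage id
        self._by_etag = {}        # global index: etag -> storage id
        self._by_collection = {}  # per collection: {etag: storage id}

    def put(self, collection, key, doc):
        old_id = self._by_key.get(key)
        if old_id is not None:
            old_collection, _, old_etag, _ = self._data[old_id]
            if old_collection != collection:
                # The key namespace is global (assumed behavior: reject).
                raise ValueError(f"{key} already exists in {old_collection}")
            # Remove the old version from the data and both etag indexes.
            del self._data[old_id]
            del self._by_etag[old_etag]
            del self._by_collection[collection][old_etag]
        self._last_etag += 1
        self._next_id += 1
        etag, storage_id = self._last_etag, self._next_id
        self._data[storage_id] = (collection, key, etag, doc)
        self._by_key[key] = storage_id
        self._by_etag[etag] = storage_id  # first etag index: global
        # Second etag index: only this collection's documents.
        self._by_collection.setdefault(collection, {})[etag] = storage_id
        return etag

    def load(self, key):
        """Look up a document by key without knowing its collection."""
        return self._data[self._by_key[key]][3]

    def _read(self, etag_index, after):
        # Dicts keep insertion order and etags only grow, so this walks in
        # etag order. A real engine would seek in a sorted tree instead.
        for etag, storage_id in etag_index.items():
            if etag > after:
                _, key, _, doc = self._data[storage_id]
                yield etag, key, doc

    def all_after(self, etag):
        """Global view, as replication or export would want it."""
        return list(self._read(self._by_etag, etag))

    def collection_after(self, collection, etag):
        """Per collection view, as indexing or patching would want it."""
        return list(self._read(self._by_collection.get(collection, {}), etag))
```

Note that `collection_after` never touches documents from other collections, while `all_after` still sees a single etag ordering across the whole database without any merging.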

This has a bunch of additional advantages. To start with, it means that we can drop the Raven/DocumentsByEntityName index. It is no longer required, since all its functions are now handled by those internal storage indexes.

Loaded terminology: “indexes” in RavenDB can refer either to the indexes that users define and are familiar with (such as the good ol’ Raven/DocumentsByEntityName) or to storage indexes, which are internal structures inside the RavenDB engine and aren’t exposed externally.

Moving to storage indexes also has the nice benefit of making sure that all collection data is now transactional and is updated as part of the write transaction.

So we had to implement a relatively strange internal structure to support this segregation. But aside from “collections are physically separated from one another”, what does this actually give us?

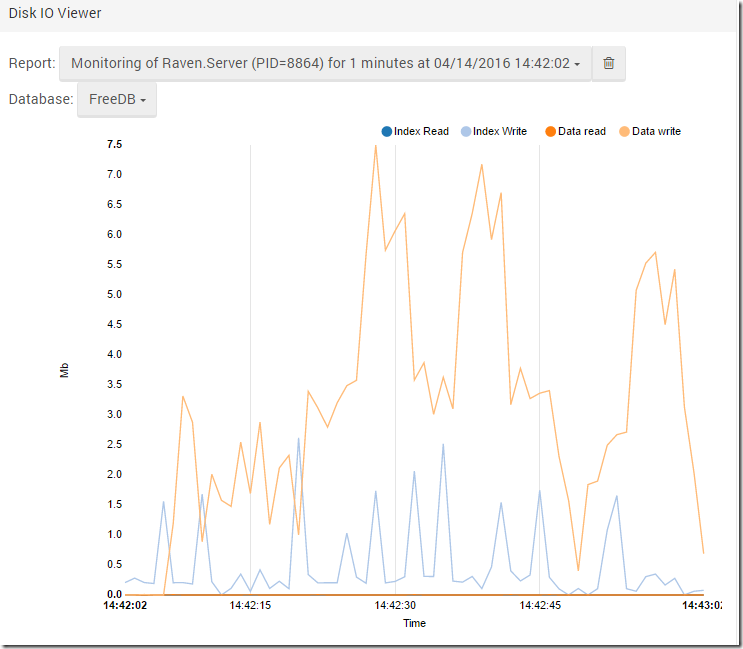

Well, it makes certain tasks that work on a per collection basis, such as indexing, subscriptions, and patching, much easier. You don’t need to scan all documents and filter out the stuff that isn’t relevant. Instead, you can just iterate over the entire result set directly. And that has its own advantages. Because we are storing documents in separate internal structures per collection, there is a much stronger chance that documents in the same collection will reside near one another on disk, which is going to increase performance and opens up some interesting optimization opportunities.