This could be a very short post, just: see CAP. Unfortunately, a lot of people have real experience using distributed transactions, and have good reason to believe that they work. And yes, they do work, as long as you don’t run into some of the interesting edge cases.

By the way, that is not a new observation; see the Two Generals problem.

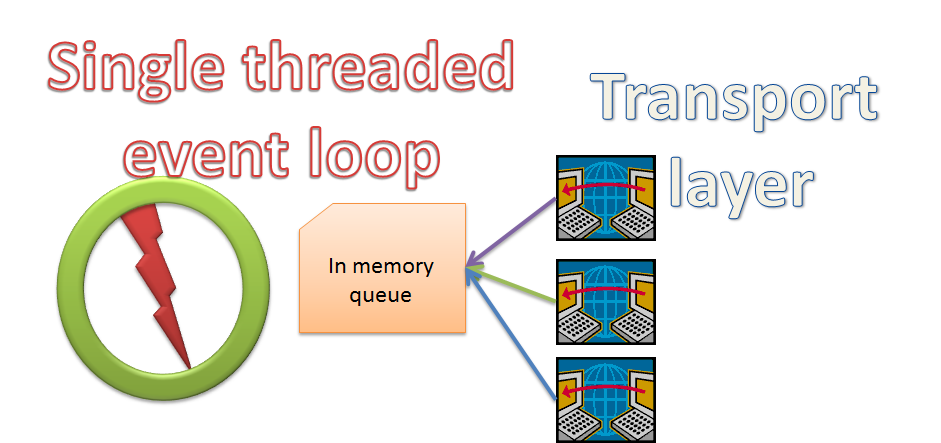

Allow me to demonstrate. Assume that we have a distributed system with the following actors:

This is a fairly typical setup. You have a worker that pulls messages from a queue and reads from and writes to a database based on those messages. To coordinate between the queue and the database, it uses a transaction coordinator such as MSDTC.

Transaction coordinators use a two phase commit protocol (or sometimes a three phase commit protocol) to ensure that either all of the data is committed, or none of it is.

The general logic goes like this:

- For each participant in the transaction, send a prepare message.

- If the participant answered “prepared”, it is guaranteeing that the transaction can be committed.

- If any of the participants failed on prepare, abort the whole transaction.

- If all of the participants successfully prepared, send the commit message to all of them.

The actual details are a bit more involved, obviously, but that is pretty much it.
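The steps above can be sketched in a few lines of Python. This is a minimal in-memory illustration, not how MSDTC or any real coordinator is implemented; `Participant`, `can_prepare` and `two_phase_commit` are hypothetical names made up for this example, and it deliberately ignores networking, logging and recovery.

```python
from typing import List


class Participant:
    """Hypothetical in-memory participant (e.g. a queue or a database)."""

    def __init__(self, name: str, can_prepare: bool = True) -> None:
        self.name = name
        self.can_prepare = can_prepare
        self.state = "active"

    def prepare(self) -> bool:
        # Answering "prepared" is a promise that a later commit will succeed.
        if self.can_prepare:
            self.state = "prepared"
            return True
        self.state = "aborted"
        return False

    def commit(self) -> None:
        self.state = "committed"

    def abort(self) -> None:
        self.state = "aborted"


def two_phase_commit(participants: List[Participant]) -> bool:
    # Phase 1: send a prepare message to every participant.
    for p in participants:
        if not p.prepare():
            # Any failure on prepare aborts the whole transaction.
            for q in participants:
                q.abort()
            return False
    # Phase 2: everyone prepared, so send commit to all of them.
    for p in participants:
        p.commit()
    return True
```

Note that the sketch assumes every message is delivered. The rest of this post is about what happens when that assumption does not hold.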

Now, let us take a look at an interesting scenario. Worker #1 pulls (in a distributed transaction) a message from the queue, and based on that message, it modifies the database. Then it tells the transaction coordinator that it can commit the transaction. At this point, the TC sends the prepare message to the database and the queue. Both reply that they have successfully prepared the transaction to be committed. The TC sends a commit message to the queue, completing the transaction from the queue’s point of view. However, at this point, the TC is unable to communicate with the database.

What happens now?

Before I answer that, let us look at another such scenario. The TC needs to commit a transaction. It sends a prepare message to the database, and receives a successful reply. However, before it manages to send a prepare message to the queue, it becomes unavailable.

Note that from the point of view of the database, the situation looks exactly the same in both scenarios. It got (and successfully replied to) a Prepare message, then it didn’t hear back from the transaction coordinator afterward.
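To make that point concrete, here is a small sketch that writes down which messages each participant received in the two scenarios. The scenario labels and the `messages_delivered` function are hypothetical, invented only for this illustration.

```python
def messages_delivered(scenario: str) -> dict:
    """Messages each participant actually received (hypothetical trace)."""
    if scenario == "commit lost":
        # Both prepared, the queue committed, the database commit never arrived.
        return {"queue": ["prepare", "commit"], "database": ["prepare"]}
    if scenario == "coordinator died early":
        # The coordinator vanished before it even reached the queue.
        return {"queue": [], "database": ["prepare"]}
    raise ValueError(f"unknown scenario: {scenario}")


first = messages_delivered("commit lost")
second = messages_delivered("coordinator died early")

# The database sees the same history in both scenarios...
same_for_database = first["database"] == second["database"]
# ...even though the queue's history, and so the correct outcome, differs.
same_for_queue = first["queue"] == second["queue"]
```

In the first scenario the only consistent answer is to commit, in the second it is to abort, and the database has nothing local to tell them apart.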

Now, that is where it gets interesting. In an abstract world, we can just wait with the pending transaction until the connection with the coordinator is resumed, and we can actually get a “commit / abort” notification.

But we aren’t in an abstract world. When we have such a scenario, we are actually locking records in the database (because they are in the process of being modified). What happens when another client comes to us and wants to modify the same record?

For example, it is quite common to host the business logic, queue and transaction coordinator on the same worker instance, while the database is on a separate machine. That means that in the image above, if Worker #1 isn’t available, we recover by directing all the users to the 2nd worker. However, at that point, we have a transaction that was prepared, but not committed.

When the user continues to make requests to our system, the 2nd worker, which has its own queue and transaction coordinator, is going to try to access the user’s record. This is the same user whose record is currently locked because of the ongoing transaction.

If we just let it hang in this manner, we have essentially created a situation where the user’s data becomes utterly unavailable (at least for writes). In order to resolve that, transactions come with a timeout. After the timeout has expired, we can roll back that transaction. Of course, that leads to a very interesting situation.
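A rough sketch of the locking and timeout behavior might look like the following. The `Database` class, the transaction ids and the `users/1` key are all hypothetical, and time is passed in explicitly so the example stays deterministic. A real database engine handles this very differently in detail.

```python
from typing import Dict


class Database:
    """Hypothetical database that locks records touched by prepared transactions."""

    def __init__(self, timeout: float) -> None:
        self.timeout = timeout
        self.locks: Dict[str, str] = {}          # record key -> owning transaction id
        self.prepared_at: Dict[str, float] = {}  # transaction id -> time of prepare

    def prepare(self, tx: str, key: str, now: float) -> bool:
        if key in self.locks and self.locks[key] != tx:
            return False  # another prepared transaction holds the lock
        self.locks[key] = tx
        self.prepared_at[tx] = now
        return True

    def expire(self, now: float) -> None:
        # Roll back prepared transactions that never heard from their coordinator.
        for tx, started in list(self.prepared_at.items()):
            if now - started >= self.timeout:
                del self.prepared_at[tx]
                self.locks = {k: t for k, t in self.locks.items() if t != tx}


db = Database(timeout=30.0)
db.prepare("tx-worker-1", "users/1", now=0.0)                # Worker #1 prepares, then goes away
blocked = not db.prepare("tx-worker-2", "users/1", now=10.0)  # the 2nd worker is locked out
db.expire(now=30.0)                                          # timeout: roll back and release
unblocked = db.prepare("tx-worker-2", "users/1", now=31.0)   # the 2nd worker can proceed
```

The timeout restores availability, but only by having the database decide the outcome on its own.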

Let us go back to the first scenario we explored. In this scenario, the queue got both Prepare & Commit messages, while the database got just a Prepare message. The timeout has expired, and the database has rolled back the transaction. In other words, as far as the queue is concerned, the transaction committed, and the message is gone. As far as the database is concerned, that transaction was rolled back, and never happened.
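Here is that first scenario played out as a tiny simulation. Everything in it (`run_first_scenario`, the `order-1` message, the row name) is made up for illustration, and the lost commit is modeled with a plain boolean rather than a real network failure.

```python
def run_first_scenario() -> dict:
    queue = {"state": "active", "messages": ["order-1"]}
    database = {"state": "active", "rows": []}
    pending_row = "row-for-order-1"

    # Phase 1: both participants prepare successfully.
    queue["state"] = "prepared"
    database["state"] = "prepared"

    # Phase 2: the commit reaches the queue, which discards the message...
    queue["state"] = "committed"
    queue["messages"].remove("order-1")

    # ...but it never reaches the database, whose timeout eventually fires.
    commit_delivered_to_database = False
    if commit_delivered_to_database:
        database["state"] = "committed"
        database["rows"].append(pending_row)
    else:
        database["state"] = "rolled_back"

    return {"queue": queue, "database": database}


result = run_first_scenario()
```

At the end, the message is gone from the queue and the row it was supposed to produce does not exist in the database, so the work is silently lost.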

Of course, the chance that something like that can happen in one of your systems? Probably one in a million.