It may surprise you, but the World’s Smallest No SQL Database has a well-defined concurrency model. Basically, it uses Last Write Wins, and we are safe from any concurrency issues. That is pretty much it, right?

Well, not really. In a real-world system, you actually need to do a lot more with concurrency. Some obvious examples:

- Create this value only if it doesn’t exist already.

- Update this value only if it hasn’t changed since I last saw it.

Implementing those is actually going to be pretty simple. All you need to do is to have a metadata field, version, that is incremented on every change. Here is the change that we need to make:

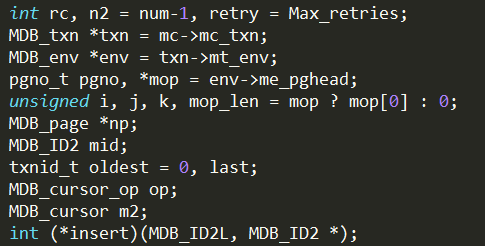

```csharp
using System;
using System.Collections.Concurrent;
using System.Net;
using System.Net.Http;
using System.Web.Http;

public class Data
{
    public byte[] Value;
    public int Version; // incremented on every change
}

// The members below live inside the Web API controller.
// ConcurrencyException is assumed to be defined elsewhere in the project.
static readonly ConcurrentDictionary<string, Data> data =
    new ConcurrentDictionary<string, Data>(StringComparer.InvariantCultureIgnoreCase);

public HttpResponseMessage Get(string key)
{
    Data value;
    if (data.TryGetValue(key, out value) == false)
        return new HttpResponseMessage(HttpStatusCode.NotFound);

    var response = new HttpResponseMessage
    {
        Content = new ByteArrayContent(value.Value)
    };
    // Header values are strings, so the version has to be converted.
    response.Headers.Add("Version", value.Version.ToString());
    return response;
}

public void Put(string key, [FromBody]byte[] value, int version)
{
    // The add factory receives the key, so it needs a parameter.
    data.AddOrUpdate(key, _ =>
    {
        // create: version 0 means "I expect this key not to exist"
        if (version != 0)
            throw new ConcurrencyException();
        return new Data { Value = value, Version = 1 };
    }, (_, prev) =>
    {
        // update: the caller must have seen the current version
        if (prev.Version != version)
            throw new ConcurrencyException();
        return new Data { Value = value, Version = prev.Version + 1 };
    });
}
```

As you can see, it merely doubled the amount of code that we had to write, but it is pretty obvious how it works. RavenDB actually uses something very similar for concurrency control on writes, although the RavenDB ETag mechanism is also doing a lot more.
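To make the two scenarios from the start of the post concrete, here is a minimal, self-contained sketch of the same version check outside of Web API. The `VersionedStore` class, its `GetVersion` method, and this `ConcurrencyException` definition are stand-ins invented for the example, not part of the original code.

```csharp
using System;
using System.Collections.Concurrent;

// Hypothetical stand-in for the exception type used in the post.
public class ConcurrencyException : Exception { }

// Hypothetical in-memory store that applies the same version check as Put.
public class VersionedStore
{
    private class Entry
    {
        public byte[] Value;
        public int Version;
    }

    private readonly ConcurrentDictionary<string, Entry> data =
        new ConcurrentDictionary<string, Entry>(StringComparer.InvariantCultureIgnoreCase);

    // Returns the current version of the key, or 0 if it does not exist.
    public int GetVersion(string key)
    {
        Entry entry;
        return data.TryGetValue(key, out entry) ? entry.Version : 0;
    }

    // expectedVersion == 0 means "create only if missing";
    // any other value must match the stored version.
    // Returns the version that was written.
    public int Put(string key, byte[] value, int expectedVersion)
    {
        var written = data.AddOrUpdate(key,
            _ =>
            {
                if (expectedVersion != 0)
                    throw new ConcurrencyException();
                return new Entry { Value = value, Version = 1 };
            },
            (_, prev) =>
            {
                if (prev.Version != expectedVersion)
                    throw new ConcurrencyException();
                return new Entry { Value = value, Version = prev.Version + 1 };
            });
        return written.Version;
    }
}
```

Calling `Put(key, value, 0)` covers "create only if it doesn’t exist", and calling `Put(key, value, lastSeenVersion)` covers "update only if it hasn’t changed". A second writer that still holds the old version gets a `ConcurrencyException` instead of silently overwriting the newer value.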

But the version system that we have above is actually not enough. It only handles concurrency control for updates. What about concurrency control for reads?

In particular, how are we going to handle non-repeatable reads or phantom reads?

- A non-repeatable read happens when you read a value, it is then deleted, and when you try to read it again, it is gone.

- A phantom read is the other way around: first you tried to read a value and didn’t find anything, then it was created, and when you read it again, you find it.

This is actually interesting, because you only care about those for the duration of a single operation / transaction / session. As it stands now, we actually have no way to handle either issue. This can lead to… interesting bugs that only happen under very specific scenarios.

With RavenDB, we actually handle both cases. Within a session’s lifetime, you are guaranteed that if you saw a document, you’ll continue to see this document until the end of the session, which deals with the issue of non-repeatable reads. Conversely, if you didn’t see a document, you will continue to not see it until the session is closed, which deals with phantom reads. This is done for Load; queries are a little bit different.
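One way to get this kind of guarantee is for the session to remember the first answer it got for each key, including "not found". The sketch below illustrates that idea only. It is not RavenDB’s implementation, and the `Session` class and its `loadFromStore` delegate are names made up for the example.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical session that remembers every Load result, including misses.
public class Session
{
    private readonly Func<string, byte[]> loadFromStore;
    private readonly Dictionary<string, byte[]> seen =
        new Dictionary<string, byte[]>(StringComparer.InvariantCultureIgnoreCase);

    // loadFromStore is assumed to return null when the key does not exist.
    public Session(Func<string, byte[]> loadFromStore)
    {
        this.loadFromStore = loadFromStore;
    }

    // Returns null when the key was not found the first time it was loaded.
    public byte[] Load(string key)
    {
        byte[] value;
        if (seen.TryGetValue(key, out value))
            return value; // same answer as the first time, hit or miss

        value = loadFromStore(key);
        seen[key] = value; // a null entry records "not found"
        return value;
    }
}
```

Because the cache lives only as long as the session object, a new session sees the current state of the store, which matches the "duration of a single session" scope described above.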

Another aspect of concurrency that we need to deal with is locking. Sometimes a user has a really good reason to lock a record for a period of time. This is pretty much the only way to handle “checking out” a record when multiple users want to make changes to it concurrently. Locks can be Write or ReadWrite locks. A Write lock allows other users to read the data, but prevents them from changing it. In practice, hitting a Write lock usually fails the operation immediately, rather than making you wait for it.

The reasoning behind failing writes immediately is that if you encounter a record with a Write lock, it was either already written to or is about to be written to. In that case, your write would be operating on stale data, so we might as well fail you immediately. For ReadWrite locks, the situation is a bit different. In this case, we also want to prevent readers from moving on. This is usually done to ensure a consistent state system-wide, and basically, any operation on the record has to wait until the lock is removed.
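The difference between the two lock modes can be sketched with a small lock table. This is an illustration under my own assumptions rather than code from the database: `LockTable`, `LockMode`, `RecordLockedException`, and the `BeforeRead` / `BeforeWrite` hooks are all hypothetical names, and lock owners are identified by a plain string.

```csharp
using System;
using System.Collections.Generic;
using System.Threading;

public enum LockMode { Write, ReadWrite }

// Hypothetical exception for a write rejected by someone else's Write lock.
public class RecordLockedException : Exception { }

public class LockTable
{
    private class LockInfo
    {
        public string Owner;
        public LockMode Mode;
    }

    private readonly object sync = new object();
    private readonly Dictionary<string, LockInfo> locks =
        new Dictionary<string, LockInfo>(StringComparer.InvariantCultureIgnoreCase);

    // Takes the lock if the record is not locked yet; never waits.
    public bool TryLock(string key, string owner, LockMode mode)
    {
        lock (sync)
        {
            if (locks.ContainsKey(key))
                return false;
            locks[key] = new LockInfo { Owner = owner, Mode = mode };
            return true;
        }
    }

    // Only the owner can release the lock; waiters are woken up afterwards.
    public void Unlock(string key, string owner)
    {
        lock (sync)
        {
            LockInfo info;
            if (locks.TryGetValue(key, out info) && info.Owner == owner)
            {
                locks.Remove(key);
                Monitor.PulseAll(sync);
            }
        }
    }

    // Readers pass through Write locks, but wait out ReadWrite locks held by others.
    public void BeforeRead(string key, string owner)
    {
        lock (sync)
        {
            LockInfo info;
            while (locks.TryGetValue(key, out info)
                   && info.Owner != owner && info.Mode == LockMode.ReadWrite)
                Monitor.Wait(sync);
        }
    }

    // Writers fail fast on someone else's Write lock and wait on a ReadWrite lock.
    public void BeforeWrite(string key, string owner)
    {
        lock (sync)
        {
            LockInfo info;
            while (locks.TryGetValue(key, out info) && info.Owner != owner)
            {
                if (info.Mode == LockMode.Write)
                    throw new RecordLockedException();
                Monitor.Wait(sync);
            }
        }
    }
}
```

A store would call `BeforeRead` at the start of Get and `BeforeWrite` at the start of Put. The sketch deliberately leaves out timeouts, so a waiter blocks for as long as the ReadWrite lock is held.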

In practice, ReadWrite locks can cause a lot of issues. The moment people start placing locks, you have to deal with lock expiration, manual unlocking, abandoned lock detection, lock maintenance, etc. About the only thing they are good for is letting a user make a set of changes and present them as one unit when we don’t have better transaction support. But I’ll discuss that in another post. In the meantime, just keep in mind that from my point of view, ReadWrite locks are pretty useless all around.