In my previous post, I discussed some of the problems that you run into when you try to have a single source of truth with regard to an entity definition. The question here is: how do we manage something like a Customer across multiple applications / modules?

For the purpose of discussion, I am going to assume that the data is either:

- Sitting in the same physical database (common if we are talking about different modules in the same application).

- Spread across multiple databases, with some data being replicated to all databases (common if we are talking about different applications).

We will focus on the customer entity as an example, and we will deal with billing and help desk modules / applications. There are some things that everyone can agree on with regard to the customer. Most often, a customer has an id, which is shared across the entire system, as well as some descriptive details, such as a name.

But even things that you would expect to be easily agreed upon aren’t really that easy. For example, what about contact information? The person handling billing at a customer is usually different from the person that we contact for help desk inquiries. And that is the stuff that we are supposed to agree on. We have much bigger problems when we have to deal with things like a customer’s payment status vs. outstanding help desk calls this month.

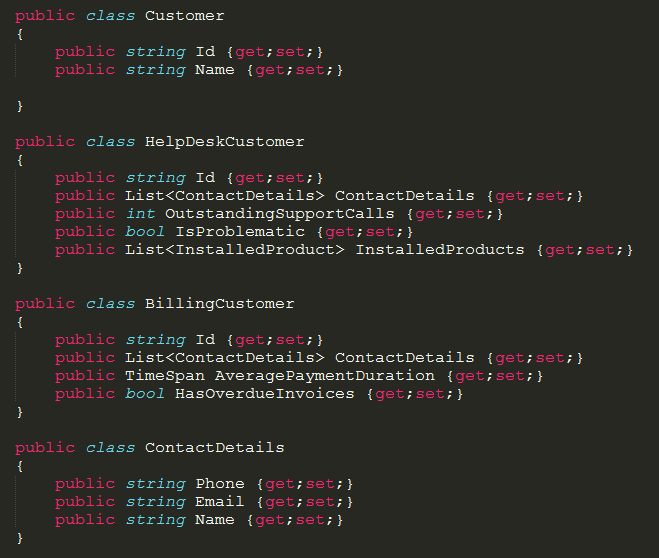

The way to resolve this is to forget about trying to shove everything into a single entity. Or, to be rather more exact, we need to forget about trying to think about the Customer entity as a single physical thing. Instead, we are going to have the following:

There are several things to note here:

- There is no inheritance relationship between the different aspects of a customer.

- We don’t give in and try to put what appear to be shared properties (ContactDetails) in the root Customer. Those details have a different meaning for each entity.
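As a rough sketch of that shape in code (the field names below are illustrative assumptions, not taken from the diagrams), the root customer holds only what everyone agrees on, and each module defines its own aspect that points back to the root by id:

```python
from dataclasses import dataclass


@dataclass
class ContactDetails:
    # Hypothetical fields, for illustration only.
    name: str
    email: str


@dataclass
class Customer:
    """Root entity: only the details every module agrees on."""
    id: str
    name: str


@dataclass
class BillingCustomer:
    """Billing's view of a customer. Not a subclass of Customer."""
    customer_id: str
    contact_details: ContactDetails  # the person we talk to about invoices
    payment_status: str


@dataclass
class HelpDeskCustomer:
    """Help desk's view of a customer. Not a subclass of Customer."""
    customer_id: str
    contact_details: ContactDetails  # the person we talk to about support calls
    outstanding_calls_this_month: int
```

Both aspects carry a `contact_details` property, but each one owns its own copy, with its own meaning, and the only thing tying them to the root is the shared id.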

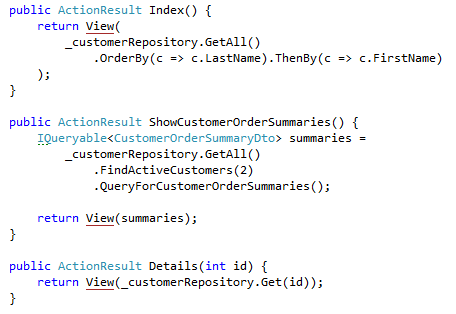

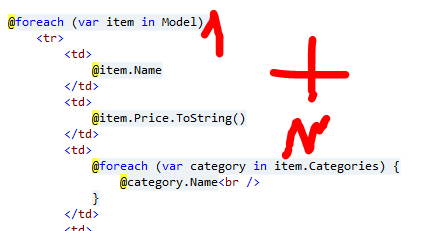

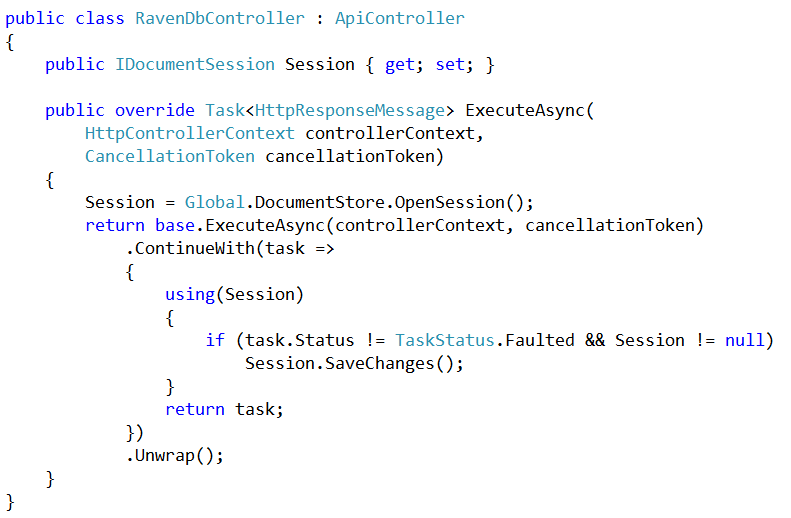

There are several ways to handle actually storing this information. If we are using a single database, then we will usually have something like:

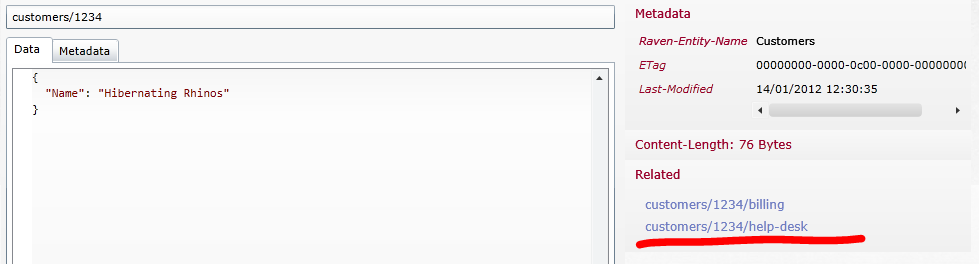

The advantage of that is that it makes it very easy to look at the entire customer entity for debugging purposes. I say for debugging specifically because, for production usage, there really isn’t anything that needs to look at the entire thing; every part of the system only cares about its own details.

You can easily load the root customer document and your own customer document whenever you need to.
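Here is a minimal sketch of what that load might look like, using an in-memory stand-in for the database. The `InMemoryStore` class and the id convention (`customers/7` for the root, `customers/7/billing` for the billing part) are assumptions made for the example:

```python
from typing import Dict, List, Optional

Document = Dict[str, object]


class InMemoryStore:
    """Toy document store, keyed by document id."""

    def __init__(self) -> None:
        self._docs: Dict[str, Document] = {}

    def put(self, doc_id: str, doc: Document) -> None:
        self._docs[doc_id] = dict(doc)

    def load(self, *doc_ids: str) -> List[Optional[Document]]:
        # Returns one entry per requested id; None for a missing document.
        return [self._docs.get(doc_id) for doc_id in doc_ids]


def load_billing_view(store: InMemoryStore, customer_id: str):
    """Load the root customer and billing's own document together."""
    root, billing = store.load(customer_id, f"{customer_id}/billing")
    return root, billing
```

Billing never asks for the help desk document, and the help desk module would have an equivalent function that never asks for billing's.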

More to the point, because they are different physical things, that solves a lot of the problems that we had with the shared model.

Versioning is not an issue. If billing needs to make a change, they can just go ahead and change things. They don’t need to talk to anyone, because no one else is touching their data.

Concurrency is not an issue. If you make concurrent modifications to billing and help desk, that is not a problem, because they are stored in two different locations. That is actually what you want, since it is perfectly all right to have those concurrent changes.
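To make the concurrency point concrete, here is a small sketch that assumes the store uses per-document optimistic concurrency (a version number checked on save). The `VersionedStore` class and the document ids are hypothetical. Two modules load their own documents at the same time and both saves succeed, because neither touches the other's document:

```python
class ConcurrencyError(Exception):
    pass


class VersionedStore:
    """Toy store with a version check per document (optimistic concurrency)."""

    def __init__(self):
        self._docs = {}  # doc_id -> (version, document)

    def load(self, doc_id):
        version, doc = self._docs.get(doc_id, (0, {}))
        return version, dict(doc)

    def save(self, doc_id, doc, expected_version):
        current_version, _ = self._docs.get(doc_id, (0, {}))
        if current_version != expected_version:
            raise ConcurrencyError(f"{doc_id} was modified by someone else")
        self._docs[doc_id] = (current_version + 1, dict(doc))
        return current_version + 1


store = VersionedStore()
store.save("customers/1/billing", {"payment_status": "pending"}, expected_version=0)
store.save("customers/1/helpdesk", {"open_calls": 1}, expected_version=0)

# Both modules load "at the same time"...
billing_version, billing = store.load("customers/1/billing")
helpdesk_version, helpdesk = store.load("customers/1/helpdesk")

# ...and both save without conflict, since they write to different documents.
billing["payment_status"] = "paid"
helpdesk["open_calls"] = 2
store.save("customers/1/billing", billing, billing_version)
store.save("customers/1/helpdesk", helpdesk, helpdesk_version)
```

Had both parts lived in one shared customer document, the second save would have carried a stale version and been rejected.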

It frees us from needing everyone’s acceptance on any change, except for changes to the root document. But as you can probably guess, the amount of information that we put on the root is minimal, precisely to avoid those sorts of situations.

This is how we handle things with a shared database, but what happens when we have multiple applications, with multiple databases?

As you can expect, we are going to have one database which contains all of the definitions of the root Customer (or other entities), and from there we replicate that information to all of the other databases. Why not have each application access two databases? Simple: it makes things so much harder. It is easier to have a single database to access and let replication take care of the rest.

What about updates in that scenario? Well, updates to the local part are easy, you just do that, but updates to the root customer details have to be handled differently.

The first thing to ask is whether there really is any need for any of the modules to actually update the root customer details. I can’t see any reason why you would want to do that (billing shouldn’t update the customer name, for example). But even if you do have such a need, the way to handle it is to have a part of the system that is responsible for the root entities database, and have it do the update, from where it will replicate to all of the other databases.
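A sketch of that arrangement, with hypothetical names throughout (`RootCustomerService`, `ModuleDatabase`). Replication is simulated here as a synchronous push to keep the example short; in a real system it would normally be asynchronous and handled by the database itself:

```python
class ModuleDatabase:
    """One application's database: a replicated root copy plus local data."""

    def __init__(self, name):
        self.name = name
        self.root_customers = {}  # replicated copies; the module only reads these
        self.local_docs = {}      # owned by the module; updated freely

    def apply_replication(self, customer_id, doc):
        self.root_customers[customer_id] = dict(doc)


class RootCustomerService:
    """The single part of the system allowed to change root customers."""

    def __init__(self, replicas):
        self._master = {}
        self._replicas = list(replicas)

    def update_customer(self, customer_id, **changes):
        doc = dict(self._master.get(customer_id, {"id": customer_id}))
        doc.update(changes)
        self._master[customer_id] = doc
        # Simulated replication: push the new root document to every database.
        for replica in self._replicas:
            replica.apply_replication(customer_id, doc)
        return dict(doc)


billing_db = ModuleDatabase("billing")
helpdesk_db = ModuleDatabase("helpdesk")
root_service = RootCustomerService([billing_db, helpdesk_db])

# A rename goes through the owning service, never through billing or help desk.
root_service.update_customer("customers/1", name="Acme Ltd.")

# A local update stays local and needs no coordination.
billing_db.local_docs["customers/1/billing"] = {"payment_status": "paid"}
```

Each module still talks to a single database, and the only writer of the root data is the service that owns it.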
