Wow! The last time I had a post that roused that sort of reaction, I was talking about politics and religion. Let us see if I can clarify my thinking and also answer many of the people who commented on the previous post.

One of the core values that I look for when hiring new people is their passion for the profession. Put simply, I think about what I do as a hobby that I actually get paid for, and I am looking for people who have the same mentality as I do. There are many ways to actually check for that. We recently made an offer to a candidate simply because her eyes lit up when she spoke about how she built games to teach kids Math.

A few objections to my approach came up again and again.

Indignation – how dare you expect me to put my own personal time into something that looks like work. That also came with a lot of explanations about family and kids, and references to me being a slave driver bastard.

Put simply, if you can’t be bothered to improve your own skills, I don’t want you.

Hibernating Rhinos absolutely believes that you need to invest in your developers, and I strongly believe that we are doing that. It starts with basic policies, like buying you any tech book you want, and goes on to sending developers to courses and conferences, and sitting down with people to make sure that they are thinking about their career properly. And a whole lot of other things besides.

Personally, my goal is to keep everyone who works for us right now for at least 5 years, and hopefully longer. I think that the best way of doing that is to ensure that developers are appreciated, that they have room to grow professionally, and that they get to deal with fun, complex and interesting problems for a significant part of their time at work. None of this removes your own obligation to maintain your skills.

In the same sense that I would expect a football player to be playing on a regular basis even in the off season, in the same sense that I wouldn’t visit a doctor who doesn’t spend time getting updated on what is changing in his field, in the same sense that I wouldn’t trust a book critic who doesn’t read for fun – I don’t believe that you can abdicate your own responsibility for keeping yourself aware of what is actually going on out there.

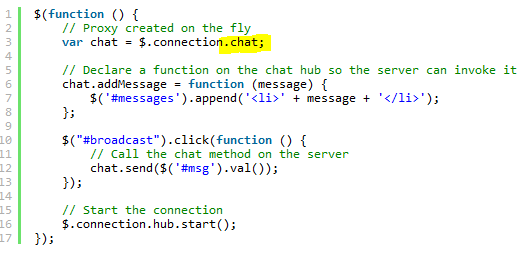

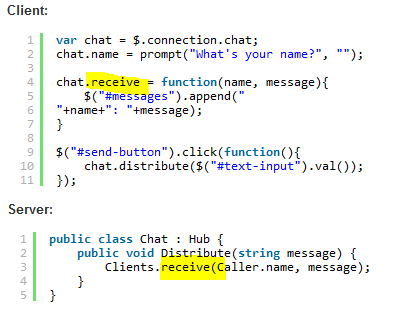

And I am sorry, I don’t care if you read a blog or two or ten. If you want to actually learn new stuff in development, you actually have to sit down and write some code. Anything that isn’t code isn’t really meaningful in our profession. And it is far too easy to talk the talk without being able to walk the walk. My company has absolutely no intention of doing anything with Node.js in the future, but I still wrote some code in Node.js, just to see what it feels like to actually do that. I still spend time writing code that is never going to reach production or be in any way useful, just to see if I can do something.

If you are a developer, your output is code, and that is what prospective employers will look for. From my perspective, hiring a developer without seeing any code is like hiring a photographer without looking at any of their pictures, or like getting a cook without tasting anything that he made.

And yes, it is your professional responsibility to make sure that you are hirable. That means that you keep your skills up to date and that you have something to show to someone that will give them some idea about what you are doing.

Time – I can’t find any.

There are 168 hours in a week. If you can’t put 4 – 6 hours a week into honing your own skills, trying things, just exploring… well, that probably indicates something about your priorities. I would like to hire people who think about what they do as a hobby. I usually work 8 – 10 hour days, 5 – 6 days a week. I am married, we’ve got two dogs, and I usually read / watch TV for at least 1 – 3 hours a day.

I have been at the Work Work Work Work All The Time Work Work Work And Some More Work parade. I got the T Shirt, I got the scary Burn Out Episode. I fully understand that working all the time is a Bad Idea. It is a Bad Idea for you, it is a Bad Idea for the company. This isn’t what I am talking about here.

Think about the children – I have kids, I can’t spend any time out of work doing this.

That one showed up a lot, actually. I am thinking about the children. I think it is absolutely irresponsible for someone with kids not to make damn sure that he is hirable, and updating your skills and maintaining a portfolio of projects is certainly part of that. I am not talking about spending 8 hours at the office, 8 hours doing pet projects and 1.5 minutes with your children (while you’ve got some code compiling).

I read professionally, but I don’t code - this is a variation on all of the other excuses, basically. Here is a direct quote: “I often find that well written blog entry/article will provide more education that can be picked up in a few minutes reading than several hours coding. And I can do that in my lunch break.”

That is nice, I also like to read a lot of Science Fiction, but I am a terrible writer. If you don’t actually practice, you aren’t any good. Sure, reading will teach you the high level concepts, but it doesn’t teach you how to apply them. You can read about WCF all day long, but it doesn’t teach you how to handle binding errors. Actually doing things will teach you that. You need actual practice to become good at something. In theory, there is no difference between reality and theory, and all of that.

I legally can’t - You signed a contract that said that you can’t do any pet projects, or that all of your work is owned by the company.

I sure do hope that you are well compensated for that, because it is going to make it harder for you to get hired.

You have a life – therefore you can’t spend time on pet projects.

So do I, and I manage. If you can’t, I probably don’t want you.

Wrong thing to do - I shouldn’t want someone who is on Stack Overflow all the time, or who will spend work time on pet projects.

This is usually from someone who thinks that the only thing that I care about is lines of code committed. If someone is on Stack Overflow a lot, or reading a lot of blogs, or writing a lot of blogs, that is awesome, as long as they manage to complete their tasks in a reasonable amount of time. I routinely write blog posts during work. It helps me think, it clarifies my thinking, and it usually gets me a lot of feedback on my ideas. That is a net benefit for me and for the company.

Some people hit a problem and may spin on it for hours, and VS will be the active window for all of that time. That isn’t a good thing! Others will go and answer totally unrelated questions on Stack Overflow while they are thinking about the problem, then come back to VS and resolve it in 2 minutes. As long as they manage to do the work, I don’t really care. In fact, having them on Stack Overflow probably means that questions about our products will be answered faster.

As for working on their own personal projects during work, the only thing that you need to do is somehow tie it to actual work. For example, that pet project may be converted into a sample application for our products. Or it can be a library that we will use, or any of a number of other options that keep things interesting.

You should note as well that I am speaking here about what we require from a candidate, not about what I consider to be our responsibilities toward our employees. I’ll talk about those in more detail in another post.

Then there was this guy, who actively offended me.

The author is a selfish ego maniac who only cares about himself. As an employer, you can choose to consume a resource (employee), get all you can out of it, then discard it. Doing so adds to the evil and bad in the world.

This is spoken like someone who never actually had to recruit someone, or actually pay to recruit someone. It costs a freaking boatload of money and takes a freaking huge amount of time to actually find someone that you want to hire. Treating employees as disposable resources is about as stupid as you can get, because we aren’t talking about getting someone who can flip burgers at minimum wage here.

We are talking about a 3 – 6 month training period just to get to the point where you can get good results out of a new guy. We are talking about 1 – 3 months of actually looking for the right person before that. I consider employees to be a valuable resource, something that I actively need to encourage, protect and grow. Absolutely the last thing that I want is to have a chain of disposable same-as-the-last-one developers in my company.

I have kicked people out of the office with instructions to go home and rest, because I would like to have them available tomorrow and the next day and month and year. Doing anything else is short sighted, morally repugnant and stupid.