This is a question that I get very frequently. I have always tried to dodge the bullet, but I get it so often that I feel I have to provide an answer. Obviously, I am (not so) slightly biased toward NHibernate, so please keep that in mind while you read this.

EF 4.0 has done a lot to handle the issues that were raised with the previous version of EF: things like transparent lazy loading, POCO classes, code only, etc. EF 4.0 is much nicer than EF 1.0.

The problem is that it is still a very young product, and the changes that were added only touched the surface. I already talked about some of my problems with the POCO model in EF, so I won’t repeat that, or my reservations with the Code Only model. But basically, the major problem that I have with those two is that there seems to be a wall between the experience of the community and what Microsoft is doing. Both of those features show many of the same issues that we ran into with NHibernate and Fluent NHibernate: issues that were addressed and resolved there, but that show up again in the EF implementations.

Nevertheless, even ignoring my reservations about those, there are other indications that NHibernate’s maturity makes itself known. I ran into that several times while I was writing the guidance for EF Prof: there are things that you simply can’t do with EF that are a natural part of NHibernate.

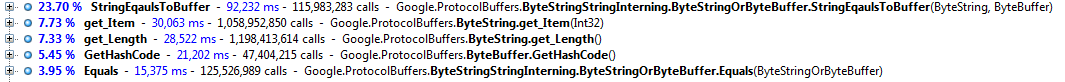

I am not going to try to do a point-by-point list of the differences, but it is interesting to look at where we do find major differences between the capabilities of NHibernate and EF 4.0. Most of the time, it is in the ability to fine-tune what the framework is actually doing. Usually, this is there to allow you to gain better performance from the system without sacrificing the benefits of using an OR/M in the first place.

Here is a small list:

- Write batching – NHibernate can be configured to batch all writes to the database, so that when you need to send several statements, NHibernate will make only a single round trip instead of going to the database for each statement.

- Read batching / multi queries / futures – NHibernate allows you to batch several queries into a single round trip to the database, instead of making a separate round trip for each query.

- Batched collection loads – When you lazy load a collection, NHibernate can find other collections of the same type that weren’t loaded, and load all of them in a single trip to the database. This is a great way to avoid having to deal with SELECT N+1.

- Collection with lazy="extra" – Lazy extra means that NHibernate adapts to the operations that you might run on top of your collections. That means that blog.Posts.Count will not force a load of the entire collection, but will instead issue a “select count(*) from Posts where BlogId = 1” statement, and that blog.Posts.Contains() will likewise result in a single query rather than paying the price of loading the entire collection into memory.

- Collection filters and paged collections – this allows you to define additional filters (including paging!) on top of your entity collections, which means that you can easily page through the blog.Posts collection without having to load the entire thing into memory.

- 2nd level cache – managing the cache is complex. I touched on why this is important before, so I’ll skip it for now.

- Tweaking – this is critical whenever you need something that is just a bit beyond what the framework provides. With NHibernate, in nearly all cases, you have an extension point; with EF, you are completely and utterly blocked.

- Integration & Extensibility – NHibernate has a lot of extension projects, such as NHibernate Search, NHibernate Validator, NHibernate Shards, etc. Such projects not only do not exist for EF, but for the most part they cannot be written, because EF has no extension points to speak of.
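To make a few of those items a bit more concrete, here is a minimal configuration sketch of how write batching, batched collection loads and lazy extra are typically switched on. Treat it as an illustration rather than a recommended setup: the `Blog` and `Post` classes, the `MyBlog` assembly and namespace, the `Blogs` table and the numeric values are all assumptions made for this example, and whether write batching actually kicks in depends on the ADO.NET driver you are using.

```xml
<!-- hibernate.cfg.xml (fragment): ask NHibernate to batch writes.
     The value 25 is an arbitrary example, not a recommendation. -->
<hibernate-configuration xmlns="urn:nhibernate-configuration-2.2">
  <session-factory>
    <property name="adonet.batch_size">25</property>
  </session-factory>
</hibernate-configuration>
```

```xml
<!-- Blog.hbm.xml (fragment): hypothetical mapping for the Blog / Posts example.
     Assembly, namespace and table names are assumed for illustration. -->
<hibernate-mapping xmlns="urn:nhibernate-mapping-2.2"
                   assembly="MyBlog" namespace="MyBlog">
  <class name="Blog" table="Blogs">
    <id name="Id">
      <generator class="hilo" />
    </id>
    <!-- lazy="extra": Count / Contains are answered with small queries
         instead of loading the whole collection.
         batch-size="10": when one uninitialized Posts collection is loaded,
         up to 10 collections of other Blog instances in the session
         are loaded in the same round trip. -->
    <bag name="Posts" inverse="true" lazy="extra" batch-size="10">
      <key column="BlogId" />
      <one-to-many class="Post" />
    </bag>
  </class>
</hibernate-mapping>
```

The point is less the specific attributes and more that each of these behaviors is a knob you can turn per session factory or per collection, without changing the code that uses the entities.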

On the other side, however:

- EF 4.0 has a better Linq provider than the current NHibernate implementation. This is being actively worked on, and NH 3.0 will close this gap.

- EF is from Microsoft.