Micro optimization decision process

Some parts of our codebase are simply going to be called a very large number of times. Those are the ones that we want to optimize, but at the same time, unless they are ridiculously inefficient, there isn’t much room for improvement.

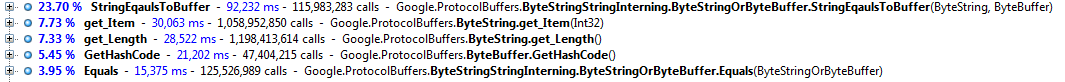

Let us look at this for a second:

The numbers are pretty hard to read in this manner, so I generally translate it to the following table:

| Method name | Cost per 1,000 invocations |
| --- | --- |
| StringEqualsToBuffer | 7 ms |
| get_Item | 0.2 ms |
| get_Length | 0.2 ms |
| GetHashCode | 4 ms |
| Equals | 1 ms |

It is important to note that what I am trying to check here is the relative cost of calling each method. I use the cost per thousand invocations just to get a number that we can actually understand easily, instead of dealing with nanoseconds.

As you can see, all of the methods in this piece of code are actually pretty fast; going by the table, the slowest completes in under ten microseconds per call. The problem is that they are called a lot. StringEqualsToBuffer cost me 90 seconds in this test run. This means that to improve its performance, we need to either make each call even cheaper or reduce the number of times it is called. Both are going to be hard.
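To make the arithmetic concrete, here is a small sketch (in Java, purely for illustration; the class and method names are made up for this example). It converts a cost per 1,000 invocations into a per-call cost and estimates how many calls a given total time implies. It takes the table figures at face value, so the call count is an estimate derived from them, not a number read from the profiler.

```java
final class CostEstimate {
    private static final double MICROS_PER_MILLI = 1000.0;

    // Per-call cost in microseconds, given a cost in milliseconds per 1,000 calls.
    static double perCallMicros(double msPerThousandCalls) {
        return msPerThousandCalls * MICROS_PER_MILLI / 1000.0;
    }

    // Approximate number of calls implied by a total time and a per-1,000-calls cost.
    static long impliedCalls(double totalSeconds, double msPerThousandCalls) {
        double totalMs = totalSeconds * 1000.0;
        return Math.round(totalMs / msPerThousandCalls * 1000.0);
    }

    public static void main(String[] args) {
        // StringEqualsToBuffer: 7 ms per 1,000 invocations, 90 seconds in total.
        System.out.println("per call (microseconds): " + perCallMicros(7.0));
        System.out.println("implied calls: " + impliedCalls(90.0, 7.0));
    }
}
```

With those inputs the estimate comes out at roughly 12.9 million calls, which is why shaving even a fraction of the per-call cost adds up.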

You can look at how I dealt with this particular case in this post, but right now I want to talk about the decision process, not just the action that I took.

Usually, in such situations, I find the most costly function (StringEqualsToBuffer in this case) and then look at the functions that it calls. Here, we can see that get_Item and get_Length are both costly functions called from StringEqualsToBuffer. Stupid micro optimization tactics, like referencing a field directly instead of going through a property, have enormous consequences in this type of scenario.
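A rough sketch of that tactic, written in Java rather than .NET: `TextBuffer` and both comparison methods are hypothetical stand-ins, not the actual StringEqualsToBuffer code. The first version goes through accessor methods on every iteration; the second reads the backing array and length into locals once.

```java
final class TextBuffer {
    // Exposed directly so that hot loops can skip the accessor calls.
    final char[] chars;
    final int length;

    TextBuffer(String s) {
        chars = s.toCharArray();
        length = chars.length;
    }

    int getLength() { return length; }

    char getItem(int i) { return chars[i]; }
}

final class BufferCompare {
    // Straightforward version: accessor calls on the buffer for every character.
    static boolean equalsViaAccessors(String s, TextBuffer buf) {
        if (s.length() != buf.getLength()) return false;
        for (int i = 0; i < buf.getLength(); i++) {
            if (s.charAt(i) != buf.getItem(i)) return false;
        }
        return true;
    }

    // Hot-path version: read the array and length once, then index directly.
    static boolean equalsViaFields(String s, TextBuffer buf) {
        final char[] chars = buf.chars;
        final int n = buf.length;
        if (s.length() != n) return false;
        for (int i = 0; i < n; i++) {
            if (s.charAt(i) != chars[i]) return false;
        }
        return true;
    }

    public static void main(String[] args) {
        TextBuffer buf = new TextBuffer("hello");
        System.out.println(equalsViaAccessors("hello", buf));
        System.out.println(equalsViaFields("hello", buf));
    }
}
```

Whether the second form is actually faster depends on the runtime, since a JIT compiler will often inline trivial accessors on its own. Measure before and after rather than assuming a win.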

Next, we have things like GetHashCode, which looks very slow (it takes about 4 microseconds to complete; I have a hard time calling that slow :-)). This function is slow not because it is doing something that can be optimized, but simply because of what it does. Since we can’t optimize the code itself, we want to do the next best thing and see if we can reduce the number of times it is called. In other words, apply caching. Mind you, caching means that we need to handle invalidation, so we need to consider whether we will actually gain something from it. Often, the cost of managing the cache is higher than the cost of calculating the value from scratch when we are talking about latencies this small.
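As a minimal sketch of caching a hash code with explicit invalidation (Java, with a hypothetical `CachedHashKey` class; the `computations` counter exists only so the example can show how often the hash is really computed):

```java
import java.util.Arrays;

final class CachedHashKey {
    private char[] chars;
    private int cachedHash;
    private boolean hashValid;

    // Instrumentation for this example only: counts real hash computations.
    int computations;

    CachedHashKey(String s) {
        chars = s.toCharArray();
    }

    // Any mutation must invalidate the cached hash.
    void set(String s) {
        chars = s.toCharArray();
        hashValid = false;
    }

    @Override
    public int hashCode() {
        if (!hashValid) {
            cachedHash = Arrays.hashCode(chars);
            hashValid = true;
            computations++;
        }
        return cachedHash;
    }

    @Override
    public boolean equals(Object o) {
        return o instanceof CachedHashKey
                && Arrays.equals(chars, ((CachedHashKey) o).chars);
    }

    public static void main(String[] args) {
        CachedHashKey key = new CachedHashKey("abc");
        key.hashCode();
        key.hashCode();
        System.out.println("computations: " + key.computations);
    }
}
```

The extra field and branch are the cost of managing the cache, so this only pays off when the hash is requested far more often than the value changes. A key that is mutated while it sits inside a hash table will be lost to lookups whether or not its hash is cached, so in practice such keys are best treated as immutable.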

Another issue to consider is the common memory vs. time tradeoff. It is easy to err too far to one side when you are focused on micro benchmarks. You get a routine that completes in 1 nanosecond in the common case but uses up 10 MB of cache. Sometimes you want that; sometimes it is a very bad tradeoff.
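A small illustration of that tradeoff, unrelated to the profiled code itself: counting set bits either with a precomputed lookup table (more memory, fewer operations per call) or with a loop (no extra memory, more work per call). The `BitCount` class is a made-up example in Java.

```java
final class BitCount {
    // 65,536 entries of one byte each: 64 KB held for the lifetime of the process.
    private static final byte[] TABLE = new byte[1 << 16];

    static {
        // The bit count of i is the bit count of i / 2 plus the lowest bit of i.
        for (int i = 1; i < TABLE.length; i++) {
            TABLE[i] = (byte) (TABLE[i >> 1] + (i & 1));
        }
    }

    // Two table lookups, one for each 16-bit half.
    static int viaTable(int x) {
        return TABLE[x & 0xFFFF] + TABLE[x >>> 16];
    }

    // No table: clears the lowest set bit until nothing is left.
    static int viaLoop(int x) {
        int n = 0;
        while (x != 0) {
            x &= x - 1;
            n++;
        }
        return n;
    }

    public static void main(String[] args) {
        System.out.println(viaTable(0xF0F0) + " " + viaLoop(0xF0F0));
    }
}
```

In a micro benchmark the table tends to look attractive, but it also competes with everything else for CPU cache, which is exactly the kind of cost a micro benchmark hides.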

I generally start with simple performance tuning: finding hotspots and figuring out how to fix them. Usually, it is some sort of big O problem, either in the function itself or in what it is called on. Those tend to be easy to fix and produce a lot of benefit. Afterward, you get to true algorithmic fixes (finding a better algorithm for the problem). Next, I run tests for memory usage to see whether, under the most extreme likely conditions, I am hitting my specified limits.
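A typical example of the easy big O kind of fix, sketched in Java with made-up names: a duplicate check that compares every pair of items, next to one that uses a hash set and only looks at each item once.

```java
import java.util.Arrays;
import java.util.HashSet;
import java.util.List;
import java.util.Set;

final class DuplicateCheck {
    // O(n^2): compares every pair of items.
    static boolean hasDuplicateQuadratic(List<String> items) {
        for (int i = 0; i < items.size(); i++) {
            for (int j = i + 1; j < items.size(); j++) {
                if (items.get(i).equals(items.get(j))) return true;
            }
        }
        return false;
    }

    // O(n) expected: Set.add returns false when the item was already present.
    static boolean hasDuplicateLinear(List<String> items) {
        Set<String> seen = new HashSet<>();
        for (String item : items) {
            if (!seen.add(item)) return true;
        }
        return false;
    }

    public static void main(String[] args) {
        List<String> items = Arrays.asList("a", "b", "c", "a");
        System.out.println(hasDuplicateQuadratic(items));
        System.out.println(hasDuplicateLinear(items));
    }
}
```

The second version trades some memory for time, which is one reason a memory check after the tuning pass is worthwhile.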

I’ll talk about reducing memory usage in a separate post, but once you have gone through that, one more run to verify that you haven’t traded off in the other direction (reduced memory at the expense of running time) completes the process.

Comments

Caching GetHashCode can be dangerous if you are using dictionary or hashtables!

Liviu,

See previous posts about this. In general, I AM using this in hashtables, and the cost savings are SIGNIFICANT

Have you noticed that StringEqaulsToBuffer is misspelled in the pb source?

"Eqauls" should be "Equals"
