Want to see how modern applications handle complexity, scale, and cutting-edge features without becoming unmanageable? In this deep-dive webinar, we move From CRUD to AI Agents, showcasing how RavenDB, a high-performance document database, simplifies the development of a complex Property Management application.

When building AI Agents, one of the challenges you have to deal with is the sheer amount of data that the agent may need to go through. A natural way to deal with that is not to hand the information directly to the model, but rather allow it to query for the information as it sees fit.

For example, in the case of a human resources assistant, we may want to expose the employer’s policies to the agent, so it can answer questions such as “What is the required holiday request time?”.

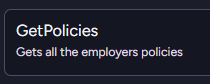

We can do that easily enough using the following agent-query mechanism:

If the agent needs to answer a question about a policy, it can use this tool to get the policies and find out what the answer is.

That works if you are a mom & pop shop, but what happens if you are a big organization, with policies on everything from requesting time off to bringing your own device to modern slavery prohibition? Calling this tool is going to hand all of those policies to the model.

That is going to be incredibly expensive, since you have to burn through a lot of tokens that are simply not relevant to the problem at hand.

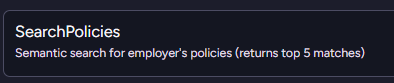

The next step is to filter the policies instead of returning all of them. We can do that using vector search, utilizing the model’s understanding of the data to help us find exactly what we want.

That is much better, but a search for “confidentiality contract” will get you the Non-Disclosure Agreement as well as the processes for hiring a new employee when their current employer isn’t aware they are looking, etc.

That can still be a lot of text to go through. It isn’t as much as everything, but still a pretty heavy weight.

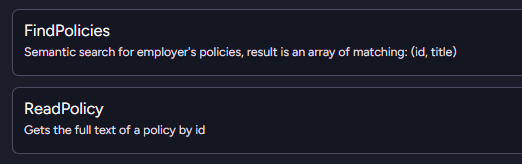

A nice alternative to this is to break it into two separate operations, as you can see below:

The model will first run the FindPolicies query to get the list of potential policies. It can then decide, based on their titles, which ones it is actually interested in reading the full text of.

You need to perform two tool calls in this case, but it actually ends up being both faster and cheaper in the end.

This is a surprisingly elegant solution, because it matches roughly how people think. No one is going to read a dozen books cover to cover to answer a question. We continuously narrow our scope until we find enough information to answer.

This approach gives your AI model the same capability to narrowly target the information it needs to answer the user’s query efficiently and quickly.
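To make the two-step flow concrete, here is a minimal sketch in plain JavaScript. It is not RavenDB's API: the `findPolicies` and `getPolicyText` functions, the in-memory `policies` array, and its contents are all hypothetical, and a naive keyword match stands in for vector search.

```javascript
// Illustrative in-memory policy store; ids, titles, and text are made up for this sketch.
const policies = [
  { id: "policies/1", title: "Non-Disclosure Agreement", text: "Full text of the NDA policy." },
  { id: "policies/2", title: "Holiday Requests", text: "Requests must be filed in advance." },
  { id: "policies/3", title: "Bring Your Own Device", text: "Personal devices must be enrolled." },
];

// Step 1: a cheap search that returns only ids and titles.
// A naive keyword match stands in for vector search here.
function findPolicies(query) {
  const terms = query.toLowerCase().split(/\s+/).filter(Boolean);
  return policies
    .filter(p => terms.some(t => p.title.toLowerCase().includes(t)))
    .map(({ id, title }) => ({ id, title }));
}

// Step 2: fetch the full text only for the policies the model picked.
function getPolicyText(ids) {
  return policies
    .filter(p => ids.includes(p.id))
    .map(({ id, text }) => ({ id, text }));
}

const candidates = findPolicies("holiday request");
const chosen = getPolicyText(candidates.map(c => c.id));
console.log(candidates, chosen);
```

The first call is small because it carries titles only. The full text is paid for just once, and only for the policies that made the cut.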

When using an AI model, one of the things that you need to pay attention to is the number of tokens you send to the model. They literally cost you money, so you have to balance the amount of data you send to the model against how much of it is relevant to what you want it to do.

That is especially important when you are building generic agents, which may be assigned a bunch of different tasks. The classic example is the human resources assistant, which may be tasked with checking your vacation days balance or called upon to get the current number of overtime hours that an employee has worked this month.

Let’s assume that we want to provide the model with a bit of context. We want to give the model all the recent HR tickets by the current employee. These can range from onboarding tasks to filling out the yearly evaluation, etc.

That sounds like it can give the model a big hand in understanding the state of the employee and what they want. Of course, that assumes the user is going to ask a question related to those issues.

What if they ask about the date of the next bank holiday? If we just unconditionally fed all the data to the model preemptively, that would be:

- Quite confusing to the model, since it will have to sift through a lot of irrelevant data.

- Pretty expensive, since we’re going to send a lot of data (and pay for it) to the model, which then has to ignore it.

- A compounding problem as the user & the model keep the conversation going, with all this unneeded information weighing everything down.

A nice trick that can really help is to not expose the data directly, but rather provide it to the model as a set of actions it can invoke. In other words, when defining the agent, I don’t bother providing it with all the data it needs.

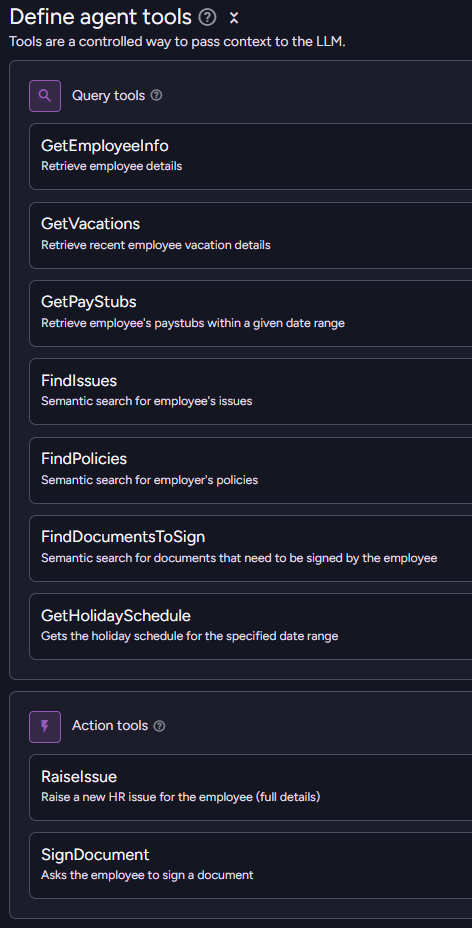

Rather, I provide the model a way to access the data. Here is what this looks like in RavenDB:

The agent is provided with a bunch of queries that it can call to find out various interesting details about the current employee. The end result is that the model will invoke those queries to get just the information it wants.

The overall number of tokens that we are going to consume will be greatly reduced, while the ability of the model to actually access relevant information is enhanced. We don’t need to go through stuff we don’t care about, after all.

This approach gives you a very focused model for the task at hand, and it is easy to extend the agent with additional information-retrieval capabilities.
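As a rough illustration of exposing data as actions rather than preloading it, here is a small sketch in plain JavaScript. Everything in it is an assumption made for the example (the `employeeData` object, the query names, and the `describeTools` / `invokeTool` helpers); it is not how RavenDB implements agent queries.

```javascript
// Hypothetical employee data; in a real system this would live in the database.
const employeeData = {
  vacationDays: { remaining: 12 },
  overtimeHours: { thisMonth: 6 },
  tickets: [
    { id: "tickets/1", subject: "Onboarding checklist" },
    { id: "tickets/2", subject: "Yearly evaluation form" },
  ],
};

// Each query is described to the model by name and description only.
const queries = {
  GetVacationBalance: { description: "Remaining vacation days", run: () => employeeData.vacationDays },
  GetOvertimeHours: { description: "Overtime hours this month", run: () => employeeData.overtimeHours },
  GetRecentTickets: { description: "Recent HR tickets", run: () => employeeData.tickets },
};

// What the model sees up front: the tool catalog, not the data.
function describeTools() {
  return Object.entries(queries).map(([name, q]) => ({ name, description: q.description }));
}

// Runs only the query the model asked for.
function invokeTool(name) {
  const query = queries[name];
  if (!query) throw new Error(`Unknown tool: ${name}`);
  return query.run();
}

const upfront = JSON.stringify(describeTools());
const answer = JSON.stringify(invokeTool("GetVacationBalance"));
const everything = JSON.stringify(employeeData);
console.log(answer.length, everything.length);
```

A question about vacation days pulls in only the vacation balance. The tickets never enter the conversation unless the model asks for them.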

Building an AI Agent in RavenDB is very much like defining a class: you define all the things that it can do, the initial prompt to the AI model, and you specify which parameters the agent requires. Like a class, you can create an instance of an AI agent by starting a new conversation with it. Each conversation is a separate instance of the agent, with different parameters, an initial user prompt, and its own history.

Here is a simple example of a non-trivial agent. For the purpose of this post, I want to focus on the parameters that we pass to the model.

    var agent = new AiAgentConfiguration(
        "shopping assistant",
        config.ConnectionStringName,
        "You are an AI agent of an online shop...")
    {
        Parameters =
        [
            new AiAgentParameter("lang",
                "The language the model should respond with."),
            new AiAgentParameter("currency", "Preferred currency for the user"),
            new AiAgentParameter("customerId", null, sendToModel: false),
        ],
        Queries = [ /* redacted... */ ],
        Actions = [ /* redacted... */ ],
    };

As you can see in the configuration, we define the lang and currency parameters as standard agent parameters. These are defined with a description for the model and are passed to the model when we create a new conversation.

But what about the customerId parameter? It is marked as sendToModel: false. What is the point of that? To understand this, you need to know a bit more about how RavenDB deals with the model, conversations, and memory.

Each conversation with the model is recorded using a conversation document, and part of this includes the parameters you pass to the conversation when you create it. In this case, we don’t need to pass the customerId parameter to the model; it doesn’t hold any meaning for the model and would just waste tokens.

The key is that you can query based on those parameters. For example, if you want to get all the conversations for a particular customer (to show them their conversation history), you can use the following query:

    from "@conversations"
    where Parameters.customerId = $customerId

This is also very useful when you have data that you genuinely don’t want to expose to the model but still want to attach to the conversation. You can set up a query that the model may call to get the most recent orders for a customer, and RavenDB will do that (using customerId) without letting the model actually see that value.
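The idea of a parameter that is stored with the conversation but hidden from the model can be sketched in a few lines of plain JavaScript. This is only an illustration under stated assumptions, not RavenDB's implementation: the `parametersForModel` and `bindQueryParameters` helpers, the `conversation` object, and the `limit` argument are all hypothetical.

```javascript
// Parameter definitions mirroring the shape of the configuration above.
const parameterDefs = [
  { name: "lang", description: "The language the model should respond with.", sendToModel: true },
  { name: "currency", description: "Preferred currency for the user", sendToModel: true },
  { name: "customerId", description: null, sendToModel: false },
];

// The conversation keeps every parameter value (hypothetical store).
const conversation = {
  parameters: { lang: "en", currency: "USD", customerId: "customers/42" },
};

// Only parameters flagged sendToModel end up in the model's context.
function parametersForModel(defs, values) {
  return Object.fromEntries(
    defs.filter(d => d.sendToModel).map(d => [d.name, values[d.name]])
  );
}

// Query parameters are bound from the conversation first, so the hidden value
// is used by the query without the model ever supplying or seeing it.
function bindQueryParameters(queryParamNames, values, modelArgs = {}) {
  return Object.fromEntries(
    queryParamNames.map(n => [n, n in values ? values[n] : modelArgs[n]])
  );
}

const visible = parametersForModel(parameterDefs, conversation.parameters);
const bound = bindQueryParameters(["customerId", "limit"], conversation.parameters, { limit: 5 });
console.log(visible, bound);
```

In this sketch the conversation's value always wins over anything the model passes for the same name, so the model cannot swap in a different customerId.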

The RavenDB team will be at Microsoft Ignite in San Francisco next week, and yours truly will be there in person 🙂. We are going to show off RavenDB and its features both new and old.

I'll be hosting a session demonstrating how to build powerful AI Agents using RavenDB. I’ll show practical examples and the features that make RavenDB suitable for AI-driven applications.

If you're at Microsoft Ignite or in the San Francisco area next week, I'd like to meet up. Feel free to reach out to discuss RavenDB, AI, architecture or anything else.

RavenDB Ltd (formerly Hibernating Rhinos) has been around for quite some time! In its current form, we've been building the RavenDB database for over 15 years now. In late 2010, we officially moved into our first real offices.

Our first place was a small second-story office space deep in the industrial section, a bit out of the way, but it served us incredibly well until we grew and needed more space. Then we grew again, and again, and again! Last month, we moved offices yet again.

This new location represents our fifth office, with each relocation necessitated by our growth exceeding the capacity of the previous premises.

If you ever pass by Hadera, where our offices now proudly reside, you'll spot a big sign as you enter the city!

You can also see what it looks like from the inside:

I ran into this tweet from about a month ago:

programmers have a dumb chip on their shoulder that makes them try and emulate traditional engineering there is zero physical cost to iteration in software - can delete and start over, can live patch our approach should look a lot different than people who build bridges

I have to say that I would strongly disagree with this statement. Using the building example, it is obvious that moving a window in an already built house is expensive. Obviously, it is going to be cheaper to move this window during the planning phase.

The answer is that it may be cheaper, but it won’t necessarily be cheap. Let’s say that I want to move the window by 50 cm to the right. Would it be up to code? Is there any wiring that needs to be moved? Do I need to consider the placement of the air conditioning unit? What about the emergency escape? Any structural impact?

This is when we are at the blueprint stage - the equivalent of editing code on screen. And it is obvious that such changes can be really expensive. Similarly, in software, every modification demands a careful assessment of the existing system, long-term maintenance, compatibility with other components, and user expectations. This intricate balancing act is at the core of the engineering discipline.

A civil engineer designing a bridge faces tangible constraints: the physical world, regulations, budget limitations, and environmental factors like wind, weather, and earthquakes. While software designers might not grapple with physical forces, they contend with equally critical elements such as disk usage, data distribution, rules & regulations, system usability, operational procedures, and the impact of expected future changes.

Evolving an existing software system presents a substantial engineering challenge. Making significant modifications without causing the system to collapse requires careful planning and execution. The notion that one can simply "start over" or "live deploy" changes is incredibly risky. History is replete with examples of major worldwide outages stemming from seemingly simple configuration changes. A notable instance is the Google outage of June 2025, where a simple missing null check brought down significant portions of GCP. Even small alterations can have cascading and catastrophic effects.

I’m currently working on a codebase whose age is near the legal drinking age. It also has close to 1.5 million lines of code and a big team operating on it. Being able to successfully run, maintain, and extend that over time requires discipline.

In such a project, you face issues such as different versions of the software deployed in the field, backward compatibility concerns, etc. For example, I may have a better idea of how to structure the data to make a particular scenario more efficient. That would require updating the on-disk data, which is a 100% engineering challenge. We have to take into consideration physical constraints (updating a multi-TB dataset without downtime is a tough challenge).

The moment you are actually deployed, you have so many additional concerns to deal with. A good example of this may be that users are used to stuff working in a certain way. But even for software that hasn’t been deployed to production yet, the cost of change is high.

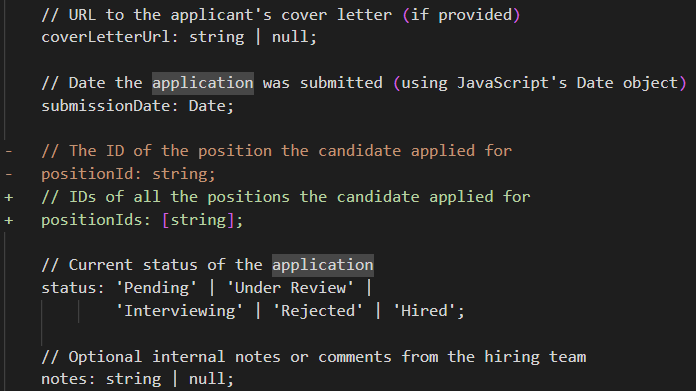

Consider the effort associated with this update to a JobApplication class:

This looks like a simple change, right? It just requires that you (partial list):

- Set up database migration for the new shape of the data.

- Migrate the existing data to the new format.

- Update any indexes and queries on the position.

- Update any endpoints and decide how to deal with backward compatibility.

- Create a new user interface to match this whenever we create/edit/view the job application.

- Consider any existing workflows that inherently assume that a job application is for a single position.

- Can you be partially rejected? What is your status if you interviewed for one position but received an offer for another?

- How does this affect the reports & dashboard?
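To give a sense of just the first two items on that list, here is a minimal sketch of a data migration in JavaScript. The field names are assumptions made for illustration (a single `position` field becoming a `positions` array); the actual class change is not reproduced here.

```javascript
// Hypothetical shapes: the old document stores a single `position`,
// the new one stores a `positions` array.
function migrateJobApplication(doc) {
  if (Array.isArray(doc.positions)) return doc; // already migrated
  const { position, ...rest } = doc;
  return { ...rest, positions: position == null ? [] : [position] };
}

// Readers may need to accept both shapes while old and new versions coexist.
function getPositions(doc) {
  return migrateJobApplication(doc).positions;
}

const oldDoc = { applicant: "James", position: "positions/1" };
const newDoc = migrateJobApplication(oldDoc);
console.log(newDoc);
```

Even this toy version has to decide what a missing position means and has to be safe to run twice, and it does not touch indexes, endpoints, the UI, or workflows.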

This is a simple change, no? Just a few characters on the screen. No physical cost. But it is also a full-blown Epic Task for the project - even if we aren’t in production, have no data to migrate, or integrations to deal with.

Software engineers operate under constraints similar to other engineers, including severe consequences for mistakes (global system failure because of a missing null check). Making changes to large, established codebases presents a significant hurdle.

The moment that you need to consider more than a single factor, whether in your code or in a bridge blueprint, there is a pretty high cost to iterations. Going back to the bridge example, the architect may have a rough idea (is it going to be a Roman-style arch bridge or a suspension bridge) and have a lot of freedom to play with various options at the start. But the moment you begin to nail things down and fill in the details, the cost of change escalates quickly.

Finally, just to be clear, I don’t think that the cost of changing software is equivalent to changing a bridge after it was built. I simply very strongly disagree that there is zero cost (or indeed, even low cost) to changing software once you are past the “rough draft” stage.

Hiring the right people is notoriously difficult. I have been personally involved in hiring decisions for about two decades, and it is an unpleasant process. You deal with an utterly overwhelming influx of applications, often from candidates using the “spray and pray” approach of applying to all jobs.

At one point, I got the resume of a divorce lawyer in response to a job posting for a backend engineer role. I was curious enough to follow up on that, and no, that lawyer didn’t want to change careers. He was interested in being a divorce lawyer. What kind of clients would want their divorce handled by a database company, I refrained from asking.

Companies often resort to expensive external agencies to sift through countless candidates.

In the age of AI and LLMs, is that still the case? This post will demonstrate how to build an intelligent candidate screening process using RavenDB and modern AI, enabling you to efficiently accept applications, match them to appropriate job postings, and make an initial go/no-go decision for your recruitment pipeline.

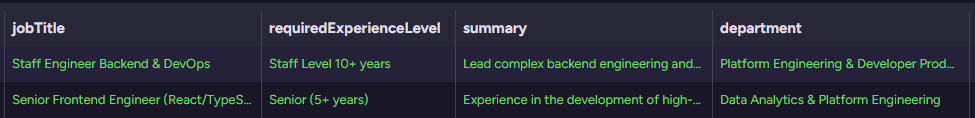

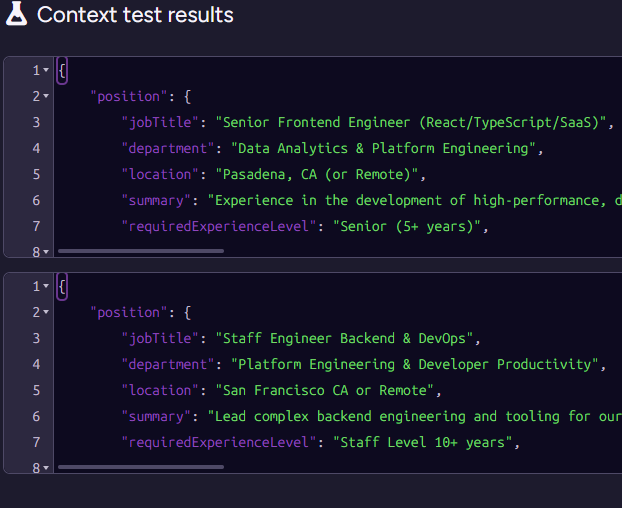

We’ll start our process by defining a couple of open positions:

- Staff Engineer, Backend & DevOps

- Senior Frontend Engineer (React/TypeScript/SaaS)

Here is what this looks like at the database level:

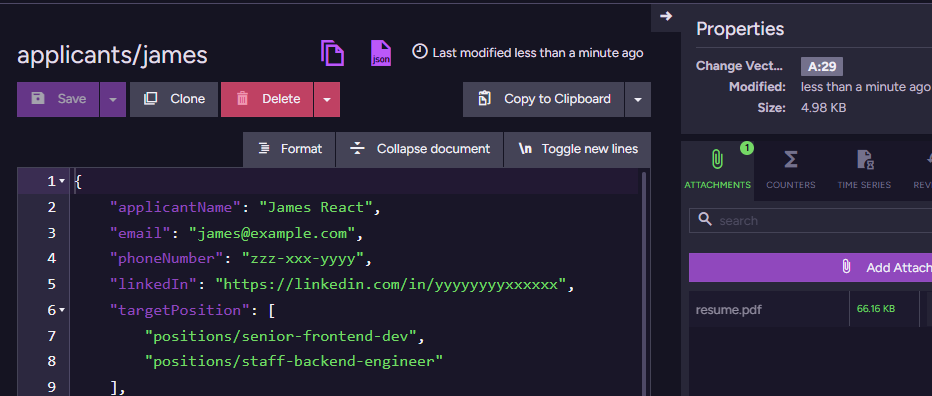

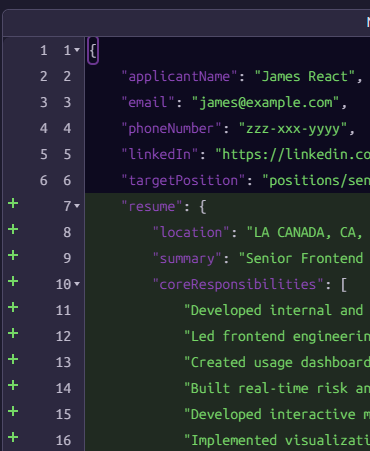

Now, let’s create a couple of applicants for those positions. We have James & Michael, and they look like this:

Note that we are not actually doing a lot here in terms of the data we ask the applicant to provide. We mostly gather the contact information and ask them to attach their resume. You can see the resume attachment in RavenDB Studio. In the above screenshot, it is in the right-hand Attachments pane of the document view.

Now we can use RavenDB’s new Gen AI attachments feature. I defined an OpenAI connection with gpt-4.1-mini and created a Gen AI task to read & understand the resume. I’m assuming that you’ve read my post about Gen AI in RavenDB, so I’ll skip going over the actual setup.

The key is that I’m applying the following context extraction script to the Applicants collection:

const resumePdf = loadAttachment("resume.pdf");

if(!resumePdf) return;

ai.genContext({name: this.applicantName})

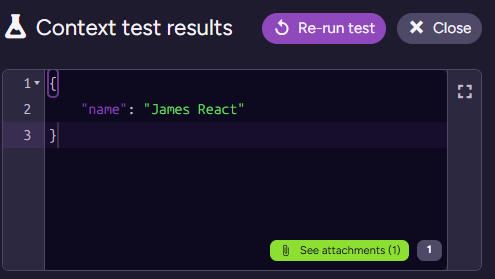

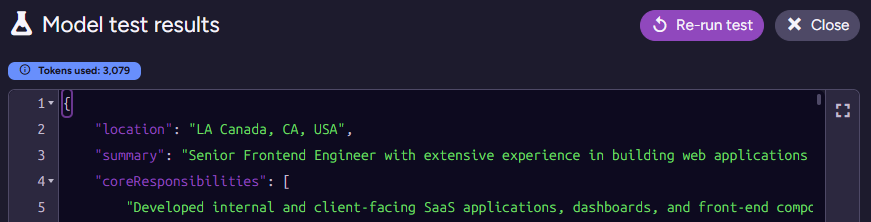

.withPdf(resumePdf);When I test this script on James’s document, I get:

Note that we have the attachment in the bottom right - that will also be provided to the model. So we can now write the following prompt for the model:

    You are an HR data parsing specialist. Your task is to analyze the provided CV/resume content (from the PDF)
    and extract the candidate's professional profile into the provided JSON schema.

    In the requiredTechnologies object, every value within the arrays (languages, frameworks_libraries, etc.) must be a single,
    distinct technology or concept. Do not use slashes (/), commas, semicolons, or parentheses () to combine items within a single string. Separate combined concepts into individual strings (e.g., "Ruby/Rails" becomes "Ruby", "Rails").

We also ask the model to respond with an object matching the following sample:

    {
        "location": "The primary location or if interested in remote option (e.g., Pasadena, CA or Remote)",
        "summary": "A concise overview of the candidate's history and key focus areas (e.g., Lead development of data-driven SaaS applications focusing on React, TypeScript, and Usability).",
        "coreResponsibilities": [
            "A list of the primary duties and contributions in previous roles."
        ],
        "requiredTechnologies": {
            "languages": [
                "Key programming and markup languages that the candidate has experience with."
            ],
            "frameworks_libraries": [
                "Essential UI, state management, testing, and styling libraries."
            ],
            "tools_platforms": [
                "Version control, cloud platforms, build tools, and project management systems."
            ],
            "data_storage": [
                "The database technologies the candidate is expected to work with."
            ]
        }
    }

Testing this on James’s applicant document results in the following output:

I actually had to check where the model got the “LA Canada” issue. That shows up in the real resume PDF, and it is a real place. I triple-checked, because I was sure this was a hallucination at first ☺️.
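The prompt asks the model to keep each technology as a separate string, but a model may not always comply. As a hedged aside, a defensive post-processing step could look roughly like the sketch below. The `splitTechnologies` helper is hypothetical and is not part of the Gen AI task described in this post.

```javascript
// Splits combined entries on slashes, commas, semicolons, and parentheses,
// then trims and de-duplicates while keeping first-seen order.
function splitTechnologies(items) {
  const seen = new Set();
  const result = [];
  for (const item of items) {
    for (const part of item.split(/[\/,;()]/)) {
      const name = part.trim();
      if (name && !seen.has(name)) {
        seen.add(name);
        result.push(name);
      }
    }
  }
  return result;
}

console.log(splitTechnologies(["Ruby/Rails", "React (Redux)", "TypeScript"]));
```

Note that a blunt split like this would also break up names that legitimately contain a slash (CI/CD, for instance), so a real version would likely need an allow-list.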

The last thing we need to do is actually deal with the model’s output. We use an update script to apply the model’s output to the document. In this case, it is as simple as just storing it in the source document:

    this.resume = $output;

And here is what the output looks like:

Reminder: Gen AI tasks in RavenDB use a three-stage approach:

- Context extraction script - gets data (and attachment) from the source document to provide to the model.

- Prompt & Schema - instructions for the model, telling it what it should do with the provided context and how it should format the output.

- Update script - takes the structured output from the model and applies it back to the source document.
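The three stages can be sketched as three plain JavaScript functions. This is a simulation under stated assumptions, not RavenDB's runtime: `extractContext`, `callModelStub`, `applyUpdate`, and `runTask` are hypothetical names, the `attachments` field is a made-up stand-in for real attachments, and the model call is a stub that returns a fixed shape.

```javascript
// Stage 1: context extraction, picks what the model gets to see.
function extractContext(doc) {
  if (!doc.attachments || !doc.attachments["resume.pdf"]) return null;
  return { name: doc.applicantName, pdf: doc.attachments["resume.pdf"] };
}

// Stage 2: stub standing in for the model call (prompt + schema).
// A real task would send the context to an LLM; this returns a fixed shape.
function callModelStub(context) {
  return { summary: `Parsed resume for ${context.name}`, coreResponsibilities: [] };
}

// Stage 3: update script, applies the structured output to the document.
function applyUpdate(doc, output) {
  doc.resume = output;
}

function runTask(doc) {
  const context = extractContext(doc);
  if (!context) return false; // nothing to do until the attachment exists
  applyUpdate(doc, callModelStub(context));
  return true;
}

const applicant = { applicantName: "James", attachments: { "resume.pdf": "<binary>" } };
runTask(applicant);
console.log(applicant.resume.summary);
```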

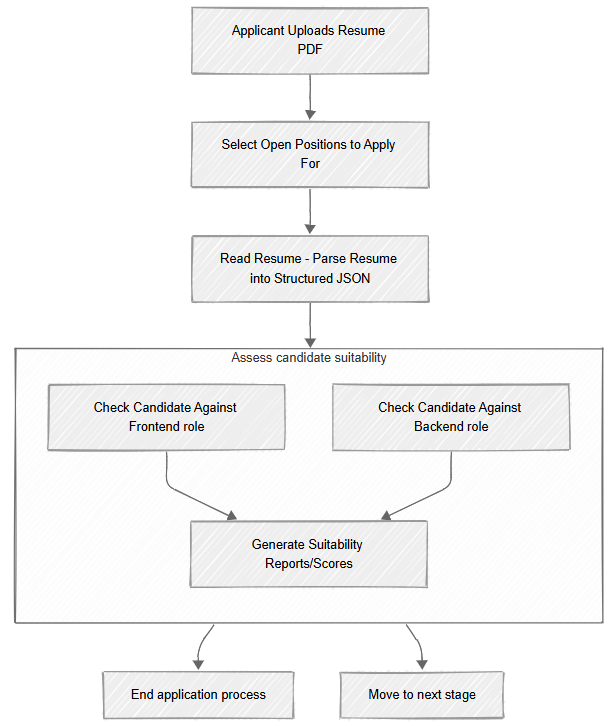

In our case, this process starts with the applicant uploading their CV, and then we have the Read Resume task running. This parses the PDF and puts the result in the document, which is great, but it is only part of the process.

We now have the resume contents in a structured format, but we need to evaluate the candidate’s suitability for all the positions they applied for. We are going to do that using the model again, with a new Gen AI task.

We start by defining the following context extraction script:

    // wait until the resume (parsed CV) has been added to the document
    if (!this.resume) return;

    for(const positionId of this.targetPosition) {
        const position = load(positionId);
        if(!position) continue;
        ai.genContext({
            position,
            positionId,
            resume: this.resume
        })
    }

Note that this relies on the resume field that we created in the previous task. In other words, we set things up in such a way that we run this task after the Read Resume task, but without needing to put them in an explicit pipeline or manage their execution order.

Next, note that we output multiple contexts for the same document. Here is what this looks like for James: we have two separate contexts, one for each position James applied for.

This is important because we want to process each position and resume independently. This avoids context leakage from one position to another. It also lets us process multiple positions for the same applicant concurrently.
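To see the fan-out in isolation, here is a small simulation of the script above in plain JavaScript. The `load` stand-in, the `collectContexts` helper, and the sample documents are assumptions made for the example; in RavenDB, the script environment provides `load` and `ai.genContext`.

```javascript
// Hypothetical positions keyed by document id.
const positionsById = {
  "positions/1": { jobTitle: "Staff Engineer, Backend & DevOps" },
  "positions/2": { jobTitle: "Senior Frontend Engineer" },
};

// Stand-in for the script environment's load().
const load = id => positionsById[id] || null;

// Gathers what ai.genContext() would emit: one context per position.
function collectContexts(applicant) {
  const contexts = [];
  if (!applicant.resume) return contexts; // resume not parsed yet
  for (const positionId of applicant.targetPosition) {
    const position = load(positionId);
    if (!position) continue; // skip dangling references
    contexts.push({ position, positionId, resume: applicant.resume });
  }
  return contexts;
}

const james = {
  resume: { summary: "Backend engineer" },
  targetPosition: ["positions/1", "positions/2", "positions/missing"],
};
const contexts = collectContexts(james);
console.log(contexts.map(c => c.positionId));
```

Each context carries exactly one position plus the resume, so the model never sees the other position while judging this one.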

Now, we need to tell the model what it is supposed to do:

    You are a specialized HR Matching AI. Your task is to receive two structured JSON objects — one describing a Job Position and one
    summarizing a Candidate Resume — and evaluate the suitability of the resume for the position.

    Assess the overlap in jobTitle, summary, and coreResponsibilities. Does the candidate's career trajectory align with the role's needs (e.g., has matching experience required for a Senior Frontend role)?

    Technical Match: Compare the technologies listed in the requiredTechnologies sections. Identify both direct matches (must-haves) and gaps (missing or weak areas). Consider substitutions such as js or ecmascript to javascript or node.js.

    Evaluate if the candidate's experience level and domain expertise (e.g., SaaS, Data Analytics, Mapping Solutions) meet or exceed the requirements.

And the output schema that we want to get from the model is:

    {
        "explanation": "Provide a detailed analysis here. Start by confirming the high-level match (e.g., 'The candidate is an excellent match because...'). Detail the strongest technical overlaps (e.g., React, TypeScript, Redux, experience with BI/SaaS). Note any minor mismatches or significant overqualifications (e.g., candidate's deep experience in older technologies like ASP.NET classic is not required but demonstrates full-stack versatility).",
        "isSuitable": false
    }

Here I want to stop for a moment and talk about what exactly we are doing here. We could ask the model just to judge whether an applicant is suitable for a position and save a bit on the number of tokens we spend. However, getting just a yes/no response from the model is not something I recommend.

There are two primary reasons why we want the explanation field as well. First, it serves as a good check on the model itself. The order of properties matters in the output schema: the model generates its output in order, so we first ask it to explain itself and only then to render the verdict. That means the verdict is produced with the reasoning already written out, and it is going to be more focused.

The other reason is a bit more delicate. You may be required to provide an explanation to the applicant if you reject them. I won’t necessarily put this exact justification in the rejection letter to the applicant, but it is something that is quite important to retain in case you need to provide it later.

Going back to the task itself, we have the following update script:

    this.suitability = this.suitability || {};
    this.suitability[$input.positionId] = $output;

Here we are doing something quite interesting. We extracted the positionId at the start of this process, and we are using it to associate the output from the model with the specific position we are currently evaluating.

Note that we are actually evaluating multiple positions for the same applicant at the same time, and we need to execute this update script for each of them. So we need to ensure that we don’t overwrite previous work.
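Here is a tiny simulation of why keying by positionId avoids overwriting earlier results. The `applySuitability` wrapper and the sample outputs are hypothetical; the two statements inside it are the same as in the update script above.

```javascript
// Mirrors the update script: create the map once, then write one key per position.
function applySuitability(doc, input, output) {
  doc.suitability = doc.suitability || {};
  doc.suitability[input.positionId] = output;
}

const applicantDoc = {};
applySuitability(applicantDoc, { positionId: "positions/1" },
  { explanation: "Strong backend match.", isSuitable: true });
applySuitability(applicantDoc, { positionId: "positions/2" },
  { explanation: "Limited frontend experience.", isSuitable: false });

console.log(Object.keys(applicantDoc.suitability));
```

Running the script again for the same position replaces only that position's entry and leaves the others alone.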

I’m not mentioning this in detail because I covered it in my previous Gen AI post, but it is important to note that we have two tasks sourced from the same document. RavenDB knows how to handle the data being modified by both tasks without triggering an infinite loop. It seems like a small thing, but it is the sort of thing that not having to worry about really simplifies the whole process.

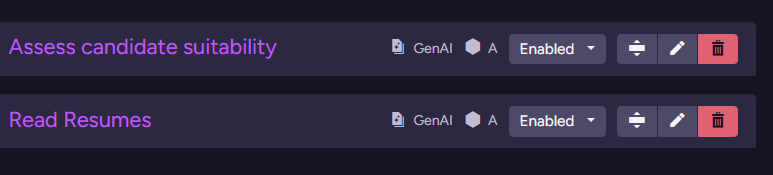

With these two tasks, we have now set up a complete pipeline for the initial processing of applicants to open positions. As you can see here:

This sort of process allows you to integrate into your system stuff that, until recently, looked like science fiction. A pipeline like the one above is not something you could just build before, but now you can spend a few hours and have this capability ready to deploy.

Here is what the tasks look like inside RavenDB:

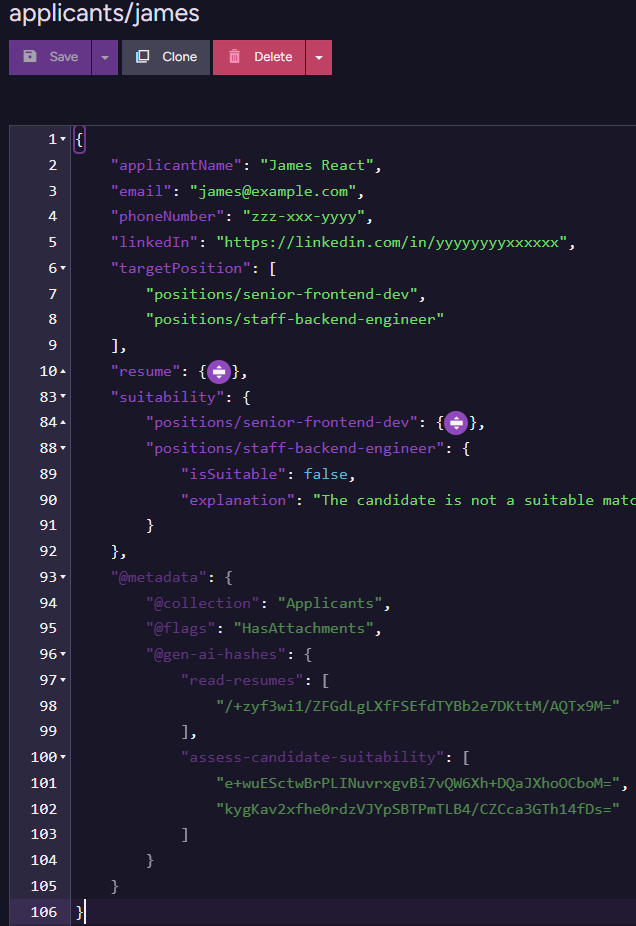

And the final applicant document after all of them have run is:

You can see the metadata for the two tasks (which we use to avoid going to the model again when we don’t have to), as well as the actual outputs of the model (resume, suitability fields).

A few more notes before we close this post. I chose to use two GenAI tasks here, one to read the resume and generate the structured output, and the second to actually evaluate the applicant’s suitability.

From a modeling perspective, it is easier to split this into distinct steps. You can ask the model to both read the resume and evaluate suitability in a single shot, but I find that it makes it harder to extend the system down the line.

Another reason you want to have different tasks for this is that you can use different models for each one. For example, reading the resume and extracting the structured output is something you can run on gpt-4.1-mini or gpt-5-nano, while evaluating applicant suitability can make use of a smarter model.

I’m really happy with the new RavenDB AI integration features. We got some early feedback that is really exciting, and I’m looking forward to seeing what you can do with them.

You might have noticed a theme going on in RavenDB. We care a lot about performance. The problem with optimizing performance is that sometimes you have a great idea, you implement it, the performance gains are there to be had - and then a test fails… and you realize that your great idea now needs to be 10 times more complex to handle a niche edge case.

We did a lot of work around optimizing the performance of RavenDB at the lowest levels for the next major release (8.0), and we got a persistently failing test that we started to look at.

Here is the failing message:

Restore with MaxReadOpsPerSecond = 1 should take more than '11' seconds, but it took '00:00:09.9628728'

The test in question is ShouldRespect_Option_MaxReadOpsPerSec_OnRestore, part of the MaxReadOpsPerSecOptionTests suite of tests. What it tests is that we can limit how fast RavenDB can restore a database.

The reason you want to do that is to avoid consuming too many system resources when performing a big operation. For example, I may want to restore a big database, but I don’t want to consume all the IOPS on the server, because there are additional databases running on it.

At any rate, we started to get test failures on this test. And a deeper investigation revealed something quite amusing. We made the entire system more efficient. In particular, we managed to reduce the size of the buffers used significantly, so we can push more data faster. It turns out that this is enough to break the test.

The fix was to reduce the actual time that we budget as the minimum viable time. And I have to say that this is one of those pull requests that lights a warm fire in my heart.

AI agents are only as powerful as their connection to data. In this session, Oren Eini, CEO and Co-Founder of RavenDB, demonstrates why the best place for AI agents to live is inside your database. Moderated by Ariel, Director of Product Marketing at RavenDB, the webinar explores how to eliminate orchestration complexity, keep agents safe, and unlock production-ready AI with minimal code.

You’ll see how RavenDB integrates embeddings and vector search directly into the database, runs generative AI tasks such as translation and summarization on your documents, and defines AI agents that can query and act on your data safely. Learn how to scope access, prevent hallucinations, and use AI agents to handle HR queries, payroll checks, and issue escalations.

Discover how RavenDB supports any LLM provider (OpenAI, DeepSeek, Ollama, and more), works seamlessly on the edge or in the cloud, and gives developers a fast path from prototype to production without a tangle of external services. This session shows how to move beyond chatbots into real, action-driven agents that are reliable, predictable, and simple to extend. If you’re exploring AI-driven applications, this is where to start.