When race conditions & unbounded result sets are actually the solution

Originally posted at 4/13/2011

As much as I rile against unbounded result set, I recently run into the first real case where “I need all of the data now” was a valid use case.

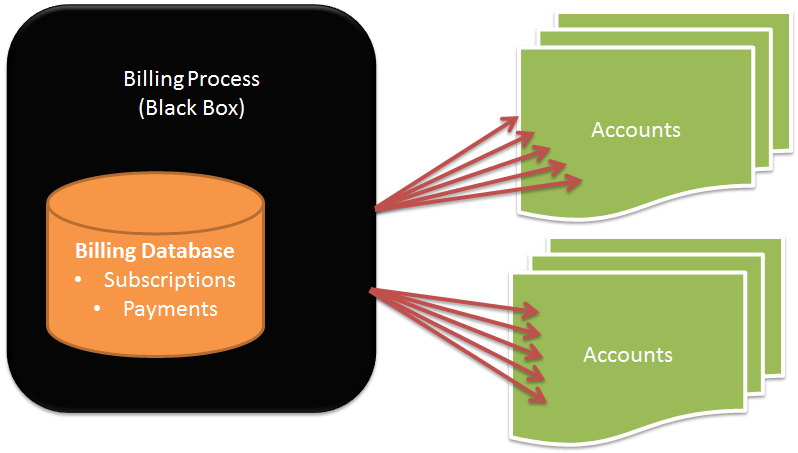

The issue is charging micro payments from customers in a particular market. In that market, accounts are usually handled using prepaid codes. So you open an account and load some amount of money into it. Once that money is over, there is no way to charge the account any longer. Users will occasionally re-charge their account. Now, we are talking about recurring billing scenario, where we need to charge users every week some amount of money.

So far, seems pretty reasonable, I hope. The catch is that it is pretty routine for billing to fail for insufficient funds. The strategy to resolve this is to analyze the user’s behavior and retry at likely times when we hope to catch the account with some money in it.

The problem?

Since failures are common, and since most people behave in a similar fashion, what happens is that billing cycles trends to focus on the likely times when we hope to find some money there. In other words, let us say that we noticed that on Sunday evening, most people charge their account…

What this means is that we will have to try to charge those accounts in that time period. To make things interesting, there are other parties as well, which probably also managed to figure out the times when most accounts have money in them. At that point, it actually becomes a race, who will be able to charge the accounts first, and get the money.

The requirement

Starting at a given time, charge as many accounts as fast as possible. Charging each account is a separate action, we don’t have the ability to do batching.

How would you solve this problem?

My solution, in the next post…

Comments

Having information about 'when' it will happen, you quantize time with, for instance 5 minute periods and get all the users within one period?

"At that point, it actually becomes a race, who will be able to charge the accounts first, and get the money|"

Pretty interesting business model ;-)

I'd prepare as much as possible before the billing process starts. E.g. retrieve all necessary biling information from the relatively slow DB to memory. Hardware and network optimization will also help.

You need the user's current balance. Storing that as a fixed number is prone to race conditions that cause you to overpay and record the wrong balance for the customer account. You could store a series of operations, but eventually you need to reconcile them. This way is prone to overpaying, but you can report an accurate balance.

So, you use database level locks and store the actual balance. Don't read before acquiring the write lock for that customer account. Record the account transactions in an append-only table and periodically ensure consistency.

Since no customer account needs to make reference to any other account, this partitions extremely well.

I assume batching would mean some kind of a script, where actions executed one-by-one in a chain. And we cannot do that, therefore I would vote for a parallel execution of the task.

In a simple scenario it can be multithreading, where each thread performs the job for one account in parallel to other threads.

Since we want it to be really fast, I would proceed in the following manner:

before running the service that charges clients, I would create tables with the same structure of the payments in the database, the number of tables would be equal to the number of parallel processes I would use

have the given process run in parallel, the more the better (equal with the number of tables created), and have them store the results in their tables

when the code finishes, it should do a "merge" from the new tables to the base table

drop the newly created tables

Ah, I misinterpreted. This is for the billing party, not the payment processor.

Comment preview