It is less expensive to do it inefficiently!

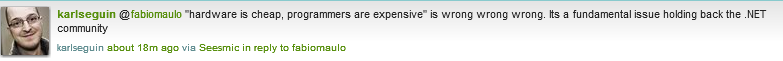

This is a continuation of a twitter conversation that I had with Karl Seguin.

One of the problems for developers is that we tend to have a hard time distinguishing between the right thing from a technical perspective and the right thing from a business perspective.

One of the known issues with the profiler from the very start was how to handle large amounts of data. The profiler used to keep all data in memory, which put a hard limit on how much data it could manage. The right solution would have been to build persistence into the profiler from the get-go. It would eliminate an entire class of problems, after all, and we knew that we would have to get there in the end.

It is also, quite incidentally, the wrong decision to make. By keeping everything in memory, we significantly reduced the complexity that we had to deal with during the development of the profiler. It let us concentrate on getting features out the door and get to the point where people actually pay for it.

That decision later cost me about two weeks of complex coding, and I would do it again in a heartbeat. One very important thing to remember is that in a project, every action that you take has a certain ROI value. Spending those two weeks earlier in the game would have meant that I wouldn’t have been able to provide as many features, get the required feedback and actually get some money in so we could continue development.

Of special interest are those comments:

The problem with this approach is that it makes an erroneous assumption: that your time is free. This is an erroneous assumption because even if you don’t pay yourself, your time represents an opportunity cost, time you could have spent implementing some other feature.

Let us try playing with Karl’s numbers for a minute, okay? Let us take a low end outsourcing hourly rate as our example, 20$ per hour.

We have two solutions in front of us, one will take about a week to develop, but would be three times less efficient than the alternative, which would take four weeks to develop. Remember, in most cases, the inefficient solution is simpler. It takes a lot of thought and sometimes complexity to figure out how to do something in the most efficient way possible.

In both cases, we are talking about a two-man team working 40 hours a week, so the total cost for the first solution is 2x40x20$ = 1,600$, while the cost for the second one is four times that, 6,400$. The difference is 4,800$. That is a lot of money.
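The arithmetic above is easy to check in a few lines of Python. This is only a sketch: the function name and the 40-hour week constant are assumptions made for illustration, while the team size, durations and hourly rate are the ones from the text.

```python
HOURS_PER_WEEK = 40  # assumed full-time week, matching the 40 in the text


def development_cost(team_size: int, weeks: int, hourly_rate: int) -> int:
    """Labor cost in dollars for a team working full weeks at a flat rate."""
    return team_size * weeks * HOURS_PER_WEEK * hourly_rate


# Two developers at 20$ per hour: one week versus four weeks.
inefficient = development_cost(team_size=2, weeks=1, hourly_rate=20)
efficient = development_cost(team_size=2, weeks=4, hourly_rate=20)

print(f"inefficient: ${inefficient:,}")
print(f"efficient:   ${efficient:,}")
print(f"difference:  ${efficient - inefficient:,}")
```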

Amazon EC2 Double Extra Large costs (sounds like a McDonald's order, doesn’t it?) are:

- 1.20$ / hour – Linux

- 1.44$ / hour – Windows

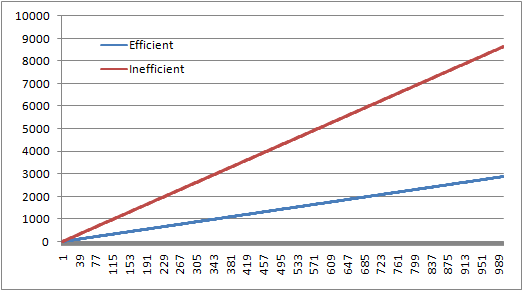

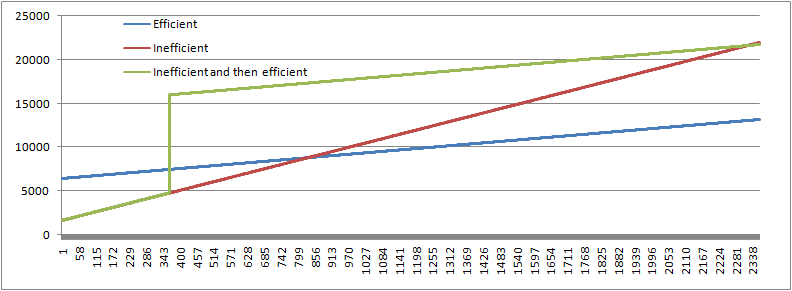

We will use the Windows number, since it is higher, and let us see what the results are. In the first solution, we consume 6 hours per day for this feature. Using the second one, we consume 2 hours per day. Let us plot the numbers over a period of two months, shall we?
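For readers who want the raw numbers behind the plot, here is a minimal sketch of the running cost alone. The function name is hypothetical; the 1.44$ rate and the 6 and 2 hours per day come from the text, and development cost is deliberately left out at this stage.

```python
WINDOWS_RATE = 1.44  # $/hour, the Windows price quoted above


def running_cost(hours_per_day: float, days: int,
                 hourly_rate: float = WINDOWS_RATE) -> float:
    """Cumulative machine cost in dollars after `days` days."""
    return hours_per_day * hourly_rate * days


for day in (1, 30, 60):
    slow = running_cost(6, day)  # inefficient solution: 6 hours per day
    fast = running_cost(2, day)  # efficient solution: 2 hours per day
    print(f"day {day:>2}: inefficient ${slow:7.2f}  efficient ${fast:7.2f}")
```

After two months the inefficient solution has cost about 518$ in machine time against about 173$ for the efficient one.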

This is a true no brainer, right? The more efficient solution (blue) handily thumps the less efficient (red) one. But what happens when we factor in the development cost?

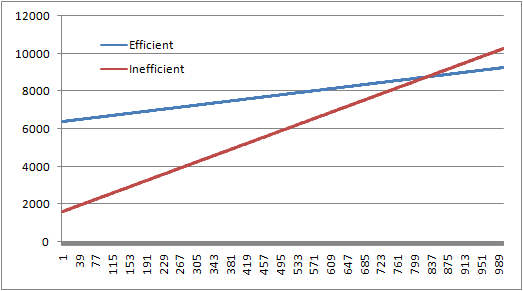

Now the story looks far different, right?

It takes over 800 days for the more efficient solution to gain over the less efficient one. Think about this, 800 days in which the less efficient solution is less expensive. That is two years and two months.
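The break-even point is simply the extra development cost divided by the daily saving in machine time. A rough sketch, assuming the 20$ per hour labor rate and the Windows EC2 price used so far (the function and parameter names are made up for illustration):

```python
def break_even_days(extra_dev_cost: float, hours_saved_per_day: float,
                    machine_rate: float) -> float:
    """Days until the machine-time savings repay the extra development cost."""
    daily_saving = hours_saved_per_day * machine_rate
    return extra_dev_cost / daily_saving


days = break_even_days(
    extra_dev_cost=6400 - 1600,   # efficient minus inefficient labor cost
    hours_saved_per_day=6 - 2,    # machine hours saved each day
    machine_rate=1.44,            # $/hour
)
print(f"break-even after about {days:.0f} days ({days / 365:.1f} years)")
```

With these inputs the daily saving is 5.76$, which puts the break-even at roughly 833 days.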

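Let us plug in more realistic numbers for the cost of labor, shall we? Let us say that the labor cost is 80$ per hour (which is still cheap). At that point, with the same two-man team, the difference in development cost grows to 19,200$, and it would take a little over 9 years to break even.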

Nitpicker corner:

- The inefficient solution has to be adequate, of course, if it isn’t, it isn’t considered.

- Even if the inefficient solution is an order of magnitude worse than the efficient one, it will still be cheaper for a year!

- Yes, I am ignoring the refactoring cost:

  - Inefficient – 1 week

  - Efficient – 4 weeks

  - Inefficient now, refactoring in a year – 1 week now, 7 weeks in a year.

That would look something like this:
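As a rough numerical sketch of the three options, the snippet below computes cumulative cost (labor plus machine time) per strategy. It assumes the 20$ per hour rate, the two-man team and the Windows EC2 price from earlier, and it makes one simplifying assumption of its own: the 7 weeks of refactoring are paid in full on day 365 and take effect immediately. All names are hypothetical.

```python
WEEK_COST = 2 * 40 * 20    # two developers, 40 h/week, 20$ per hour
SLOW_PER_DAY = 6 * 1.44    # inefficient: 6 machine-hours/day at 1.44$
FAST_PER_DAY = 2 * 1.44    # efficient: 2 machine-hours/day at 1.44$
REFACTOR_DAY = 365         # assumption: the refactoring lands on day 365


def inefficient(day: int) -> float:
    """1 week of development, then the slow running cost."""
    return 1 * WEEK_COST + SLOW_PER_DAY * day


def efficient(day: int) -> float:
    """4 weeks of development, then the fast running cost."""
    return 4 * WEEK_COST + FAST_PER_DAY * day


def refactor_later(day: int) -> float:
    """1 week up front, then 7 more weeks of work paid at REFACTOR_DAY."""
    if day < REFACTOR_DAY:
        return inefficient(day)
    return (
        inefficient(REFACTOR_DAY)
        + 7 * WEEK_COST
        + FAST_PER_DAY * (day - REFACTOR_DAY)
    )


for day in (0, 364, 365, 730):
    print(f"day {day:>3}: inefficient ${inefficient(day):>9,.2f}  "
          f"efficient ${efficient(day):>9,.2f}  "
          f"refactor later ${refactor_later(day):>9,.2f}")
```

Under these assumptions the deferred path costs more in total once the refactoring is paid for, but none of that extra money is spent until day 365.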

But, and this is important, after a year, we know whether we:

- actually need it?

- can afford it?

I would choose the inefficient solution every time.

In other words, we have technical debt vs. monetary debt and time debt here. And in most cases, getting to the point when you have a cash flow is the most significant target you should approach.

Comments

There is also the aspect of lost sales opportunities:

Waiting for the efficient version may result in lost sales up front.

Shipping an overly inefficient product may result in lost sales later.

Add these realities into the mix, and I tend to support the refactoring path most of the time.

Very well said. We are in the middle of a new project at the moment and we have a deadline to meet.

Of course we spent some extra time at the start to make the framework easy to work with, but since then we have just been implementing features, because in the end a 100% finished product that is inefficient and may violate fundamental coding principles is still a product that can be sold, and not a half-finished piece of art.

We have, however, put in our project plan that soon after release, the code base will be refactored so that changes thereafter are painless and easily testable.

I agree. The balance between the right technical solution, the time it takes to implement it and the money required to do so should be in the back of every developer's mind. Finding the right solution is a challenge, however. Following the YAGNI principle can get you far, and the rest, I guess, is left up to experience.

I think it's "lame".

Companies produce crappy software because they need to deliver quick and get my money.

I will get hooked and use their third party tool component, and it will work in their demos, but it will fail in custom scenarios.

Sometimes I will have to wait for the next version of the software in order to have something functional.

The quick decision will be to dump the component. But if you invested enough time to use it and code against it, you will not deliver yourself if you decide to change it.

So I will deliver crappy software too.

This is the curse of the software industry. We need to deliver shit soon, and just let the marketing guys compensate for the smell.

@liviu: You can select the most cost effective route and still create good software. You can compromise on scalability and maintainability. Not so much on customer satisfaction.

I think all developers should work on their own startup or as part of a startup to realise the financial constraints and what the real business concerns are.

The goal of building software 90% of the time is to build a product that people will buy to solve their particular problem.

Getting products out the door and getting actual and not perceived feedback will inevitably involve incurring technical debt.

Too many startups are from developers who ignore marketing and build something that there is not a market for.

If your product is not selling then your correct, uber solution will not stand you in good stead.

Get version 1 out the door and iterate fast.

I am trying to bring products to market and I have to look at offshore development purely because of economics.

I think the craftsmen bores have their heads in the sand if they cannot see the financial constraints that most microisvs are under.

You ignored one specific very important issue: every time you release a version, you can't change it later on unless you can break your customer's software. This thus means that whatever you release, it is fixed in time, and will stay there forever.

Over time, you'll learn how to do things more efficiently, and then the old api/ the old tools are a burden, because they have to be kept around otherwise your existing customers will be angry and won't upgrade.

Another thing is that there might not be time to research how to do things more efficiently, because it might very well be that you need to release v2 with new features a.s.a.p. as well.

So it's not as simple as deciding to release early and fix inefficiencies later on, that's a very risky choice to make. I don't say you should develop everything as great as a human can possibly make it, but you at least have to realize that fixing it AFTER release is likely not going to happen and likely very difficult to do without creating a lot of problems for your customers.

To be blunt: only consultants who have a dayjob would consider this as a viable option. Any person who is depending on his/her ISV for income would not make this decision as it likely will not work out as planned.

This post reminded me of a famous real world example

www.wired.com/magazine/2009/12/fail_duke_nukem/

Won't it give competitors an edge to market their similar products more aggressively?

Ayende, I guess you're right for ISV scenarios (like for your Profiler). But I'm not sure if the less efficient solution is the way to go in enterprise environments. Less efficient solutions might have some hidden costs as well. Typical enterprise applications usually last for decades, so investing upstream will eventually pay off downstream.

Now, with Ayende's blessing, let's go and create some really bad software.

Don't forget calorie efficiency

Frans,

Changing something doesn't imply breaking changes. I release several times a day, sometimes, it works.

And if I don't fix it for v2.0, it probably isn't important enough to BE fixed.

Frans, I make these decisions, all the time.

Dhananjay ,

If your product isn't on the market, your competitors have more than an edge.

Edin,

I thought more about ent. scenarios than ISV ones, actually.

It is often easier to fix such things in ent. environments.

Alex,

sigh, now you know why I worry about whether I should post stuff or not.

Premature optimization takes us to a more efficient solution AND bad software too. We are grown people; we are talking about having well-designed software that could be optimized later, not doing something inefficient just because.

Interesting perspective, although I think you are missing context as the importance of efficiency varies for different classes of software.

Admittedly, most of the time it's not important if you're an enterprise developer connecting web apps to an RDBMS. In most of these cases developer efficiency and time to market are more important for a business, and time to develop the feature is the only ROI worth measuring.

But if you're developing software that is frequently run (i.e. as a library or framework developer) or intended to be used on a day-to-day basis, then responsiveness and performance are very important for user satisfaction. This is where economies of scale make sense and it becomes a good candidate for optimization.

Also, for 'service software' that needs to be available 24/7, hosted on the internet and available to thousands of users, efficiency becomes very important. A data-centre on the Internet is much more expensive to run than an in-house server room. You usually need 2 of everything and the cost of bandwidth, power, rack-space, networking equipment, etc. can quickly dwarf the cost of an expert developer.

Personally I think performance is highly underrated, and the difference between a UI completing in a few ms and one that takes seconds to run is of paramount importance; it's usually the difference between software that is a joy to use and software that is not used at all. This is a concept not lost on Google, where performance and efficiency are paramount.

Ahhh... the old warhorse, the reget cost. If we'd just done it properly the first time then we wouldn't regret the investment we're making now. This is wrong on so many levels.

The trick with business is to deliver in a timely fashion, rather than deliver the perfect solution too late for it to be of any use. We engineers seem to forget that, driven as we are by the intellectual challenge that creating the perfect solution provides us.

Peter - your point would have been better made if you'd spelt 'regret' correctly in the link. reget cost I was thinking "cost of stale cache reads" or something like that.

Demis,

Actually, data centers on the internet tend to be cheaper (GoGrid, EC2).

And handling thousands of users is EASY, you don't think about perf at that level

Cloud computing is only cheaper if you need entry level servers with slow and low bandwidth. I was talking about if your software needs to run on your own data centre where you have your POP connected directly to tier 1 servers on the internet. In this case you need to buy and maintain your own routers, switches, backup, redundancy, etc. Needing 2 or 3 * extra servers because your software is inefficient adds up to a significant cost.

@Joseph, "reget" -> "regret" :( That it would, that it would.

The scary thing is, I'm having a non-trivial number of GMs ask me what the "regret cost" is.
