Benchmark cheating, a how to

I already talked about the benchmark in general, but I want to focus for a bit on showing how you can carefully craft a benchmark to say exactly what you want.

Case in point, Alex Yakunin has made this claim about NHibernate:

NH runs a simple query fetching 1 instance 100+ times slower when ~ 10K instances are already fetched into the Session

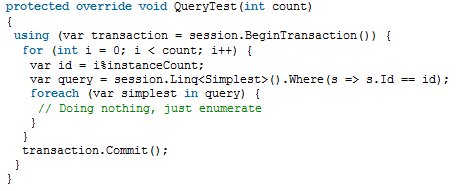

I am assuming that he is basing his numbers on this test:

And you know what, he is right. NHibernate will get progressively slower as more items are loaded. This is by design.

Huh? NHibernate is slower by design? WTF?!

Well, it is all related to the way you build the test. And this test appears to be specifically designed to make NHibernate look bad. Let me go back a bit and explain. NHibernate's underlying premise is that you live in an OO world, and we take care of everything we can in the RDBMS world. One of the ways we do that is by automatically flushing the session if a query is performed that may be affected by changes in memory.

Huh? That still doesn’t make sense. It is much simpler to understand in code.

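```csharp
using (var tx = s.BeginTransaction())
{
    var foo = s.Get<Foo>(1);
    foo.Name = "ayende"; // changed in memory only, not yet written to the DB
    // NHibernate flushes the pending change before running this query
    var list = s.CreateQuery("from Foo f where f.Name = 'ayende'").List<Foo>();
    Assert.Contains(list, foo);
    tx.Commit();
}
```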

The test above will pass. When the time comes to execute this query, NHibernate will check all the loaded instances that may be affected by this query, and flush any changes to the database.

NHibernate is smart enough to check only the loaded instances that may be affected, so in general, this is a very quick operation, and it means that you don’t have to worry about the current state of operations. NHibernate manages everything for you.
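The flush-before-query behavior described above can be modeled in a few lines. This is a hypothetical, language-neutral sketch in Python rather than C#: `Session`, `get`, `flush` and `query_by_name` are made-up stand-ins, not NHibernate's API, and a plain dict plays the role of the database.

```python
class Foo:
    def __init__(self, key, name):
        self.id = key
        self.name = name


class Session:
    """Hypothetical stand-in for an ORM session that auto-flushes on query."""

    def __init__(self, db):
        self.db = db          # the "database": {id: {"name": ...}}
        self.loaded = {}      # identity map: id -> Foo
        self.snapshots = {}   # id -> state as it was when loaded or last flushed

    def get(self, key):
        if key not in self.loaded:
            row = self.db[key]
            self.loaded[key] = Foo(key, row["name"])
            self.snapshots[key] = dict(row)
        return self.loaded[key]

    def flush(self):
        # Only one entity type exists here, so every loaded entity may be
        # affected by the query and has to be compared with its snapshot.
        for key, entity in self.loaded.items():
            state = {"name": entity.name}
            if state != self.snapshots[key]:
                self.db[key] = dict(state)
                self.snapshots[key] = dict(state)

    def query_by_name(self, name):
        self.flush()  # push in-memory changes first so the query sees them
        return [self.get(key) for key, row in self.db.items() if row["name"] == name]


db = {1: {"name": "oren"}, 2: {"name": "ayende"}}
session = Session(db)
foo = session.get(1)
foo.name = "ayende"                       # changed only in memory
result = session.query_by_name("ayende")  # the auto-flush happens here
```

Without the `self.flush()` call, row 1 in the dict would still say `"oren"` and `foo` would be missing from the result.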

However, the QueryTest above uses this feature of NHibernate to show that it is slow. All queries are made against the same class, which forces NHibernate to perform a dirty check on every single loaded object. With more objects loaded into memory, that dirty check is going to take longer.
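To see why the cost grows with the size of the session, here is a hypothetical snapshot-comparison dirty check in Python (entities are plain dicts, and the names are illustrative, not NHibernate internals). Nothing is dirty, yet every field of every loaded entity is still compared, so each auto-flushing query costs O(N) in the number of loaded entities, and a loop that issues one query per iteration pays that cost every time.

```python
def dirty_check(loaded, snapshots):
    """Compare every field of every loaded entity with its snapshot.

    Returns the ids of dirty entities and the number of field comparisons made.
    """
    dirty, comparisons = [], 0
    for key, entity in loaded.items():
        for field, original in snapshots[key].items():
            comparisons += 1
            if entity[field] != original:
                dirty.append(key)
                break  # one changed field is enough to mark the entity dirty
    return dirty, comparisons


def comparisons_per_query(n_loaded, fields=3):
    # n_loaded clean entities, each with `fields` fields
    loaded = {i: {f"f{j}": j for j in range(fields)} for i in range(n_loaded)}
    snapshots = {i: dict(state) for i, state in loaded.items()}
    return dirty_check(loaded, snapshots)[1]


for n in (100, 1_000, 10_000):
    print(n, comparisons_per_query(n))
```

With nothing dirty, the count is simply N × fields, so it grows linearly as more entities are loaded.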

Now, there is no real-world scenario where code like that would ever be written (except maybe as a bug), but the results of the “test” are being used to say that NHibernate is slow.

Of course you can show that NHibernate is slow if you build a test specifically for that.

Oh, and a hint, session.FlushMode = FlushMode.Commit; will make NHibernate skip the automatic flush on query, meaning that we will not perform any dirty checks on queries.
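The effect of the flush mode on the number of dirty checks can be sketched as follows. The enum mirrors the names of NHibernate's `FlushMode.Auto` and `FlushMode.Commit`, but everything here is hypothetical Python, not NHibernate code: the session only counts how often it would scan its loaded entities.

```python
from enum import Enum


class FlushMode(Enum):
    AUTO = "auto"      # flush before queries that might see stale data, and on commit
    COMMIT = "commit"  # flush only when the transaction commits


class Session:
    """Hypothetical session that only counts how often it dirty-checks."""

    def __init__(self, flush_mode=FlushMode.AUTO):
        self.flush_mode = flush_mode
        self.dirty_checks = 0

    def _flush(self):
        self.dirty_checks += 1  # stands in for scanning every loaded entity

    def query(self):
        if self.flush_mode is FlushMode.AUTO:
            self._flush()
        return []  # query execution itself is out of scope here

    def commit(self):
        self._flush()


def checks_for(mode, queries=1_000):
    session = Session(mode)
    for _ in range(queries):
        session.query()
    session.commit()
    return session.dirty_checks
```

The trade-off is correctness: with commit-only flushing, a query no longer sees unflushed in-memory changes, so the test from the start of this post would no longer be guaranteed to pass.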

But that is not really relevant. Tight loop benchmarks for frameworks as complex as OR/Ms are always going to lie. The only way to really create a benchmark is to create a full blown application with several backend implementations. That is still a bad idea, as there are still plenty of ways to cheat. All you have to do is to look at the PetShop issues from 2002/3 to figure that one out.

Comments

That makes me think of this blog. This is advertisement for a commercial tool hidden behind a so called post about improving nhibernate performance.

www.orasissoftware.com/.../...rmance-problems.aspx

Even if you go to the blog homepage, it's really well hidden among technical articles.

http://www.orasissoftware.com/blog/

I admit I fell for this trick and wasted time reading it.

Did you send somebody the invoice for your advice and course?

You are explaining what a lot of NH users know and what beginners ask in NH's forum. Whoever is moved by bad intentions should PAY to learn how a very good persistence layer should work.

I'm not even sure what a benchmark for OR/M means.

If you really are interested in the performance of the OR/M, you aren't loading 10k instances into the session, and if you are loading 10k instances into the session, you don't care about performance. So what is the point in benchmarking this example... it is a catch-22.

I guess you could argue that an OR/M benchmark measures whether it does what you think it should do, but good luck benchmarking that.

Ayende, you know that the goal of this test is to measure the speed of compiling and executing LINQ queries. If it does not work correctly, it will surely be changed. Why do you think the only thing you can do in this case is to accuse its authors of cheating?

Then I must apologize in advance for this beginner question.

I'm not sure I understand.

Inside the scope of a session, when you issue a query against objects that have already been loaded by a previous query and then manually modified, do you get the in-memory objects and not the real database ones?

Does this company deserve this much publicity on your blog with so many visitors from the .NET community and the NHibernate community in particular?

Oren, the only difference between your code and the benchmark test code is that you're assigning foo.Name = value, which obviously should be flushed to the database. And your statement is valid here: NH needs to flush this out to the database, so it is slower. But the benchmark test code does not alter the object in any way. Why is it slow, then? I am not sure I am getting it...

Vadi -

I am no expert, but I believe the issue isn't whether or not the object was changed, it is that NHibernate has to check if the object was changed.

@Jim, the way I understand it:

When you query the DB with a session, the session first updates the DB so that the DB state equals the in memory state of your NH objects in the same session. And then you get consistent query results. But, in the same session, two DB results with the same PK (and from the same table/class etc) will become the same object in the NH session.

So in Ayende's Foo.Name example:

You get all the rows with Foo.Name == ayende

The row with ID=1 was updated just before the query, so that row gets returned too.

But Foo with ID=1 was already in the session, so you get the in memory object for that.

And then you get new objects for all the other rows with Name==ayende.

If someone thinks I'm wrong, please correct me because I'm not a NH expert, just a casual user.

Fabio,

A good idea, but I don't think that I'm able to trust the business practices of the company in question

Vadi,

NHibernate doesn't know if the values were changed or not.

So it has to check before each query.

@Alex Kofman,

The behavior from the authors so far.

@Jim,

NHibernate implements Unit of Work, as such, if you load the same row by two different means (for example, using two different queries) you will get the same instance, yes.
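The identity-map behavior confirmed here (which also answers the question above about getting in-memory objects rather than database ones) can be sketched in Python. This is a hypothetical illustration, not NHibernate's implementation: one instance per (class, primary key), and an already-loaded instance wins over a freshly read row.

```python
class Foo:
    def __init__(self, id, name):
        self.id = id
        self.name = name


class IdentityMap:
    """Hypothetical sketch: at most one in-memory instance per (class, key)."""

    def __init__(self):
        self._entities = {}

    def materialize(self, cls, row):
        key = (cls, row["id"])
        if key not in self._entities:
            self._entities[key] = cls(**row)
        # If the row was already loaded, the existing instance (including any
        # unflushed in-memory changes) is returned and the fresh row is ignored.
        return self._entities[key]


session_map = IdentityMap()
first = session_map.materialize(Foo, {"id": 1, "name": "ayende"})
first.name = "changed in memory"
second = session_map.materialize(Foo, {"id": 1, "name": "ayende"})  # e.g. another query
```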

"Ayende, you know that the goal of this test is measure speed of compiling and execution LINQ query."

Have you ever read up on why Linq to SQL has compiled queries to begin with? Because with very complex queries, their internal visitor setup sometimes performs a lot of graph traversal.

This means that with the simple queries you're running, there's no way in h*ll you're going to measure any linq query compiling speed. If you want to measure that, create a very big complex query, with a false resulting predicate (not a constant) and run that.

I also fail to see why you want to fetch data with linq for tests which measure updates, if the o/r mapper framework also supports another way of querying the db....

Oren, it was never our intention to show that it's slower. Especially by... Now we have implemented all the things you (and others) pointed out. The new NH results are:

Performance tests (NHibernate, op/s):

| Operation | 100 items | 1000 items | 5K items | 10K items | 30K items |
|---|---|---|---|---|---|
| Create | 8909 | 9438 | 9647 | 9673 | 13837 |
| Update | 13655 | 17260 | 16727 | 16714 | 16300 |
| Remove | 16013 | 18505 | 18099 | 18030 | 17729 |
| Fetch | 6780 | 6987 | 7047 | 7065 | 7031 |
| Query | 1161 | 1180 | 1191 | 1190 | 1183 |
| Compiled query | 1161 | 1180 | 1191 | 1190 | 1183 |
| Materialize | 31847 | 42992 | 41045 | 39796 | 37724 |

Linq tests:

Passed: 28 out of 100; failed with assertion: 4.

Btw, let's see whether the community considers some of the things we did for NH here to be hacks.

The code is already @ google code: https://ormbattle.googlecode.com/svn/trunk

If anyone wants to get access to the repository, just notify us. We'll update code.ormbattle.net today as well.

"Espacially by" -> "Especially by such dirty tricks as "special tests"".

Concerning hacks: e.g. I wouldn't accept the (i % 25) trick, but we implemented even this to make everything work faster:

Not sure about the community's opinion; let's see.

Alex,

IStatelessSession

As I've mentioned, we measure peak performance in every test.

But I agree, complex query compilation would be an interesting case as well. It should show the lowest performance, as expected.

Btw, do you understand it isn't good for you:

We & EF support compiled queries. They show nearly the same subsequent execution performance.

You don't. And here it will just degrade.

Alex,

Regarding the new perf numbers. Did you read my previous post, or this one?

Tight-loop benchmarks are a nice way to lie. They are meaningless because they ignore what actually happens in real-world applications.

Show me a benchmark with real-world concerns:

Reasonable data size (hundreds of thousands of rows, minimum)

Real-world data load (create some entities, update some entities, read some entities) that matches what people actually do: usually CUD is < 10 and read is < 50.

Database located on a different physical machine

Then you can start getting some more realistic numbers.

Yep, we did. It is used in the Update & Delete tests, but in the Insert test a regular Session gives better results.

And it's unused in the materialization test:

there is no LINQ for it anyway

moreover, I think it's not a good idea to measure materialization without an identity map (I'm not sure whether IStatelessSession maintains one; does it?).

Yep, we already decided to implement standard TPC tests. Are they ok for you? :)

Frankly speaking, I'm 100% sure the results will be ~ the same. We've measured all the basic operations, which will consume 90% of the time. Yes, the difference there won't be as dramatic (because the RDBMS will do all the dirty work, consuming ~90% of the time), but as I've said, there is no reason to expect any changes.

"Btw, do you understand it isn't good for you:

We & EF support compiled queries. They show nearly the same subsequent execution performance.

You don't. And here it will just degrade."

do YOU understand that my query pipeline might just be very, very fast? My linq provider can compile any expression tree to query elements within 1ms or faster, and the DQE engine that converts query elements to SQL is also very, very quick (as in: it isn't even considered an option to optimize when you profile the code, as it's not noticeable as a slowdown in the complete pipeline).

So tell me, why should I add the overhead to cache stuff if I don't have to? Caching query compilation results requires overhead, memory, extra (error prone) code etc.

It will degrade? I don't see how. But that's of course one of my many 'serious mistakes', eh? ;)

And not good for me... I think the opposite. As my linq provider uses a few passes, at most it uses 10 visits for the whole tree or subtrees. That's it. Very quick, no compiled query mess with all the restrictions that come with it, just plain fast code. And if people believe you instead of me, they can always use our own query api, which doesn't require a parser at all (as the objects are already in ast form and require 1 visit).

I know my code very well, Alex. You don't, not even a single bit. The tests you still haven't removed, clearly show that. You didn't even spend a single second reading example code in the manual. Now, you're lecturing me where my code is slow? You should book a slot at comedycentral.com

Btw, it's nice that you (I mean you and Frans), on seeing very good competitors, are taking the tactic of saying "everything they measure is wrong".

Do you really believe such results on LINQ test can be just a result of some tricks? Oren & Frans, I think you know better than almost anyone else this is simply impossible, because LINQ is too complex.

The same is true of performance. We compete well not just with NH or LLBLGen. Some of the tested frameworks support a wide variety of ways of storing entities in RAM, from very simple ones to complex ones. And what? Does this play a significant role? E.g. NH entities are very simple, but it is 10+ times slower on materialization. The same is true for CUD.

So... In your place, I'd just say "guys, you've astonished us". Instead, you started defending your position by saying "everything is not real"...

Yes, it's absolutely clear.

But have you seen this number for compiled queries? ~ 0.1ms.

If query is cached, it runs ~ as fast as any fetch. If not, it runs 5-10 times slower.

So there are reasons for doing this. I won't comment on your stuff about RAM waste. Just imagine how many distinct queries you'd actually have to cache.
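The trade-off argued in this exchange is where the translation cost is paid. Here is a hypothetical Python sketch: `Translator` stands in for a LINQ-to-SQL pipeline (it just formats a string and counts calls), the uncompiled path translates on every execution, and a compiled query translates once and then only binds parameters. None of these names are DataObjects.Net, EF, or LLBLGen APIs.

```python
class Translator:
    """Stand-in for an expression-tree-to-SQL pipeline; counts its work."""

    def __init__(self):
        self.translations = 0

    def translate(self, table, column):
        self.translations += 1
        return f"SELECT * FROM {table} WHERE {column} = ?"


def execute_uncompiled(translator, value):
    # Every execution pays for translation again.
    return translator.translate("Item", "Id"), (value,)


class CompiledQuery:
    # Translation happens once; each execution only binds parameters.
    def __init__(self, translator, table, column):
        self.sql = translator.translate(table, column)

    def execute(self, value):
        return self.sql, (value,)


uncompiled = Translator()
for i in range(1_000):
    execute_uncompiled(uncompiled, i)

compiled_translator = Translator()
query = CompiledQuery(compiled_translator, "Item", "Id")
for i in range(1_000):
    query.execute(i)
```

Whether the saved translations matter depends on how long one translation takes relative to the database round trip, which is exactly the point in dispute here.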

Alex,

Do you hear yourself?

Frans says that a query takes 1 ms to execute, you say that compiled query takes 0.1ms

Since it is all about the SQL generation, you should put it into context

The next operation is to make a _remote call that will probably touch the disk_. We are talking about tens and hundreds of ms here.

At that point, there is no sense at all in even trying.
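To put those numbers in context, here is the arithmetic as a small Python helper. The inputs are the figures from this thread (about 1 ms for Frans's query pipeline, about 0.1 ms for a compiled query) plus 10 ms and 100 ms as assumed endpoints for "tens and hundreds of ms" of round trip. A shorter round trip, such as a database fully cached in RAM, raises the share.

```python
def translation_share(translate_ms, roundtrip_ms):
    """Fraction of end-to-end query time spent translating the query."""
    return translate_ms / (translate_ms + roundtrip_ms)


for translate_ms in (1.0, 0.1):
    for roundtrip_ms in (10.0, 100.0):
        share = translation_share(translate_ms, roundtrip_ms)
        print(f"{translate_ms} ms translation + {roundtrip_ms} ms round trip: {share:.1%}")
```

With a 10 ms round trip, a 1 ms translation is about 9% of the total and a 0.1 ms one about 1%; at 100 ms they are about 1% and 0.1%.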

Linq to SQL, for example, is known to take longer to compile a query than to execute it. That is why they needed this hack.

And it is a hack, make no mistake.

Agreed, CC in EF simply sucks. Have you seen our own syntax?

```csharp
var compiledQuery = Query.Execute(() =>
    from s in Query<Simplest>.All
    where s.Id == id
    select s);

foreach (var item in compiledQuery)
    ...
```

Isn't it nice? :) You can even put this query to static variable.

Guys, how frequently is the very same data fetched? In a typical application (without good in-memory result caching) it's frequently fetched 2-3 times, since its users normally deal with nearly the same data.

So if the first query hits the disk, the others may not. And the reply time in this case can be very fast.

Ok, final 2 arguments:

can you explain why the guys from the EF team implemented this?

so you're assuring me that e.g. 1000 fetches per second is ok as well?

Alex,

What kind of database are you working on that the entire result set can be kept in memory?

And thousands of database queries per second is _bad_. It means that you are hammering on the DB.

Most of the time, you would serve data out of the cache, and won't hit the DB _at all_.

Pff... Are you serious?

How many databases don't fit in RAM in the real world? It's pretty well known that many database servers are equipped with enough RAM to cache the whole database, or at least the working set (~ 10%). So they have a good chance of not hitting the disk at all.

And you're saying it's ok to spend e.g. 5-10 times more in this case.

Btw, I think it's better to stop discussing this ;) I'm pointing you that others do implement this. So there are reasons for this. You don't listen, and arguments you provide are FUD.

Ok, can you admit compiled queries are helpful in some cases? ;)

Um, the vast majority of them.

I am not sure what kind of applications you work on, but when you deal with databases that have millions of rows in many tables, you don't assume in-memory access.

So what percentage of applications are like that? Is that your assumption?

Just FYI:

1M (row count) * 1K (row size) * 1000 (tables, indexes) = 1 TB.

Servers with 64 GB RAM are pretty normal now.
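The arithmetic above, spelled out in Python with decimal units assumed (1K read as 1,000 bytes and 64 GB as 64 × 10^9 bytes; these readings are assumptions):

```python
rows_per_object = 1_000_000   # "1M (row count)"
row_bytes = 1_000             # "1K (row size)", read here as 1,000 bytes
objects = 1_000               # "1000 (tables, indexes)"

database_bytes = rows_per_object * row_bytes * objects
ram_bytes = 64 * 10**9        # a 64 GB server

print(database_bytes)               # 10**12 bytes, i.e. 1 TB
print(ram_bytes / database_bytes)   # fraction of the database that fits in RAM
```

Under these figures the 64 GB server holds about 6.4% of the data, which can be compared with the ~10% working-set figure mentioned earlier in the thread.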

"Btw, I think it's better to stop discussing this ;) I'm pointing you that others do implement this. So there are reasons for this. You don't listen, and arguments you provide are FUD."

EF team also implemented a graphical designer similar to L2S which can't deal with > 100 entities. In v4 it's the same.

And your argument was?

During profiling of our code, we can see where the bottlenecks are, which are simply around the datareader or in the ado.net provider + db, so out of our hands: i.o.w.: the vast amount of time spent from query definition to result is in the DB and not in the query parsing/SQL production subsystem, on the contrary.

But I get a 'your runtime lib has more duplicate code because it compresses better' deja-vu here. If you think you're the one who knows better than myself where our pipeline needs improvement, good for you.

Btw, the site still hasn't been updated.

@Alex, perhaps your tool can load data faster than the others. It's great to know, BUT I haven't found any good reason why loading lots and lots of data off the database server across the network would yield any benefit. What are you trying to do? Data replication? Stealing data off a server? Or do you just want to solve a business problem?

If you are trying to solve a business problem and you don't trust your database server to do set operations, then there is no point in choosing it in the first place.

Back to the O/RM stuff: to me the best thing about an O/RM is that I don't have to worry about CRUD operations that much, and NH's Unit of Work model serves that well.

@Alex

What is the advantage of loading a table one row at a time?

I don't think so. Frans, you know perfectly well what to do, as with the O(N^3) vs O(N) case. I simply can't change your mind ;)

In comparison to what?

This code example is using an individual query to load each row in some table. Why not just use a single query?

You're pointing out their drawbacks, while I pointed out their advantage. I understand you're trying to belittle them this way. So you got my reply about O(N^3).

Will we continue talking the same way? :)

To measure peak materialization speed. What else can be done to measure it?

I just couldn't see myself doing this. I know that these aren't supposed to be real world examples, but there would be more value in a benchmark that demonstrates something I would actually do. If you want this or/m battle marketing campaign to take off at least make the examples a bit more compelling. Seeing that your framework is good at something that I don't need doesn't really get me all that excited about it.

Oren, I'm searching for a good "production quality" sample of NHibernate usage. The last time I used NHibernate was about 3 years ago, and I'm afraid all that knowledge is lost =) Can you recommend any serious sample application?

"I don't think so. Frans, you perfectly know what to do, as with O(N^3) vs O(N) case. I simply can't change your mind ;)"

But who says it's O(N^3) ?

If you think compiled queries have no overhead, I think you should look into the code again. Compiled queries might be faster for tight loops, but IMHO the effort to make them bug free (Linq to SQL saw most of its bugs related to compiled queries, and users still aren't really happy with the system overall), the overhead, etc. are not really worth it IF the linq provider + SQL production engine is simply very fast. As we don't generate IL at runtime, we don't benefit one bit from compiled queries anyway, so we need to optimize our pipeline very well. Luckily our query API is designed as an AST, so all that's really 'slow' is the linq provider. As parsing linq queries can be done in several passes if you do a lot of work per pass, rather than through many unnecessary visits (like MS does), it's not really that much overhead (as in: it's not really noticeable in the profiler).

Now, you can jump up and down all you want about 'performance', but again, I do know where the time of a query is consumed, as I've seen the profile results and used them to optimize hotspots, and unfortunately for you, there's not that much overhead burned up there, so compiled queries don't really gain much here, as the vast majority of the time is spent in the DB, in the network, etc.

I'd rather spend time making eager loading more efficient with tunable code paths, or on excluding fields in queries.

That's also the irony of compiled queries: L2S and EF have compiled queries to make things faster, but at the same time their eager loading systems are pretty bad, so way more performance is wasted there. Of course, you will say you have both covered, but as I explained in another comment... it's not really relevant anymore: 1, 2, 10ms... It won't make you king of the hill.

What matters is whether the developer can write the code with proper support, manuals, and enough features not to worry about workarounds, etc. The services around the data access matter, not the raw query performance itself. Performance is a slippery slope after all: there's always another concession you can make to get even more performance, by taking some crap for granted. That's not what O/R mapping is all about. Sure, the code shouldn't be dog slow, but none of them are these days.

I trust it, but: CUD test does not measure this. Explanation is here: ormbattle.net/.../...ur-tests-are-unrealistic.html

"To fully explain this adopting this to our test suite, they propose to use executable DML to measure update & delete time. What they're going to measure by this way? They are going to measure the performance of SQL Server executing these operations, but not ORM performance. So such test won't be related to ORM at all."

Finally, trends show more and more logic is moving from the RDBMS to the BLL. RDBMS vendors integrating the CLR just proves this. Distributed databases (which provide no advantages for such operations) are used more and more widely. So the need for such operations is decreasing. That's just FYI.

I agree with this.

Agree with this.

We don't support eager loading so far - i.e. you should do this fully manually. But this part is in development ;}

I understood the following:

Some people clearly understand such tests as CUD & materialization.

Others need some real tests, and this site completely misses this part.

So as I've mentioned, we already decided to add standard TPC tests there. Hopefully this will fix the issue.

"We don't support eager loading so far - i.e. you should do this fully manually. But this part is in development ;}"

you don't ? I'm very surprised! Also not in nested queries in linq projections?

And you have a big mouth about linq provider quality concerning my work (which you suggested would fail if it was handed non basic queries) ? :)

If something is good for every day real life performance it's eager loading. Example:

```csharp
var q = (from c in metaData.Customer
<orderentity(c => c.Orders).OrderByDescending(o => o.OrderDate).LimitTo(2)
```

filtered, ordered eager loading graphs, in linq.

Doing that by hand is really, really expensive and slow. In real-life scenarios, that is. Perhaps you should add 50 or so linq queries which fetch graphs, filter the subgraphs, etc. That would skew the results a bit, eh? :)

Surely eager loading is an essential feature for OR mappers, especially when we are talking about ORM performance. It will be interesting to compare your implementation with others. I personally would like to see some comparison or overview.

I fully agree with this. But:

There is no standard for this, i.e. it's your own extension of the standard. How can we test this?

And the implementation of prefetch and the quality of the LINQ standard implementation are two different things. We test the second one, since it's obvious how to test it, and it's meaningful. Do you know how to do the same with prefetch?

So when it appears in DO4 (I think we need ~ a month to get this fully working), obviously, we won't include it in any such tests.

Finally, although I said we don't support EL, we support one more nice feature: while materializing a query result, we load & materialize all the entities whose properties are exposed in the final projection. This provides some support for EL, and for now this is acceptable.

I think Oren will confirm that NH's LINQ is even worse in this respect, but this is also acceptable.

But does your eager loading also tweak sub node queries on the fly? I.e.: to fetch subnode (by filter on parent), do you use simple IN clauses when possible and if not switch to subqueries?

Getting eager loading working at first isn't that difficult; getting it to work with different graph setups (e.g. lots of parents and a single child, not a lot of parents and many children, etc.) is another story.
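The question above about switching between IN clauses and subqueries can be sketched like this. It is hypothetical Python/sqlite3: the cut-off value, the schema, and the function name are assumptions, not how LLBLGen or DataObjects.Net decide. Both forms return the same children; an IN list only works for single-column keys and grows with the parent set, which is the limitation Alex mentions below.

```python
import sqlite3


def child_query(parent_ids, parent_filter, filter_params, max_in_values=50):
    """Return (sql, params) for the children of the filtered parents.

    Small parent sets are sent as an IN list of keys; larger ones re-apply the
    parent filter as a subquery. max_in_values is a hypothetical cut-off, and
    parent_filter is trusted SQL text in this sketch.
    """
    if 0 < len(parent_ids) <= max_in_values:
        marks = ",".join("?" * len(parent_ids))
        return (f"SELECT customer_id, id FROM orders WHERE customer_id IN ({marks})",
                list(parent_ids))
    return ("SELECT customer_id, id FROM orders WHERE customer_id IN "
            f"(SELECT id FROM customer WHERE {parent_filter})",
            list(filter_params))


conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE customer (id INTEGER PRIMARY KEY, country TEXT);
    CREATE TABLE orders (id INTEGER PRIMARY KEY, customer_id INTEGER);
    INSERT INTO customer VALUES (1, 'DE'), (2, 'SE'), (3, 'DE');
    INSERT INTO orders VALUES (10, 1), (11, 2), (12, 3), (13, 3);
""")

parent_filter, params = "country = ?", ["DE"]
parents = [r[0] for r in conn.execute(
    f"SELECT id FROM customer WHERE {parent_filter}", params)]

for limit in (50, 1):  # 50 -> IN list of keys, 1 -> subquery on the parent filter
    sql, args = child_query(parents, parent_filter, params, max_in_values=limit)
    print(sorted(conn.execute(sql, args).fetchall()))
```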

I really am surprised that you didn't implement this must-have o/r mapper feature. Especially in distributed scenarios, eager loading is essential.

I don't know all the details (Alexis Kochetov is the guy responsible for this), but I'm 100% sure there are no IN queries:

List of items there is normally limited

This works only for simple keys.

On the other hand, I don't know exactly what happens now. Let's leave the discussion of how this works until it is fully done. As I said, what we have currently looks more like a trick than a real implementation.

Concerning the complexity of implementing all this stuff: I can mention that our query transformation pipeline is likely one of the most advanced ones. An example of one of the transformations we do is here: blog.dataobjects.net/.../...n-pipeline-inside.html (that's one more reason for the test failures on frameworks other than EF & DO4)

So I think we'll be able to implement EL. And I fully agree, this is a "must have" feature for any serious player here.

So: http://ormbattle.net/index.php/blog.html

Oren, if there will be any further suggestions on how to improve NH results, you're welcome.

As well as any other people ;)

Thanks for removing us.

About the Apply stuff... only SQL Server has that operator, and it's only needed if you do cross-join operand filtering, which is actually pretty rare, IMHO. I have to check what we do internally (I think we either give up or try to rewrite it to something which does work, if possible with a custom filter in the ON clause).

Well, yes. Apply is supported only by SQL Server. On the other hand, this is quite powerful construction: e.g. its presence eliminates the need of joins.

Frankly speaking, I don't see any reason for other RDBMSs not to support it: almost all of them support subqueries (the underlying processing there must be almost the same as for APPLY), but we were unable to find an APPLY analogue for either Oracle or PostgreSQL.

Anyway, its absence makes ORM vendor's life much more complex.

FallenGamer,

Look at Sharp Architecture as a good example.

Hi Oren,

I have to confess that again, I don't think I get it:

You say that 1) the reason NH is slow is that it has to check if it needs to flush and 2) the reason this flush is slow is that it needs to go check all the objects in the identity map and that 3) The reason all this is good is because it allows NH to handle the example code in your post correctly.

But (as Alex has pointed out) other frameworks also handle your code example correctly, but without the non-linear overhead.

Thus, it can't be that feature (being able to handle the code example you show correctly) which is in itself responsible for the non-linear overhead - rather it must be either:

1) NH has made some suboptimal architectural decisions (and hey, who doesn't make those, I won't try to sound innocent!) or

2) There is some other feature that NH can do but that the other frameworks with linear response times can't. If this is the case, then I am really curious what that feature is, because this very design decision of N/Hibernate has puzzled me for as long as I can remember. Unless I am mistaken, the "feature" that it actually enables is that it allows you to use "new()" to create domain objects, so it does not require using factories (for runtime proxying of all domain objects) and also does not use a compile-time AOP solution that injects the necessary code for dirty tracking right into the original domain objects... though tbh, whether not using something like that can be thought of as a feature is not clear to me. Please correct me if I am wrong?

/Mats

Mats,

The reason for it being slow is that NH needs to check if a flush is necessary.

And the reason that it is O(N) operation is because NHibernate doesn't place constraints on persistent classes.

As such, dirty checking must be done by NHibernate, and that requires scanning all persistent entities and comparing all their fields.

EF, for example, requires a base class and managed fields, so it can notify the context about dirty checks.

NH needs to perform the check explicitly.

This is a trade-off for making the model freer from constraints, and something that only shows up as a problem when you start dealing with a large number of entities.

There are several ways of working around that, one is the flush mode setting, the second is to override NHibernate's dirty checking using the interceptor.
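The trade-off described above can be made concrete with a hypothetical Python sketch: a snapshot tracker that works with plain objects but must compare every loaded entity, and a notifying tracker that only sees changed entities but requires entities to inherit a base class (roughly the EF approach described above). These classes are illustrative only; they are not NHibernate, EF, or interceptor APIs.

```python
class PlainEntity:
    """No constraints: an ordinary object with no base class or hooks."""

    def __init__(self, **fields):
        self.__dict__.update(fields)


class SnapshotTracker:
    """Dirty checking by comparison: O(N) in the number of loaded entities."""

    def __init__(self):
        self.entries = []  # (entity, snapshot of its fields when loaded)

    def attach(self, entity):
        self.entries.append((entity, dict(vars(entity))))

    def find_dirty(self):
        return [e for e, snapshot in self.entries if vars(e) != snapshot]


class NotifyingTracker:
    """Dirty checking by notification: O(number of changed entities)."""

    def __init__(self):
        self.dirty = {}  # id(entity) -> entity, in the order they changed

    def mark_dirty(self, entity):
        self.dirty[id(entity)] = entity

    def find_dirty(self):
        return list(self.dirty.values())


class TrackedEntity:
    """The constraint: entities must inherit this so every write is reported."""

    def __init__(self, tracker, **fields):
        object.__setattr__(self, "_tracker", tracker)
        for name, value in fields.items():
            object.__setattr__(self, name, value)  # initial load is not a change

    def __setattr__(self, name, value):
        object.__setattr__(self, name, value)
        self._tracker.mark_dirty(self)
```

The snapshot version's `find_dirty` walks every attached entity on each call, while the notifying version's cost depends only on how many entities were actually written to.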

And if there are no constraints, it uses the persistence-by-reachability pattern. Quite costly, yes? E.g. I'd prefer to forget about "persistence for every built-in type" (this is not true anyway) and deal with tracking or base types rather than with such issues (just disabling flushes before queries isn't a solution - it's a workaround).

You know, it's possible to implement this without a base class. But you must communicate with tracking service in this case.

"And the reason that it is O(N) operation is because NHibernate doesn't place constraints on persistent classes."

And these (lack of) constraints are that N/H doesn't rely on a factory nor a compile time weaver? So I was correct to assume that is the "feature" responsible for the non-linear times, then? ;-)

To recap: The reason NH is slow here is that it can't do dirty tracking by relying on notifications from the domain objects (because it doesn't want to rely on factories or compile time weavers?). Instead you have to do dirty tracking by comparing the states of all the loaded objects to their original values. This of course gives the consequence that it becomes slow in scenarios where a lot of objects are loaded and you have to check them all. That N/H wants to check if it should flush to the DB in this very scenario is another design decision of N/H that has continued to puzzle me (*) but it is not really the issue here - the issue is that the decision to default to "comparison based" dirty tracking becomes slow whenever you have to check a lot of loaded objects, and this is just one example where this issue becomes a concern, since that check is triggered.

Again, using a compile time weaver (or factories for runtime subclassing) so that you'd get notification based dirty tracking would completely solve this, right? (Yes, N/H might still want to flush to handle the example in your post, but then this would be fast and linear)

"and something that only show up as a problem when you start dealing with large number of entities."

Of course. But that does happen eventually when use cases keep growing hairier and databases keep growing new tables ;-) But you are completely right of course that a lot of the time, this just isn't an issue!

"the second is to override NHibernate's dirty checking using the interceptor."

By using a compile time weaver on the side? Or how do I get my domain objects to issue the notifications?

Now, of course supporting the scenario where for some reason you can't use factories or weavers is nothing to be sneezed at - on the contrary, I think it is quite cool that there's a framework that does that (it certainly speaks to the purist in me!) but if NH really supports a notification based solution "out of the box" then I guess that's definitely something that should be taken into account by Alex's coders, because I imagine it will change numbers dramatically (and most importantly, make them linear). And I have to say I do wonder why it is not the default approach.

(*) About N/H doing that flush: it has always seemed strange to me.

Why not just start by taking the results from the DB and then checking the dirty objects in memory to see whether any modifications should be made to the results? This would have the advantage of working outside a transaction. Inside a transaction you can roll back that flush, but if no transaction is active, who says I really want those dirty objects in memory going back to my database? It could well be that I want the fresh data from the DB precisely so I can determine that committing my UoW would be a disaster for me, right? :-) I do know that actually implementing this strategy used to be a hassle (since it basically required you to write a provider that runs your queries against an object model), but nowadays, with everyone running LINQ, LINQ to Objects gives you pretty much this for free, right?

(I'm sorry if this is something you have already answered 100 times, or even once. If so, please post a link; I haven't been able to find anything on the web that properly addresses this question.)

/Mats

Btw, can you confirm that the NH test code is now near-optimal? Or is there something else left?

Here it is: code.ormbattle.net/

@Alex,

I think what is missing from your benchmark, if it is to give a useful, big-picture understanding of what the results imply, is a clear explanation that puts the numbers into the context of the overall optimization potential.

As others have pointed out, in a real project, where the databases run on dedicated servers and the business logic on the application server does more than run a loop, there is a very real chance that any optimization of the O/RM code turns out to be relatively cost-ineffective.

What I mean is this: say that you write a "real" test application (a PetShop or whatever) and you measure that the worst of the frameworks you test adds only, say (pulling numbers out of my a** here for sure, but bear with me), 5% overhead compared to a corresponding application using only barebones ADO. The reason would be that even if lots could be optimized in the framework, the time it consumes is dwarfed by the time it takes to go over the network to the database, etc.

In such a scenario, there would just not be much opportunity for a framework to try to compete on raw speed, since the best it could hope to do would be to shave a bit off that 5% overhead. Even a magical framework that cut it down to 0% wouldn't make much difference to the overall performance (only 5%, as it were).

In such a scenario, customers would probably be better off looking for the framework that offers good documentation, rich features, ease of use and so on, since trading all that away for a measly 5% speed improvement just wouldn't make much sense. Your framework could be 100 times faster than mine and it might still translate to only a 1% improvement in your application's overall performance.
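The arithmetic behind this argument is essentially Amdahl's law. As a worked sketch, assume (hypothetically, using the commenter's illustrative numbers) that the O/RM accounts for a fraction $p$ of total request time and that a faster framework speeds up only that part by a factor $s$:

$$
\text{overall speedup} = \frac{1}{(1 - p) + p/s}
$$

With $p = 0.05$ and $s = 100$ this gives $1 / (0.95 + 0.0005) = 1 / 0.9505 \approx 1.052$, roughly a 5% improvement. With $p = 0.01$ it gives $1 / (0.99 + 0.0001) = 1 / 0.9901 \approx 1.010$, about the 1% mentioned above. However large $s$ gets, the overall speedup can never exceed $1 / (1 - p)$.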

Now, you may protest that the gap to the worst (interesting) competitor is larger than 5%, which makes speed more important. But without any tests to that effect, how do we know? That's why I think that, for a complete and honest picture, you should complement your tests with a discussion of, and measurements of, how big this type of overhead really is in a typical application.

Fully agree. I'm working on this...

@Mats: Short answer: ayende.com/.../...marks-are-useless-yes-again.aspx

Alex,

No, I am not going to confirm near optimal code.

I am going to follow Fabio's suggestion: if you would like my NHibernate expertise, please contact me privately to discuss my rates.

Mats,

Yes.

The idea here is that if you make a query, you'll get the in-memory results back from the database.

It is confusing for the user if this doesn't happen.

Yes, and if you want to do that, NH contains the hooks to provide it.

You miss the point: it isn't the number of entity types that matters, it is the number of entity instances loaded into the session. And it is only noticeable if you run something like this, which sits in a tight loop forcing the check to happen over and over again.

However you want to do it. A common approach is to use an IsDirty flag that is woven in.

NH requires that you override Interceptor.IsDirtyFlush() and provide an implementation; it doesn't dictate how.

Because it places constraints on the domain. And it is fragile. For example, imagine a method customer.DoSomething() that updates a field directly.

That DoSomething() method needs to set the IsDirty flag.

It is either that (which is very fragile), using AOP and forcing all changes to go through property setters, or trying to intercept every field write.
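A minimal sketch of that fragility, assuming a hand-maintained IsDirty flag of the kind a weaver would otherwise inject. `Customer`, `Discount` and the method bodies are hypothetical and not taken from NHibernate:

```csharp
// Hypothetical entity with a hand-maintained IsDirty flag.
public class Customer
{
    private decimal discount;

    public bool IsDirty { get; private set; }

    public decimal Discount
    {
        get => discount;
        set { discount = value; IsDirty = true; }   // the setter remembers the flag
    }

    // Business method that writes the field directly and forgets the flag:
    // a flag-based dirty check will never see this change.
    public void DoSomething()
    {
        discount += 0.05m;
    }

    // What every such method has to remember to do.
    public void DoSomethingCorrectly()
    {
        discount += 0.05m;
        IsDirty = true;
    }
}
```

After `DoSomething()` the discount has changed but `IsDirty` is still false, so the update would silently be lost at flush time. Comparison-based checking against a snapshot catches this case without any cooperation from the entity.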

Any of those have significant issues associated with them, so they aren't the default.

Because you might not get the values that you would get if you flushed first.

Imagine that the name is not set to the value you are selecting in the DB but is set to it in memory. By flushing it, you are ensuring that it is returned from the query.

It is also not possible to run these queries in memory. Imagine that there is a trigger in the DB that changes the value, or an aggregation that requires DB values, or a SQL function, or... you get the point.

That is not an advantage; NH goes to a lot of trouble to be transactional.

And if you don't want this feature, you set the FlushMode appropriately.

Then let's forget about this. I do believe money could change something here, but if it did... that would devalue the whole discussion.

Anyway, thanks for the advice.

Thanks for the detailed reply,

I don't fully agree with you that comparison-based dirty tracking is a good default behavior, but I do appreciate that it is a trade-off and that your arguments make complete sense. Again, it is a case where I weigh things differently in a trade-off; I suppose neither of us is more "right". I'm always happy to better understand the technical aspects of such trade-offs, so thanks again for the detailed reply.

Btw, regarding the flushes: Roger Alsing just pointed out to me that my approach runs into a problem with where clauses that contain aggregate constraints. True enough, so here I must concede that N/H does the right thing after all! I tried to wriggle out of it by referring to map/reduce, but I don't think he bought it ;-)

Sorry, I was unclear there. I simply meant that as more tables find their way into a use case, chances are that you'll end up with more instances in it, too...

LOL

Oren, I'm writing the FAQ now, and I found your example:

"C++

```cpp
int* arr = new int[100];
for (int i = 0; i < 100; i++)
    arr[5] = i;
```

C#

```csharp
int[] arr = new int[100];
for (int i = 0; i < 100; i++)
    arr[5] = i;
```

And then pointing out the perf difference related to array bounds checking in a contrived scenario. There isn't a point in trying to fix anything; the scenario that you are using is broken."

I'm curious: are you aware that in exactly this case .NET will perform just one array bounds check (one for the whole loop)?

I looked it up more carefully, and now I'm not fully sure about this. I'm 100% sure it will eliminate all unnecessary bounds checks for arr[i] in this case. But you wrote arr[const] = i; on one hand, that is an even simpler case; on the other, a lot depends on the JIT logic here.

Anyway, it was just a remark on your example. I understood what you wanted to say, of course.

Alex,

No, it won't.

It would optimize the case of arr[i], but not the case of arr[5].

The reason is that in a loop you very rarely index with a constant, or with a variable that isn't the loop variable.

It doesn't make sense to try to optimize that.
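For reference, a sketch of the two loop shapes being discussed. `BoundsCheckDemo` and its methods are hypothetical. The first form, with the loop bounded by `arr.Length` and indexed by the loop variable, is the pattern commonly cited as one the .NET JIT can prove safe and strip of per-iteration range checks; what happens to the constant-index form depends on the JIT version, as noted above.

```csharp
public static class BoundsCheckDemo
{
    // Loop bounded by arr.Length and indexed by the loop variable:
    // the JIT can generally prove i is in range and drop the check.
    public static void FillByIndex(int[] arr)
    {
        for (int i = 0; i < arr.Length; i++)
            arr[i] = i;
    }

    // The contrived form: a constant index unrelated to the loop variable.
    // Whether the check on arr[5] is hoisted or removed is JIT-dependent.
    public static void FillConstantSlot(int[] arr)
    {
        for (int i = 0; i < 100; i++)
            arr[5] = i;
    }
}
```

Both methods produce the same kind of result a benchmark would time, but only the first reflects how arrays are normally walked in real code.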

In fact, it is a bad practice, because you are spending effort on something that won't happen.

You are adding complexity and not getting much in return.

Sounds reasonable, especially for JIT.

Oren, just to let you know: I've published a post on the ORMBattle.NET blog related to our discussions: ormbattle.net/.../...i-dont-believe-oren-eini.html

P.S. There is a good FAQ section now, so if you disagree with something, please check it first. It's mainly based on our debates here (without any quotes, of course).
