Benchmarks are useless, yes, again

I was informed of ORMBattle.NET's existence a few minutes ago. This is how the site describes itself:

> ORMBattle.NET is devoted to direct ORM comparison. We compare quality of essential features of well-known ORM products for .NET framework, so this site might help you to:
>
> - Compare the performance of your own solution (based on a particular ORM listed here) with the peak performance that can be reached on this ORM, and thus, likely, to improve it.
>
> - Choose the ORM for your next project taking its performance and LINQ implementation quality into account.

NHibernate is faring rather poorly in the tests there, and I thought that I might respond to that.

First, I want to point out that the company behind the website is Xtensive, which makes another ORM product. This is not to discredit them; they make it very clear who is behind the site and that they have an ORM product in the benchmark. And they were the ones who contacted me about it.

The problem with benchmarks, especially when you are trying to compare a wide variety of products that have different feature sets and different capabilities, is that they are essentially useless. The problem is that in order to be able to measure anything useful, you have to resort to the common denominators, and they are pretty bad.

The benchmark scenarios used for NHibernate show the problem rather clearly.

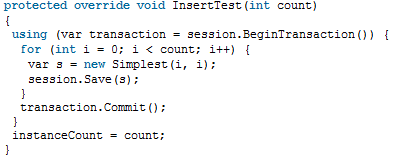

I don’t even need to think about it to know that this is going to perform badly, for several reasons. First, NHibernate was never intended to be a batch processing tool; it is an OLTP tool. The benchmark (all the tests in it) is aimed specifically at measuring batch processing. That is problem number one.

Second, NHibernate actually contains a lot of features aimed at giving us great performance in many scenarios, including batch processing. For example, in this case, using a stateless session alone would give a significant performance boost, not to mention that there is no use of batching whatsoever.

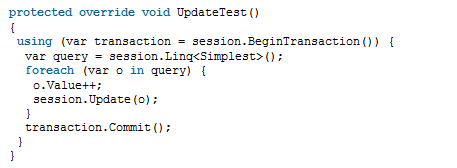

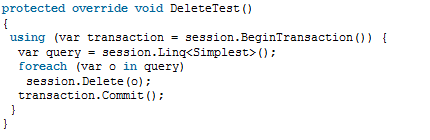

These two tests are also examples of “this isn’t how we do things”. For one thing, calling the database in a loop is a bug. For another, I would produce the same result, with greatly improved performance, using Executable DML:

```csharp
session.CreateQuery("update Simplest s set s.Value = s.Value + 1").ExecuteUpdate();

session.CreateQuery("delete Simplest").ExecuteUpdate();
```
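The following is a minimal sketch of what those two HQL statements save you. It uses Python's sqlite3 module as a stand-in database rather than NHibernate, and the `Simplest(Id, Value)` table and helper names are hypothetical, modeled on the benchmark's entity. The row-by-row version loads every row and sends one UPDATE per row, which is the benchmark's loop. The set-based version sends one statement, which is what Executable DML amounts to at the SQL level.

```python
import sqlite3


def make_db(rows: int) -> sqlite3.Connection:
    """In-memory database with a hypothetical Simplest(Id, Value) table."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE Simplest (Id INTEGER PRIMARY KEY, Value INTEGER NOT NULL)")
    conn.executemany("INSERT INTO Simplest (Id, Value) VALUES (?, ?)",
                     [(i, i) for i in range(1, rows + 1)])
    conn.commit()
    return conn


def update_row_by_row(conn: sqlite3.Connection) -> int:
    """The benchmark's shape: load every row, then send one UPDATE per row."""
    updates = 0
    for row_id, value in conn.execute("SELECT Id, Value FROM Simplest").fetchall():
        conn.execute("UPDATE Simplest SET Value = ? WHERE Id = ?", (value + 1, row_id))
        updates += 1
    conn.commit()
    return updates  # number of UPDATE statements sent


def update_set_based(conn: sqlite3.Connection) -> int:
    """SQL equivalent of the HQL update: one statement for the whole table."""
    conn.execute("UPDATE Simplest SET Value = Value + 1")
    conn.commit()
    return 1  # number of statements sent


def delete_set_based(conn: sqlite3.Connection) -> int:
    """SQL equivalent of the HQL delete: one statement, no entities loaded."""
    conn.execute("DELETE FROM Simplest")
    conn.commit()
    return 1  # number of statements sent


if __name__ == "__main__":
    a, b = make_db(1000), make_db(1000)
    print(update_row_by_row(a), "UPDATE statements vs", update_set_based(b))
```

Both versions leave the table in the same state. Only the number of statements (and, against a real server, round trips) differs.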

And so on, and so forth.

To summarize, since I have talked about this before: trying to compare different products without taking their differences into account is a flawed approach. Moreover, this benchmark is intentionally trying to measure something that NHibernate was never meant to do. We don’t need to optimize those things, because they are meaningless; we have much better ways to solve those problems.

And you know what? Even if you did put those in, the results would still be invalid. Benchmarks tend to ignore things such as the impact of the built-in caching features, or the optimization options available in the mapping.

In short, if you want me to “admit” that NHibernate isn’t a batch processing tool, I will do so gladly; it was never meant to be. And benchmarks that try to show how it performs at batch processing are going to show it being slow. For real world application development, however, NHibernate is a great fit and shows excellent performance.

Oh, and because management told me that I must, if you find perf problems with NHibernate, that is why we have the NHibernate Profiler for you :-)

Comments

What tool do you recommend for batch processing?

While I do agree that things could be done better in the NHibernate test code, I don't like that you dismiss the whole project as useless because you can't compare different products with different capabilities.

All these products are meant to solve only one, very common problem that most software projects have: data persistence.

And all developers (and their managers) know that the performance of the persistence solution greatly influences the performance of the whole application.

It's just too easy to say that you should not compare.

Join the project, modify and add some tests, join the battle and show that NHibernate is better!

The thing I found most interesting was the high score for DataObjects.Net... I hadn't heard of it, and it turns out the creators of the website are also the developers of that product ( http://ormbattle.net/index.php/about.html )...

So the benchmarks are designed around what the developers of that product think an ORM should do well... In my opinion this is a bit biased...

I'm not saying they're hiding their association, but still, it would have been a good idea to bring in some outside members to set up the benchmark suite.

I should have read more carefully; you did notice it... (and they did contact you)

That's a rather disgraceful way to advertise your own tool. I will never use a product from a company that uses such dirty tricks.

fanny, I think comparing ORM 'performance' is a flawed idea, because all ORMs will have very similar performance in typical scenarios such as load, update or insert; at least they should, because they execute almost the same SQL. The test cases should be trickier, to check additional performance-enhancing features such as caching, query batching, intelligent prefetching and so on; that would give us an idea of what benefits to expect from choosing an ORM over raw ADO.Net. I would also like to see a comparison of ORM 'usability', like the ease of setting it up and using it in existing projects, 'integrability' with other platform components, learning curve, ugliness of data access code etc.

We use the delete-in-a-foreach approach in our project, simply because we did not find any alternative. And of course we suffer from the bad performance.

From now on we'll use:

```csharp
session.CreateQuery("delete Simplest where xxx = yyy").ExecuteUpdate();
```

Thanks for the tip.

With NHibernate you can do things in several ways, and lots of them are wrong.

I'd really like to see a list of common NHibernate beginner mistakes somewhere, because NHibernate is so not beginner-friendly.

@junior programmer: Are you sure you really need to download all that data from your persistent storage, do a little update and then upload it all again?

We use MSSQL2008 in about 98% of our projects. We don't write generic products, so we don't need cross-platform database support, which means we can optimize for our database. But for every database there is one very important step: do as much as possible on the database server itself.

Take an import, for example. I parse the import file, collect all the records and use SqlBulkCopy.WriteToServer to send them all to a global temporary table. Then we perform multiple queries on that table and finally update the target table.
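The staging-table approach can be sketched in Python with sqlite3. This is only an illustration: `executemany` into a temp table stands in for SqlBulkCopy.WriteToServer, and the `Product(Sku, PriceCents)` schema and `import_prices` helper are hypothetical. The whole file is loaded into staging first. Then two set-based statements merge it into the target table, so no per-row round trips are needed.

```python
import csv
import io
import sqlite3


def import_prices(conn: sqlite3.Connection, csv_text: str) -> int:
    """Stage the whole file, then merge it into Product with set-based SQL."""
    rows = [(r["sku"], int(r["price_cents"]))
            for r in csv.DictReader(io.StringIO(csv_text))]
    conn.execute("CREATE TEMP TABLE IF NOT EXISTS StagingPrice "
                 "(Sku TEXT PRIMARY KEY, PriceCents INTEGER NOT NULL)")
    conn.execute("DELETE FROM StagingPrice")  # start from an empty staging table
    # Bulk load (stand-in for SqlBulkCopy.WriteToServer).
    conn.executemany("INSERT INTO StagingPrice (Sku, PriceCents) VALUES (?, ?)", rows)
    # Update the products that already exist...
    conn.execute("""
        UPDATE Product
        SET PriceCents = (SELECT s.PriceCents FROM StagingPrice s WHERE s.Sku = Product.Sku)
        WHERE Sku IN (SELECT Sku FROM StagingPrice)""")
    # ...and insert the ones that don't.
    conn.execute("""
        INSERT INTO Product (Sku, PriceCents)
        SELECT s.Sku, s.PriceCents FROM StagingPrice s
        WHERE s.Sku NOT IN (SELECT Sku FROM Product)""")
    conn.commit()
    return len(rows)
```

On SQL Server the merge step could be written differently (for example with MERGE). The point is only that the per-row work happens inside the database.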

We use NHibernate in OLTP applications like websites, desktop applications and middleware (ESB, WCF, etc). We don't have much batch processing there.

@Fanny: There's nothing wrong with comparing ORM frameworks, but use REAL tests (like you do with your unit tests), not a batch update benchmark. Why didn't they write a simple blog application, with an implementation for every ORM framework they want to compare? Then you can write stories and measure how long a certain use case takes, like creating a blog, creating a blog entry, selecting the last 10 entries for syndication, etc. Then put all those timings in a matrix and you have a good comparison of ORM tools, because you benchmark something real.

If you want high performance, you don't use an ORM framework to import a massive CSV file. It can certainly make things easier, but then YOU have to trade performance for convenience.

Oren, can I quote my e-mail conversation with you? I disagree with you, and it explains my position very well.

Alex: I also mailed you, because your code is simply unfair. I also mailed you how to fix it so you'd get much better results with our framework, for example.

I've seen these kinds of sites before, and although I don't like the results we got (which I know are due to your lack of understanding of our framework, so I am not surprised the results of other frameworks like NHibernate are also poor), I agree with Oren on this: they're actually pretty useless.

Not only can I show you that your testing method is seriously flawed, it's also a question of what the user would use in practice. Like Oren showed in this post: if a user has to do ABC, he will use the feature of the framework that suits ABC best. That will very likely perform much better, because it 1) fits the job better, as the feature is tailored for the job, and 2) doesn't fall into the trap of doing ABC very inefficiently to begin with.

However, at the end of the day, I can only shrug and move on. I thank you for the 100 extra LINQ test queries, which showed 3 bugs in our provider that caused many queries to fail (which signals you're not benchmarking very well), so I'll fix these for our customers. But you have to understand: these kinds of sites have little value, as Oren described: they don't show how a framework will behave in real life, in real-world projects with real-world problems, like updating entities using an expression. Some frameworks can do that with 1 query (like we do, and NHibernate too; can yours?), so in practice a user will do that with ... 1 query, not with a loop!

Despite what you might think: customers don't suddenly think "Oh! I have to use dataobjects.net!", as they haven't done that in massive droves in the past, so why would they do that now? You can pat yourself on the back for the most feature-rich framework there is (and I think many of my fellow o/r mapper developers will agree with that), but honestly... if you want to sell your work, you have to invest time in documentation, features your customers want and need, instead of comparison sites which have little value other than "oh look Ma, in my own benchmarks I'm the best!"-kind of ego boosting ;).

Oh, and Alex, where are these 'CUD' tests / calculation methods? You babble about pages, but you don't use paging code, and the Materialization 'test' is equal to the 'Query' test (except for a where clause)... which makes no difference for systems other than the ones that use prepared commands.

You see, prepared commands are only useful for these kinds of tests. In real life you don't need them that much: if you're saving many entities you need many different commands per batch anyway (as they're often changed in different places), or you have many different connections, and a prepared command is gone once the connection is gone :)

Let's hope, for the O/R mapper users of the world, that we O/R mapper writers aren't forced to add optimized code paths for these silly loops, like video card driver writers have to do in their driver code to look best in the benchmarks being used (which thus says nothing about real-life performance, only that the code does great in that particular benchmark).

Frans, I just e-mailed you about unfair code. We'll certainly fix all the issues in these particular test scenarios, but we won't use anything like single-statement table updates in these tests, because those test a different case. I'll explain this further here.

And I confirm for LLBLGen there are some, but until now Oren didn't point to any issue to fix for NHibernate.

Concerning NHibernate-like frameworks: do you agree EF is NHibernate-like (POCO, similar DataContext behavior, etc.)? I haven't received any complaints about its tests yet (but let's wait, of course). Anyway, I'm 99.9% sure we know how such frameworks work very well.

Sorry, that should have been: "And I confirm there are some issues to fix for LLBLGen, but until now Oren didn't point to any issue to fix for NHibernate."

And I'll reply you later in the evening about everything else (e.g. paging). Should get some sleep :(

About prepared statements: for now we don't use them at all, because, as you said, they're gone when the connection is closed. Later, maybe. But for now I'm not sure about this. They don't significantly reduce query time if it is a query whose plan can be cached (in our case this is always true).

AFAIK only OpenAccess uses prepared statements ("sp_prepexec" is frequently shown in SQL Profiler output on its tests).

Fanny,

The underlying premise of the project is flawed. It is trying to measure raw database calls, and that is just not something that is interesting in any OR/M that uses Unit of Work.

A real benchmark would be building a real world application on top of each, and optimize each story, not each specific test that they created. None of the created tests would ever be used in real code.

"About prepared statements: for now we don't use them at all"

Err... how can you then be faster than SqlClient (see the 30K batch tests, for example)?

That's batching, nothing else. And for batching there's cleverer code to be used. You know, the only FAIR way to test what you want without batching is to create a new session/context for every insert, open a transaction, save, commit/flush the context/session, and repeat.
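The two measurement shapes can be sketched with Python's sqlite3 module standing in for an ORM session. This is an assumption, and the `Simplest` table and helper names are hypothetical. The first shape does begin, insert one row, commit, repeat, which mimics many independent clients. The second saves every row in one transaction and commits once. Both end with the same rows, but only the second gives the driver anything to batch, so its per-row throughput can't be extrapolated to the first. Against a real server the gap is usually larger than in memory, because every commit also costs a round trip and a log flush.

```python
import sqlite3
import time


def setup() -> sqlite3.Connection:
    # isolation_level=None: no implicit transactions, so BEGIN/COMMIT below are explicit.
    conn = sqlite3.connect(":memory:", isolation_level=None)
    conn.execute("CREATE TABLE Simplest (Id INTEGER PRIMARY KEY, Value INTEGER NOT NULL)")
    return conn


def one_transaction_per_insert(conn: sqlite3.Connection, n: int) -> int:
    """n independent units of work, each one begin/insert/commit."""
    commits = 0
    for i in range(n):
        conn.execute("BEGIN")
        conn.execute("INSERT INTO Simplest (Value) VALUES (?)", (i,))
        conn.execute("COMMIT")
        commits += 1
    return commits


def single_batch(conn: sqlite3.Connection, n: int) -> int:
    """One unit of work that saves n rows and commits once."""
    conn.execute("BEGIN")
    conn.executemany("INSERT INTO Simplest (Value) VALUES (?)", ((i,) for i in range(n)))
    conn.execute("COMMIT")
    return 1


def timed(shape, n: int):
    """Run one shape on a fresh database; return (rows, commits, seconds)."""
    conn = setup()
    start = time.perf_counter()
    commits = shape(conn, n)
    elapsed = time.perf_counter() - start
    rows = conn.execute("SELECT COUNT(*) FROM Simplest").fetchone()[0]
    conn.close()
    return rows, commits, elapsed


if __name__ == "__main__":
    for shape in (one_transaction_per_insert, single_batch):
        rows, commits, secs = timed(shape, 30000)
        print(f"{shape.__name__}: {rows} rows, {commits} commits, {secs:.3f}s")
```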

Anyway, I'll mail you about the legal side of things about this, as management here isn't very pleased with you using our brand name for your marketing and want our name, results and any hint towards our framework removed from your site.

Alex,

Sure, you can use the email conversation.

Alex,

I did point out issues to fix in your NH tests, they are trying to test the wrong thing.

As such, I don't see much to bother with anything else.

When your underlying premise is flawed, you get GIGO

But here is a hint: set the batch size to a high value and see what happens.

Alex, you have email (about the removal of us from your marketing site)

Also, I found the derivative calculations, but they are wrong: you insert/update/delete in a batch (single session/context, 1 commit/flush), and can therefore use optimized code paths for that. However, you can't use that to extrapolate to single client actions. E.g. doing 30K updates using a single context is a batch of 30K updates by a single client. That isn't the same as 30,000 clients each doing 1 update, but you seem to think it is, and your site seems to suggest the tests are about that. They're not (otherwise you couldn't be faster than SqlClient).

@Alex

"Anyway, I'm 99.9% sure we know how such frameworks work very well."

But you are showing the code, and seeing it, I'm sure you don't know how NH works. We can change the NH results without touching the (wrong) test code, only the configuration.

@Oren, have a look at the NH config they are using and compare it with some of their results.

Btw, good work @Alex. Time will tell what the future of DO.NET is.

Good luck.

Once again, I'll stay out of the blame chorus here.

Just to clarify things: I'm not in any way related to DO.Net, and I discovered it today through the benchmark site.

I'm an NHibernate user (and btw also an NHProf user).

In the past I used several frameworks like Ibatis.Net and LLBLGen.

Reading the comments, it seems to me that this site scares...

It has touched one of the pain points we have when using ORMs.

The tests may be biased in favor of the creators' ORM. So what?

They released the source code and said you are free to modify and test by your own.

You do not like the code? Change it.

You would like a more real life example: add it. We would like it too.

We would love to have it, because we could see best practices applied that not all of us may be aware of.

On the same damn example.

Now we also have tools to stress test an application and see how it profiles with many different clients.

This would show how to apply an ORM in a particular domain, and how easily (or not) each ORM in the .NET space solves the same situation.

And Ayende, yes: NHibernate is hard (except for you) if you want to use it in the best-performing way, and you know that, since you created a tool for it (and btw I bought it).

You are creating an example app, if I am not mistaken; use it as the example to test against the other ORMs.

Just don't tell me that if I need to do some batch updates I must not use the ORM I am already using because it is not intended to do that.

And Frans: your reaction with this threatening "legal department stuff" removal doesn't help you either. At least for the public image your tool has gained.

The only problem with this site is that it came from a "contestant" ORM producer, so it is obviously in favor of that particular ORM.

This can be (and I would say it should be) changed.

But the "battle" is good.

Every battle shows us how to improve: Frans proved it by catching bugs.

Benchmarks are good. They aren't everything, but they are worth having.

fanny,

I think you are missing the main point.

It isn't a case of needing to optimize some code. It is a case of a benchmark that is specifically built to show off non real world scenarios that benchmark well on one ORM.

There is nothing to learn from trying to pass such a thing, except bad ways of building a benchmark.

@fanny, NHibernate and LLBLGen are two proven ORM frameworks. They both perform well in real-world applications, and that is the most important test there is. While Oren and Frans could certainly tweak the tests to suit their frameworks, why bother getting caught up in this little game? In the end they are probably comparable to each other, except for some edge cases.

Like most have already pointed out, this is a petty marketing game being played by the benchmark owner.

"This would show how to apply an ORM in a particular domain, and how easily (or not) each ORM in the .NET space solves the same situation."

The question is, how would we define this particular domain to suit the general audience? Then again, does it really matter? Unless the ORM (or any framework) is crawling on its knees, a few milliseconds won't matter in most cases. While performance matters, premature optimization tends to be a waste of time. Oren has already touched on the subject of premature optimization elsewhere on this site, and I happen to agree with him.

Since you mentioned that you are an NHibernate user: are you satisfied with its performance? If not, is NHibernate the bottleneck? Did NProf help your project in any way? Is there something else you wish NProf would do for you? As a current NHibernate user, those are the kinds of real-world stories I am much more interested in than some useless benchmark.

@fanny:

"Reading the comments, it seems to me that this site scares...

It has touched one of the pain points we have when using ORMs."

Are you joking? It's a misleading website posted by someone who has a clear bias and everything to gain by misleading people. It has nothing to do with pain points, it has to do with dishonesty and false advertising.

"And Frans: your reaction with this threatening "legal department stuff" removal doesn't help you either. At least for the public image your tool has gained."

So you would be ok with building a brand and then have a competing company use your brand (dishonestly) to make its product look better? I don't think many companies would agree with your point of view.

"And Frans: your reaction with this threatening "legal department stuff" removal doesn't help you either. At least for the public image your tool has gained."

I'm not threatening anything. Our management just asked them, KINDLY, to remove us from their site. That we threatened them is your imagination. We didn't threaten anyone with anything; why would we do that? It only costs money and time.

What doesn't help us is that this misleading FUD site keeps spreading lies about which o/r mapper is the fastest. We don't want to be in this challenge.

I'll give you a couple of examples why we don't want to be in this challenge:

1) Our linq provider doesn't support Skip() without Take(). This is because our framework doesn't support skipping, only paging (in various ways, but that's another story). Adding Skip() to all linq queries makes them ALL fail, so we would score a 0. That must signal our linq provider is really bad, doesn't it?

2) what's the difference between:

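a)

```csharp
for(int i=0;i<30000;i++)
{
    // pseudocode: a new session/context and transaction per entity
    using(var s = new Session())
    {
        // save one entity, then commit/flush
    }
}
```

vs.

b)

```csharp
// pseudocode, you get the drill
using(var s = new Session())
{
    for(int i=0;i<30000;i++)
    {
        // save one entity
    }
    // commit/flush once, after the loop
}
```

?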

a) mimics 30000 users each saving 1 entity: a real-life (albeit somewhat lamely implemented) scenario. b) mimics a bulk insert, which is only rarely used, in import tools that can't use the native import system of the database at hand.

The 'battle' uses b) because it can show how fast DO is compared to other systems, which are optimized for a). You know how you can tell? Because it's faster than plain ADO.NET. How can it be faster than 30000 plain SqlCommand.ExecuteNonQuery() calls? I know how: it uses an optimized path for b), a prepared query (it's described below the figures).

3) If you're asked to raise the salary of all employees in department 3 by 10%, and you have just a SQL prompt, would you do that with:

a) an insert into a temp table, a cursor over the temp table updating the rows, and then a cursor over the temp table that updates the real rows in the main table?

or

b) you would use a single UPDATE statement?

The battle uses approach a), as it again lets it optimize for bulk updates. However, SQL offers more advanced techniques to do this, and some frameworks are therefore optimized for b). But again, these frameworks look like poor performers in this 'battle'.
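Point 1) above comes down to simple arithmetic. The minimal sketch below assumes a hypothetical paging API that takes a 1-based page number and a page size; it is not LLBLGen Pro's actual API. With such an API, Skip(n).Take(m) maps onto a single page only when n is a multiple of m. Skip(n) on its own has no page size at all, so it can't be expressed.

```python
from typing import Optional, Tuple


def skip_take_to_page(skip: int, take: Optional[int]) -> Optional[Tuple[int, int]]:
    """Map Skip(skip).Take(take) onto a hypothetical 1-based (page, page_size) API.

    Returns None when the request can't be expressed as a single page.
    """
    if take is None or take <= 0:
        return None  # Skip() without Take(): there is no page size to page with
    if skip < 0 or skip % take != 0:
        return None  # the skipped rows don't end on a page boundary
    return skip // take + 1, take


def page_to_offset(page: int, page_size: int) -> Tuple[int, int]:
    """Turn a 1-based page back into (offset, limit), e.g. for LIMIT/OFFSET SQL."""
    return (page - 1) * page_size, page_size
```

For example, `skip_take_to_page(20, 10)` yields page 3 with size 10, and `page_to_offset(3, 10)` gives back offset 20. `skip_take_to_page(20, None)` has no answer.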

Do you know all this when you just look at the numbers? No. The numbers show that there's a clear winner. That's no surprise: this battle has no winner but the product of the website's owner. I.o.w.: there's nothing to win and absolutely nothing to gain for any other framework by being on that website.

That's why we kindly asked for our name, the code of our framework and the results of our framework to be removed. After all, they claim to be 'honest', so I don't see why they wouldn't do what we kindly asked.

I looked again into the code, and saw that the SqlClient code was actually included.

Now, here's the deal: it uses Prepare() already. So the numbers of the sql client tests are already created using prepared statements. This means that you can't get faster than that in single query execution sequences, you can only get as quick as that. But as the sqlclient test uses a tight loop over a prepared SqlCommand, you really can't get quicker than that, unless you batch statements.

Batching can be done on SQL Server by packing multiple queries together using ';' and optionally preparing them. It's more efficient if the query is the same every time, so the plan can be reused for the packed query. You can only utilize this if you're doing batch inserts; if you're mimicking a lot of different actions on the DB, you can't use batches, as there's nothing to batch. As I described above, if you move the loop outside the session/context creation, batching isn't possible anymore, and you will see very different numbers that mimic real-world scenarios instead.
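The following is a minimal sketch of the packing described above. It assumes SQL Server-style `@name` parameters, and `pack_batches` is a hypothetical helper, not NHibernate's or LLBLGen Pro's code. It only builds the command text and parameter values; no driver is involved. Each row's parameters are renamed with a per-row suffix so the packed statements don't clash. Every full batch therefore has identical text, which lets the database reuse one plan. The batch size directly sets the number of round trips: 30,000 rows with a batch size of 100 become 300 commands.

```python
import re
from typing import Dict, List, Sequence, Tuple

PARAM = re.compile(r"@(\w+)")


def pack_batches(template: str, param_names: Sequence[str],
                 rows: Sequence[Sequence[object]], batch_size: int
                 ) -> List[Tuple[str, Dict[str, object]]]:
    """Pack one statement per row into ';'-separated commands of batch_size rows."""
    if batch_size < 1:
        raise ValueError("batch_size must be >= 1")
    names = set(param_names)
    batches = []
    for start in range(0, len(rows), batch_size):
        chunk = rows[start:start + batch_size]
        statements: List[str] = []
        params: Dict[str, object] = {}
        for i, row in enumerate(chunk):
            # Rename @name to @name_i in a single pass so parameters stay unique.
            statements.append(PARAM.sub(
                lambda m: f"@{m.group(1)}_{i}" if m.group(1) in names else m.group(0),
                template))
            for name, value in zip(param_names, row):
                params[f"{name}_{i}"] = value
        batches.append((";\n".join(statements) + ";", params))
    return batches
```

This only pays off when one client really has many similar statements to send. If every action is its own unit of work, there is nothing to pack.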

There's another way of batching on SQL Server, but it isn't public: it's the way SqlDataAdapter batches statements using internal code (SqlCommandSet).

Lots of databases, like Oracle, don't support batching multiple statements. That, however, is mentioned nowhere on this 'honest' site. ;)

Frans,

FYI, NHibernate supports batching for both SQL Server and Oracle using SqlCommandSet (and the equivalent for ORA).

You might want to take a look at how this is done and copy it.

Admittedly, [ThereBeDragons] was applied :-)

Heh :). Btw I didn't know odp.net had a batch pipeline under the hood.

I checked whether we can implement it in our pipeline (after all, it processes elements in a queue anyway), but it's a little tricky. We have features that take place right after a single entity is saved, like validation after refresh and auditing, and error recovery for a single entity that fails during save goes out the window (if packing 10 statements together makes statement 9 fail, how is that reported back so the calling code can recover and retry entity 9?).

So it might be useful only if the user doesn't want to use all that AND bulk inserts are possible. Sounds like an edge case (like you said in the blog post, in OLTP you're more interested in the many features around persistence; that's what makes using an o/r mapper useful, not bulk inserts. SqlBulkCopy is much faster than any o/r mapper).

hehe I see your comments on the class, my thoughts exactly :)

I have to look into whether this is worth the effort in our case (I'm not going to copy it, it's LGPL-ed code after all), considering the consequences for various features we have (which would all have to be disabled or not used). Also, reflection would break medium trust, which some people need because of their shared hosting providers.

It's a trade off really, and I think in general users don't really want to give up the features for the batching just for a few extra cycles, as there are always other ways to make it even quicker (like bulk copies).

Frans,

NH uses the UoW model, so we don't have the concept of partial update, just a transaction failure.
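The all-or-nothing behaviour can be sketched with Python's sqlite3 standing in for a session flush. The `Simplest` table and `flush_unit_of_work` are hypothetical. All pending inserts go out in one transaction, so a failure on one row (here a duplicate key) rolls back the whole batch. There is no partial update to report row by row.

```python
import sqlite3
from typing import Iterable, Tuple


def flush_unit_of_work(conn: sqlite3.Connection,
                       pending: Iterable[Tuple[int, int]]) -> bool:
    """Write every pending insert in one transaction: all of them or none."""
    try:
        with conn:  # commits if the block succeeds, rolls back if it raises
            conn.executemany("INSERT INTO Simplest (Id, Value) VALUES (?, ?)", pending)
        return True
    except sqlite3.IntegrityError:
        # One bad row fails the whole unit of work; nothing was saved.
        return False
```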

With NH, batch updates disable some things, too, mostly related to update validation checking (if you are trying to update instead of insert, frex).

The class is actually taken from Rhino Tools, which is a BSD license, so you can use that instead of the one in NH.

But while this isn't a common scenario, it does show up fairly often.

For example, imagine a feature like copying an entity (deep copy), which may result in many statements. Batching that produces a nice perf boost.

Ok guys, I see we've made some of you boil. First of all, let's stay cool ;)

Secondly, I'm now writing answers to all the criticism I got from you. They'll be published in the FAQ at ormbattle.net in a few hours. That's just because I dislike the idea of answering the same questions N times.

Good point. And thanks for the hint about the different version :).

I'll look into it later this year.

Yep...Time machine please...6 years ago ..

https://www.hibernate.org/157.html

I totally agree with Frans, and the same should apply to NHibernate:

It is a shame to publish on a website this kind of information.

Who is stupid enough today to use an 'out of nowhere' commercial framework just because the provider says it is the best according to its own benchmarks? Do you really think that .NET developers are that stupid?

At least, now .net has its "storm" benchmark...

Managers are...

The first answer:

ormbattle.net/.../...ur-tests-are-unrealistic.html

Others are upcoming.

Boil? Hardly. Disgusted? Definitely. That's not the kind of feeling you want from a could-have-been customer.

Petty little marketing games disgust me. Programmers, or any other sensible beings out there, are sensitive to things like that :). So I am glad that both Oren and Frans didn't fall for this dirty little trap.

I am an NHibernate user. While I haven't used LLBLGen, I would have no problem recommending it to customers who ask me about it. Why? Because both Oren and Frans impress me with their real knowledge and honest conversation. They showed me how much they know about their own products. If a feature is missing, it's missing for a reason. So if I ever need support from them, I know I can expect a clear and reasonable explanation of why abc was done the xyz way. That's something I can appreciate. They won't give me the runaround because they don't need to.

"The first answer:

ormbattle.net/.../...ur-tests-are-unrealistic.html"

You don't seriously believe that, do you, Alex?

Thanks Fred for digging up that old post. While we're at it:

ayende.com/.../PissedOffByVanatecOpenAccess.aspx

and my personal favorite:

blog.hibernate.org/.../11#versant

Two examples of other attempts to 'show' how 'crap' the competition of product X is, which actually didn't work out that well...

That's one more pitfall you're falling into. Can I show you that they have good knowledge but, like anyone, make some serious mistakes? Guys, sorry about this :)

1) Frans wrote an excellent series of articles about implementing a LINQ provider. I read many of them, but frankly speaking, they didn't help us a lot, mainly because we chose a different way. The proposed one has lots of shortcomings you simply don't know about because you didn't try. And I, as someone who has walked the same path, can show this is true: see their results on the LINQ implementation tests. Just take a slightly more complex case (subqueries, etc.), and it fails. Oren, frankly, I can hardly even imagine why you released such a "LINQ".

2) Read weblogs.asp.net/.../...rst-doing-is-for-later.aspx. Frans, I'm sorry again for being unkind there. Anyway, look at the facts, not the tone.

3) Oren, let's discuss your replies:

First case:

Me: Btw, NHibernate's query rate really drops with the number of already fetched instances: from ~1K/sec for 100 instances to ~100/sec for 30K instances. I feel there is definitely some bug.

Ayende: Again, this is fully expected. NHibernate uses the Unit of Work model.

Can someone explain to me why NH's UoW model leads to a dramatic performance decrease on read-only operations?

What kind of internals are there? I know perfectly well how to implement a UoW without such dramatic waste. Read e.g. about the STS implementation in .NET 4.0: it obviously provides much stronger guarantees, but the performance decrease there is ~constant (4...8 times). So it's hard to even imagine how one could implement it this way!
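One mechanism that produces this pattern can be shown as a toy model. It is not NHibernate's actual code. Assume the session keeps a snapshot of every entity it has loaded and, before each query, compares every tracked entity against its snapshot to decide whether it must flush first (auto-flush). Then the work per query grows with the number of entities already in the session, and the total work for n queries grows roughly with n². `ToySession` and its methods are hypothetical. Whether this is the cause in NHibernate's case is something only profiling would show. Clearing the session periodically, or using a stateless session as suggested in the post, keeps the tracked set small.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Tuple


@dataclass
class ToySession:
    """Hypothetical unit of work: an identity map plus load-time snapshots."""
    entities: Dict[int, dict] = field(default_factory=dict)
    snapshots: Dict[int, Tuple] = field(default_factory=dict)
    comparisons: int = 0  # total number of entity dirty checks performed

    def load(self, entity_id: int, state: dict) -> dict:
        # Identity map: the same id always yields the same tracked object.
        if entity_id not in self.entities:
            self.entities[entity_id] = dict(state)
            self.snapshots[entity_id] = tuple(sorted(state.items()))
        return self.entities[entity_id]

    def dirty_ids(self) -> List[int]:
        # Auto-flush check: compare every tracked entity with its snapshot.
        dirty = []
        for entity_id, entity in self.entities.items():
            self.comparisons += 1
            if tuple(sorted(entity.items())) != self.snapshots[entity_id]:
                dirty.append(entity_id)
        return dirty

    def query(self, entity_id: int, state: dict) -> dict:
        self.dirty_ids()  # cost is proportional to len(self.entities)
        return self.load(entity_id, state)

    def clear(self) -> None:
        # Forget all tracked entities (like clearing/evicting the session).
        self.entities.clear()
        self.snapshots.clear()
```

With 100 queries in one session the model performs 0 + 1 + ... + 99 = 4950 dirty checks. Clearing the session every 10 queries brings that down to 10 × 45 = 450.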

Second case:

Ayende about our fetch test: Fetch is invalid usage; it is not something that you will ever see there except as a bug.

Me: Why does it exist then? ;)

Ayende: For single use scenario. Calling it in a loop is a bug, you use a query for that.
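Oren's point can be illustrated with a sketch that uses Python's sqlite3 as a stand-in. `fetch_in_loop`, `fetch_with_query` and the `Simplest` table are hypothetical helpers. Looking entities up one id at a time issues one query per entity, which means one round trip each against a database server. A single query returns the same data at once. The sketch only shows why the loop shape is treated as a bug; it doesn't reproduce either side's numbers.

```python
import sqlite3
from typing import Dict, Iterable


def make_table(n: int) -> sqlite3.Connection:
    """In-memory Simplest table with Ids 1..n and Value = Id * 10."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE Simplest (Id INTEGER PRIMARY KEY, Value INTEGER NOT NULL)")
    conn.executemany("INSERT INTO Simplest (Id, Value) VALUES (?, ?)",
                     [(i, i * 10) for i in range(1, n + 1)])
    conn.commit()
    return conn


def fetch_in_loop(conn: sqlite3.Connection, ids: Iterable[int]) -> Dict[int, int]:
    """One SELECT per id: fine for a single lookup, a bug when called in a loop."""
    result = {}
    for entity_id in ids:
        row = conn.execute("SELECT Id, Value FROM Simplest WHERE Id = ?",
                           (entity_id,)).fetchone()
        if row is not None:
            result[row[0]] = row[1]
    return result


def fetch_with_query(conn: sqlite3.Connection, first_id: int, last_id: int) -> Dict[int, int]:
    """One SELECT that returns the whole range at once."""
    cur = conn.execute("SELECT Id, Value FROM Simplest WHERE Id BETWEEN ? AND ?",
                       (first_id, last_id))
    return dict(cur.fetchall())
```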

I agree we test an unusual scenario here, but I explained why this is OK in this test. See ormbattle.net/.../...ur-tests-are-unrealistic.html

But let's return to the numbers: Oren, you're behaving like you don't see an obvious fact:

a) No one ever profiled this in NH

b) Ayende is not aware of this flaw

But he recommends this as a panacea to people. Imagine: even if I rewrote this test for NH to use just a single query, it would still LOSE to SqlClient! (You'd get materialization performance: 16399 op/sec, but SqlClient's fetch performance is 21129 op/sec.)

So frankly speaking, I think NH users should simply thank us for these tests. They show there are at least 2 huge flaws. I'd bet they'll be fixed within 6 months, if Ayende isn't too disappointed ;)

Finally, I can't help mentioning the following: you guys have put so much effort in here... What is your goal? DISCREDITING THE RESULTS SHOWN AT ORMBattle.NET.

Ok, it's obvious at least NH simply fails there. And actually there are two paths to fix this:

Complex: spend a few months profiling its performance (instead of NHProf, yep ;) ) and make it perform at least about as well as EF. I know it can, because they're very similar in architecture and proposed approach: POCO, always "disconnected" graphs (sessions), AFAIK data can be stored inside entity fields, etc.

Simple: say it does not reflect reality, to discredit it, and hope this pretty false statement will be accepted by people just because of your good reputation.

Frans, about Versant's advertisement and comparisons: you're talking about FEATURE-BASED COMPARISONS. Have you read http://ormbattle.net/index.php/summary.html ?

There I explained why fully feature-based comparisons are inherently flawed, and why we test just very basic stuff.

@Alex, where have I insulted you? So why are you insulting me? ->

"Can I show you that they have good knowledge but, like anyone, make some serious mistakes? Guys, sorry about this :)"

Which mistakes did I make? I didn't implement silly code paths for some 'benchmark' on some competitor's FUD site?

I'm really starting to get fed up with this crap. Remove our name and the results of LLBLGen Pro from your FUD spreading marketing site _NOW_. Ok? Not tomorrow, NOW. I've kindly asked you that yesterday, and also today a couple of times. We don't like being used as a pawn in a competitor's ego boosting and self-promotion.

It looks like .NET developers & managers are stupid enough to use something that shows the worst performance on very basic tests in comparison to other frameworks.

Concerning DO: yes, it performs very well on these tests now. But I think it could easily lose its position there in the near future:

All you need to compete with the leaders on the CUD tests is batching. Have I shown you here that it's nearly as common as future queries? Why are we and the OpenAccess developers the only ones who noticed it can be used? Btw, they use an even faster option: they automatically build DELETE FROM ... WHERE Id IN (...) queries for removals, while we (currently) use just batches consisting of a set of regular DELETE FROM ... WHERE Id = @Id statements. So OpenAccess is #1 on the entity removal test. It does removals almost 1.5 times faster than DO, and 6+ times faster than anyone else. Is this information useless?
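To make the two removal strategies concrete, here is a minimal C++ sketch that only builds the SQL text. The table name `Item` and both helper names are hypothetical; keys are inlined as literals for brevity, whereas a real ORM would bind parameters and would likely have to split very long key lists. Whether the per-key statements actually travel to the server in one roundtrip depends on the driver, which is not modeled here.

```cpp
#include <cassert>
#include <cstddef>
#include <string>
#include <vector>

// Hypothetical helper: one DELETE per key; an ORM would send these together as one batch.
std::vector<std::string> batchedDeletes(const std::string& table,
                                        const std::vector<long>& ids) {
    std::vector<std::string> statements;
    statements.reserve(ids.size());
    for (long id : ids) {
        statements.push_back("DELETE FROM " + table + " WHERE Id = " +
                             std::to_string(id));
    }
    return statements;
}

// Hypothetical helper: a single set-based DELETE covering every key.
std::string setBasedDelete(const std::string& table,
                           const std::vector<long>& ids) {
    assert(!ids.empty());  // "IN ()" is not valid SQL
    std::string sql = "DELETE FROM " + table + " WHERE Id IN (";
    for (std::size_t i = 0; i < ids.size(); ++i) {
        if (i > 0) sql += ", ";
        sql += std::to_string(ids[i]);
    }
    sql += ")";
    return sql;
}
```

For keys 1, 2 and 3 the first helper yields three statements for one batch, while the second yields the single statement `DELETE FROM Item WHERE Id IN (1, 2, 3)`, so the server parses and executes one statement instead of three.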

You can be #1 on the materialization test. We compete well with EF there (although it is definitely #1 on this test) just because our materialization pipeline is likely the most optimized one on the market, but in general it's really hard for DO to compete with EF-like frameworks on this test: if everything is optimized well, everything depends on per-entity memory consumption here. So POCO entities must rule on this test. DO entities aren't POCO entities. That's it.

It's difficult to get a good result on the LINQ tests. E.g. EF loses some points there mainly because it doesn't support equality comparisons for references (fields of Entity type; or, better to say, comparisons of non-primitive types) and a few other strange limitations, like the impossibility of using First/Single in subqueries. But in general 6 months is enough to get 100 out of 100 here; at least our team spent 6 months on the LINQ provider for DO.

Finally, you must notice that DO isn't explicitly shown as #1 here; moreover, it's easy to prove we didn't specially develop tests for it. If anyone finds something contradicting this, please write to us, and we'll remove the test or part almost immediately and update the results.

Frans, you know precisely the mistake you've made. It's explained in my first post there.

So I must ask you here again (since you asked me not to quote you): don't you find yourself hiding important information from your customers? In fact, you prefer to hide the truth to sell your product better. Do you know what that's called?

@ Frans, concerning removal: it will be done tomorrow, if you want. I just need some help with this.

Alex,

Side note: Are you aware that your tone is becoming more and more offensive?

As for the results, as I mentioned, they reflect fake scenarios that have nothing to do with the real world.

As such, the benchmark results are worse than useless, they are misleading. I am willing to give you the benefit of the doubt and assume that they are not intentionally misleading, but frankly, your behavior is... not encouraging that assumption.

I am not even going to try to answer your points; you are reiterating things that were already answered. Please read the thread and take a look at the STORM reply from Hibernate. Pretty much everything there applies here as well.

As for the FAQ page, when I gave you permission to use my reply to you, I assumed you would use it in an informal context, such as a comment or a blog post.

I don't think that the quotes in the FAQ page are appropriate and I am asking that you would remove them. The quotes, as shown, are out of context, both in terms of the actual discussion and the tone of discussion. Please remove them from the FAQ page, as I withdraw my permission to use them.

Well,

I know LLBL as a customer. I know NHibernate and have been using it since the first release. Neither is perfect.

But if your product is the best product (and that is not only about performance), the market will reflect it.

I don't care that Linq to NH doesn't pass 99.9% of your tests: they do not represent real-life examples.

When I look at your results, I doubt only one thing: that you have understood the other products.

Let's be honest: you have just promoted your solution using a low moral approach. Not only is it stupid, because it is too big and obvious to work, but it is also insulting to many people (commercial or OSS) because you use their work as leverage.

As far as I am concerned, I will probably use both LLBL and NHibernate, with a preference for the second, but I will never work with or spend a cent on your company or your product.

"So I must ask you here again (since you asked me not to quote you): don't you find yourself hiding important information from your customers? In fact, you prefer to hide the truth to sell your product better. Do you know what that's called?"

Important information? Contrary to your product, our framework comes with a 600+ pages manual and the knowledge that many thousands of projects (for example critical applications in banks and oil companies) use the runtime successfully every day, for many years already.

So, I don't think they'll lose anything when they can't read up how a competitor deliberately made our framework look really bad.

About the serious mistake I made... sorry but I don't follow you. Anyway, enough...

"concerning removal: it will be done tomorrow, if you want. I just need some help with this."

Yes, we want that, how many times do I have to repeat that, Alex? Thanks for removing us.

You must know that this whole mess really ruined my weekend and I still feel like crap. I know I shouldn't, after all it's marketing and who cares about marketing. But let me assure you, Alex, I won't forget this. When LLBLGen Pro v3 comes out with solid model first/ schema management etc. support for NHibernate, EF, L2S, LLBLGen Pro RT, Euss, genom-e and likely some others too, I will not spend a single second writing support code for your framework. You deliberately misused my life's work, and although I shouldn't, it feels like a personal attack. That's perhaps me, but so be it.

@Alex

While your tests may prove that your product is more performant on those exact tests, I think this was a mistake in a few ways:

From what I've seen, the major ORM developers are generally very cooperative and respectful of each other's efforts. Doing a hand-picked speed comparison on a (misleading) marketing site probably just got you removed from the community as far as future cooperation is concerned.

That site appears to be set up as an impartial test results site. When I stumbled across that site, I saw no obvious indication that it was run by the creators of one of the products being tested. ORMBattle.Net? If you didn't intend to deceive, why not put this under your normal DataObjects.Net domain? This approaches astroturfing territory... bad.

There's nothing wrong with contributing ideas by debating techniques which may lead to better performance. Doing this in a public forum (such as this one) does everyone a lot of good. Skipping the debate and just showing raw benchmark scores does no good at all except from a marketing perspective... it is meant to impress managers who like to make major decisions based on charts.

ORM performance is about a lot more than how long it takes to do a single entity update, fetch, delete, etc. For example, how does it make sure that only what needs to get updated gets updated? How does it minimize database chattiness? These are issues that I would imagine are difficult to benchmark, as there are so many different possible scenarios, but they are as important as, if not more important than, raw "scores" on simple CRUD operations.

I don't know if your tests were meant to be an honest comparison between the different ORM frameworks out there, maybe they were...? But the way you go about it definitely shows, to borrow a phrase from the American legal system, the appearance of impropriety... which is bad in and of itself.

Side note: do you see that the same is happening on your side? From my point of view, you say "everything is wrong", and now I must defend my position, particularly with examples like these.

Don't you see such statements are just FUD as well?

OK, have you seen my post above about at least two flaws in NH? Is this useless?

FUD again.

You mean this: https://www.hibernate.org/157.html ?

Yes, I've read it. And, frankly speaking, I didn't find much similarity except the fact that it was made by another ORM vendor (that's not a flaw in itself: let's assume this is acceptable if the benchmark is honest).

There is a large part about fixing bugs related to NH usage in the STORM test. I ASKED YOU PERSONALLY TO STUDY OUR TEST FOR NH TO AVOID ANY MISUSE OF NH THERE! I understand we aren't experts in NH, so I asked an expert to do this. What did I get? Mostly generic answers like "your test is wrong" (I already mentioned this here once). And this whole blog post.

The other left part there is this one: "With such small datasets, accessed repeatedly, the database is able to completely cache results in memory; the benchmark never actually involves any real disk access (watch your hard drive while STORM runs). We never get to see what happens once the dataset is too large to fit in memory, or is being updated by another transaction. We never get to see what happens with the database is under load. In fact, this benchmark involves no concurrency at all! We never get to see any joins or any of those other things that happen in realistic use cases. Furthermore, these kinds of benchmarks are often run against a local database, which gives results that are absolutely meaningless once the database is installed on a physically separate machine."

I can explain (and I already explained this in the test suite description, but you don't see it) why MINIMIZING database load is GOOD for an ORM test rather than BAD: any ORM adds some overhead to all database operations; to measure it well (i.e. to expose JUST IT), you must MINIMIZE the database load. That's what we do.

In fact, since we measure the efficiency of the intermediate layer, we must make all the layers behind it operate as fast as possible, to measure just the efficiency of that intermediate layer. Is this clear, or must I provide an example, like with CPUs, add and multiply?
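The subtraction behind "measure just the efficiency of that intermediate layer" can be made explicit with the single-query figures quoted earlier in the thread: 16399 op/sec for NH materialization against 21129 op/sec for a raw SqlClient fetch. Converted to time per operation, that is about 60.98 µs versus 47.33 µs, i.e. roughly 13.65 µs of overhead per object. The C++ helpers below are hypothetical and only do this arithmetic, assuming both figures were measured under the same conditions.

```cpp
#include <cassert>

// Converts a throughput figure (operations per second) into the average
// time per operation, in microseconds.
double microsPerOp(double opsPerSec) {
    assert(opsPerSec > 0.0);
    return 1e6 / opsPerSec;
}

// Estimated per-operation overhead of the layer under test: its time per
// operation minus the time per operation of the raw baseline beneath it.
double overheadMicros(double layerOpsPerSec, double baselineOpsPerSec) {
    return microsPerOp(layerOpsPerSec) - microsPerOp(baselineOpsPerSec);
}
```

`overheadMicros(16399.0, 21129.0)` comes out at roughly 13.65. The smaller the baseline cost, the larger the share of each operation this overhead represents, which is the effect the argument above relies on.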

OK, so I take it you allowed me to use your quotes here? :)

My first intention was simply to do this, to show there is nothing "out of context". But that's too long for a single post. So I actually don't see anything "out of context" here. That FAQ page reflects exactly the parts we were talking about.

Anyway, since this isn't really important, I'll remove them all. I'm really tired of arguing about stuff like "please remove my ..., I don't permit you to use it!".

We explicitly declared this almost everywhere. The site has a separate domain name for two reasons:

It wasn't made to show DO4 is the best. And there is a long true story about this on the "About" page. Yes, currently it markets DO4 well. But that's just currently. I'm not afraid of DO becoming an average tool there in the future. We'll simply try to keep its results good from this point on.

Btw, we didn't even set up any explicit ranking there, again for the same reason. I'd like such a benchmark site to run with or without DO4 results on it. And running this web site on the X-tensive domain would depreciate it further.

We seriously thought (and still think) about making such benchmarks fully community-based. E.g. the code will soon be shared on Google Code SVN (currently there is just a .RAR with it). We haven't done this yet only because of a lack of time.

So honestly, we are even ready to remove DO4 results from it entirely (while keeping the right to mention them on our own web site, plus holding a public vote on adding DO4 back there).

If you have any ideas on how this (i.e. a fully public & credible benchmark) could be achieved, just share them here. I fully believe our test suite is well done, again, with or without DO4.

Hmm... More than two reasons, but anyway, it's a good explanation.

In short, I believe such a web site/benchmark must exist. We are ready to hand it over to community control. And any ideas on how to achieve this are much appreciated.

I almost immediately published a link to this post there: ormbattle.net/.../...st-suite-and-its-results.html

I'm also for complete openness in such things. And (actually) that's why I'm trying to draw out any conversation here. Asking Ayende and Frans to run all of them there looked simply impossible.

As you see, our CUD tests partially show how to minimize database chattiness. Btw, we could do the same with the query test (there are future queries in some frameworks), but we found this isn't fully honest, since it requires using a special API, which leads to side effects. So there will likely be a separate test for this soon.
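The "special API" in question is deferred (future) query execution. As a rough, hypothetical C++ sketch of the idea (not NHibernate's or any other product's API; `FutureBatch` and `Executor` are invented names, and the executor merely stands in for a database call), queries are registered without running them, and the first time any result is needed, every pending query is sent in one roundtrip:

```cpp
#include <cassert>
#include <cstddef>
#include <functional>
#include <string>
#include <utility>
#include <vector>

// Hypothetical stand-in for a database call: receives all queries that should
// travel in one roundtrip and returns one result string per query.
using Executor =
    std::function<std::vector<std::string>(const std::vector<std::string>&)>;

class FutureBatch {
public:
    explicit FutureBatch(Executor exec) : exec_(std::move(exec)) {}

    // Registers a query without running it; returns a handle to its result.
    std::size_t defer(const std::string& sql) {
        pending_.push_back(sql);
        return results_.size() + pending_.size() - 1;
    }

    // The first access to a not-yet-fetched result sends all pending queries at once.
    const std::string& get(std::size_t handle) {
        if (handle >= results_.size()) {
            std::vector<std::string> batch = exec_(pending_);
            assert(batch.size() == pending_.size());
            results_.insert(results_.end(), batch.begin(), batch.end());
            pending_.clear();
            ++roundtrips_;
        }
        return results_.at(handle);
    }

    int roundtrips() const { return roundtrips_; }

private:
    Executor exec_;
    std::vector<std::string> pending_;
    std::vector<std::string> results_;
    int roundtrips_ = 0;
};
```

Deferring two queries and then reading both results costs one roundtrip instead of two. The side effect mentioned above is visible here too: the caller has to structure code around handles rather than getting results immediately.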

And I understand it's quite difficult to make a test that will be accepted by all of us.

If it is too complex, people will say "it's just a particular case", and vendors will try to optimize their frameworks just for the scenario tested there (all of us know what graphics card vendors do to lead in complex benchmarks, i.e. they fake the results there in every possible way).

If it is too crude... Well, you see what's happening right now. Although the operations really are the simplest of the simple, and anyone can check this, I still have to explain why it isn't specially designed for us.

@Alex

"We explicitly declared this almost everywhere."

I look at the main page and the summary results page and see this mentioned nowhere. Again, I'm not suggesting impropriety, but this has very obvious appearances of impropriety, so much so that I wouldn't blame readers for completely distrusting the results.

I think its awesome to have a community based benchmark suite for the simple reason that it could improve the science of benchmarking, and may lead to benchmarks that are actually useful in a real-world context. However, I would expect this to be run and maintained by a completely impartial 3rd party, not by someone with such a vested interest in the outcome of the benchmarks!

To go down the "Bouma" road on this, I would suggest that the benchmarks themselves are not what is important, but rather the science that backs up the benchmarks. This thread seemed to have a promising start insofar as a discussion of what makes a "useful" benchmark was introduced. Unfortunately, because of the whole appearance of impropriety issue, things turned to venom rather quickly, which ended any useful discussion. A site dedicated to discussing what a useful ORM benchmark is would be absolutely wonderful. Even some benchmark implementations would be nice, which you have obviously put quite a bit of effort into already.

Errr... neither of those is fear, uncertainty or doubt. This conversation has unfortunately degraded to uselessness, though, to be fair to both Oren and Frans, with the attitude you came in here with I'm not sure much else could have been hoped for.

I suspect some of that has been miscommunication, but much of it has been caused by your aggressive attitude towards them and their work. A fact which can be directly traced back to you creating a site literally called "ORMBattle". I think it is pretty clear someone was looking for a fight.

One of the reasons benchmarks aren't that useful is that typically performance is only important if your software isn't meeting a necessary SLA/requirement. Thousands of people use NH and LLBL and they clearly meet this need for them, largely because they use them correctly and for the types of real-world scenarios that matter.

Even if meaningful benchmarks exist, they probably won't matter much to customers. They will be concerned with other issues, like the framework's flexibility and extensibility, or its community and support. You need to win on building a community, depth of knowledge, and proven reliability, as Frans, Oren and others do. You should want to be seen as a go-to for insight into ORM issues. Issues in ORM are often subtle, tricky and complex as much as they can be simple. Proving you can be a bit faster is just such a small part of it.

You will not win customers by taking an aggressive approach like this. Trying to prove your product "beats" out others will only label you as biased and misleading and if that is FUD so be it, you brought it on yourself.

Even if, as you say, your benchmarks are not misleading, they will still be interpreted as such by most of the communities who now feel as if you are attacking. Remember developers care more about community, knowledge and support than they do about benchmarks which can say one thing on one day and something totally different on another.

@Alex

If you are serious, you should open that site to public comments, as this site and others are open.

Allow users to leave their opinions about your tests, about the products they are using and about your product, and, obviously, keep each opinion public without any kind of moderation.

Note: public means without a login on your site.

Your site has only your words, as "the owner of the truth".

+1 for never, ever, ever using this product, and recommending switching to something else if any of my customers are stupid enough to be already using it.

Way to go Alex, in addition to making your company look shady from a marketing perspective, you have made your product itself look bad by making a fool of yourself arguing with two people (Oren and Frans) who are much, much, much, much, much smarter than you are.

"it's quite difficult to make a test that will be accepted by all of us.

If it is too complex, people will say "it's just a particular case", and vendors will try to optimize their frameworks just for the scenario tested there (all of us know what graphics card vendors do to lead in complex benchmarks, i.e. they fake the results there in every possible way).

If it is too crude... Well, you see what's happening right now. Although the operations really are the simplest of the simple, and anyone can check this, I still have to explain why it isn't specially designed for us."

You've said it yourself. A benchmark like this is useless because it's either too complex to judge or too general to have any value. In engineering there is always a design trade-off. Each vendor chooses to optimize their product differently for different scenarios.

Let's take the auto business, for example. Showing that one car can do 200 mph while another can only do 110 mph is meaningless to the customer. What matters more is how well each company supports its product in the long run. How much will an oil change cost me? How reliable is the car? In other words, critical stuff that any benchmark will fail to show.

Unless, that is, you are so confident that you have achieved an absolute breakthrough and your product will beat all the others hands down. If that is the case, why don't you put some money down in case people like Frans and Oren can prove that you are wrong? Since I am sure they don't want to work for free :)))

If in the end, with the necessary benchmarks, all products seem to be comparable to each other, then what does that mean to me and the community? A big duh :) Tell us something we didn't know.

@Alex:

Frans said:

"When LLBLGen Pro v3 comes out with solid model first/ schema management etc. support for NHibernate, EF, L2S, LLBLGen Pro RT, Euss, genom-e and likely some others too, I will not spend a single second writing support code for your framework."

Pwned.

We'll do this. Frankly speaking, initially there were comments. But we found that the two Joomla! modules we used for this did more harm than good. Basically, there were serious issues with their appearance that we couldn't accept.

But they'll be back soon. For now there are only public forums.

I won't even comment this ;)

Do you see they are comparable now? I don't see this. I see a huge imbalance there, which only proves that many of them were not profiled at all.

Did you know:

NH is 25...30 times slower on materialization than EF? So reading a large sequence of objects with it will take 30 times longer.

NH runs a simple query fetching 1 instance 100+ times slower when ~10K instances are already fetched into the Session? No one else exhibits similar behavior.

Be honest, you didn't. Yep, these are ugly truths. If you'd like to hide them, the simplest way is to say "there is nothing new here, don't pay attention to this". Just depreciate it.

And I'm not speaking about LINQ tests.

I suspect they'll continue doing this anyway; there is no need for bets. Moreover, you didn't say what exactly you mean by "you're wrong". Please state something specific that will be easy to prove, not everything ;)

I won't discuss the 600+ page manual; I believe it's good, and actually I don't fully understand why you're trying to mix it in here. ORMBattle is about performance and LINQ. You're writing about your manual and marketing results, exactly what ORMBattle isn't about.

In fact, you're saying "there is evidence this is a HQ product". Have I ever said it isn't? Yes, it is, but it isn't the best on our test suite. Actually, if your product is so good, in your place I'd simply accept this, and explain why others are better and what the advantages of the architecture you have developed are.

Obviously, it isn't. Did I say something bad about your product here? I said you were wrong and showed a few examples of this. Why? You're (as well as Ayende) trying to depreciate my own work by all possible means here; moreover, people believe you, and you're relying on this, using the tone "believe me, that's true". What did you expect?

Certainly, I don't dislike you personally. It's all about the opinion here.

Btw, it's even a bit funny that you answer me in such a tone. Actually, I didn't expect this. I like to argue; it's a kind of sport for me. And I say "I was wrong" with ease when I feel this. But currently I feel completely differently, and that's only because of your arguments.

Anyway, I don't like the idea of continuing this fight here. I'll provide links to particular test discussions here tomorrow, as well as a place to discuss ideas for any other tests. I think this will be much more productive.

Saying "everything is wrong" is not an argument.

What's "real life" is actually very difficult to define.

We'll enumerate the tests (and name the cases) that fail for NH. We didn't do this for other products only because it is depreciative. But since NH is fully open source, I hope it is OK to publish this info. So anyone will be able to judge for himself whether this is "real life" or not. Currently I can only assure you that lots of tests there are pretty simple.

That's why we contacted their vendors or experts. But I doubt we understood them so wrong.

I just mentioned that we're ready to remove DO4 from the results there entirely. If this solves the problem with the trustworthiness of the results, it's definitely OK. I believe such a test simply must exist.

On the contrary, what you see is what a particular vendor shows you. So do you really think this is good? There are TPC-C and many other tests (btw, frequently testing very similar operations) for databases, but no credible tests for the next layer. And you, as a customer, are taking the position of your favorite vendor. You're so impressed that you protect an idea that is attractive only to a few vendors, not to customers.

Ok, forget about DO4 positions there. Let's think we already removed it. Do you still think such a benchmark is useless?

Btw, it's interesting to know what kind of framework would win in our performance tests, yes? It must be:

POCO-based. Its RAM overhead must be close to zero. This is needed to win the materialization test.

As simple as possible, but complex enough to support reference fields and the simplest collections. Again, that's about the materialization test.

It must communicate with the DB very efficiently. No chattiness at all. That's about the CUD test.

Provide very good LINQ support.

Be highly optimized. In short, if you have never profiled your framework, you have almost zero chance of being even #2...#3 there. We know the leader of each test seems to be highly optimized, at least for that particular scenario.

Looks like all this suits NH well, yes? But it isn't the winner there. EF wins the materialization test, because its materialization is written pretty well and it uses POCO. OpenAccess wins the removal test because they optimized it almost ideally. And the product I can't name here either wins or is #2...#3 on the other tests; I hope you understand this couldn't be the result of some particular optimization for these tests, or of "just specific tests". Otherwise it would win everything.

It's mentioned on the About page: http://ormbattle.net/index.php/about.html

I fully agree with you here.

Btw, we tried to find some standard benchmarks for ORM tools, and actually found nothing credible. E.g. I remember one testing the paging implementation, but they didn't even check the query plans there. Obviously, if they're too different (= one is just completely wrong), there is no reason to test paging at all.

So if there is a test for paging (Take/Skip), we'll definitely take this into account. And there must be a separate test for paging without Take/Skip; that's frequently used as well.

Anyway, we'll try to make more credible out of this; I believe this is more important than what we could get for DO out of this. Obviously, we must remove DO from there for some period (3...6 months?), and hand full control over the test suite code to the community. We'll think about how to achieve this.

Sorry, I mistyped: "Anyway, we'll try to make a more credible site out of this".

"I suspect they'll continue doing this anyway; there is no need for bets. Moreover, you didn't say what exactly you mean by "you're wrong". Please state something specific that will be easy to prove, not everything ;)"

Proving you wrong for nothing means they are being dragged into your little game, which is a waste of time.

"Do you see they are comparable now? I don't see this. I see a huge imbalance there, which only proves that many of them were not profiled at all."

Currently the test is tweaked toward your framework, whether intentionally or not. Hence the imbalanced results. Like I said, unless there is a breakthrough in your framework, with the necessary optimization for each framework the end results of the benchmark will be similar. So if you are confident, why don't you put money on the claim that, after the necessary tweaking, your product will still beat the others hands down? If not, I would rather not see the community's time being wasted.

I haven't read the comments here, but here's what I did...

read this article's topic

clicked the ormbattle link

didn't see any version numbers for the competing ORMs (e.g. SubSonic 3 already has a LINQ provider)

saw the results: one ORM (unknown to me until that day; I knew all the others) stood out completely as the big winner

clicked "about us"

read:

We are experts in ORM tools for .NET, relational databases and related technologies. Moreover, our company has a product participating there (DataObjects.Net).

laughed, and closed the browser

Aren't comparisons like these useless? I mean, whatever ORM you use, it can be tweaked and fine-tuned, with the end result that the time spent in SQL Server is much larger than the time spent in the ORM itself, and the ORM time is negligible. I think this is true for every good ORM out there.

Every ORM can reduce roundtrips (I love NH futures), and has options for optimizing the SQL code being generated.

People are better off looking at the features, ease of use, support and price of the ORM. Don't worry about performance. After a bit of configuring and optimizing your ORM of choice, you can get almost the same SQL output, and performance, as all the other ORMs.

So the purpose of this was just having such a benchmark available? It's pure altruism regarding ORM? I think that's really noble of you.

Just so it's crystal clear: you would have created this separate website and posted the results of all these tests if your product had been the clear loser across the board?

That's very, very believable! I'll take 100 copies of your software! I can't wait to see the tripling of performance of my code!!! I'm using lots of exclamation points so it's clear that I'm being 100% sincere!!!!!

@Alex

I can explain why MINIMIZING database load is GOOD for an ORM test rather than BAD...

In fact, since we measure the efficiency of the intermediate layer, we must make all the layers behind it operate as fast as possible, to measure just the efficiency of that intermediate layer.

Well, I'm having fun here (very interesting discussion), but you're a bit wrong here:

Two different ORMs will issue different SQL statements to a database (trying to solve the same real-life problem): say ORM-A will issue 3 statements whereas ORM-B can do it in one statement, because its LINQ provider (join support, etc.) or CRUD handling is more advanced (say it will only issue an UPDATE statement for the particular changed columns, not for ALL of them, as Lightspeed does, for example (amazing ORM btw, v3 will improve this))...

Therefore the DB engine (say SQL Server 2008) will spend, say, 85ms (big numbers just for the record) in the first case and 15ms in the second case... and multiply this.

That is what counts in the real world.

(So the formatted SQL, and the possibility to alter it, is what matters; thanks to NHProfiler you know which side I'm on. So you should measure the efficiency of the DB engine as well, because it might be stressed by some dumb ORM these days ;-)
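The "only changed columns" UPDATE mentioned above can be sketched in a few lines of C++, assuming the ORM keeps a snapshot of each row as it was loaded. This is not Lightspeed's or any other product's implementation: the `Row` alias, `dynamicUpdate` and the table `Item` are hypothetical, and values are pre-rendered SQL literals for brevity, whereas a real ORM would bind parameters.

```cpp
#include <cassert>
#include <map>
#include <string>

using Row = std::map<std::string, std::string>;  // column name -> SQL literal

// Builds an UPDATE that sets only the columns whose current value differs
// from the load-time snapshot (a column missing from the snapshot counts as
// changed). Returns an empty string when nothing changed, so no statement is sent.
std::string dynamicUpdate(const std::string& table, long id,
                          const Row& original, const Row& current) {
    std::string sets;
    for (const auto& kv : current) {
        auto it = original.find(kv.first);
        if (it != original.end() && it->second == kv.second) continue;  // unchanged
        if (!sets.empty()) sets += ", ";
        sets += kv.first + " = " + kv.second;
    }
    if (sets.empty()) return "";
    return "UPDATE " + table + " SET " + sets + " WHERE Id = " + std::to_string(id);
}
```

If only `Price` changed from 10 to 12, this produces `UPDATE Item SET Price = 12 WHERE Id = 7` rather than rewriting every column, and an untouched entity produces no statement at all.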

And, as I replied to you when you asked, the main problem isn't with the code (there are severe problems there, but that isn't the issue).

The problem is that the premise of the benchmark is flawed. You are trying to test _bulk data manipulation_, a scenario which is just not something that NHibernate (or most OR/Ms) is designed to solve.

As such, there really isn't a point in trying to fix code, it is the idea of the test itself that you should fix.

If you want to create a more realistic benchmark, you should try one of the suggestions above. Write an application and test it using things like a web load test.

In other words, your benchmark does something like this:

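C++

```cpp
int arr[100];
for (int i = 0; i < 100; i++)
    arr[5] = i; // contrived: writes the same element 100 times
```

C#

```csharp
int[] arr = new int[100];
for (int i = 0; i < 100; i++)
    arr[5] = i; // contrived: writes the same element 100 times
```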

And then pointing out the perf difference caused by array bounds checking in a contrived scenario.

There isn't a point in trying to fix anything, the scenario that you are using is broken.

That is just nonsense. Trying to minimize database load means that you are removing real world concerns that completely change the behavior of your applications.

It is like trying to show fuel consumption in city driving for a Formula 1 car. The test itself is meaningless for the designed goals.

Just to make the example clearer: no one cares about fuel consumption at 20 KPH; all they care about is fuel consumption at 200 KPH. And trading off one for the other is a good design goal.

Thank you.

No, it isn't. Your test is loading 20,000 objects per loop iteration; were you aware of that?

Didn't I cover that already?

NH uses the UoW model, which means that we manage all loaded objects internally.

That is expected and acceptable, for the simple reason that NH ISession isn't supposed to be used for bulk data manipulation, that is why we have IStatelessSession.
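As a rough C++ analogy of the two session styles (not NHibernate's API: `ISession` and `IStatelessSession` are .NET interfaces, and `Entity`, `StatefulSession` and `StatelessSession` below are hypothetical), the difference is whether every loaded entity is retained in an identity map:

```cpp
#include <cassert>
#include <cstddef>
#include <unordered_map>

struct Entity {  // hypothetical entity
    long id;
    int value;
};

// Unit-of-work style: every loaded entity stays in an identity map, so the
// same id always yields the same object and it can be dirty-checked at flush.
class StatefulSession {
public:
    Entity& load(long id) {
        auto it = identityMap_.find(id);
        if (it == identityMap_.end())
            it = identityMap_.emplace(id, Entity{id, 0}).first;  // pretend DB read
        return it->second;
    }
    std::size_t tracked() const { return identityMap_.size(); }

private:
    std::unordered_map<long, Entity> identityMap_;
};

// Stateless style: each load returns a detached copy and nothing is retained,
// so memory use does not grow with the number of rows read.
class StatelessSession {
public:
    Entity load(long id) const { return Entity{id, 0}; }  // pretend DB read
};
```

Loading the 20,000 objects mentioned above through the stateful version leaves 20,000 entries tracked, which is the price of identity and change tracking; the stateless version keeps nothing, which is why it suits bulk work.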

Oh, and as an aside, you might want to track down what SQL is being executed for each test; the results should be quite educational.

And that is why your method is flawed. You mention nothing at all about things that are important for real world concerns like lazy loading or eager loading options, caching semantics, transactional behavior, dirty checking, automatic persistence, and more.

Those aren't just bullet points, they truly change the way you write software.

Wrong. There are results for 1K (first page), 5K and 30K (in .XLSX). NH is at least 25 times slower.

Do you know that almost any other framework supports UoW and "manages loaded objects internally" as well?

Yes, normally there is a state associated with each loaded entity. But:

1) It is stored in a dictionary-like object (or, better, an object internally relying on a hash table). Thus state lookup time is constant, i.e. it does not depend on the count of already loaded objects.

2) I don't understand what must happen there to make each query 100 times slower on a large set of instances. Is NH iterating over all the instances before each query (e.g. looking up whether any of them has changed)? Actually, it looks like exactly this happens.
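The constant-time state lookup in point 1 corresponds to an identity map built on a hash table. A minimal C++ sketch (the `IdentityMap` and `Entity` names and the fetch callback are hypothetical, not any particular ORM's API):

```cpp
#include <cassert>
#include <functional>
#include <memory>
#include <string>
#include <unordered_map>
#include <utility>

struct Entity {
    int id;
    std::string name;
};

// Hypothetical identity map: lookups go through a hash table keyed by id, so
// their average cost does not grow with the number of loaded entities.
class IdentityMap {
public:
    explicit IdentityMap(std::function<Entity(int)> fetch) : fetch_(std::move(fetch)) {}

    std::shared_ptr<Entity> get(int id) {
        auto it = loaded_.find(id);
        if (it != loaded_.end()) return it->second;  // already loaded: same instance
        auto entity = std::make_shared<Entity>(fetch_(id));  // first access: hit the database
        loaded_.emplace(id, entity);
        return entity;
    }

private:
    std::function<Entity(int)> fetch_;
    std::unordered_map<int, std::shared_ptr<Entity>> loaded_;
};
```

Lookup cost is only half the story, though: an operation that has to visit every tracked entity is still linear in their number, however fast a single lookup is.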

If you aren't sure there is a REAL problem, I'll show you a sequence for NH LINQ queries separately:

| N (number of performed queries) | Performance (op/sec) |
|---:|---:|
| 100 | 925 |
| 1000 | 646 |
| 5000 | 301 |
| 10000 | 180 |
| 30000 | 68 |

So performance has degraded by a factor of about 14 (925 / 68 ≈ 13.6). You still don't see the problem? No other framework exhibits the same behavior.
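The slowdown implied by the figures above can be computed directly. A C++ sketch (`kNhLinqResults` and `slowdownVersusFirst` are hypothetical names; the numbers are the ones quoted in the comment):

```cpp
#include <cassert>
#include <utility>
#include <vector>

// (queries performed, operations per second), as reported in the comment.
const std::vector<std::pair<int, double>> kNhLinqResults = {
    {100, 925.0}, {1000, 646.0}, {5000, 301.0}, {10000, 180.0}, {30000, 68.0}};

// How many times slower each run is than the first (smallest) one.
std::vector<double> slowdownVersusFirst(const std::vector<std::pair<int, double>>& results) {
    std::vector<double> factors;
    for (const auto& run : results)
        factors.push_back(results.front().second / run.second);
    return factors;
}
```

Over the whole range the factor is about 13.6. Expressed as average time per query (1 / throughput), the figures go from about 1.1 ms at N = 100 to about 14.7 ms at N = 30,000 and grow almost linearly with N, which is the pattern one would expect if each query does extra work proportional to the number of objects already loaded.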

Yes, I don't mention them. But:

1) DO supports lazy & eager loading for all fields by default. The entity used in this test could be an entity with a lazy-loaded (LL) field, with just one attribute added. Do you know that we maintain a "layered" field state? I.e., we know what was loaded initially and what was changed. How does its presence affect the results? Almost not at all.

Anyway, I believe there should be a separate test for this (LL); moreover, LL should not lead to much more costly operations while it's off. So in general, I think the results should be about the same regardless of LL support in a particular framework. If this isn't true, there is likely a bug.

2) Caching was intentionally turned off for every framework, and we wrote about this. There should be a separate test for it. If it weren't off for some framework, we'd be comparing teleportation with regular transportation (i.e. two completely different technologies).

3) Transactional behavior, dirty checking: the same. E.g. DO relates any acquired entity state to a particular transaction, and can automatically reload it on demand if the current transaction has changed. There are transactional methods: they ensure that any operation you run on entities or persistent services runs inside a transaction, if necessary.

So what? None of this makes it operate slower than the others.

4) Automatic persistence: oh, that exists in many of the tested frameworks. The presence of this feature should not significantly affect the results.

Yes, I agree they must be implemented, but this test does not rely on these features. Moreover, I'm sure the presence of these features should not significantly affect the test results. And the test actually shows this: some frameworks implement all of them (I'm 100% sure DO does), and their test results aren't bad at all.

This shows they can be implemented in a way that does not lead to serious performance degradation.

Any other "that's why your method is flawed"?

Sorry, corrections:

"How its presence affects on results?"

"Moreover, LL must not lead to much more costly operations while it's off."

I'm ready to bet on this: I'm 100% sure some of the tested frameworks can beat DO on the CUD, query & materialization tests after the necessary tweaking (I'm not talking about the LINQ tests: that's obviously possible there too, but I'd likely be waiting too long ;), since it's complex as well).

So, who wants to try? I can personally explain what must be done to achieve this.

The tested version of each framework is mentioned on its own page.

See e.g. http://ormbattle.net/index.php/subsonic.html

What a surprise: DataObjects is the leader. Seems like another ad to me.

Of course it is an ad. Every record of every product is an ad :)

Well, I know what I won't be recommending now: DataObjects.

@Alex, Instead of rehashing your own opinion here, you also could have invested 2 minutes to remove us from your website already. It's still up there.

The main problem with the way you look at things, Alex, is that you deliberately want to run these tight loops as 'tests' and use them to create other information like the CUD numbers.

I can prove to you that that route is not correct: because the updates are all done in bulk, you could also use bulk update statements. You ignore them because then you can't run the tight loops which show how well you optimized the batching pipeline. However, for the purpose of 'updating massive numbers of entities with the same expression' (which is what the tight loop implements, as you use 1 context for 30K updates!), the bulk update statements in some o/r mapper frameworks are much faster: 1 query and they're done. Extrapolating that to CUD numbers would therefore make them the clear 'winners'. Therefore what you use as 'CUD' numbers isn't going to fly.

You don't use bulk update statements (which are just another way of optimizing what you optimized yourself inside the pipeline!) because you find that a 'hack' as you told me. That's your opinion of course, but as you and you alone call the shots on that website, your opinion is the only one that counts. So you can manipulate the tests to get the results you want. And you do that.
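The bulk-update argument can be illustrated by comparing the statements each approach sends. A C++ sketch with hypothetical table and column names (`Simplest`, `Value`, `Id`); the SQL is built by string concatenation for illustration only, and real code would use parameters:

```cpp
#include <cassert>
#include <string>
#include <vector>

// One statement per entity, which is what flushing N tracked changes
// produces: batching reduces round trips, but N statements are still sent.
std::vector<std::string> perEntityUpdates(const std::vector<int>& ids, int newValue) {
    std::vector<std::string> sql;
    for (int id : ids)
        sql.push_back("UPDATE Simplest SET Value = " + std::to_string(newValue) +
                      " WHERE Id = " + std::to_string(id));
    return sql;
}

// One set-based statement applying the same expression to every row
// (assuming, as in the benchmark loop, that every row is updated).
std::vector<std::string> bulkUpdate(int newValue) {
    return {"UPDATE Simplest SET Value = " + std::to_string(newValue)};
}
```

For 30K rows the first form yields 30K statements and the second yields one, which is why comparing a tight per-entity loop against a bulk statement says little about a pipeline's CUD performance.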

Another signal that you compare apples with oranges is the 'Query' test. Judging by the code, you have absolutely no clue how our framework works. For starters, you fetch inside a transaction. But we don't use a central session object, Alex. That's a deliberate choice. Secondly, your code starts fetch queries on a LINQ metadata object in a loop. But that simply creates a new expression tree, executes it and returns the object. By contrast, the code for DO creates a single transaction on the already live Session and fetches all queries on that same object. This isn't the same thing, as you can now re-use cached objects, be it a query, an expression tree evaluation, anything.

Is it mentioned on your website that we have a very fast pipeline and therefore don't suffer from problems where queries have to be re-evaluated every time, you know, like in 'Real Life'? The website, ironically, mentions that you have set up the tests the way they are to make sure the overhead in the o/r mapper is maximized. However, by re-using session/context objects in a loop, you precisely don't do that, as you can re-use resources, query parsing results etc., which doesn't give a good picture. Flawed, one would say.
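The effect of re-using one context can be sketched with a hypothetical context that caches query translations. This is a C++ illustration, not any ORM's real API; `QueryContext`, `run` and `compileCount` are made-up names, and "translation" stands in for expression-tree parsing and SQL generation:

```cpp
#include <cassert>
#include <string>
#include <unordered_map>

// Hypothetical context that caches the translated form of each query text.
class QueryContext {
public:
    std::string run(const std::string& query) {
        auto it = translations_.find(query);
        if (it == translations_.end()) {
            ++compileCount_;  // the expensive step, paid once per context
            it = translations_.emplace(query, "SQL for: " + query).first;
        }
        return it->second;  // a real context would now execute the SQL
    }
    int compileCount() const { return compileCount_; }

private:
    std::unordered_map<std::string, std::string> translations_;
    int compileCount_ = 0;
};
```

Running the same query 100 times through one long-lived context translates it once; creating a fresh context per query translates it 100 times. A benchmark that mixes the two styles across frameworks is measuring different amounts of work.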

(part 2)

On to the LINQ queries. Your '100' number suggests a percentage. The numbers show that 60% of the LINQ queries thrown at our LINQ provider failed. However, does that mean that 60% of all the queries one would normally write would fail? Your website suggests this (as the LINQ tests are carefully created, am I right?), but this is utter nonsense. For starters, you deliberately exploit the lack of support for some features. For example, the LINQ queries never show a single FK-PK comparison. That might be because you don't support FK fields. Adding 25 FK-PK comparison queries would give you a 25% failure rate. We do support FK fields; in fact, our LINQ provider doesn't support entity instance comparisons, as we don't think it's something that will be used much (our LINQ provider has been out for more than a year now and we never had that request), since we have FK-PK comparisons, which are closer to what people would do in SQL anyway.

Yet, you keep your set of entity comparison queries, which makes sure a lot of the queries fail. You also have queries with multiple Skip() calls. Skipping is something one would rarely use in real life, as one would normally use paging. Did you want to test paging? If so, why didn't you write a couple of them? Oh, Skip(10).Take(50) isn't paging as it is used in real life.
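For contrast with chained Skip() calls, this is paging in its usual form: one Skip/Take pair computed from a page number. A C++ sketch over an in-memory vector, assuming 0-based page indexes (the function name `page` is hypothetical):

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <vector>

// Returns page `pageIndex` (0-based) with up to `pageSize` items: the same
// rows as Skip(pageIndex * pageSize).Take(pageSize) over an ordered sequence.
template <typename T>
std::vector<T> page(const std::vector<T>& items, std::size_t pageIndex, std::size_t pageSize) {
    const std::size_t skip = std::min(items.size(), pageIndex * pageSize);
    const std::size_t take = std::min(pageSize, items.size() - skip);
    auto first = items.begin() + static_cast<std::ptrdiff_t>(skip);
    return std::vector<T>(first, first + static_cast<std::ptrdiff_t>(take));
}
```

With 25 items and a page size of 10, page 1 holds items 10 to 19, page 2 holds the last 5, and page 3 is empty.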

I can go on and on, but enough time wasted. As a final remark, I wanted to add that what I wrote about the personal attack was to make you feel how you ruined my weekend. That you don't seem to understand a single bit of it, that's clear to me. C'est la vie.

You still have something to remove, btw.

We're working on this now. We'll make all the test fixes suggested by others and remove LLBLGen in today's update.

I'm writing an FAQ post about this. Briefly:

- We'll add tests for single-entity updates.
- Tests for multiple-entity updates will remain.

Obviously, "reality" is somewhere in the middle: some transactions run just one update (a rare case, by the way), others run many of them. So in general, the true CUD average is approximately

$$\text{CUD average} \approx \text{SingleUpdateTime} \times \text{SingleUpdateProbability} + \text{MultipleUpdateTime} \times \text{MultipleUpdateProbability},$$

e.g. $20\% \times \text{SingleUpdateTime} + 80\% \times \text{MultipleUpdateTime}$.

Obviously, this is a number between SingleUpdateTime and MultipleUpdateTime. Moreover, I'm 100% sure SingleUpdateTime will be approximately the same for all ORM tools, ~ 5..10K op/sec. So this number won't dramatically change real life results (CUD average).
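The weighted average described above can be written as a small C++ helper (the name `expectedCudTime` is hypothetical). It is a sketch that assumes the inputs are per-operation times and that the single-update and multiple-update fractions sum to 1:

```cpp
#include <cassert>

// Expected time per update when a fraction `pSingle` of all updates runs in
// single-update transactions and the rest in multi-update transactions.
// Inputs are times per operation (e.g. ms), not op/sec rates.
double expectedCudTime(double pSingle, double singleUpdateTime, double multipleUpdateTime) {
    return pSingle * singleUpdateTime + (1.0 - pSingle) * multipleUpdateTime;
}
```

With the 20%/80% split, `expectedCudTime(0.2, s, m)` always lies between `m` and `s`, which is the point being made. If the inputs are throughputs in op/sec, as in the figures quoted, convert them to times (1 / rate) first; averaging the rates directly overstates the combined throughput.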

It reminds me of the day some people claimed that using a DataSet was better than using an ORM because some "benchmark" showed it. In fact, they even claimed that it was faster than a DataReader...

And thus, one should use a DataSet over a DataReader or ORM tools. It was lovely and dumb.

I have to say Alex, it's clear that you're in love with your software...and as we all know, love is blind.

Stop wasting our time with your drivel, clean up your site, put a GIANT disclaimer on the main page saying you have a conflict of interest (i.e. you built D.O. and the tests in its favor) and let these two gentlemen (Frans/Ayende) get back to work.

Note that the authors of this web site don't claim that you must use the ORM with the best performance. It is just a source of performance-related information that can be useful in some cases.

OK, the tests are not perfect, but they are not completely wrong. One could suggest another test. What would good tests of ORM performance look like?

To be fixed. We support this, but in many tests it was probably written as `order.Customer == customer`, which you don't support.

You are using the following code:

```csharp
while (i < count)
    foreach (var o in session.Linq<Simplest>()) { /* ... */ }
```

There is no paging, and NHibernate doesn't do lazy materialization. You are loading every single row (and there are 20,000 of them) for each while loop iteration.

The code makes me think that you believe it will do lazy materialization, though.
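The materialization point can be illustrated with a C++ sketch (hypothetical `queryEager`/`queryLazy` functions, not NHibernate's API). Under the assumption stated above, that the query is fully materialized before iteration starts, a loop that only needs a few rows still pays for all of them:

```cpp
#include <cassert>
#include <functional>
#include <vector>

// Hypothetical row type for the sketch.
struct Row {
    int id;
};

using Consumer = std::function<bool(const Row&)>;  // return false to stop reading

// Eager: every row is built before the consumer sees the first one, so
// stopping early saves nothing. `materialized` counts rows built.
void queryEager(int rowCount, int& materialized, const Consumer& consume) {
    std::vector<Row> all;
    for (int i = 0; i < rowCount; ++i) {
        all.push_back(Row{i});
        ++materialized;
    }
    for (const Row& r : all)
        if (!consume(r)) return;
}

// Lazy: rows are built one at a time and reading stops when the consumer does.
void queryLazy(int rowCount, int& materialized, const Consumer& consume) {
    for (int i = 0; i < rowCount; ++i) {
        const Row r{i};
        ++materialized;
        if (!consume(r)) return;
    }
}
```

A consumer that stops after 10 rows causes 20,000 rows to be built in the eager case and 10 in the lazy case. Wrapped in an outer `while (i < count)` loop, the eager version builds all 20,000 rows again on every iteration.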

Alex,

I don't believe those numbers, for several reasons.

a) Your code and your statements about the code show a big disconnect.

b) It is _not important_: NH isn't trying to optimize loading of a large set of queries. It doesn't matter, because any optimization that we might try would be swallowed by the DB time.

c) NH ISession can most certainly handle large number of object in a query, but even then, it is not something that you are supposed to do.

d) State lookup is O(1), but NHibernate manages query persistence transparently. What this means is that the following code will work with NHibernate:

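```csharp
using (var tx = s.BeginTransaction())
{
    var foo = (Foo)s.Get(typeof(Foo), 1);
    foo.Name = "ayende";  // changed in memory only, not yet written to the DB

    // Auto-flush: the pending change is written before the query runs, so foo is found.
    var list = s.CreateQuery("from Foo f where f.Name = 'ayende'").List();
    Assert.Contains(list, foo);

    tx.Commit();
}
```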

When you perform a query, NHibernate will check whether it needs to synchronize the state of loaded objects with the database (automatic flush), based on a set of rules that aren't really important right now.

Your test happens to force NHibernate to check every object loaded into memory, which is an O(N) operation by definition.
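A simplified C++ model of that check (hypothetical `Session`, `load` and `query` names; NHibernate's actual rules for when the check runs are more involved). Each query first compares every tracked entity with the snapshot taken when it was loaded, so that check alone is linear in the number of loaded objects:

```cpp
#include <cassert>
#include <cstddef>
#include <deque>
#include <string>

struct Tracked {
    int id;
    std::string name;      // current in-memory value
    std::string snapshot;  // value as loaded from the database
};

class Session {
public:
    // std::deque keeps references to existing elements valid on push_back.
    Tracked& load(int id, const std::string& name) {
        entities_.push_back(Tracked{id, name, name});
        return entities_.back();
    }

    // Automatic flush before a query: visit every tracked entity (O(N)) and
    // "write" the ones whose state differs from their snapshot.
    std::size_t query() {
        std::size_t flushed = 0;
        for (Tracked& e : entities_) {
            ++comparisons_;
            if (e.name != e.snapshot) {
                e.snapshot = e.name;  // stands in for issuing an UPDATE
                ++flushed;
            }
        }
        return flushed;  // a real session would now execute the query itself
    }

    std::size_t comparisons() const { return comparisons_; }

private:
    std::deque<Tracked> entities_;
    std::size_t comparisons_ = 0;
};
```

Mirroring the NHibernate example above, the changed entity is written before the query runs; the price is that every query visits all N tracked objects, changed or not.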

Now you get it?

The perf implications don't matter; I mentioned those because you brought up selection criteria for OR/Ms that were nonsense.

so many words about "dirty marketing games"

so many words about "useless of this comparison"

so many words about "DO got anti-ad for their own"

looks funny...

Frans, cowgaR, GiorgioG, firefly, Fred, Ayende, and others... if the discussed site and its idea are useless, if this is silly, if this only leads to the depreciation of DO as a product, why do all of you spend so much time and so many words here? I would just have one minute of fun looking at ormbattle.net and then return to my work. If, like you've said, "it is not real life", then we have nothing to worry about, because we are in real life :)

But the hysteria I can see here makes me think that nothing hurts like the truth... Otherwise you would not spend so much time here.

That is extremely inaccurate. Care to name a single real-world scenario where you need to update/insert 20K records that isn't an import task?

Agreed. Ayende, everything you just wrote about materialization is a lie. Or you didn't study the test code well. One minute, writing a reply.

Alex,

Let me get this straight, are you telling me how NHibernate works?

I am willing to admit that I may not have the best knowledge about it, but I do believe that 6 years of working extensively with it does carry with them the capacity to understand how NHibernate operates.

So, about the materialization test for NH:

Look up the last method here: code.ormbattle.net/ (that's the full code, not just a piece of it).