Not all O(1) operations are considered equal

At some point in any performance optimization sprint, you are going to run into a super annoying problem: The dictionary.

The reasoning is quite simple. One of the most powerful optimization techniques is to use a cache, which is usually implemented as a dictionary. Today’s tale is about a dictionary, but surprisingly enough, not about a cache.

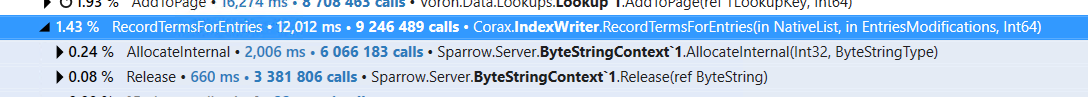

Let’s set up the background, I’m looking at optimizing a big indexing batch deep inside RavenDB, and here is my current focus:

You can see that the RecordTermsForEntries take 4% of the overall indexing time. That is… a lot, as you can imagine.

What is more interesting here is why. The simplified version of the code looks like this:

Basically, we are registering, for each entry, all the terms that belong to it. This is complicated by the fact that we are doing the process in stages:

- Create the entries

- Process the terms for the entries

- Write the terms to persistent storage (giving them the recorded term id)

- Update the entries to record the term ids that they belong to

The part of the code that we are looking at now is the last one, where we already wrote the terms to persistent storage and we need to update the entries. This is needed so when we read them, we’ll be able to find the relevant terms.

At any rate, you can see that this method cost is absolutely dominated by the dictionary call. In fact, we are actually using an optimized method here to avoid doing a TryGetValue() and then Add() in case the value is not already in the dictionary.

If we actually look at the metrics, this is actually kind of awesome. We are calling the dictionary almost 400 million times and it is able to do the work in under 200 nanoseconds per call.

That is pretty awesome, but that still means that we have over 2% of our total indexing time spent doing lookups. Can we do better?

In this case, absolutely. Here is how this works, instead of doing a dictionary lookup, we are going to store a list. And the entry will record the index of the item in the list. Here is what this looks like:

There isn’t much to this process, I admit. I was lucky that in this case, we were able to reorder things in such a way that skipping the dictionary lookup is a viable method.

In other cases, we would need to record the index at the creation of the entry (effectively reserving the position) and then use that later.

And the result is…

That is pretty good, even if I say so myself. The cost went down from 3.6 microseconds per call to 1.3 microseconds. That is almost 3 folds improvement.

Comments

What was the collision rate on the dictionary?

What is the GetHashCode() implementation for RecordedTerm?

"Let's se tup" should be "let's setup"?

Paulo,

The cost of the

GetHashCode/Equalsis not high. Roughly 0.4%, so significant, but not compared to the overall cost of the dictionary lookup.Collision rate should be roughly similar to strings, so not high at all.

Indy,

Actually, should be "set up", fixed, thanks

A bad hash code function might turn O(1) into O(N). that's why I was asking.

Paulo,

Yes, I"m aware, that wasn't the case in this scenario.

Nice post Oren, I recognize a pattern we're using as much as we can, better use a list index (or better an array index) than a dictionary lookup. This can be applied especially when doing some manual string parsing like instead of have something like

switch(char c) { case 'a': actionA(); case 'b': actionB();)we can use something likeAction[] actionsand callactions[c]();instead. Or even better if the switch/cases are dense enough the C# compiler will emit a O(1) jumptable through theswitchIL instruction. Roslyn condition to emit a jump table here: https://github.com/dotnet/roslyn/blob/9fb1877ba1366b4a35de96639a8276538f9f4090/src/Compilers/Core/Portable/CodeGen/SwitchIntegralJumpTableEmitter.cs#L69-L96Could you please upload the complete project to GitHub? I can't see how to make this work without more context. Some things can throw exceptions just by looking at them

recordedTermContainerId ref CollectionsMarshal.AsSpan(_termsPerEntryId)[_termsPerEntryId.TermContainerId]

...it would be awsome if you could provide the full context so we could test it. So far, the tests I've run gave me 0μs for the dictionary and 641μs for the list, so could probably be coding something wrong.

Thanks in advance.

Patrick,

Thanks for the code reference, that is awesome. And yes, that is a really powerful technique.

Frank,

The full project is on GitHub, it's just that it is 1M LOC or thereabout.

Check this method, which is what I actually talked about in the post: https://github.com/ravendb/ravendb/blob/9484ccc2215a85eca718257703106888b85cbcdb/src/Corax/IndexWriter.cs#L2041

Comment preview